Military AI isn’t trustworthy until warfighters use it under pressure. Here’s how operator-centered trust metrics and acquisition gates speed adoption in defense.

Trustworthy Military AI Starts With the Warfighter

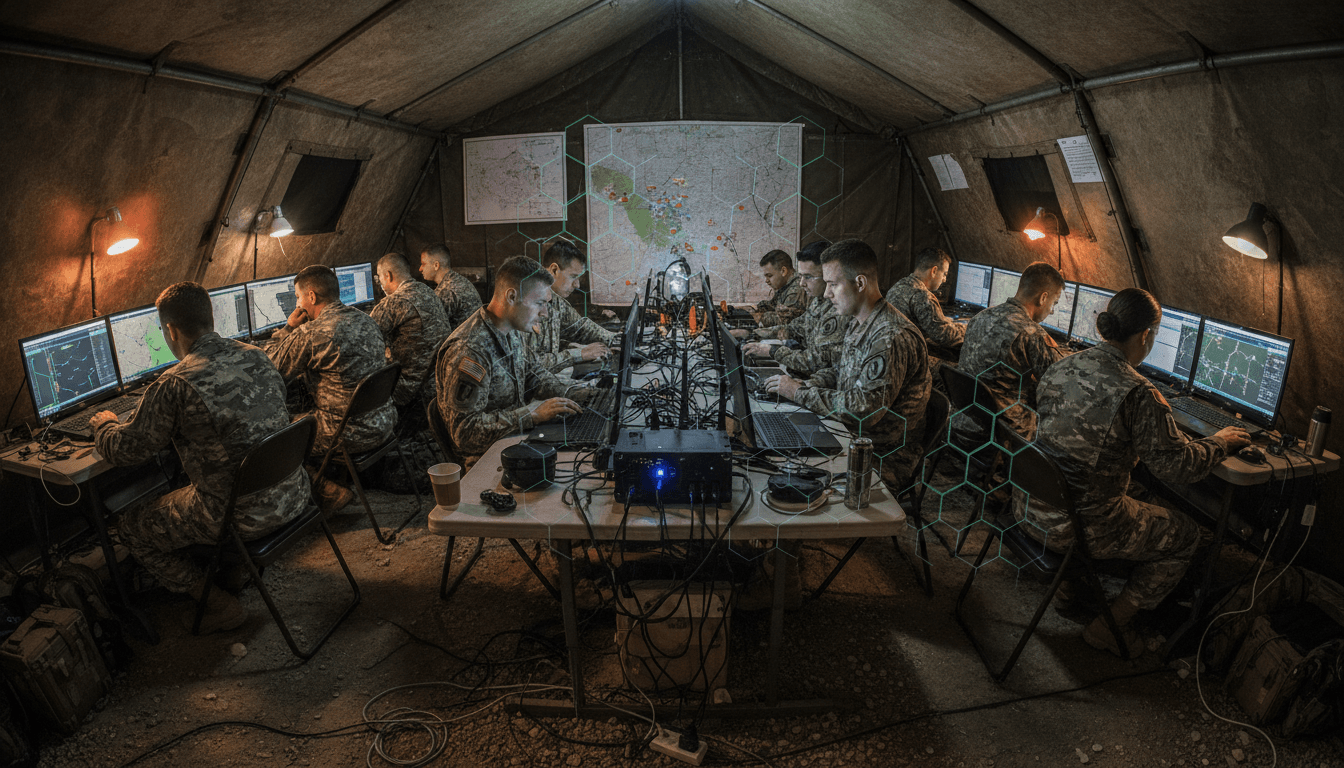

A combat unit doesn’t care that an AI model passed 47 lab test cases. They care whether it works when the SATCOM drops, the data’s stale, the interface is clunky, and the clock is brutal. That’s the gap at the center of military AI adoption: the U.S. Department of Defense can produce “responsible AI” paperwork for years and still fail the only test that matters—will warfighters actually use it under pressure?

Most defense AI programs don’t fail because the technology is impossible. They fail because trust is defined by the wrong people, at the wrong time, using the wrong yardsticks. Engineers optimize for technical assurance. Lawyers optimize for accountability. Program offices optimize for milestone survival. Warfighters optimize for mission success.

For the “AI in Defense & National Security” series, this post takes a firm stance: operator-defined trust has to be the decisive standard for fielding AI-enabled systems in national security. Technical and legal trust still matter—but they should enable adoption, not substitute for it.

The real trust problem: three definitions that don’t match

“Trustworthy AI” isn’t one thing inside the Pentagon. It’s three competing definitions that drive three different acquisition futures. If you don’t name the tension, you can’t fix it.

1) Engineer-defined trust: secure, validated… and often unusable

When engineers set the standard, trust tends to mean:

- resilience to adversarial manipulation (data poisoning, prompt injection, model inversion)

- verification and validation pipelines

- controlled testing and accreditation

- hardened MLOps infrastructure

That work is necessary, especially for AI in contested environments where adversaries actively try to corrupt sensors, degrade inputs, or spoof signatures.

But here’s the problem: technical assurance is an infinite game. You can always demand another test, another patch, another set of controls. The acquisition system already rewards this behavior because it’s easy to document and defend in reviews. The result is predictable: AI systems that look pristine in a lab and feel brittle in the field.

2) Operator-defined trust: adoption, diffusion, and performance under stress

When warfighters define trust, it tends to mean:

- it performs in degraded and low-bandwidth conditions

- it reduces workload instead of adding it

- it fits existing tactics and battle rhythms

- it fails gracefully

This definition is harder to measure in spreadsheets, but it’s the only one that survives contact with reality.

Operator trust is also the core of AI adoption in defense systems. A model can be “accurate” and still be abandoned if it:

- takes too long to generate outputs

- requires constant data grooming

- forces the unit to change workflows in the middle of a deployment

- produces confident answers without clear uncertainty signals

The bottom line: trust is behavior. If the unit doesn’t rely on it during real operations, the system isn’t trustworthy.

3) Compliance-defined trust: explainability and blame assignment

When lawyers, policy staff, or compliance offices define trust, the emphasis often shifts to:

- explainability requirements

- human-in-the-loop process controls

- documentation for auditability

- interoperability checklists

These aims aren’t wrong. But the acquisition incentive can drift toward a darker outcome: building systems that are easier to litigate than to fight with.

If “trust” becomes primarily about who can be held accountable, AI diffusion will stay slow—and adversaries willing to accept more operational risk will move faster.

Memorable rule: If your definition of trust doesn’t predict battlefield adoption, it’s not the primary definition—it’s paperwork.

Why defense acquisition keeps producing AI people won’t use

The defense acquisition system is structurally misaligned with operator trust. It rewards exquisite requirements, slow timelines, and risk avoidance—exactly the opposite of what AI in national security demands.

AI is not a one-and-done procurement. It’s closer to a living capability:

- models drift as conditions change

- data pipelines break

- interfaces need iteration

- updates can introduce new failure modes

Traditional programs are designed to freeze requirements early and then spend years delivering to that frozen target. That approach is how you end up with systems that “work on paper” but collapse when deployed.

The frustrating part is that the U.S. defense ecosystem has seen this movie before: overly complex command-and-control software, logistics systems that don’t survive theater realities, radios that pass compliance tests but fail in heat and dust. AI will repeat the pattern unless the department builds operator-centered trust gates into how it buys, tests, and fields AI-enabled systems.

What “operator-centered trustworthy AI” looks like in practice

Operator-centered trust can be measured. It’s not vibes. It just uses metrics that map to combat realities.

The four questions that should decide whether AI ships

If you’re building or buying military AI, these questions should be treated like a field-readiness checklist:

- Does it work in DDIL conditions (denied, disrupted, intermittent, and limited bandwidth)?

- Does it reduce cognitive load? Or does it add dashboards, toggles, and new failure points?

- Can a unit integrate it without contractor dependency? If every workflow change requires an engineering degree, adoption will stall.

- How does it fail? Graceful degradation beats catastrophic collapse—every time.

Those questions translate into testable requirements:

- offline and edge performance benchmarks

- time-to-decision measurements during exercises

- operator error rates and training time metrics

- fallback modes (manual workflows, last-known-good models, cached outputs)
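To make the fallback requirement concrete, here is a minimal Python sketch of a degradation chain. It is an illustration under stated assumptions, not a reference design: the tier names and the `run_with_fallback`, `live_model`, and `last_known_good` functions are hypothetical stand-ins for whatever a real program would field.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple


@dataclass
class Result:
    output: Optional[str]  # None means no automated tier produced an answer
    source: str            # which tier answered, so the operator can see it


def run_with_fallback(tiers: Sequence[Tuple[str, Callable[[], str]]]) -> Result:
    """Try each tier in order and return the first one that succeeds."""
    for name, tier in tiers:
        try:
            return Result(output=tier(), source=name)
        except Exception:
            continue  # this tier failed; degrade to the next one
    # Every automated tier failed: hand the task back to the manual workflow.
    return Result(output=None, source="manual")


# Hypothetical tiers for illustration only.
def live_model() -> str:
    raise ConnectionError("link down")  # simulate a dropped connection


def last_known_good() -> str:
    return "route B (on-device model)"


result = run_with_fallback([
    ("live", live_model),
    ("last_known_good", last_known_good),
])
print(result.source, "->", result.output)
```

The design choice worth noting is that the result always carries its `source`. Operators can only calibrate their reliance if they can tell a live answer from a cached or degraded one.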

Design principles that reliably increase warfighter trust

I’ve found that the fastest path to trust isn’t “more features.” It’s fewer surprises.

Prioritize these traits:

- Latency discipline: If output arrives after the tactical decision window, it’s dead weight.

- Uncertainty display: Don’t hide confidence levels; show them clearly.

- Explainability for the user, not the auditor: A squad leader needs “why this recommendation” in plain language, not a 30-page model card.

- Workflow fit: AI should plug into existing battle drills before it tries to change them.

- Training that matches deployment reality: If it only works on pristine data and stable networks, it’s not field-ready.

This is where trust in autonomous systems for military use becomes concrete: operators accept autonomy when they understand boundaries, failure modes, and escalation paths.
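Two of these traits, latency discipline and uncertainty display, are simple enough to sketch in a few lines of Python. The `Recommendation` fields, the 0.8 and 0.5 confidence cut-offs, and the `present` function are assumptions made for illustration; real thresholds would have to come from operator testing, and the confidence score is assumed to be calibrated.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    text: str
    confidence: float  # 0.0 to 1.0, assumed to be calibrated
    latency_s: float   # seconds the system took to produce the output


def present(rec: Recommendation, decision_window_s: float) -> str:
    """Format a recommendation for the operator, or suppress it if it is late."""
    # Latency discipline: a late answer is withheld rather than shown as if current.
    if rec.latency_s > decision_window_s:
        return "NO RECOMMENDATION: output arrived after the decision window"
    # Uncertainty display: always attach a plain-language confidence label.
    if rec.confidence >= 0.8:
        label = "HIGH"
    elif rec.confidence >= 0.5:
        label = "MEDIUM"
    else:
        label = "LOW"
    return f"{rec.text} [confidence: {label}, {rec.confidence:.0%}]"


print(present(Recommendation("Prioritize track 7", 0.62, 1.5), decision_window_s=5.0))
print(present(Recommendation("Prioritize track 7", 0.95, 9.0), decision_window_s=5.0))
```

The second call is suppressed despite its high confidence, which is the point: the decision window outranks the score.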

Policy and acquisition moves that make warfighter trust the standard

If operator trust is the decisive metric, the acquisition process must force it to matter. Otherwise, it gets overridden by the metrics that are easier to brief.

1) Assign a single “trust arbiter” with directive authority

Trust is currently split across offices: engineering assurance, service-level fielding, legal compliance, and digital policy. That fragmentation guarantees slow decisions and diluted accountability.

A workable fix is to empower a central authority to arbitrate trade-offs among:

- operational trust (adoption and mission performance)

- technical trust (security and robustness)

- legal trust (accountability and policy compliance)

This role must be able to stop programs that don’t earn operator confidence—not just suggest improvements.

2) Add an operator trust gate as a formal milestone requirement

Congress and DoD leadership have a clean lever: make “operator trust assessment” a required milestone before production or operational deployment for AI-enabled systems.

A credible operator trust gate includes:

- early operator evaluation (not just end-stage OT&E)

- iterative testing tied to mission scenarios

- measurable adoption intent (would units choose it when alternatives exist?)

- documented failure drills (what operators do when AI output is wrong)

This directly supports better policy and decision-making for national security because it aligns approvals with real operational value.

3) Fund operator-led field experimentation with direct vendor feedback

Operator trust is earned during exercises, deployments, and messy environments—not conference rooms.

DoD needs broader authorities and budgets that let units:

- pilot AI tools rapidly

- modify interfaces and workflows

- run side-by-side comparisons against current processes

- provide direct feedback to vendors on what fails in the field

If vendor feedback loops are slow, AI improvement cycles stall. If improvement cycles stall, trust never accumulates.

A practical blueprint: how to build warfighter trust in 90 days

You can compress trust-building if you treat it like an operational campaign, not a compliance project. Here’s a field-tested sequence that organizations supporting defense AI can apply.

Days 0–15: Pick missions, not models

Start with a narrow operational problem:

- targeting support

- ISR triage and prioritization

- maintenance prediction for a specific platform

- cyber alert reduction for a defined network segment

Success metric example: reduce analyst queue time by 30% in an exercise cell or cut false positives by 25% while preserving detection rate.
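A success metric like this only helps if it is checked the same way every time. The sketch below shows one way to encode both example targets in Python; the function names and all the numbers in the usage lines are hypothetical, and "preserving detection rate" is interpreted here as "no drop beyond a stated tolerance," which is an assumption.

```python
def relative_reduction(baseline: float, observed: float) -> float:
    """Fraction by which observed improved on baseline (0.30 means 30% lower)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - observed) / baseline


def queue_goal_met(before_min: float, after_min: float, target: float = 0.30) -> bool:
    """True if analyst queue time fell by at least the target fraction."""
    return relative_reduction(before_min, after_min) >= target


def false_positive_goal_met(
    fp_before: int,
    fp_after: int,
    detection_before: float,
    detection_after: float,
    target: float = 0.25,
    detection_tolerance: float = 0.0,
) -> bool:
    """True if false positives fell by the target AND detection rate held."""
    fewer_false_alarms = relative_reduction(fp_before, fp_after) >= target
    detection_held = detection_after >= detection_before - detection_tolerance
    return fewer_false_alarms and detection_held


# Hypothetical exercise numbers, for illustration only.
print(queue_goal_met(before_min=40, after_min=26))         # 35% reduction
print(false_positive_goal_met(200, 140, 0.92, 0.92))       # 30% fewer, detection held
print(false_positive_goal_met(200, 140, 0.92, 0.85))       # detection dropped
```

Pairing the false-positive target with a detection guard keeps a team from "winning" the metric by simply alerting less.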

Days 15–45: Run DDIL-first prototyping

Test under constraints that resemble real operations:

- offline / edge compute mode

- noisy data and missing fields

- intermittent connectivity

- adversarial red-teaming of inputs

If the system can’t tolerate ugly conditions, it won’t survive deployment.

Days 45–75: Measure adoption drivers, not just accuracy

Track:

- training time to basic proficiency

- time-to-decision impact

- operator overrides and why they happen

- failure frequency and recovery time

Accuracy without usability is a trap. Military AI adoption depends more on friction than on F1 scores.
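As a rough illustration of how these adoption drivers could be pulled from an exercise log, here is a small Python sketch. The log schema (`decision_s`, `overridden`, `reason`) and the sample entries are invented for the example; a real program would define its own fields with the units running the test.

```python
from collections import Counter
from statistics import median

# Hypothetical exercise log: one entry per AI-assisted decision.
events = [
    {"decision_s": 42.0, "overridden": False, "reason": None},
    {"decision_s": 65.0, "overridden": True, "reason": "stale data"},
    {"decision_s": 38.0, "overridden": False, "reason": None},
    {"decision_s": 51.0, "overridden": True, "reason": "stale data"},
    {"decision_s": 47.0, "overridden": True, "reason": "low confidence"},
]


def summarize(log):
    """Summarize override behavior and time-to-decision for one exercise."""
    if not log:
        raise ValueError("log is empty")
    overrides = [e for e in log if e["overridden"]]
    return {
        # Share of AI outputs the operator chose not to follow.
        "override_rate": len(overrides) / len(log),
        # Median is less sensitive than the mean to one very slow decision.
        "median_decision_s": median(e["decision_s"] for e in log),
        # Reasons ranked by frequency: the "why" behind the overrides.
        "override_reasons": Counter(e["reason"] for e in overrides).most_common(),
    }


summary = summarize(events)
print(summary)
```

The ranked override reasons are usually the most actionable output. In this made-up log, "stale data" points at the pipeline rather than the model.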

Days 75–90: Ship a “trusted minimum” and iterate

Field the smallest useful capability with:

- clear boundaries (“use for X, not for Y”)

- visible confidence signals

- a manual fallback mode

- a rapid update path

Operators will forgive limitations. They won’t forgive unpredictability.

The strategic payoff: faster diffusion, better deterrence

When warfighters define which AI can be trusted, diffusion accelerates, and diffusion is where military advantage lives. Not in demos, not in PowerPoint, and not in lab scores.

This matters for national security outcomes because adversaries aren’t waiting for perfect assurance. They’re fielding, learning, iterating, and accepting risk. The U.S. can still be disciplined without being paralyzed, but only if it treats operator trust as the center of gravity.

For this “AI in Defense & National Security” series, the throughline is consistent: AI isn’t just a technology upgrade. It’s a force employment problem. The systems that win are the ones units choose to rely on.

Takeaway: A military AI system is “trustworthy” only when operators prefer it over the old way during real missions.

What to do next if you’re responsible for defense AI outcomes

If you’re a program executive, a capability developer, a vendor, or a policy lead, take three actions immediately:

- Define operator trust metrics before the next milestone review. If you can’t measure field confidence, you can’t manage it.

- Run DDIL and failure-mode tests early. Don’t wait for formal operational test events to discover basic fragility.

- Create a real feedback loop from units to builders. Monthly improvement cycles beat annual “requirements rewrites.”

The Pentagon will keep buying AI. The question is whether it will field AI that warfighters actually trust—AI that changes outcomes in a contested environment—or whether “responsible AI” becomes another binder on a shelf.

What would happen if, in 2026 budget decisions, operator trust became the metric that program offices feared most—and pursued most aggressively?