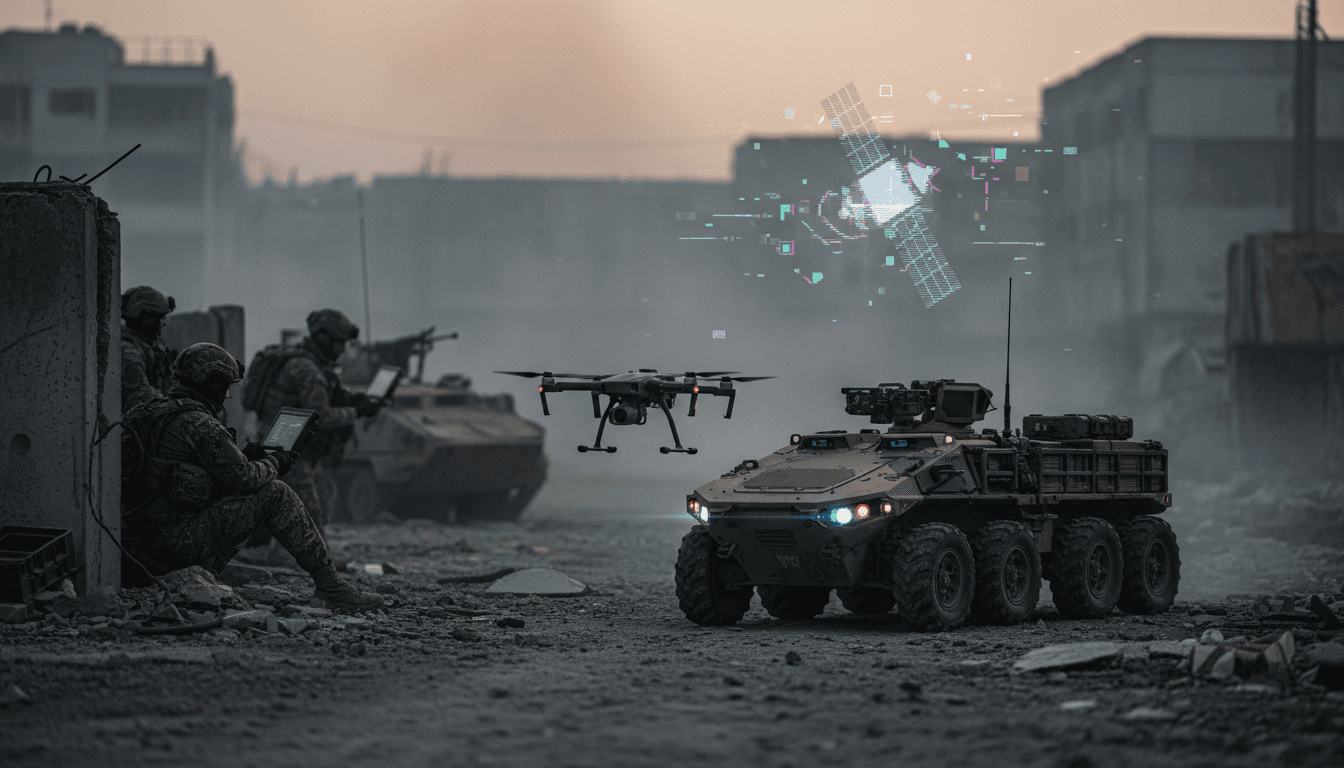

True AI autonomy keeps missions running when GPS and data links fail. Learn what it is, why it matters, and how to procure it in 2026.

True AI Autonomy: Winning When GPS and Links Fail

A tough lesson from the last two years of battlefield innovation is that “autonomous” often means “works great until the spectrum gets noisy.” When GPS is jammed, data links are cut, and operators are overloaded or unavailable, plenty of modern systems stop being “AI-enabled” and start being expensive paperweights.

That failure mode isn’t theoretical. It’s been stress-tested in the Russo-Ukrainian War, where electronic warfare has turned connectivity into a consumable and where the demand for drone pilots has collided with the reality of training time and human fatigue. Meanwhile, Russia and China are designing for the environment they expect to fight in: contested, degraded, and denied.

For this installment of our AI in Defense & National Security series, I’m going to take a clear stance: the West won’t get to resilient, scalable autonomy by upgrading remote control. We get there by building systems that can perceive, decide, and act onboard—even when they’re alone, jammed, and under pressure.

The autonomy myth that keeps breaking programs

Most organizations buy “autonomy features” and then wonder why they don’t get autonomous outcomes. The root problem is language: procurement teams, operators, and vendors often use the same word—autonomous—to describe totally different realities.

Here’s the line that matters in 2025:

If a platform needs GPS, a stable data link, or constant human inputs to complete its mission, it isn’t truly autonomous.

A practical definition of true AI autonomy

True AI autonomy is onboard capability—sensors plus algorithms plus compute—that lets a drone, robot, or munition complete an end-to-end mission without relying on external connectivity or continuous human supervision.

That includes three concrete functions:

- Perceive: Understand the environment with onboard sensing (vision, radar, inertial sensors, etc.).

- Decide: Choose actions in real time given uncertainty and adversary interference.

- Act: Navigate, avoid threats/obstacles, and execute tasks (recon, resupply, targeting) independently.

That definition is intentionally strict. Why? Because battlefields punish wishful thinking. If your concept of operations (CONOPS) assumes “the link will probably be there,” you’ve already designed in a weakness an adversary can exploit with a few hundred dollars’ worth of jamming gear.

Why true autonomy is a strategic imperative (not a science project)

The value of AI autonomy in defense is simple: it preserves mission effectiveness when the battlefield removes your crutches. And those crutches—GPS and comms—are exactly what capable adversaries attack first.

Electronic warfare turns connectivity into a liability

The Russo-Ukrainian War has repeatedly shown that jamming and spoofing can sharply reduce the effectiveness of precision and remotely operated systems when they depend on GPS or datalinks. You can’t “train harder” to fix a missing signal.

What changes with true autonomy is the dependency stack:

- Navigation shifts from GPS-first to multi-sensor, map-aware, inertial + vision/radar approaches.

- Targeting shifts from “operator sees and clicks” to onboard perception with constrained rules.

- Mission execution shifts from “constant joystick inputs” to supervision-by-exception.

The strategic point: autonomy is what makes mass viable. If every drone needs a dedicated operator and an unjammed link, scaling up just creates a larger queue of failures.

The operator bottleneck is real—and it’s measurable

Allies have delivered enormous numbers of drones to Ukraine, but there’s a hard limiter: humans. Training pipelines take time (months, not weeks), and even skilled operators saturate quickly under stress, fatigue, and attrition.

True autonomy doesn’t remove humans from the system; it changes how humans contribute:

- One operator can supervise multiple systems.

- Routine tasks (route following, obstacle avoidance, return-to-base) stop consuming attention.

- Humans focus on intent, constraints, and escalation decisions, not continuous piloting.

If your autonomy plan doesn’t reduce operator workload, it’s not an autonomy plan—it’s a feature list.
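Supervision-by-exception can be sketched as a filter: routine status stays onboard, and only the reports that need a human reach the operator’s queue. This is a minimal illustration assuming a hypothetical `StatusReport` record and placeholder thresholds. None of the field names or values come from a real system.

```python
from dataclasses import dataclass


@dataclass
class StatusReport:
    # Hypothetical status record; real platforms expose different fields.
    vehicle_id: str
    battery_pct: float
    route_deviation_m: float
    needs_escalation: bool  # an onboard rule flagged a decision reserved for humans


def exceptions_for_operator(reports, min_battery=20.0, max_deviation_m=50.0):
    """Return only the reports that need human attention (placeholder thresholds).

    Nominal reports (on route, adequate battery, nothing flagged) are handled
    onboard and never enter the operator's queue.
    """
    return [
        r for r in reports
        if r.needs_escalation
        or r.battery_pct < min_battery
        or r.route_deviation_m > max_deviation_m
    ]


if __name__ == "__main__":
    fleet = [
        StatusReport("ugv-1", 80.0, 3.0, False),
        StatusReport("ugv-2", 15.0, 4.0, False),    # low battery
        StatusReport("ugv-3", 70.0, 120.0, False),  # far off route
        StatusReport("ugv-4", 90.0, 1.0, False),
    ]
    print([r.vehicle_id for r in exceptions_for_operator(fleet)])  # ['ugv-2', 'ugv-3']
```

Four vehicles report, and two reach the operator. The thresholds are placeholders. In practice, deciding what counts as an exception is itself a requirements decision.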

Logistics and casualty evacuation are the fastest win

Here’s an under-discussed reality: the most immediate ROI for autonomy often isn’t strike; it’s sustainment. In Ukraine, logistics and casualty evacuation have reportedly accounted for a large share of unmanned ground vehicle missions (one estimate puts it at 47%).

This matters because logistics is where denial environments are brutal:

- Routes change daily.

- Daylight movement is punished.

- Links fail exactly when you need them most.

A tele-operated evacuation robot that loses comms can freeze in place—turning a rescue attempt into a stalled target. True autonomy (onboard navigation, local obstacle handling, safe fallback behaviors) directly improves survival odds by keeping the vehicle moving with purpose even when it’s “cut off.”
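Safe fallback behaviors like these are commonly expressed as a small state machine keyed on how long the link has been silent. The following hypothetical sketch uses illustrative mode names and timeouts. The 5 s and 120 s values are placeholders, not figures from any program, and local obstacle handling is assumed to run underneath whichever mode is selected.

```python
from enum import Enum


class Mode(Enum):
    TELEOP = "teleop"                    # link healthy: operator drives
    CONTINUE_ROUTE = "continue_route"    # short outage: keep following the stored route
    RETURN_TO_RALLY = "return_to_rally"  # long outage: go to a pre-agreed rally point
    HOLD_SAFE = "hold_safe"              # last resort: stop in a safe posture


# Placeholder timeouts (assumptions, not values from any fielded system).
SHORT_LOSS_S = 5.0
LONG_LOSS_S = 120.0


def select_mode(seconds_since_last_packet, has_stored_route, has_rally_point):
    """Choose a behavior from link age instead of freezing when comms drop."""
    if seconds_since_last_packet < SHORT_LOSS_S:
        return Mode.TELEOP
    if seconds_since_last_packet < LONG_LOSS_S and has_stored_route:
        return Mode.CONTINUE_ROUTE
    if has_rally_point:
        return Mode.RETURN_TO_RALLY
    return Mode.HOLD_SAFE


if __name__ == "__main__":
    for age in (1.0, 30.0, 300.0):
        print(age, select_mode(age, has_stored_route=True, has_rally_point=True).value)
    # 1.0 teleop
    # 30.0 continue_route
    # 300.0 return_to_rally
```

Freezing in place (`HOLD_SAFE`) is still available, but only as the last branch, not as the default reaction to a dropped link.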

The real barriers: not algorithms, ecosystems

Western defense organizations aren’t blocked by a lack of clever ideas. They’re blocked by missing infrastructure that makes autonomy iteration fast. If you want systems that evolve on 18–24 month cycles, you need an ecosystem that can test, learn, and update at that rhythm.

Data scarcity and the “reinvent the wheel” tax

Autonomy is hungry for data: sensor streams, labeled imagery, terrain, weather, edge cases, failure modes. Yet many programs treat data as a byproduct instead of a strategic asset.

The result is predictable:

- Each vendor builds small, siloed datasets.

- Validation is inconsistent.

- Models generalize poorly when moved to new terrain, lighting, seasons, or adversary tactics.

A better approach is boring—but powerful: shared, government-backed baseline datasets (with controlled tiers for sensitivity) that let innovators compete on capability rather than who can afford the most collection flights.

Testing ranges: autonomy dies in PowerPoint

Autonomy improves through repetition. Field trials, rapid adjustments, back to the range. When access to ranges is limited or administratively painful, teams overfit to simulation and underfit to reality.

If you want resilient autonomy, you need:

- Frequent live trials (weekly, not quarterly)

- EW-contested environments as the default

- Standardized “autonomy scorecards” (navigation success rates, mission completion under jamming, recovery behaviors)
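A standardized scorecard mostly comes down to logging every trial the same way and computing the same rates. The minimal sketch below assumes a hypothetical `Trial` record. Its fields map to the three example metrics above, and the four sample runs are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    # Hypothetical per-run log record.
    gps_denied: bool
    jammed_link: bool
    reached_waypoints: bool   # navigation success
    mission_completed: bool
    fault_occurred: bool
    recovered: bool           # only meaningful when fault_occurred is True


def rate(numerator, denominator):
    """Return a fraction, or None when no trials qualified for the metric."""
    return numerator / denominator if denominator else None


def scorecard(trials):
    denied = [t for t in trials if t.gps_denied]
    jammed = [t for t in trials if t.jammed_link]
    faulted = [t for t in trials if t.fault_occurred]
    return {
        "gps_denied_nav_success": rate(sum(t.reached_waypoints for t in denied), len(denied)),
        "completion_under_jamming": rate(sum(t.mission_completed for t in jammed), len(jammed)),
        "recovery_success": rate(sum(t.recovered for t in faulted), len(faulted)),
        "trials": len(trials),
    }


if __name__ == "__main__":
    runs = [
        Trial(True, True, True, True, False, False),
        Trial(True, True, False, False, True, True),
        Trial(False, True, True, True, True, False),
        Trial(True, False, True, True, False, False),
    ]
    print(scorecard(runs))
```

Returning `None` for an empty category keeps “never tested under jamming” distinct from “scored 0% under jamming,” which matters when scorecards are compared across vendors.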

Competitions help, too, because they force clarity: either your system completes the course under constraints or it doesn’t.

Open-source collaboration (with grown-up security)

Defense autonomy often lives behind walls—some for good reasons. But treating every building block as secret creates duplication and slows maturation.

The middle path is workable:

- Open, vetted libraries for non-sensitive components (mapping, state estimation, autonomy middleware)

- Strong software supply-chain controls

- Clear boundaries around sensitive tactics and signatures

Done right, this expands the talent pool and shortens time-to-capability.

Architecture decides whether autonomy survives contact

You can’t buy “true AI autonomy” as a one-time procurement. Adversaries adapt. Sensors change. Compute gets smaller. New jammers show up. That means the system’s architecture has to assume upgrades are constant.

Modular, upgradeable systems are the only scalable path

Militaries can’t replace entire fleets every two years. The realistic approach is:

- Modular payload bays (swap sensors quickly)

- Standardized compute interfaces

- Software-defined behaviors that can update without depot-level rebuilds

- Open standards where possible to avoid vendor lock-in

One-liner worth remembering: If autonomy can’t be upgraded in months, it will be defeated in months.
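In code, “standardized interfaces” can be as simple as a narrow contract that every sensor module implements. Swapping a payload then means adding a class and editing a priority list, not rebuilding the stack. The `NavSource` protocol, its members, and both example modules are hypothetical illustrations, not an existing standard, and the position values are placeholders.

```python
from typing import List, Optional, Protocol, Tuple


class NavSource(Protocol):
    """Hypothetical interface every navigation module implements."""
    name: str

    def healthy(self) -> bool: ...

    def position_estimate(self) -> Optional[Tuple[float, float]]: ...


class GpsModule:
    name = "gps"

    def __init__(self, jammed: bool):
        self.jammed = jammed

    def healthy(self) -> bool:
        return not self.jammed

    def position_estimate(self) -> Optional[Tuple[float, float]]:
        return None if self.jammed else (100.0, 200.0)  # placeholder fix


class VisualOdometryModule:
    name = "visual_odometry"

    def healthy(self) -> bool:
        return True

    def position_estimate(self) -> Optional[Tuple[float, float]]:
        return (101.5, 198.7)  # placeholder estimate


def best_position(sources: List[NavSource]):
    """Use the first healthy source in priority order; the order is configuration."""
    for src in sources:
        if src.healthy():
            est = src.position_estimate()
            if est is not None:
                return src.name, est
    return None


if __name__ == "__main__":
    stack = [GpsModule(jammed=True), VisualOdometryModule()]
    print(best_position(stack))  # ('visual_odometry', (101.5, 198.7))
```

Because the priority list is data, adding a radar-based module later means writing one new class with the same three members. Nothing upstream changes. A real stack would fuse sources instead of picking one, but this sketch is only about the interface boundary.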

What “procurement signals” should actually say

Vendors build what buyers reward. If contracts reward “autonomy-like features” instead of autonomy under denial, that’s what the market produces.

Procurement language should demand outcomes such as:

- Mission completion with no GPS

- Mission completion with no data link for X minutes/hours

- Demonstrated fallback behaviors (safe return, continue mission under constraints, handoff to another node)

- Performance reporting: success rates, abort causes, recovery success
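Outcome-based requirements become testable once each one is written as a metric plus a threshold. The minimal sketch below assumes hypothetical metric names and thresholds. The 0.90, 0.95, and 30-minute figures are placeholders, the last standing in for the “X minutes/hours” a real solicitation would specify.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    metric: str        # key expected in the vendor's measured results
    threshold: float   # minimum acceptable value
    description: str


# Placeholder requirements; a real solicitation would set its own metrics and values.
REQUIREMENTS = [
    Requirement("no_gps_completion_rate", 0.90, "Mission completion with no GPS"),
    Requirement("link_loss_minutes_completed", 30.0, "Mission completion with no data link (placeholder: 30 min)"),
    Requirement("fallback_success_rate", 0.95, "Demonstrated fallback behaviors"),
]


def evaluate(measured, requirements=REQUIREMENTS):
    """Return (description, measured value, passed) for each requirement.

    A metric the vendor did not report counts as a failure.
    """
    report = []
    for req in requirements:
        value = measured.get(req.metric)
        passed = value is not None and value >= req.threshold
        report.append((req.description, value, passed))
    return report


if __name__ == "__main__":
    vendor_results = {"no_gps_completion_rate": 0.93, "link_loss_minutes_completed": 12.0}
    for description, value, passed in evaluate(vendor_results):
        print("PASS" if passed else "FAIL", description, value)
```

Here the unreported fallback metric fails by default, following the same logic as “if you can’t measure it, you can’t scale it.”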

If you can’t measure it, you can’t scale it.

What next-generation AI autonomy looks like (and what to plan for now)

The next phase of autonomy won’t just be “today’s drones, but smarter.” It’ll change tasking, tempo, and the boundary between platforms.

Mission autonomy: from task execution to intent interpretation

Near-term, humans set goals (“search this grid,” “deliver to this coordinate”). Within a few years, the more disruptive capability is systems that can operate from commander’s intent:

- Prioritize targets based on context

- Re-route around new threats without asking

- Allocate roles across a team (sense, decoy, relay, strike)

That’s not a license for unbounded behavior. It’s a requirement for operating when humans can’t micromanage at machine speed.
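At its simplest, re-routing within bounds means replanning a path when the map changes while hard constraints stay fixed. The sketch below uses textbook A* search on a grid. The grid, the “no-go” cells standing in for a newly reported threat, and the function name are illustrative assumptions. Real planners use far richer cost models.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid; cells equal to 1 are no-go.

    Returns a list of (row, col) cells from start to goal, or None when the
    constraints leave no path (the planner never crosses a no-go cell).
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


if __name__ == "__main__":
    grid = [[0] * 5 for _ in range(3)]
    plan = astar(grid, (1, 0), (1, 4))
    grid[1][2] = 1  # hypothetical new threat report marks this cell no-go
    replan = astar(grid, (1, 0), (1, 4))
    print(len(plan) - 1, len(replan) - 1)  # 4 6
```

Returning `None` instead of cutting through a no-go cell is the “bounded” part. When constraints leave no path, the system should fall back or escalate rather than improvise.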

Navigation and sensing beyond GPS

Expect serious investment in:

- Vision/radar-based navigation and mapping

- Inertial + terrain-referenced approaches

- Multi-modal sensor fusion that remains useful in fog, low light, and clutter

The goal isn’t perfect perception. The goal is graceful degradation—the system still completes the mission at acceptable risk when conditions get messy.
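Graceful degradation has a concrete meaning in state estimation. When absolute fixes (GPS, a vision landmark match) drop out, the system keeps navigating on dead reckoning while its stated uncertainty grows, and the uncertainty tightens again when a fix returns. The one-dimensional Kalman filter below is a textbook illustration of that behavior, not a description of any fielded navigation stack. The noise values and the fix sequence are arbitrary assumptions.

```python
def kalman_step(x, p, velocity, dt, q, z=None, r=1.0):
    """One predict/update cycle of a 1-D Kalman filter with a dead-reckoning input.

    x, p     : position estimate and its variance
    velocity : inertial/odometry velocity used to dead-reckon forward
    q        : process noise variance added per step (drift)
    z, r     : optional absolute position fix and its variance
    """
    # Predict: move forward on dead reckoning; uncertainty grows every step.
    x = x + velocity * dt
    p = p + q
    # Update: only when an external fix is available this step.
    if z is not None:
        k = p / (p + r)  # Kalman gain
        x = x + k * (z - x)
        p = (1 - k) * p
    return x, p


if __name__ == "__main__":
    x, p = 0.0, 1.0
    fixes = [1.1, 2.0, None, None, None, None, 7.2]  # None = fix denied (e.g., jammed)
    for step, z in enumerate(fixes, start=1):
        x, p = kalman_step(x, p, velocity=1.0, dt=1.0, q=0.5, z=z)
        print(f"step {step}: x={x:.2f}  variance={p:.2f}")
```

Running it shows the variance climbing by `q` on each denied step and dropping when the step-7 fix arrives. The estimate never stops; it just becomes less certain, and that growing uncertainty is what “acceptable risk” has to be judged against.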

Counter-robot warfare becomes a standard mission

As autonomous systems proliferate, so do systems designed to kill them. Ukraine has already shown the logic of interceptor drones and machine-on-machine engagements.

Planning implication: autonomy programs should budget for survivability against:

- Small interceptors

- Spoofing and decoy tactics

- Rapidly changing EW profiles

Autonomy that can’t adapt becomes a predictable target.

A practical playbook for defense leaders in 2026 budget cycles

If you want true AI autonomy, fund the infrastructure that makes iteration cheap and constant. Here are five moves that actually shift outcomes.

- Stand up shared autonomy datasets (tiered access, standardized labeling, continuous updates).

- Build autonomy test ranges that assume EW (jamming/spoofing as a routine condition, not a special event).

- Require modular architectures in solicitations (payload, compute, software upgrade paths; clear interface standards).

- Procure autonomy by measured outcomes (GPS-denied navigation success rate, comms-loss mission completion rate, recovery behaviors).

- Create on-ramps for non-traditional vendors with faster contracting lanes and realistic test opportunities.

These aren’t glamorous. They’re how you stop paying the “prototype forever” tax.

Where this fits in AI for defense and national security

AI in defense isn’t only about analysis dashboards or faster targeting cycles. It’s also about operational continuity—keeping surveillance, logistics, and mission planning functioning when an adversary tries to blind and deafen you.

True AI autonomy is the connective tissue across the whole series theme:

- Surveillance: persistent sensing without continuous control links

- Cybersecurity & resilience: reduced dependency on fragile external signals

- Mission efficiency: fewer operators per system, faster OODA (observe, orient, decide, act) loops at the edge

- Force modernization: architectures that evolve at tech speed, not bureaucracy speed

If you’re responsible for capability development or acquisition, the question worth sitting with is simple: Are you buying autonomy that looks good in a demo, or autonomy that survives when the battlefield turns hostile to connectivity?

If you want to pressure-test your autonomy roadmap—datasets, test strategy, modular architecture, and measurable requirements—this is exactly the kind of problem our teams help defense organizations work through.