AI risk scoring can speed Air Force waiver decisions, reduce grounded aircraft, and balance safety with readiness. See practical use cases and next steps.

AI Risk Scoring to Fix Air Force Readiness Bottlenecks

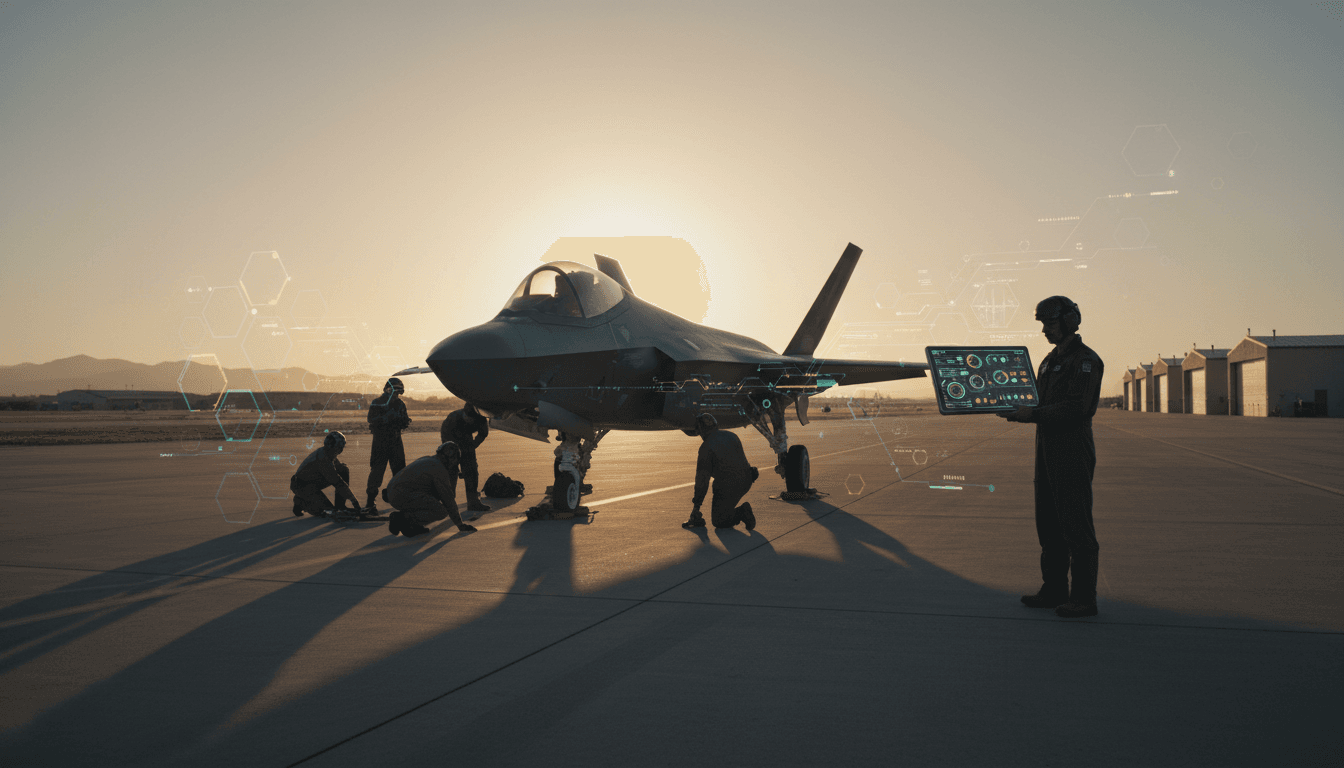

A fighter sits at the end of the flight line. Fuel’s topped off. Crew chiefs are finishing checks. The pilot is geared up. Then someone spots a small crack—barely out of spec. A waiver request goes in. Hours later, it comes back denied.

That aircraft doesn’t stay grounded because the unit can’t fight. It stays grounded because the decision process can’t move at the speed of the mission.

The U.S. Air Force’s readiness problem is often framed as a supply chain problem, a fleet-age problem, or an acquisition problem. Those are real. But there’s a quieter choke point hiding in plain sight: how technical risk decisions get made—and how slowly they travel through rigid approval workflows. In a force with fewer than 5,000 aircraft projected in inventory and only 62% mission capable (meaning roughly 1,900 aircraft can’t fly their designed missions on a given day), “red ink” isn’t just annoying. It’s operationally expensive.

This post is part of our AI in Defense & National Security series, and I’m going to take a clear stance: the fastest path to more combat-ready aircraft isn’t only buying new jets—it’s modernizing risk decisions with AI-enabled, commander-centered workflows. Not to bypass engineering. To make engineering advice faster, more consistent, and more transparent.

The hidden bottleneck: technical authority vs. operational urgency

The bottleneck is centralized decision authority paired with personal liability. In many Air Force weapon systems, a single engineering authority (often a chief engineer) is the final approver for deviations from technical data. That structure creates consistency and protects airworthiness—but it also creates a predictable outcome under stress: default-to-no.

Why “no” wins when accountability is asymmetric

Engineers are trained—and often legally exposed—to treat deviations as unacceptable unless proven safe. Maintainers and operators, on the other hand, live in a world of tradeoffs: mission timing, adversary pressure, weather windows, tanker availability, and the reality that aging platforms don’t behave like factory-fresh airframes.

When one side bears near-total personal downside, risk tolerance collapses. The system doesn’t need a villain. It produces the result automatically.

A readiness system that can’t say “yes, with conditions” will eventually say “no” to the mission.

The fleet reality makes spec purity harder every year

The average Air Force aircraft age is now about 32 years. Sustainment is increasingly an exercise in “keep it flying” under imperfect conditions: parts delays, substitute materials, nonstandard repairs, and legacy upgrades that depend on fragile sub-tier suppliers.

Meanwhile, the modernization timeline stays long. The Government Accountability Office has found that major defense programs average nearly 12 years to deliver the first version of a new system, with software often the drag factor.

So we’re left with a near-term requirement: generate combat power from what we have, faster.

Why this readiness problem is perfect for AI (and where it’s not)

AI fits this problem because the decision inputs are high-volume, repetitive, and data-rich. But AI is a poor fit if leaders expect it to “replace judgment.” The right approach is narrower and more practical: AI should standardize and accelerate risk assessment so humans can decide sooner and with clearer evidence.

What AI can do well: pattern recognition across technical history

Most waiver requests aren’t truly novel. They rhyme with past cases: similar cracks, similar locations, similar platform hours, similar environmental exposure, similar maintenance actions, similar outcomes.

A well-designed machine learning system can:

- Compare a proposed deviation to historical maintenance and failure data

- Estimate probability of failure over a time window (e.g., next sortie, next 10 hours, next 30 days)

- Flag conditions that change risk (salt air, high-G training cycles, runway quality, mission profile)

- Recommend mitigations (inspection interval changes, restrictions, short-term repair options)

The key is not pretending the model is omniscient. It’s making sure the model is consistently faster than the manual process, and honest about confidence.
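To make that concrete, here is a minimal sketch of the "compare to history, then estimate probability over a window" idea. It is not a real Air Force model: the `PastCase` fields, the 0.5 mm matching tolerance, and the sample data are all invented for illustration, and it assumes a constant failure rate per flight hour, which real structural fatigue rarely follows.

```python
import math
from dataclasses import dataclass


@dataclass
class PastCase:
    """One historical deviation (all fields are illustrative assumptions)."""
    crack_mm: float      # measured crack length
    hours_flown: float   # flight hours accumulated after the deviation was accepted
    failed: bool         # whether the part failed during those hours


def similar_cases(history, crack_mm, tolerance_mm=0.5):
    """Keep past cases whose crack length is within the tolerance of the new one."""
    return [c for c in history if abs(c.crack_mm - crack_mm) <= tolerance_mm]


def failure_rate_per_hour(cases):
    """Failures per flight hour across matched cases; None if no hours on record."""
    total_hours = sum(c.hours_flown for c in cases)
    failures = sum(1 for c in cases if c.failed)
    return failures / total_hours if total_hours > 0 else None


def prob_failure_within(rate_per_hour, window_hours):
    """P(failure within window) = 1 - exp(-rate * hours), assuming a constant hazard."""
    return 1.0 - math.exp(-rate_per_hour * window_hours)


# Invented sample history, for illustration only.
history = [
    PastCase(2.0, 120.0, False),
    PastCase(2.3, 80.0, True),
    PastCase(1.8, 200.0, False),
    PastCase(6.0, 10.0, True),  # much larger crack, excluded by the tolerance
]

matched = similar_cases(history, crack_mm=2.1)
rate = failure_rate_per_hour(matched)
if rate is None:
    print("No comparable flight hours on record; escalate to an engineer")
else:
    for hours in (2, 10):
        p = prob_failure_within(rate, hours)
        print(f"{len(matched)} similar cases, P(failure within {hours} h) = {p:.4f}")
```

Reporting the number of matched cases next to the estimate is one cheap way to stay honest about confidence: an estimate built on three cases should read very differently from one built on three hundred.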

What AI shouldn’t do: become the new single point of failure

If AI becomes “the approval,” you’ve simply swapped one black box for another. Worse, you may create brittle dependencies on data pipelines and model updates.

AI should be:

- An engineering advisor at machine speed

- A decision support tool that produces auditable rationale

- A workflow accelerator, not a permission gate

A better model: commander-owned readiness with AI-backed engineering advice

Commanders should own readiness decisions; engineers should own quantified technical advice. That’s the cultural shift this argument points toward, and AI is the missing enabler that makes it plausible at scale.

Here’s the practical difference:

- Old model: Engineer approves/denies deviation → commander receives outcome

- Better model: Engineer + AI produce quantified risk + mitigations → commander decides, records rationale

What “quantified risk” looks like in practice

Not “safe/unsafe.” Not “in/out of spec.” Those are blunt instruments.

Quantified risk can be expressed as:

- Failure likelihood within a defined operating envelope

- Severity if failure occurs (mission abort vs. catastrophic loss)

- Detectability (chance inspections catch degradation before failure)

- Time-to-failure distribution (how risk grows per flight hour)

- Mitigation effectiveness (how much an inspection or restriction reduces risk)

This is how high-reliability industries operate when perfection isn’t available. Aviation already lives on probabilistic thinking in other domains; maintenance waivers often don’t.
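As a rough sketch, the dimensions listed above can be carried in one small record and combined into a single comparable number. The multiplicative formula, the field names, and the severity scale below are my assumptions for illustration, not an established airworthiness method.

```python
from dataclasses import dataclass


@dataclass
class QuantifiedRisk:
    """Hypothetical container for the risk dimensions listed above."""
    p_failure: float          # failure likelihood within the operating envelope (0..1)
    severity: float           # relative consequence weight, e.g. 1 = abort, 100 = loss
    p_detect: float           # chance an inspection catches degradation first (0..1)
    mitigation_factor: float  # share of risk remaining after mitigation (1.0 = none)

    def undetected_failure_probability(self) -> float:
        # Assumes detection and mitigation act independently, which is a simplification.
        return self.p_failure * (1.0 - self.p_detect) * self.mitigation_factor

    def expected_severity(self) -> float:
        return self.undetected_failure_probability() * self.severity


unmitigated = QuantifiedRisk(p_failure=0.02, severity=100, p_detect=0.9, mitigation_factor=1.0)
with_inspection = QuantifiedRisk(p_failure=0.02, severity=100, p_detect=0.9, mitigation_factor=0.5)

print(f"Unmitigated expected severity:     {unmitigated.expected_severity():.3f}")
print(f"With added inspection (assumed):   {with_inspection.expected_severity():.3f}")
```

The point of the sketch is the shape of the output: a commander sees how much a specific mitigation moves the number, instead of a bare approve or deny.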

Turning waivers into structured, reusable “risk objects”

One reason approvals are slow is that waiver requests are messy: inconsistent write-ups, scattered attachments, unclear operating context.

AI can enforce structure without adding burden:

- Auto-extract key fields from maintenance notes

- Attach relevant technical orders and prior cases

- Generate a consistent “risk card” the commander and engineer both read

The result is simple: fewer back-and-forth emails, fewer missing details, faster decisions.
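Here is a small sketch of the field-extraction step, using plain regular expressions rather than a language model so it stays runnable anywhere. The note format, the patterns, and the `RiskCard` fields are hypothetical; a production system would need patterns (or a trained extractor) built from real write-ups.

```python
import re
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class RiskCard:
    tail_number: Optional[str]
    component: Optional[str]
    crack_mm: Optional[float]
    missing_fields: list


# Hypothetical note conventions: these patterns are assumptions, not a real standard.
PATTERNS = {
    "tail_number": re.compile(r"tail\s*(?:no\.?|number)?\s*[:#]?\s*(\d{2}-\d{4})", re.I),
    "component": re.compile(r"component\s*:\s*([^;\n]+)", re.I),
    "crack_mm": re.compile(r"crack[^0-9]*?(\d+(?:\.\d+)?)\s*mm", re.I),
}


def build_risk_card(note: str) -> RiskCard:
    """Pull known fields out of a free-text note and list whatever is missing."""
    found = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(note)
        found[name] = match.group(1).strip() if match else None
    crack = float(found["crack_mm"]) if found["crack_mm"] is not None else None
    missing = [name for name, value in found.items() if value is None]
    return RiskCard(found["tail_number"], found["component"], crack, missing)


note = "Tail 88-0421; component: left main gear door hinge; crack measured 2.1 mm near fastener row."
card = build_risk_card(note)
print(asdict(card))
```

Listing `missing_fields` up front is what removes the back-and-forth: the submitter is asked for the gap before the request ever reaches an engineer.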

Where AI creates real readiness gains: three high-impact use cases

The fastest readiness improvements will come from shrinking decision cycle time and preventing repeat grounding events. These use cases map directly to those outcomes.

1) Real-time waiver triage and routing

Most organizations treat every deviation like it deserves the same process. That’s a mistake.

AI can triage requests into lanes:

- Green lane: within historically accepted bounds → recommend approval with standard mitigations

- Amber lane: uncommon but comparable cases exist → escalate with suggested options

- Red lane: high severity/low detectability → immediate engineer and safety review

If you cut decision time from hours (or days) to minutes for green-lane cases, sortie generation changes.
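The lanes above can be sketched as a small routing function. The thresholds (20 comparable cases, detectability below 0.5) and the rule that a request with no comparable history goes straight to red are my assumptions; a real program would set them with engineering and safety staff.

```python
from enum import Enum


class Lane(Enum):
    GREEN = "green"
    AMBER = "amber"
    RED = "red"


def triage(severity: str, detectability: float, comparable_cases: int,
           within_accepted_bounds: bool) -> Lane:
    """Route a waiver request to a review lane (thresholds are illustrative)."""
    # Red is checked first: high severity with low detectability always goes to people.
    if severity == "high" and detectability < 0.5:
        return Lane.RED
    # Assumption: a truly novel request with no comparable history is also red.
    if comparable_cases == 0:
        return Lane.RED
    # Green requires both accepted bounds and a deep enough case history.
    if within_accepted_bounds and comparable_cases >= 20:
        return Lane.GREEN
    # Everything else has some precedent but needs a human to weigh options.
    return Lane.AMBER


print(triage("low", 0.9, 50, True))    # routine case with deep history
print(triage("low", 0.9, 3, False))    # uncommon, but comparable cases exist
print(triage("high", 0.2, 50, True))   # severe and hard to detect
```

Checking the red conditions before anything else is deliberate: a case can never be routed to green just because it also happens to sit within historical bounds.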

2) Predictive readiness: forecast bottlenecks before they ground sorties

A grounded aircraft is usually the last frame of a long movie.

AI can forecast:

- Which tail numbers are trending toward non-mission capable status

- Which parts are likely to become “no-substitute” blockers

- Which inspection cycles are mismatched to how the unit actually flies

Done right, this becomes mission planning with maintenance reality baked in, not bolted on.
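A very small stand-in for "trending toward non-mission capable" is a straight-line projection of open discrepancies per tail. This is a deliberately naive sketch: the weekly counts, the tail labels, and the limit of 10 open items are invented, and a real forecast would use far richer features than a linear trend.

```python
def slope(values):
    """Least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def tails_at_risk(weekly_open_items, limit, weeks_ahead=4):
    """Flag tails whose projected open-item count reaches the limit."""
    flagged = []
    for tail, counts in weekly_open_items.items():
        projected = counts[-1] + slope(counts) * weeks_ahead
        if projected >= limit:
            flagged.append((tail, round(projected, 1)))
    # Worst projected count first.
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Invented weekly counts of open discrepancies per (hypothetical) tail.
weekly_open_items = {
    "tail-A": [2, 3, 5, 6],
    "tail-B": [4, 4, 3, 4],
    "tail-C": [1, 1, 2, 2],
}

flagged = tails_at_risk(weekly_open_items, limit=10)
print(flagged)
```

Even a crude projection like this changes the conversation from "why is this jet down?" to "which jet do we pull forward for maintenance this week?"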

3) Digital thread for sustainment decisions (auditability wins trust)

AI adoption fails when people can’t explain why the system recommended something.

A sustainment “digital thread” should log:

- The technical condition

- The model’s risk estimate and confidence

- The human rationale (engineer and commander)

- The operational outcome (did it fly, did it fail, did it require follow-on repair)

That audit trail does two things:

- Improves the model over time

- Protects decision-makers by showing good-faith, evidence-based judgment
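One way to sketch such a log is an append-only list where each entry carries a hash of the previous one, so later edits are detectable. The hash chaining is my addition for tamper evidence, not something the workflow above requires, and the record fields are placeholders.

```python
import hashlib
import json


def _digest(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with this record's canonical JSON."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(thread: list, record: dict) -> list:
    """Add a record to the thread, linking it to the entry before it."""
    prev_hash = thread[-1]["hash"] if thread else ""
    thread.append({"record": record, "prev_hash": prev_hash,
                   "hash": _digest(prev_hash, record)})
    return thread


def verify(thread: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = ""
    for entry in thread:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


thread = []
append_record(thread, {"condition": "hinge crack 2.1 mm",
                       "risk_estimate": 0.002, "confidence": 0.7})
append_record(thread, {"engineer_rationale": "comparable to prior cases",
                       "commander_decision": "fly with inspection every 10 h"})
append_record(thread, {"outcome": "flew, no failure, follow-on repair scheduled"})

print("Thread intact:", verify(thread))
```

Because every entry depends on the one before it, the same structure that feeds model retraining also gives decision-makers a record that cannot be quietly rewritten after the fact.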

“Won’t this compromise safety?” The real safety risk is slower decisions

Safety and readiness aren’t opposites. Uncertainty is the enemy of both.

A rigid process often claims the banner of safety while creating operational side effects:

- Crews rush last-minute changes because approvals arrive late

- Units hoard parts or make informal workarounds to avoid delays

- Engineers become overwhelmed, leading to inconsistent decisions

A measured AI approach can actually strengthen airworthiness by:

- Standardizing waiver quality

- Highlighting weak signals humans miss

- Enforcing mitigations and follow-up inspections

The goal is not “approve more.” The goal is decide faster, decide consistently, and document the tradeoff.

Guardrails that should be non-negotiable

If you’re building AI for defense logistics and maintenance decision support, these guardrails matter:

- Human decision authority remains explicit (especially for high-severity cases)

- Model confidence is displayed and low-confidence cases auto-escalate

- Red-team testing for edge cases and adversarial data issues

- Data provenance controls (what data the model used, and whether it’s current)

- Fail-safe operations when networks or data feeds degrade
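Several of these guardrails can be expressed as a single check that runs before any recommendation is shown. This is a sketch under stated assumptions: the 0.8 confidence floor, the 7-day data-age limit, and the function and field names are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def guardrail_check(confidence, severity, data_timestamp, now,
                    min_confidence=0.8, max_data_age=timedelta(days=7)):
    """Return whether a case must escalate to engineer review, and why."""
    reasons = []
    # Fail safe: a missing or stale data feed never produces a quiet approval.
    if data_timestamp is None:
        reasons.append("data feed unavailable")
    elif now - data_timestamp > max_data_age:
        reasons.append("data older than allowed age")
    if confidence < min_confidence:
        reasons.append("low model confidence")
    if severity == "high":
        reasons.append("high severity")
    return {"escalate": bool(reasons), "reasons": reasons}


now = datetime(2025, 1, 20, 12, 0, tzinfo=timezone.utc)
fresh = now - timedelta(days=1)
stale = now - timedelta(days=30)

print(guardrail_check(0.92, "low", fresh, now))
print(guardrail_check(0.55, "low", stale, now))
print(guardrail_check(0.95, "high", None, now))
```

Returning the reasons, not just a flag, keeps the escalation itself auditable.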

What leaders can do in 90 days (without waiting for a decade-long program)

This isn’t an “after acquisition reform” problem. It’s a workflow problem.

Here’s a practical 90-day starter plan I’ve seen work in other regulated environments:

- Pick one platform + one waiver class (e.g., recurring structural deviations)

- Standardize the waiver template so inputs become consistent

- Build a historical case library (approved/denied + outcomes)

- Deploy an AI triage assistant that drafts risk cards and routes cases

- Measure two metrics weekly:

  - Median time-to-decision

  - Sorties lost due to pending engineering disposition

If you can show a measurable reduction in “awaiting disposition” downtime, you’ve created the internal demand signal to scale.
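Both metrics are cheap to compute once waiver records carry timestamps. A minimal sketch, with invented sample records and hypothetical field names:

```python
from datetime import datetime
from statistics import median


def median_hours_to_decision(rows):
    """Median elapsed hours between submission and decision."""
    return median((r["decided"] - r["submitted"]).total_seconds() / 3600 for r in rows)


def sorties_lost(rows):
    """Total sorties lost while requests awaited engineering disposition."""
    return sum(r["sorties_lost"] for r in rows)


# Invented sample week, for illustration only.
week = [
    {"submitted": datetime(2025, 1, 6, 8, 0), "decided": datetime(2025, 1, 6, 14, 0), "sorties_lost": 1},
    {"submitted": datetime(2025, 1, 7, 9, 0), "decided": datetime(2025, 1, 7, 11, 0), "sorties_lost": 0},
    {"submitted": datetime(2025, 1, 8, 7, 0), "decided": datetime(2025, 1, 9, 13, 0), "sorties_lost": 3},
]

print(f"Median time-to-decision: {median_hours_to_decision(week):.1f} h")
print(f"Sorties lost awaiting disposition: {sorties_lost(week)}")
```

The median is used rather than the mean so that one multi-day outlier does not hide improvement in the routine cases.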

The bigger point for AI in Defense & National Security

The defense AI conversation often gravitates toward autonomy, targeting, and intelligence analysis. Sustainment decisions don’t sound as glamorous. They’re also where combat power quietly evaporates.

A force with modern algorithms but grounded aircraft is not a modern force. AI in national security has to include the unglamorous systems: maintenance workflows, waiver approvals, technical data interpretation, and supply forecasting.

The Air Force’s readiness numbers—62% mission capable, ~1,900 aircraft unable to fly their designed missions—aren’t just a logistics headache. They’re a strategic constraint.

If your organization is serious about AI-enabled readiness, start where friction is highest and decisions repeat daily: risk assessment, waiver disposition, and sustainment triage. What would change in your sortie generation if “hours later: denied” became “five minutes: approved with constraints”—and everyone could see exactly why?

If you’re building or buying AI for defense logistics, maintenance decision support, or mission planning workflows, the fastest wins come from systems that produce quantified risk, auditable rationale, and faster routing—not flashy dashboards.