AI-driven deterrence is becoming essential as Iran and North Korea create a two-front nuclear challenge. Learn how AI improves warning, planning, and resilience.

AI Deterrence for Iran & North Korea’s Nuclear Shift

A two-front nuclear problem breaks the mental model most deterrence planning still relies on: that crises arrive one at a time, with enough “strategic bandwidth” to concentrate intelligence, diplomacy, and forces on a single region.

The hard numbers already point to why this matters. By the last confirmed public accounting in September 2025, Iran held 440.9 kg of uranium enriched to 60%—a short technical step from weapons-grade material. In parallel, U.S. open-source and government assessments describe North Korea as fielding a broader set of nuclear-capable delivery options and expanding its nuclear-use doctrine. Put those trends together, and you get the scenario planners dread: two nuclear flashpoints with overlapping timelines.

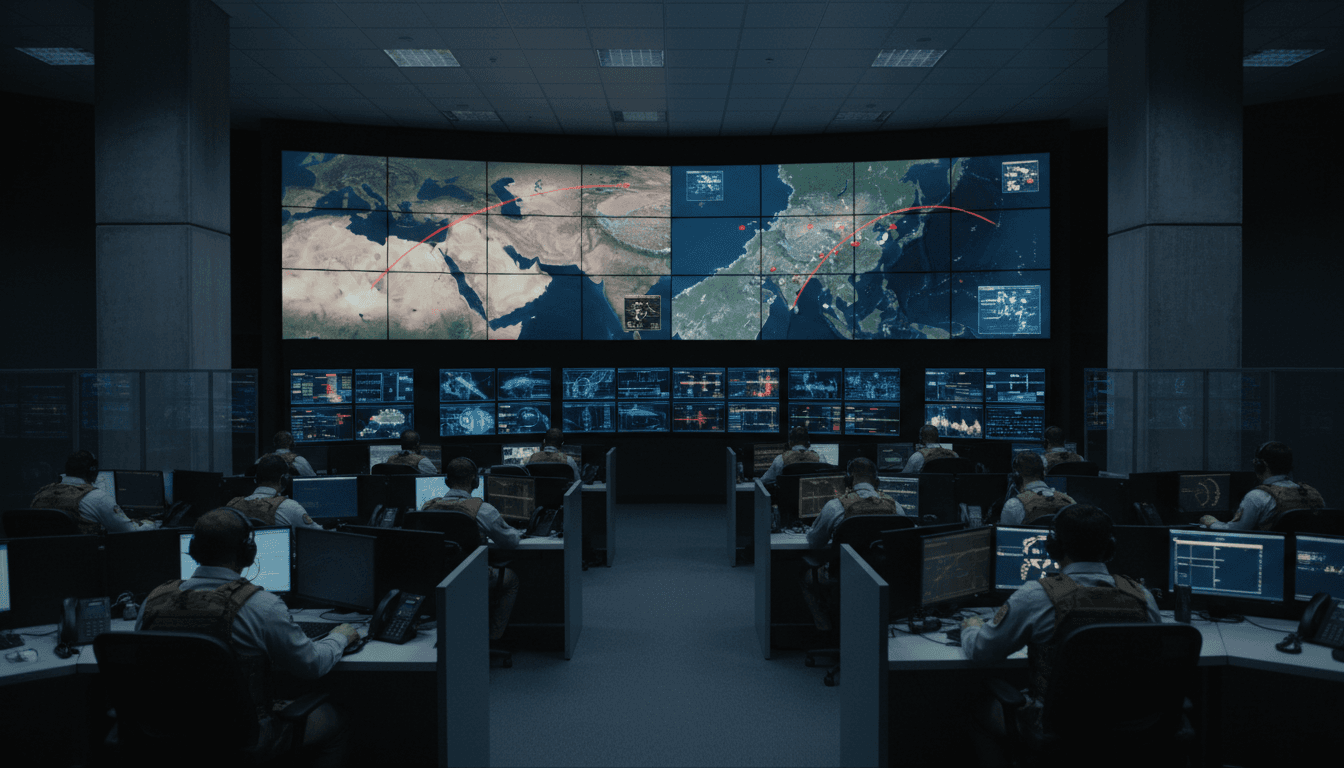

This post is part of our AI in Defense & National Security series, so I’m going to take a stance: AI is becoming a core enabler of credible U.S. deterrence—not because it “decides” policy, but because it compresses the time between detection, understanding, and response. In a two-front nuclear challenge, that time compression is the difference between coordinated crisis management and improvisation.

Why the “two-front” nuclear problem is harder than it sounds

Answer first: Two simultaneous nuclear contingencies don’t just double workload—they multiply risk because the U.S. must make linked decisions under tighter time pressure with allies who have different thresholds and priorities.

A single-theater nuclear crisis is already a stress test for intelligence fusion, readiness, and signaling. A dual-theater crisis creates failure modes that are easy to underestimate:

- Cognitive overload at the top: Senior decision-makers can’t absorb two fast-moving nuclear pictures at once without structured support.

- Resource contention: ISR collection, missile-defense readiness, bomber tasking, cyber teams, and diplomatic attention get pulled in opposite directions.

- Alliance timing problems: Seoul and Tokyo may need reassurance on hours-to-days timelines, while Israel and Gulf partners may be operating on minutes-to-hours timelines.

- Adversary opportunity: Each adversary can probe for openings when the U.S. is distracted elsewhere.

Deterrence isn’t a vibe. It’s a system: credible capability, clear signaling, and confidence that you can manage escalation. A two-front problem attacks all three.

The most dangerous interaction: cross-theater signaling

The interaction risk isn’t theoretical. If Washington surges forces to one region, the other adversary can interpret it as reduced coverage—or intentionally portray it that way to extract concessions. Deterrence becomes less about what you can do and more about what you can do at the same time.

That’s where AI-enabled planning and decision support start to matter.

What’s changing in North Korea and Iran—and why it compresses timelines

Answer first: North Korea is building a more survivable and diversified nuclear delivery set, while Iran’s enrichment posture and reduced transparency shrink warning time—together they reduce the margin for diplomatic and military maneuver.

North Korea: toward a diversified, harder-to-stop force

Public reporting and expert analysis describe North Korea’s trajectory in three reinforcing directions:

- Doctrine and thresholds: A law enacted in September 2022 expanded conditions for nuclear use, lowering the threshold relative to prior posture.

- Delivery diversity: Solid-fueled road-mobile missiles, sea-based options, and interest in multiple warheads complicate defense.

- Crisis leverage: The more credible and survivable the force, the more Pyongyang can attempt limited escalation while betting the U.S. will avoid major retaliation.

From a deterrence perspective, this shifts the problem from “prevent the first nuclear use” to “prevent a coercive ladder where the adversary believes they can control escalation.”

Iran: enrichment momentum plus weaker verification

On Iran, the technical facts that matter are straightforward:

- Large 60% stockpile (as of Sept 2025): 440.9 kg is not weapons-grade (typically around 90% enrichment), but it’s close in enrichment terms.

- Verification gaps: By November 2025, the IAEA reported it could no longer verify the status of Iran’s near-weapons-grade stockpile after Iran halted cooperation following June 2025 strikes on key facilities.

This is the combination that keeps planners up: enrichment capacity + less transparency = less warning.

The bridge between the two: a long history of alignment

The strategic nightmare isn’t just two separate challenges. It’s collaboration—technology transfer, materials, components, or training flows. Even limited assistance can shorten timelines, diversify options, and raise the cost of interdiction.

Where AI actually helps deterrence (and where it doesn’t)

Answer first: AI strengthens deterrence by improving detection, prediction, and decision speed across multiple theaters—but it must be constrained to avoid brittle automation and escalation accidents.

A lot of AI talk in national security drifts into science fiction. The useful frame is simpler: AI is a force multiplier for analysts and operators under time pressure.

1) Threat monitoring: fusing sensors into a coherent story

Deterrence starts with seeing reality faster than your adversary can manipulate it. In a two-front scenario, AI supports:

- Multi-INT fusion: Correlating satellite imagery, maritime tracking, SIGINT-derived patterns, cyber telemetry, and open-source indicators.

- Change detection at scale: Flagging unusual activity around known sites (tunnel entrances, new construction, unusual logistics flows).

- Anomaly detection for deception: Spotting activity patterns that don’t match “normal” operations—useful when an adversary is trying to mask a breakout or test preparations.

The value here isn’t that AI “finds the nuke.” The value is that it triages attention, so scarce expert hours go to the right places.
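To make the triage idea concrete, here is a minimal sketch of how activity counts per site could be ranked by how unusual they are. It assumes each site has a short history of some daily activity measure; the function names (`robust_z`, `triage`), the site labels, and the 3.5 cutoff (a conventional threshold for the modified z-score) are illustrative choices, not a description of any fielded system.

```python
from statistics import median


def robust_z(history: list[float], latest: float) -> float:
    """Modified z-score: distance of `latest` from the median, in MAD units.

    Median and MAD are used instead of mean and standard deviation so a few
    past spikes do not mask a new one.
    """
    med = median(history)
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        # History is flat: any deviation at all is maximally unusual.
        return 0.0 if latest == med else float("inf")
    return 0.6745 * (latest - med) / mad


def triage(
    sites: dict[str, tuple[list[float], float]],
    threshold: float = 3.5,  # hypothetical cutoff; tune against real baselines
) -> list[tuple[str, float]]:
    """Return (site, score) pairs at or above the threshold, most unusual first."""
    scored = [(name, robust_z(hist, latest)) for name, (hist, latest) in sites.items()]
    flagged = [(name, z) for name, z in scored if abs(z) >= threshold]
    return sorted(flagged, key=lambda item: abs(item[1]), reverse=True)
```

The output is a ranked queue for human analysts, not a finding. A high score only says "look here first."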

2) Predictive analytics: early warning without pretending to predict the future

Good predictive analytics doesn’t claim certainty. It provides ranked risk and “what would have to be true” logic.

In nuclear monitoring, useful predictive outputs look like:

- Leading indicator dashboards (e.g., procurement signals, test-site reactivation patterns, unusual movement of specialized equipment)

- Scenario likelihood bands (high/medium/low) with explicit assumptions

- Decision-impact estimates (how a U.S. move changes adversary incentives in 24/72/168 hours)

This is especially valuable when the U.S. must choose between actions that have different cross-theater effects.
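One way to keep likelihood bands honest is to make it impossible to report a band without its assumptions. The sketch below is an assumption-laden illustration: the `ScenarioEstimate` class, the 0.2 and 0.5 cut points, and the wording of the output line are all hypothetical, and the probability is taken as an input from an analyst or model rather than computed here.

```python
from dataclasses import dataclass, field


@dataclass
class ScenarioEstimate:
    name: str
    probability: float  # supplied by an analyst or model, 0.0 to 1.0
    assumptions: list[str] = field(default_factory=list)

    def band(self, medium_at: float = 0.2, high_at: float = 0.5) -> str:
        """Map the probability to a coarse band (cut points are illustrative)."""
        if not 0.0 <= self.probability <= 1.0:
            raise ValueError("probability must be between 0 and 1")
        if self.probability >= high_at:
            return "high"
        if self.probability >= medium_at:
            return "medium"
        return "low"

    def brief_line(self) -> str:
        """Render one briefing line; refuse if no assumptions were recorded."""
        if not self.assumptions:
            raise ValueError("refusing to report a band without stated assumptions")
        return f"{self.name}: {self.band()} (assumes: {'; '.join(self.assumptions)})"
```

The design choice worth copying is the refusal in `brief_line`: a band that cannot name what would have to be true is not ready for a leadership brief.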

3) Decision support: compressing cycles without skipping judgment

The most underappreciated benefit of AI in deterrence is structured speed:

- Generating consistent brief formats across theaters

- Stress-testing response options against escalation models

- Surfacing second-order effects (for example, “surge BMD to Theater A increases vulnerability perception in Theater B”)

Done right, AI makes it harder for a fast-moving crisis to devolve into ad hoc decision-making.

Where AI does not belong: autonomous nuclear decision-making

There’s a clean line worth drawing: AI should not be the final authority for nuclear use decisions.

In high-stakes escalation, models can be brittle, data can be manipulated, and “optimal” recommendations can ignore politics and human intent. The right approach is human-led, AI-assisted decision-making with rigorous auditing, red-teaming, and fail-safes.

The operational playbook: AI-driven deterrence in two theaters

Answer first: The U.S. needs an AI-enabled deterrence stack that’s built for concurrency—shared indicators, allied data sharing, and theater-specific response options that don’t cannibalize each other.

Here’s what that looks like in practice.

Build a shared “dual-theater warning picture”

Most organizations still maintain regional dashboards that don’t talk to each other. In a two-front nuclear environment, that’s a structural vulnerability.

A better model:

- Common indicator library: A standardized set of nuclear and missile indicators used across combatant commands and key agencies.

- Cross-theater correlation rules: Automated prompts like, “If Theater A escalates to X, increase watch posture for Theater B indicators Y and Z.”

- Priority arbitration: Predefined logic for which collections and assets surge first—and which stay in reserve.
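The correlation rules and priority arbitration described above can be sketched as plain data plus two small functions. Everything named here (`CorrelationRule`, `watch_prompts`, `arbitrate`, the integer escalation levels and priorities) is hypothetical and chosen for illustration; real rules would come from planners, not from code defaults.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CorrelationRule:
    trigger_theater: str
    trigger_level: int  # rule fires at or above this escalation level
    watch_theater: str
    watch_indicators: tuple[str, ...]


def watch_prompts(
    levels: dict[str, int], rules: list[CorrelationRule]
) -> dict[str, set[str]]:
    """Return, per theater, the indicators that deserve a raised watch posture."""
    prompts: dict[str, set[str]] = {}
    for rule in rules:
        if levels.get(rule.trigger_theater, 0) >= rule.trigger_level:
            prompts.setdefault(rule.watch_theater, set()).update(rule.watch_indicators)
    return prompts


def arbitrate(
    requests: list[tuple[str, str, int]], reserve: set[str]
) -> dict[str, str]:
    """Assign each asset to the theater with the highest-priority request.

    `requests` holds (asset, theater, priority) with higher meaning more urgent.
    Assets in `reserve` are never assigned. On a tie, the earlier request wins.
    """
    best_priority: dict[str, int] = {}
    assignment: dict[str, str] = {}
    for asset, theater, priority in requests:
        if asset in reserve:
            continue
        if asset not in best_priority or priority > best_priority[asset]:
            best_priority[asset] = priority
            assignment[asset] = theater
    return assignment
```

Keeping the rules as data rather than buried logic means they can be reviewed, versioned, and exercised in peacetime instead of being improvised during a crisis.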

Treat allied intelligence sharing as a product, not a meeting

The source article emphasizes the need for close coordination with allies. The operational reality is that coordination fails when it’s personality-driven.

AI helps if it’s used to create:

- Shared analytic workspaces with consistent labeling

- Rapid translation and summarization across partner languages

- Confidence scoring and provenance tracking (who collected what, when, and under what assumptions)

This isn’t glamorous, but it’s how you avoid “parallel realities” across Washington, Seoul, Tokyo, Jerusalem, and European capitals.
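As a rough illustration of confidence scoring with provenance, the sketch below attaches collector, time, and assumptions to every shared report and then combines confidence across collectors. The `SharedReport` fields and the noisy-OR combination are assumptions made for this example; noisy-OR treats collectors as independent, which real intelligence sources often are not.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SharedReport:
    claim: str
    collected_by: str       # who collected it
    collected_at: datetime  # when
    confidence: float       # collector's own estimate, 0.0 to 1.0
    assumptions: tuple[str, ...] = ()  # under what assumptions


def combined_confidence(reports: list[SharedReport]) -> float:
    """Combine confidence across collectors with a noisy-OR.

    Multiple reports from the same collector count once (the strongest one),
    so a single source repeating itself cannot inflate the score.
    """
    best_per_source: dict[str, float] = {}
    for report in reports:
        current = best_per_source.get(report.collected_by, 0.0)
        best_per_source[report.collected_by] = max(current, report.confidence)

    chance_all_wrong = 1.0
    for confidence in best_per_source.values():
        chance_all_wrong *= 1.0 - confidence
    return 1.0 - chance_all_wrong
```

Deduplicating by collector is the part that matters most in an allied setting, where the same underlying report can arrive through several capitals and look like corroboration.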

Hardening the AI layer against cyber manipulation

Any AI-enabled deterrence system becomes a target. If adversaries can poison data, spoof signatures, or flood collection channels, they can induce hesitation or provoke overreaction.

Minimum safeguards that should be non-negotiable:

- Model and data provenance controls (tamper-evident logs)

- Red-team adversarial testing focused on spoofing and deception

- Fallback modes that degrade gracefully to traditional workflows

- Segmentation so a compromise in one theater doesn’t contaminate the other

If you can’t defend the AI pipeline, you don’t have decision advantage—you have a new attack surface.
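A tamper-evident log is one of the easier safeguards to picture. The sketch below uses a simple hash chain built on Python's standard `hashlib` and `json` modules: each entry commits to the one before it, so editing an old record breaks every later hash. The entry layout and function names are hypothetical, and this is a teaching sketch rather than a hardened design.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with a canonical JSON payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], payload: dict) -> None:
    """Append a record that is chained to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev_hash, "hash": _digest(prev_hash, payload)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain from the start; any edited entry fails the check."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this only detects tampering if the latest hash is also stored somewhere the attacker cannot rewrite. Otherwise an intruder with write access can simply rebuild the whole chain.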

Practical questions leaders should ask right now

Answer first: If your deterrence plan can’t answer these questions crisply, you’re not ready for a two-front nuclear crisis.

Use this as a quick self-audit for defense organizations, national security teams, and industry partners supporting them:

- What are our top 20 indicators of imminent nuclear or missile escalation in each theater—and which are shared?

- What’s our maximum acceptable latency from collection to analyst review to leadership brief?

- Which assets are “dual-theater critical,” and what’s the pre-approved prioritization scheme?

- How do we validate AI outputs under deception? What’s the human verification workflow?

- What’s our allied data-sharing posture on day one of crisis, not day ten?

- What’s the cyber plan to protect models, data pipelines, and analytic workspaces during peak demand?

These aren’t academic. They’re the difference between controlled escalation and reactive scrambling.
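The latency question in particular lends itself to measurement. As a small sketch, assuming each report carries timestamps for a few named stages, the code below computes the time spent on each hop and flags hops that exceed a budget. The stage names, the budget values, and both function names are made up for illustration.

```python
from datetime import datetime, timedelta


def stage_latencies(
    timestamps: dict[str, datetime], stages: list[str]
) -> dict[str, timedelta]:
    """Time elapsed between each consecutive pair of stages, in order."""
    return {
        f"{earlier}->{later}": timestamps[later] - timestamps[earlier]
        for earlier, later in zip(stages, stages[1:])
    }


def breaches(
    latencies: dict[str, timedelta], budget: dict[str, timedelta]
) -> list[str]:
    """Hops that took longer than their budget; hops with no budget never breach."""
    return [
        hop
        for hop, elapsed in latencies.items()
        if elapsed > budget.get(hop, timedelta.max)
    ]


# Example with hypothetical stages and budgets.
start = datetime(2025, 1, 1, 0, 0)
observed = {
    "collected": start,
    "reviewed": start + timedelta(minutes=20),
    "briefed": start + timedelta(minutes=90),
}
hops = stage_latencies(observed, ["collected", "reviewed", "briefed"])
limits = {
    "collected->reviewed": timedelta(minutes=30),
    "reviewed->briefed": timedelta(minutes=60),
}
late = breaches(hops, limits)  # the review-to-brief hop took 70 minutes
```

Tracking this per theater during exercises gives leaders a real number to hold against their stated maximum, instead of an impression.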

What to do next: turning AI into real deterrence capacity

Two-front nuclear deterrence is a concurrency problem. AI is the best tool we have for concurrency at scale, but only if it’s engineered for trust, resilience, and allied use—not just for flashy demos.

If you’re building or buying AI for defense and national security, aim for systems that:

- prioritize early warning and triage over “perfect prediction,”

- are designed for multi-theater decision support,

- can operate securely under cyber pressure and deception, and

- integrate allies from the start rather than bolting them on later.

The next nuclear crisis may not arrive politely in sequence—one theater at a time. If Iran and North Korea continue on trajectories that compress warning time, U.S. deterrence will be tested on a simple question: can we see clearly and act coherently in two places at once?