AI fire control aims to cut cognitive load and speed decisions in artillery and air defense. Here’s what’s realistic, what’s hard, and what to build next.

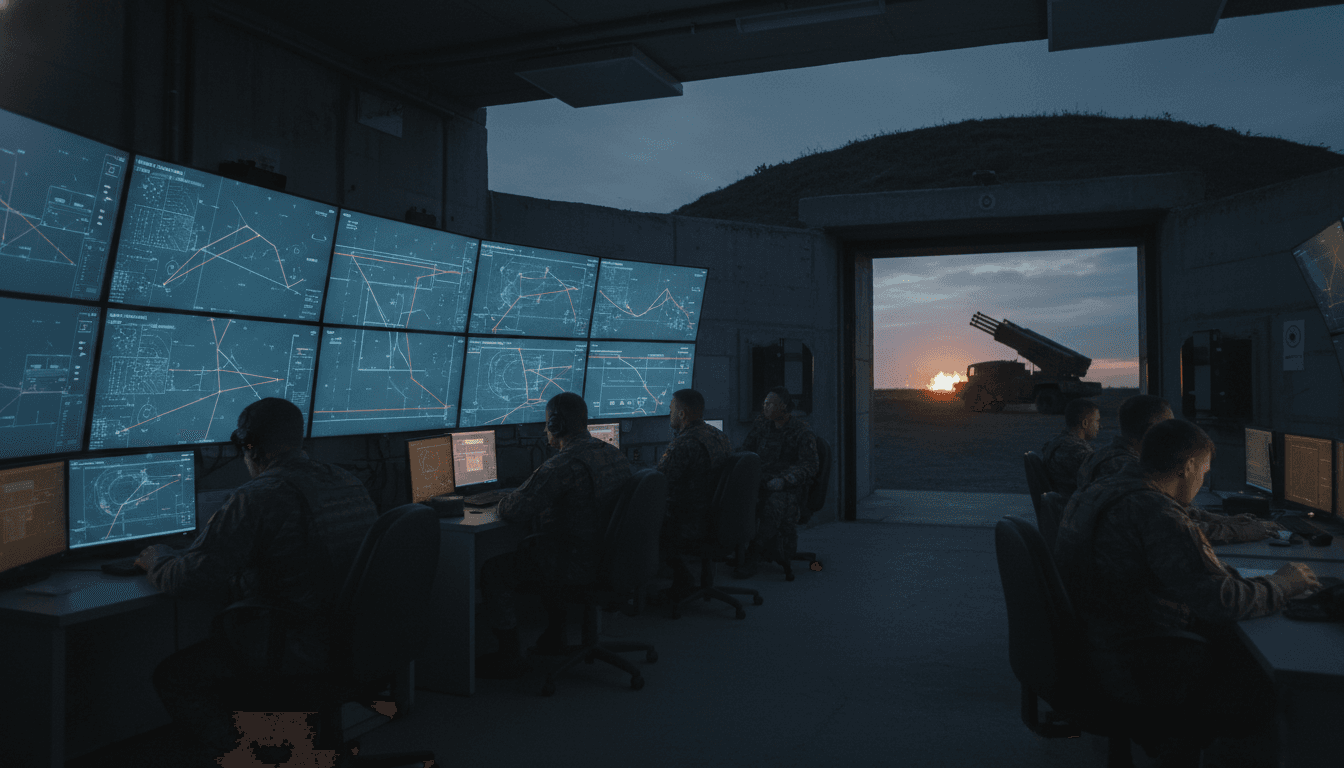

AI Fire Control: The Army’s Plan for Leaner Crews

Artillery and air defense don’t have a “slow day” problem—they have a seconds-matter problem.

When threats arrive en masse (drones, rockets, cruise missiles, loitering munitions), the bottleneck is rarely the missile itself. It's the human capacity to sense, interpret, decide, and coordinate fast enough, across enough tracks, while staying resilient under jamming and information overload.

That’s why the Army’s interest in using AI to help man artillery and air defense units isn’t a tech curiosity. It’s a staffing and survivability reality: fewer soldiers available, more targets in the air, and a battlespace that’s too data-dense for manual workflows. This post sits in our AI in Defense & National Security series because it shows where AI helps most—and where it still falls short.

Why the Army wants AI in artillery and air defense

The direct answer: the Army wants AI to reduce cognitive load and speed up fire control decisions when threats are “massed” and multi-axis. Senior leaders have been explicit that the goal isn’t just automation for its own sake; it’s maintaining tempo when the fight throws more data at you than a human team can process.

At the AUSA annual meeting, Maj. Gen. Frank Lozano described a future in which AI can help map threats, process large volumes of sensor data, and support AI-enabled fire control with minimal manning in engagement operations centers. That's a blunt admission of what's coming: defending critical assets and maneuver forces will require human-machine teams that can act faster than a human-only loop.

The operational pain point: “too many tracks, too little time”

Air and missile defense is increasingly a classification and prioritization problem at scale. Operators must constantly answer:

- Which tracks are real threats vs. decoys?

- Which threats are most dangerous right now?

- What interceptor, effector, or fires option is appropriate?

- What’s the best geometry, timing, and deconfliction plan?

AI can help by triaging, predicting, and recommending, especially when threats arrive in swarms or layered salvos.

The staffing pain point: keeping crews ready is hard

Even before you add AI, “manning” fire control and operations centers is expensive in human terms:

- Training pipelines are long.

- 24/7 coverage burns people out.

- Talent retention competes with the private sector.

If AI can safely automate portions of monitoring and correlation, it can reduce the number of eyes required on glass at all times—without pretending humans should disappear.

What “AI-enabled fire control” should actually mean

Here’s the thing about AI in national security: the phrase is cheap; the implementation is not.

AI-enabled fire control should mean an AI system that improves the speed and quality of targeting decisions while staying auditable, bounded, and operator-controllable. If it can’t explain why it recommended an action—or can’t operate under degraded comms—then it’s not ready for the mission.

From “sensor data” to “actionable decisions”

To be useful in artillery and air defense, AI needs to sit across a pipeline:

- Ingest: radar tracks, EO/IR feeds, SIGINT cues, friendly force locations, airspace constraints, weather, terrain.

- Fuse: correlate detections into coherent tracks; reduce duplicates and false positives.

- Assess: classify object type; estimate intent; predict future position and impact area.

- Prioritize: rank threats by risk-to-force/asset and time-to-impact.

- Recommend: propose intercept or fires options, with confidence and constraints.

- Deconflict: ensure safe fires, airspace control, and fratricide avoidance.

- Learn: update based on post-engagement truth and operator feedback.

Most AI demos focus on fusing, assessing, and prioritizing. The real warfighting value shows up when recommendation and deconfliction are reliable under stress.
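The Fuse and Prioritize stages of the pipeline above can be sketched in a few lines. This is a minimal sketch assuming 2-D positions in kilometers, a known closing speed per detection, a fixed correlation gate, and a single defended asset at the origin. The names `Detection`, `Track`, `fuse`, `time_to_impact_s`, and `prioritize`, and the 0.5 km gate, are hypothetical illustrations, not any fielded system's logic.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Detection:
    sensor: str
    x_km: float
    y_km: float
    closing_kms: float  # assumed: closing speed toward the asset, km/s


@dataclass
class Track:
    x_km: float
    y_km: float
    closing_kms: float
    sources: list = field(default_factory=list)


def fuse(detections, gate_km=0.5):
    """Greedy association: a detection within gate_km of an existing track
    is treated as a duplicate report and only adds its sensor to that track."""
    tracks = []
    for d in detections:
        for t in tracks:
            if math.hypot(d.x_km - t.x_km, d.y_km - t.y_km) <= gate_km:
                t.sources.append(d.sensor)
                break
        else:  # no track within the gate: start a new one
            tracks.append(Track(d.x_km, d.y_km, d.closing_kms, [d.sensor]))
    return tracks


def time_to_impact_s(track, asset=(0.0, 0.0)):
    """Straight-line range to the asset divided by closing speed."""
    if track.closing_kms <= 0:
        return math.inf  # not closing: no predicted impact
    rng = math.hypot(track.x_km - asset[0], track.y_km - asset[1])
    return rng / track.closing_kms


def prioritize(tracks, asset=(0.0, 0.0)):
    """Most urgent first. Ranks on time-to-impact only; a fuller scheme
    would also weight risk to the specific asset."""
    return sorted(tracks, key=lambda t: time_to_impact_s(t, asset))
```

Greedy gating is the simplest association rule; operational trackers generally use statistical gating and global assignment to cope with crossing tracks and clutter.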

A critical distinction: language models aren’t enough

The caution from Army leadership is justified: language models aren't designed for spatial reasoning, real-time situational awareness, or safety-critical control. They can help with:

- Summarizing situation reports

- Drafting commander’s updates

- Translating between formats and systems

- Explaining doctrine or checklists

But fire control depends on geometry, timing, uncertainty, and adversarial deception. That typically points to a hybrid stack: classical algorithms + probabilistic models + deep learning for perception, with careful human-machine interface design.
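As an example of the classical, deterministic geometry underneath fire control, here is the textbook closest-point-of-approach (CPA) calculation for a track moving at constant velocity relative to a defended point. It's a minimal sketch assuming 2-D coordinates and straight-line motion; real trackers propagate uncertainty (for example, with Kalman filters) rather than a single point estimate. The function name is illustrative.

```python
import math


def closest_point_of_approach(pos, vel, asset=(0.0, 0.0)):
    """Return (t_cpa, miss_distance) for constant-velocity motion.

    pos, vel, and asset are (x, y) tuples in consistent units (e.g. km, km/s).
    t_cpa is clamped at 0: a track already moving away has its CPA now.
    """
    px, py = pos[0] - asset[0], pos[1] - asset[1]  # position relative to asset
    vx, vy = vel
    v2 = vx * vx + vy * vy
    if v2 == 0.0:
        # Stationary track: the current range is the miss distance.
        return 0.0, math.hypot(px, py)
    # Minimize |p + v t|^2 over t >= 0: t* = -(p . v) / (v . v)
    t = max(0.0, -(px * vx + py * vy) / v2)
    return t, math.hypot(px + vx * t, py + vy * t)
```

A track whose predicted miss distance falls inside an asset's defended radius is an engagement candidate; one that is opening (t_cpa of 0 and growing range) is not.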

The hard part: trust, control, and “human in the loop” in real operations

The direct answer: the Army’s AI challenge isn’t just model accuracy; it’s operator trust under time pressure. When AI is doing the watching, humans can lose context. Then, when it’s time to decide, they’re asked to sign off on recommendations they didn’t personally build.

Lozano called out this tension directly: if you rely on AI for ongoing surveillance, how do you get human decision-makers up to speed quickly enough when it counts?

Don’t argue about slogans; specify the control model

“Human in the loop/out of the loop/on the loop” turns into a religious debate unless you define the decision boundary.

A practical approach in air defense looks like:

- AI auto-triage: AI sorts tracks and flags anomalies continuously.

- AI recommends: AI proposes intercept windows, effector selections, and engagement sequences.

- Human authorizes: humans approve engagement or set pre-authorized rules under defined conditions.

- AI executes within constraints: the system runs the engagement plan inside strict rules of engagement, geofencing, and safety constraints.

This is less about philosophical purity and more about engineering the workflow so humans stay current.
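One way to specify the control model is to encode the workflow above as explicit authority levels that the software checks before any engagement proceeds. The sketch below is hypothetical: `Authority`, `may_execute`, and the specific inputs (a human approval, a standing pre-authorization, a geofence check, an ROE check) are illustrative assumptions, not a description of any Army system.

```python
from enum import IntEnum


class Authority(IntEnum):
    TRIAGE = 1                      # AI sorts tracks and flags anomalies only
    RECOMMEND = 2                   # AI proposes; nothing proceeds without a human
    EXECUTE_WITHIN_CONSTRAINTS = 3  # AI may act under pre-authorized rules


def may_execute(level, human_approved, pre_authorized, inside_geofence, roe_satisfied):
    """Return True only if a system-initiated engagement may proceed.

    Hard constraints (geofence, ROE) are checked first and apply at every
    level; no authority level overrides them.
    """
    if not (inside_geofence and roe_satisfied):
        return False
    if level == Authority.TRIAGE:
        return False  # at this level the system never initiates engagements
    if level == Authority.RECOMMEND:
        return human_approved
    # EXECUTE_WITHIN_CONSTRAINTS: explicit approval or a standing pre-authorization
    return human_approved or pre_authorized
```

Putting the hard constraints ahead of the authority check means that raising the permission level can only widen who may approve, never relax the safety envelope.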

The trust equation: explanation beats confidence scores

A confidence score alone doesn’t help an operator at 2 a.m. during a saturation raid.

Operators trust systems that:

- Show the top 3 reasons behind a recommendation (e.g., trajectory, emitter signature, speed/altitude profile)

- Show what would change the recommendation (e.g., if track speed drops below X, classify as UAV)

- Show constraints (e.g., intercept not possible due to deconfliction window)

- Support fast “sanity checks” (a quick view of raw sensor evidence)

If you want AI adoption, invest as much in the interface and explanation layer as you do in the model.
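The four properties above translate naturally into a data contract between the model and the operator display. Here is a minimal sketch of such a payload; the `Recommendation` class, its field names, and the example values are hypothetical illustrations, not a fielded interface.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    track_id: str
    action: str
    confidence: float                                     # 0.0 to 1.0
    reasons: list = field(default_factory=list)          # (weight, text) pairs
    would_change_if: list = field(default_factory=list)  # counterfactual triggers
    constraints: list = field(default_factory=list)      # blockers, e.g. deconfliction
    evidence_refs: list = field(default_factory=list)    # pointers to raw sensor data

    def top_reasons(self, n=3):
        """Highest-weight reasons first, capped at n for a glanceable display."""
        ranked = sorted(self.reasons, key=lambda r: r[0], reverse=True)
        return [text for _, text in ranked[:n]]

    def summary(self):
        """Compact text an operator can read in a few seconds."""
        lines = [f"{self.track_id}: {self.action} ({self.confidence:.0%})"]
        lines += [f"  because: {r}" for r in self.top_reasons()]
        lines += [f"  changes if: {c}" for c in self.would_change_if]
        lines += [f"  blocked by: {c}" for c in self.constraints]
        return "\n".join(lines)
```

The confidence score is still there, but it is one line among reasons, triggers, and blockers rather than the whole message.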

What “minimal manning” could look like—without compromising safety

The direct answer: minimal manning should mean fewer people doing repetitive correlation work, not fewer people accountable for lethal decisions. That’s a line worth defending.

In practice, leaner crews work when AI takes over:

- Track correlation and duplicate cleanup

- Routine air picture maintenance

- Alerting and anomaly detection

- Drafting engagement recommendations

- Post-engagement logging and reporting

And humans retain:

- Rules of engagement interpretation

- High-stakes approvals and overrides

- Cross-domain coordination (airspace, cyber, EW, coalition partners)

- Risk decisions when information is incomplete

A realistic near-term deployment pattern (12–24 months)

If you’re advising a program office or an industry team, this is the path that usually succeeds:

- Decision support first: deploy AI as advisory, not authoritative.

- Measure the right outcomes: time-to-identify, time-to-recommend, false-alarm rate, operator workload, and deconfliction errors.

- Run in parallel: AI watches while humans do the standard process; compare outputs.

- Graduate permissions: expand from “recommend” to “execute under constraints” only after performance is stable.

This is how you earn operational confidence instead of trying to mandate it.
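The "run in parallel" and "graduate permissions" steps can be made mechanical. Below is a minimal sketch that scores a shadow-mode run against the crew's decisions and post-exercise ground truth, then applies a promotion gate that requires several consecutive passing runs. It covers only a subset of the outcomes listed above (false alarms, detection, time saved), and the `ShadowRecord` layout, function names, and every threshold are hypothetical assumptions; a real program would set them through its own test and evaluation process.

```python
from dataclasses import dataclass


@dataclass
class ShadowRecord:
    ai_flagged_threat: bool
    human_flagged_threat: bool
    truth_is_threat: bool   # from post-exercise reconstruction
    ai_latency_s: float
    human_latency_s: float


def score(records):
    """Crew agreement, AI false-alarm rate, AI detection rate, mean time saved."""
    n = len(records)
    agree = sum(r.ai_flagged_threat == r.human_flagged_threat for r in records) / n
    non_threats = [r for r in records if not r.truth_is_threat]
    threats = [r for r in records if r.truth_is_threat]
    far = sum(r.ai_flagged_threat for r in non_threats) / max(len(non_threats), 1)
    det = sum(r.ai_flagged_threat for r in threats) / max(len(threats), 1)
    saved = sum(r.human_latency_s - r.ai_latency_s for r in records) / n
    return {"agreement": agree, "false_alarm_rate": far,
            "detection_rate": det, "mean_time_saved_s": saved}


def ready_to_graduate(history, min_runs=5, max_far=0.05, min_det=0.95):
    """Promote only if each of the last min_runs scored runs met the bar."""
    recent = history[-min_runs:]
    return len(recent) == min_runs and all(
        m["false_alarm_rate"] <= max_far and m["detection_rate"] >= min_det
        for m in recent
    )
```

Requiring a streak of passing runs, rather than a single good exercise, is one simple way to operationalize "only after performance is stable."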

Why this ties directly to national security modernization

This push is part of a bigger pattern across the AI in Defense & National Security landscape:

- AI for surveillance and sensor fusion to handle scale

- AI for mission planning to compress the kill chain

- AI for cybersecurity to detect anomalies and defend networks that carry targeting data

- AI for autonomy to keep operating when comms are contested

Artillery and air defense sit at the intersection of all four.

What industry teams should build (and what to stop pitching)

The direct answer: the Army is asking industry to close a capability gap between what’s imaginable and what’s fieldable—especially under contested conditions. That means vendors win by being boring in the right ways: reliable, measurable, testable.

Build for contested, messy reality

If your AI concept requires pristine data, uninterrupted cloud access, and a perfect network, it will fail at the first field exercise.

Industry solutions should assume:

- Sensor dropouts and spoofing attempts

- Data latency and partial observability

- Cyber risk on tactical networks

- Coalition interoperability constraints

Focus on measurable performance, not “AI-ness”

I’ve found procurement conversations get sharper when teams lead with operational metrics. Examples that matter:

- Reduce track-to-threat classification time from minutes to seconds (measured in exercises)

- Cut false alarms by X% while maintaining detection probability

- Reduce operator workload: fewer manual clicks/actions per engagement

- Improve deconfliction success rate: fewer engagement plan conflicts flagged late

If you can’t propose a test plan, you’re not selling a capability—you’re selling hope.

Stop pitching black boxes for lethal decisions

The fastest way to lose credibility is to suggest AI should “just decide.” Commanders and program executives don’t need more pressure; they need systems that make human judgment more informed and faster.

Position AI as:

- A watch officer that never blinks

- A fusion analyst that never gets tired

- A planner that can simulate hundreds of options quickly

That framing fits both mission reality and responsible deployment.

Practical Q&A: what leaders ask about AI in air defense

Will AI replace air defense operators?

No. AI will replace the most repetitive parts of the workflow—and that’s a good thing. The accountability for engagements, escalation control, and rule interpretation stays human.

What data does AI need to be effective?

At minimum: time-synced sensor tracks, ground truth labels from exercises/engagements, environmental context (terrain/weather), and friendly-force data for deconfliction. The hard part is not collecting data; it’s governing it and keeping it usable.

What’s the biggest technical risk?

Adversarial deception and out-of-distribution behavior. The enemy will intentionally present “new” patterns. Systems need detection of novelty and a safe fallback mode, not blind confidence.
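A minimal sketch of novelty detection with a safe fallback, assuming each track is summarized as a numeric feature vector (say, speed and altitude) and that per-feature mean and standard deviation from training data are available. It uses a simple per-feature z-score test, a deliberately basic stand-in for stronger out-of-distribution detectors; the function names, features, and the threshold of 4.0 are hypothetical.

```python
import math


def novelty_score(features, train_mean, train_std):
    """Largest absolute z-score across features (higher = less familiar)."""
    return max(
        abs(f - m) / s if s > 0 else (0.0 if f == m else math.inf)
        for f, m, s in zip(features, train_mean, train_std)
    )


def route(features, train_mean, train_std, classify, threshold=4.0):
    """Use the model only on familiar inputs; otherwise hand off to a human.

    classify is any callable returning a label; it is never called on
    inputs flagged as novel.
    """
    z = novelty_score(features, train_mean, train_std)
    if z > threshold:
        return {"label": None, "mode": "fallback_to_operator", "novelty": z}
    return {"label": classify(features), "mode": "model", "novelty": z}
```

The key design choice is that a novel input produces no label at all rather than a low-confidence one, so the operator sees an explicit handoff instead of a guess.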

Where this goes next

AI for artillery and air defense is headed toward a clear operational goal: faster targeting decisions with fewer people in the room—without losing human control. The Army is right to want this, and also right to admit the technology isn’t fully there yet.

If you’re working in defense tech, the opportunity is straightforward and demanding: build AI that performs under stress, explains itself quickly, and plugs into real fire control and battle management workflows. If you’re a leader responsible for modernization, push for programs that measure cognitive load reduction and decision quality—not just model accuracy.

The open question for 2026 isn’t whether AI will show up in air defense operations centers. It’s whether it will arrive as trusted decision support—or as yet another dashboard that operators learn to ignore when the sky fills up.