PacFleet is pushing AI-enabled speed in the WEZ. See how AI reshapes CONOPS, C2, and readiness—and what to require for fieldable, secure autonomy.

AI-Enabled PacFleet: Faster Concepts, Faster Readiness

Speed is now an operational requirement, not a nice-to-have. When Adm. Steve Koehler says Pacific Fleet is “building the airplane while we fly it,” he’s describing a deliberate shift: concepts, capabilities, and training are being developed at the same time, because the Indo-Pacific threat environment won’t wait for a traditional acquisition cycle.

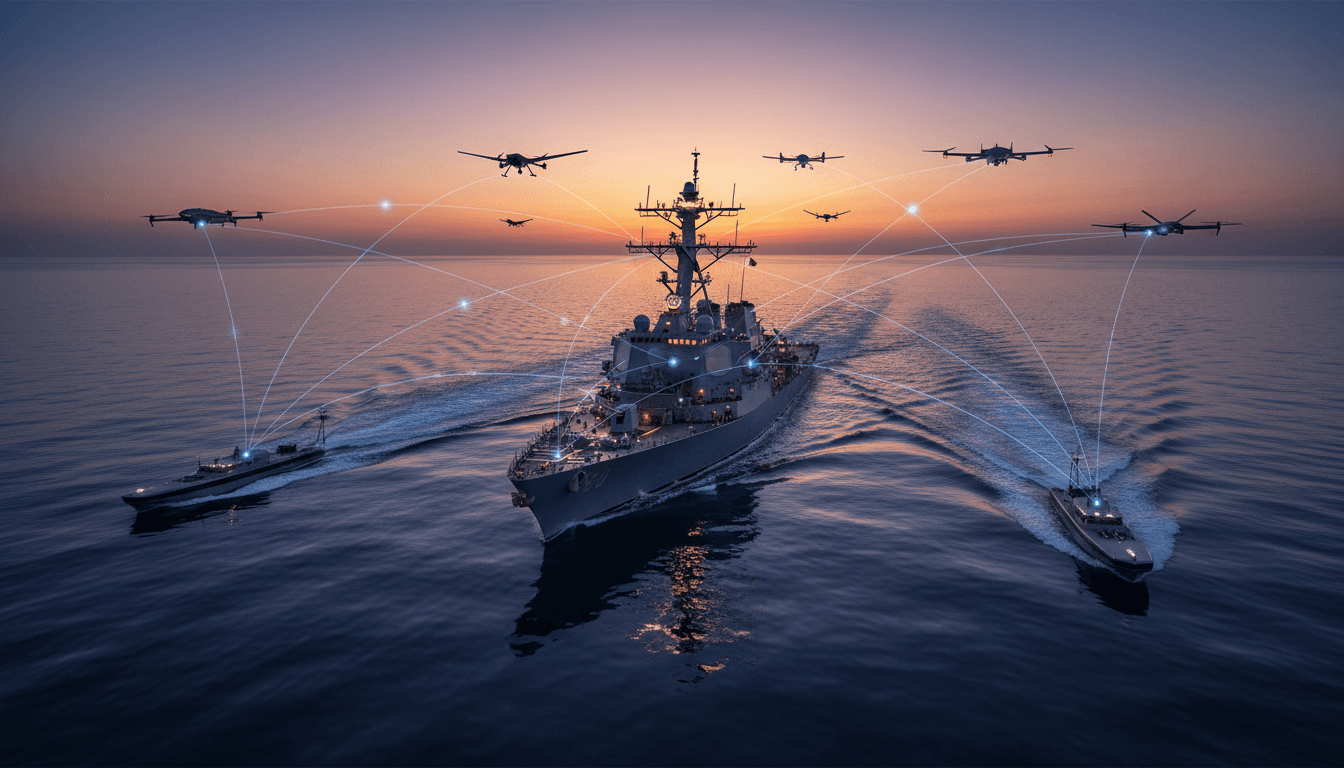

For anyone working in AI in defense & national security, PacFleet’s message is unusually clear: AI isn’t just an analytics tool—it’s a way to compress the time between sensing, deciding, and acting, while keeping ships and unmanned systems ready inside the weapons engagement zone (WEZ). The Fleet is already using AI for data analysis, and the next step is broader: AI to strengthen command and control, accelerate maneuver-and-fires cycles, and support operations from competition through conflict.

What follows is the practical part: what “move fast” actually means for fleets, what AI changes (and what it doesn’t), and the non-obvious requirements—data, authority, security, and “right to repair”—that determine whether this approach works.

Why PacFleet is racing: the WEZ punishes slow organizations

PacFleet is rushing because contested environments penalize delayed decisions and fragile logistics. In the WEZ, adversaries can contest comms, target replenishment, and apply pressure across cyber, space, and maritime domains at once. If a force needs weeks to field a software update, retask an unmanned system, or integrate a new sensor workflow, it’s already behind.

Koehler’s framing matters: he’s not asking for experimentation as a side project. He’s describing experimentation paired with rehearsal—meaning exercises aren’t only “validation”; they’re used to invent and refine new concepts of operation (CONOPS) in near-real time.

This is a core theme in the AI in Defense & National Security series: AI value shows up when it’s embedded into operational loops, not when it’s parked in a lab environment.

“Embracing the red” is a modern AI requirement

Koehler’s call to “embrace the red” (Navy-speak for surfacing problems early and fixing them fast) maps directly to how successful AI programs run:

- AI models fail in predictable ways under distribution shifts (new environments, adversary tactics, degraded sensors).

- Operational friction (permissions, network segmentation, export controls, certification timelines) becomes the real blocker.

- The fastest teams treat failure as test data, not as a PR risk.

Most defense organizations still manage AI like a one-time delivery. PacFleet is signaling a different stance: continuous iteration, continuous learning, and continuous fielding.

AI’s real job at sea: compress the decision cycle without losing control

AI’s highest payoff for a maritime force is decision advantage: turning more data into better actions faster than the adversary. Koehler noted that the Fleet already uses AI for analysis, and described a future in which AI helps commanders and sailors “balance the art and science of warfare” to drive superior outcomes.

That’s not a science fiction promise. It’s a practical focus on three things:

- Command and control augmentation (not replacement)

- Faster action between maneuver and fires

- Operating under degraded communications

Where AI is already useful (and relatively low drama)

The easiest wins tend to be “assistive” AI that reduces staff workload and improves consistency:

- Multi-source data triage and prioritization (what matters right now)

- Pattern spotting across ISR feeds, track data, maintenance logs, and cyber alerts

- Automated summarization for watch teams and commanders

- Course-of-action comparison tools that expose tradeoffs (time, risk, fuel, exposure)

These uses matter because maritime C2 can drown in information. The problem isn’t lack of data—it’s too much data with uneven trust and varying latency.
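To make “uneven trust and varying latency” concrete, here is a minimal Python sketch of a triage score that discounts each report by a source-trust weight and by its age. The `Report` fields, the `SOURCE_TRUST` weights, and the exponential half-life decay are illustrative assumptions, not a fielded scoring scheme.

```python
from dataclasses import dataclass

@dataclass
class Report:
    source: str      # hypothetical source label, e.g. "isr", "track", "cyber"
    urgency: float   # rule- or analyst-assigned urgency, 0.0 to 1.0
    age_s: float     # seconds since the observation was made

# Assumed trust weights per source type; a real system would calibrate these.
SOURCE_TRUST = {"isr": 0.9, "track": 0.8, "cyber": 0.6}

def triage_score(r: Report, half_life_s: float = 600.0) -> float:
    """Urgency discounted by source trust and by staleness (halves every half_life_s)."""
    trust = SOURCE_TRUST.get(r.source, 0.3)  # unknown sources get low trust
    freshness = 0.5 ** (r.age_s / half_life_s)
    return r.urgency * trust * freshness

def prioritize(reports: list[Report]) -> list[Report]:
    """Return reports ordered from most to least pressing."""
    return sorted(reports, key=triage_score, reverse=True)
```

With these assumed weights, a fresh, moderately urgent ISR report outranks a very urgent track report that is 30 minutes old, which is the kind of tradeoff a watch team would want made explicit and tunable.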

The hard part: AI for contested operations

The contested version of AI is different from the enterprise version. It must work when GPS is degraded, networks are jammed, sensors are spoofed, and adversaries are actively shaping what you see.

If you’re building AI for operational fleets, expect these non-negotiables:

- Edge execution: models must run locally when cloud reach-back is unavailable.

- Graceful degradation: when confidence drops, the system must fall back to simpler rules or human-controlled modes.

- Provenance and explainability-by-design: not a thesis, just enough clarity for operators to trust outputs under pressure.

- Adversarial resilience: detection of spoofing, anomalies, and “too-good-to-be-true” patterns.

Here’s the stance I’ve found works: treat AI as a junior watchstander—fast, tireless, and fallible. You still need a human chain of command that owns the decision.
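Graceful degradation can start as an explicit mode selector. The Python sketch below assumes the model exposes a calibrated confidence score and that a separate anomaly detector flags suspect inputs; the `Mode` names and both thresholds are hypothetical and would come from testing in practice.

```python
from enum import Enum

class Mode(Enum):
    MODEL = "model"   # act on the learned model's recommendation
    RULES = "rules"   # fall back to a simpler, pre-approved rule set
    HUMAN = "human"   # hand the decision to the operator

def select_mode(confidence: float, anomaly_flag: bool,
                model_floor: float = 0.8, rules_floor: float = 0.5) -> Mode:
    """Pick an operating mode from model confidence and an input-anomaly check."""
    if anomaly_flag:
        # Suspected spoofing or out-of-distribution input: do not trust automation.
        return Mode.HUMAN
    if confidence >= model_floor:
        return Mode.MODEL
    if confidence >= rules_floor:
        return Mode.RULES
    return Mode.HUMAN
```

Routing anomalous input straight to a human, even when confidence is high, reflects the adversarial-resilience point: a spoofed picture can produce confident but wrong outputs.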

Rapid acquisition plus rapid learning: the only way to keep up

PacFleet’s “rapid acquisition strategy” is really a rapid learning strategy. Buying gear faster helps, but the real acceleration happens when the Fleet can:

- test capabilities in exercises,

- collect performance data,

- revise CONOPS,

- update software and tactics,

- and then repeat—quickly.

That loop is how you turn “cool prototypes” into dependable operational advantage.

What The Forge-style thinking changes

Koehler pointed to Indo-Pacific Command’s expeditionary foundry concept (often discussed in the context of forward production and adaptation). The strategic point is bigger than 3D printing: forward forces need the ability to adapt without waiting for a perfect supply chain or contractor availability.

For AI, that implies:

- Deployable toolchains (MLOps in disconnected settings)

- Approved model update processes (so updates don’t take months)

- A “battle rhythm” for model performance checks (drift, bias, false alarms)

If the model can’t be updated and verified fast, it will age out in the field.
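A routine drift check can be small enough to run as part of a battle rhythm. This Python sketch uses the population stability index (PSI), one common drift statistic among several, chosen here only as an illustration; the 0.1 and 0.25 bands are a widely used rule of thumb rather than a standard, and the function names are hypothetical.

```python
import bisect
import math

def bin_shares(values, edges, eps=1e-6):
    """Fraction of values in each bin [edges[i], edges[i+1]); the last bin also includes edges[-1]."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        if v == edges[-1]:
            i = len(counts) - 1        # close the final bin on the right
        if 0 <= i < len(counts):       # values outside the edges are ignored
            counts[i] += 1
    total = max(sum(counts), 1)
    # eps keeps empty bins from causing log(0) or division by zero
    return [max(c / total, eps) for c in counts]

def population_stability_index(baseline, field_sample, edges):
    """PSI = sum over bins of (a - e) * ln(a / e), comparing field data to the baseline."""
    e = bin_shares(baseline, edges)
    a = bin_shares(field_sample, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_status(psi):
    """Rule-of-thumb bands; a program would set its own thresholds from testing."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "review or retrain"
```

The baseline would be the data the model was verified on; the field sample would be recent inputs from the platform, so a rising PSI is an early signal that the model is aging out.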

“Right to repair” is also “right to reconfigure” (and it’s central to autonomy)

PacFleet is explicitly tying readiness to sailor authority. Koehler argued sailors need the confidence and authority to install new parts without waiting on contractors—and to reconfigure unmanned systems during a fight.

This is one of the most important, least-discussed requirements for AI-enabled fleets: who is allowed to change what, when, and how?

If your unmanned system requires a contractor laptop, a proprietary cable, and a special permission letter to change a payload profile, it’s not “operational.” It’s a demo.

What “right to repair” looks like in AI-enabled systems

In practical terms, it means programs must plan for:

- On-platform diagnostics that are usable by sailors, not just engineers

- Modular software and mission packages (swap capabilities without breaking certification)

- Documented interfaces and configuration control that supports rapid changes and accountability

- Training pipelines so crews can operate and maintain autonomy features safely

This is where industry often gets uncomfortable. But the reality is simple: operational resilience beats vendor convenience.

Operating concepts AI is reshaping (and what leaders should ask for)

AI changes CONOPS by changing what’s fast, what’s cheap, and what’s scalable. In maritime operations, that typically shows up as more distributed operations, more autonomous sensing, and tighter integration across partners.

Here are four CONOPS shifts that align with PacFleet’s direction:

1) Distributed maritime operations with AI-assisted coordination

Answer first: AI helps coordinate many nodes (ships, aircraft, unmanned systems) when humans can’t manually track every interaction.

The key is not “AI decides the battle.” The key is AI supporting:

- deconfliction,

- tasking suggestions,

- time-sensitive warnings,

- and resource-aware coordination.

Leaders should ask vendors: How does your system behave when comms drop for 30 minutes? What’s the local fallback plan?
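One concrete answer to “what’s the local fallback plan?” is a watchdog that notices when the C2 link has gone quiet and switches the node to a pre-briefed plan. This Python sketch is hypothetical: the class name, the two modes, and the single-heartbeat model are simplifications, and the 30-minute figure from the question above is used only as a default.

```python
import time

class CommsWatchdog:
    """Switches a node to a pre-briefed local plan when the C2 link goes silent."""

    def __init__(self, timeout_s: float = 30 * 60, clock=time.monotonic):
        self.timeout_s = timeout_s   # assumed silence threshold before falling back
        self.clock = clock           # injectable clock so behavior can be tested
        self.last_heartbeat = clock()

    def heartbeat(self) -> None:
        """Record that a message arrived over the C2 link."""
        self.last_heartbeat = self.clock()

    def mode(self) -> str:
        """'networked' while the link is fresh, 'local_fallback' once it has been silent too long."""
        silent_for = self.clock() - self.last_heartbeat
        return "local_fallback" if silent_for >= self.timeout_s else "networked"
```

The useful vendor question is what the node actually does in `local_fallback`, and whether crews can see and change that plan before the link drops.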

2) Unmanned system re-tasking at the edge

Answer first: Autonomy only matters if crews can retask it during the mission.

That means mission planning tools must be:

- usable in real operational tempo,

- auditable (who changed what),

- and secure against tampering.

Leaders should ask: What’s the shortest path from “new tasking” to “confirmed execution,” and what approvals are required?
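“Auditable” and “secure against tampering” can be checked at the receiving end. The Python sketch below authenticates each tasking with HMAC-SHA256, rejects replayed or out-of-order messages by sequence number, and logs every decision. The message fields and class name are hypothetical, and the shared key is an assumption made for brevity; a fielded system would more likely use asymmetric signatures and managed keys.

```python
import hashlib
import hmac
import json

def sign_tasking(key: bytes, tasking: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the tasking message."""
    body = json.dumps(tasking, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()

class TaskingReceiver:
    """Accepts only authenticated, non-replayed taskings and records who changed what."""

    def __init__(self, key: bytes):
        self.key = key
        self.last_seq = -1
        self.audit_log: list[dict] = []

    def receive(self, tasking: dict, signature: str) -> bool:
        expected = sign_tasking(self.key, tasking)
        if not hmac.compare_digest(expected, signature):
            verdict = "rejected: bad signature"
        elif tasking.get("seq", -1) <= self.last_seq:
            verdict = "rejected: replay or out-of-order"
        else:
            self.last_seq = tasking["seq"]
            verdict = "accepted"
        self.audit_log.append({"issuer": tasking.get("issuer"),
                               "seq": tasking.get("seq"),
                               "verdict": verdict})
        return verdict == "accepted"
```

Logging rejections as well as acceptances matters: the audit trail should show attempted tampering, not just successful changes.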

3) Predictive readiness and maintenance that supports contested logistics

Answer first: AI can reduce readiness surprises by forecasting failures and optimizing spares—but only if the data is clean and the workflow is real.

A credible approach connects:

- maintenance logs,

- condition-based sensors,

- supply availability,

- and operational schedules.

Leaders should ask: What decision will this model change next week? If it can’t change a decision, it’s not a readiness capability—it’s a report.
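To illustrate “what decision will this model change,” here is a Python sketch that joins a failure forecast with spares and schedule data to produce actions rather than a report. The forecast is assumed to come from an upstream model; the `Component` fields, the action strings, and the risk threshold are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Component:
    name: str
    p_fail_before_deploy: float  # model output: failure probability before next deployment
    spares_on_hand: int
    resupply_days: int           # assumed lead time to get a spare forward

def maintenance_actions(parts, days_to_deploy: int, risk_threshold: float = 0.3):
    """Turn failure forecasts into concrete next actions for the maintenance team."""
    actions = []
    for p in parts:
        if p.p_fail_before_deploy < risk_threshold:
            continue  # below threshold: no action this cycle
        if p.spares_on_hand > 0:
            actions.append((p.name, "replace pre-deployment"))
        elif p.resupply_days <= days_to_deploy:
            actions.append((p.name, "order spare now"))
        else:
            actions.append((p.name, "escalate: spare cannot arrive in time"))
    return actions
```

Each output line is a decision someone can act on next week, which is the test the question above sets.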

4) Coalition interoperability as a design constraint

Answer first: In the Indo-Pacific, coalition operations are the default, so AI systems must handle partner data rules and variable network trust.

This pushes architectures toward:

- data tagging and policy enforcement,

- role-based sharing,

- and federated or compartmented analytics.

Leaders should ask: How do you prevent “AI sprawl” from breaking classification boundaries or partner agreements?
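Data tagging and policy enforcement can be applied before any analytics run, so models only ever see permitted data. This Python sketch is deliberately simplified: real marking and releasability schemes are far richer than an integer level and a set of partner codes, and every name here (including the partner codes) is hypothetical.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class DataItem:
    item_id: str
    classification: int  # assumed ordinal levels, 0 = lowest
    releasable_to: frozenset = field(default_factory=frozenset)  # partner codes allowed

@dataclass(frozen=True)
class Requester:
    partner: str
    clearance: int

def can_share(item: DataItem, who: Requester) -> bool:
    """Share only if clearance is sufficient AND the item is tagged releasable to that partner."""
    return who.clearance >= item.classification and who.partner in item.releasable_to

def filter_for(items, who: Requester):
    """Apply the sharing policy up front, before data reaches any model."""
    return [i for i in items if can_share(i, who)]
```

Enforcing policy at the data layer, rather than trusting each AI tool to behave, is one way to keep “AI sprawl” from crossing classification or partner boundaries.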

A practical checklist for AI leaders supporting fleet modernization

The fastest programs make a few decisions early and stick to them. If your organization is building or buying AI for maritime operations (or any WEZ-adjacent mission), this checklist keeps you honest:

- Define the decision, not the model. Name the operator decision you’re speeding up or improving.

- Plan for degraded operations. Require edge compute, offline modes, and graceful degradation.

- Treat data as a weapon system dependency. If data pipelines fail, the capability fails.

- Build a verification rhythm. Model performance checks must be routine, not special events.

- Design for reconfiguration by operators. “Right to repair” includes mission and autonomy configuration.

- Secure the full lifecycle. Supply chain risk, model tampering, and update integrity must be engineered in.

- Prove it in exercises, then iterate. Fielding without feedback is how AI becomes shelfware.

If you only do one of these: tie every AI deliverable to a specific operational decision and a measurable time-to-action improvement.
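Measuring time-to-action needs little more than timestamps from exercises. A minimal Python sketch, assuming you have logged sensing-to-action durations in seconds for comparable runs with and without the AI aid; the function name is hypothetical, and the median is used because a single chaotic run should not dominate the comparison.

```python
from statistics import median

def time_to_action_improvement(baseline_s: list[float], with_ai_s: list[float]) -> dict:
    """Compare median sensing-to-action times (seconds) from two sets of exercise runs."""
    b, a = median(baseline_s), median(with_ai_s)
    return {"baseline_median_s": b,
            "ai_median_s": a,
            "reduction_pct": 100.0 * (b - a) / b}
```

For example, illustrative runs of 600, 540, and 720 seconds without the aid and 420, 360, and 480 seconds with it give medians of 600 and 420 seconds, a 30% reduction.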

What PacFleet’s push signals for 2026 defense AI programs

PacFleet is signaling that fleet modernization is now inseparable from AI-enabled operations—not because AI is trendy, but because the pace of adaptation has become a combat factor. The organizations that win will be the ones that can field, learn, and adjust faster than their adversaries.

This also reframes the vendor conversation. The most valuable partners won’t just sell capability; they’ll help build a repeatable loop: experiment, rehearse, measure, update, and re-field—while protecting security and operator trust.

If your team is trying to modernize maritime C2, autonomy, or readiness workflows, the next step is straightforward: map your highest-stakes decisions, identify where the current cycle time is lost, and design an AI system that still performs when the network doesn’t.

Where do you see the biggest bottleneck today—data access, authority to change configurations, or the time it takes to turn exercise lessons into fielded updates?