Russia’s gray zone sabotage tests Europe’s resilience. Here’s how AI can spot weak signals early and help defend critical infrastructure.

AI Spots Russia’s Sabotage Playbook in Europe

On November 15, an explosion tore through a rail line near Mika, Poland—right on a route used to move equipment and aid toward Ukraine. Polish authorities called it sabotage, charged three Ukrainian nationals, and said the plot was directed by Russian intelligence. That single blast matters less for the damage it caused than for what it signals: Europe is dealing with a sustained “gray zone” campaign designed to feel like bad luck, accidents, and local crime—until it doesn’t.

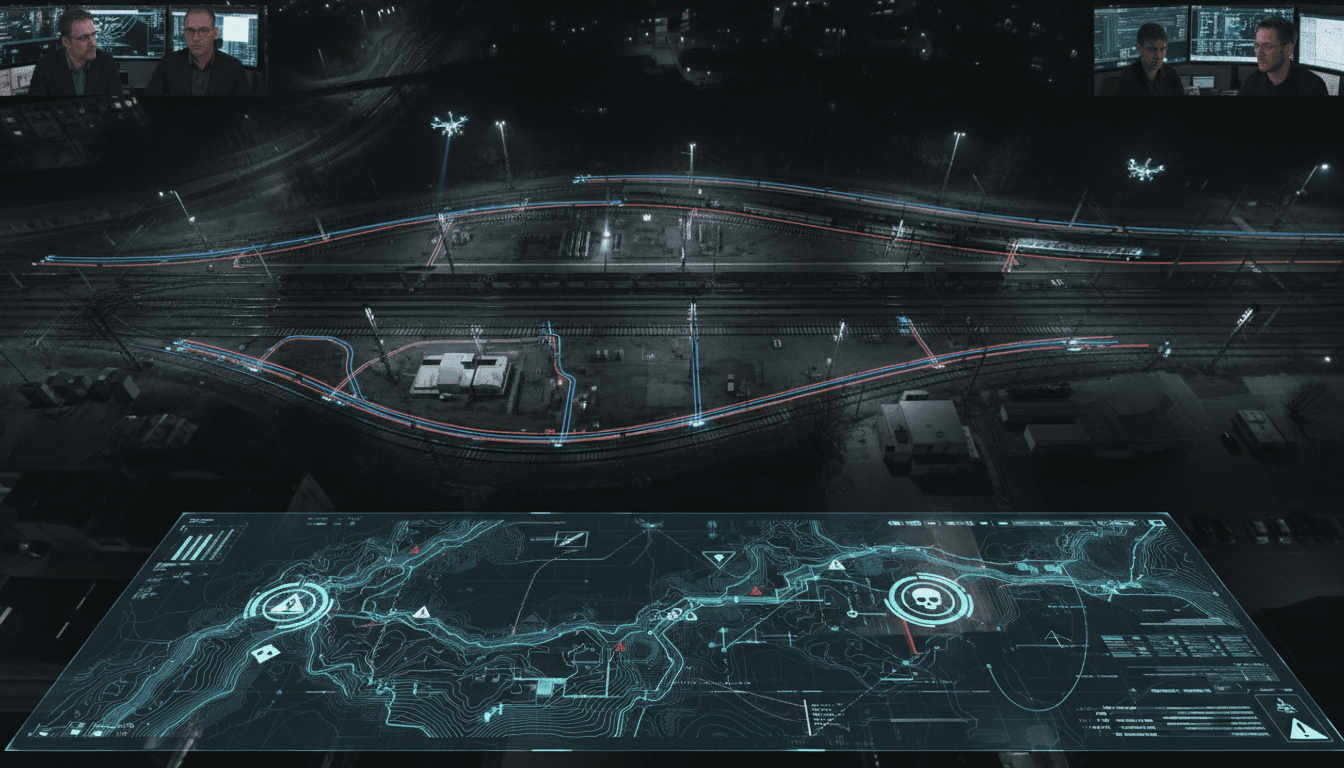

This is exactly the kind of threat most organizations are structurally bad at seeing. Not because the signals don’t exist, but because they’re scattered: a warehouse fire here, a severed fiber cable there, navigation jamming that looks like “technical issues,” a recruitment attempt buried inside encrypted chats. Hybrid sabotage is a pattern problem. And pattern problems are where AI—used responsibly and with the right guardrails—earns its keep.

In this installment of our AI in Defense & National Security series, I’ll translate the reported wave of sabotage across Europe into a practical question for national security leaders and critical infrastructure operators: what would it take to detect a shadow campaign early, attribute it faster, and respond without overreacting?

Russia’s “shadow war” is designed to stay below the threshold

The point of gray zone operations isn’t battlefield victory. The point is friction: delay shipments, raise insurance rates, force airport closures, spook voters, and make governments look ineffective.

The reported tactics share a simple logic:

- Physical disruption of logistics routes (rail lines, depots, ports, warehouses)

- Digital and electromagnetic interference (GPS jamming, comms disruption)

- Critical infrastructure probing (energy and undersea data links)

- Narrative manipulation to keep attribution disputed and responses slow

This matters because NATO’s collective defense mechanisms are politically heavy and intentionally hard to trigger. Sabotage campaigns exploit that reality. If every incident can be argued as isolated, ambiguous, or non-state crime, strategic coordination slows down—and the attacker buys time.

A useful way to think about it: Russia isn’t trying to “win” each incident. It’s trying to normalize the incidents. Once disruption becomes routine, the defender’s new baseline is higher cost, higher uncertainty, and more domestic arguments about whether supporting Ukraine is “worth it.”

The proxy pipeline is the operational center of gravity

A striking feature in the reporting is the reliance on proxies—often vulnerable people, migrants, financially desperate individuals, and in some cases minors recruited through encrypted messaging apps. Recruitment reportedly starts with low-risk tasks (taking photos, dropping packages) and escalates into arson, sabotage, or bomb placement.

From a counterintelligence and law enforcement perspective, the proxy model gives the attacker three advantages:

- Plausible deniability: the handler stays at arm’s length.

- Scale: many small cells can be activated with minimal cost.

- Political confusion: using Ukrainian recruits inside NATO states muddies narratives and can inflame domestic tensions.

From an AI standpoint, the proxy model also creates an opening:

- Proxies leave behavioral and logistical traces.

- Handlers reuse tradecraft patterns.

- Campaigns require reconnaissance before action.

Put differently: a sabotage act is the final step of a longer workflow. If you only look for the final step (the blast, the fire, the cut cable), you’re always late.

What “early warning” actually looks like in hybrid sabotage

Early warning isn’t a single alert. It’s a stack of weak signals that only becomes persuasive when correlated:

- Increased “innocent” photography of facilities and rail chokepoints

- Unusual device purchases and shipment patterns (where monitoring them is lawful)

- Repeated travel and presence near infrastructure nodes at odd hours

- Spikes in drone sightings near depots, ports, substations

- Clusters of GPS anomalies overlapping logistics corridors

- Recruitment chatter patterns and tasking language in known channels

Human analysts can catch some of this. They cannot catch it consistently across borders, languages, and jurisdictions at scale.
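The claim that weak signals only become persuasive when correlated can be made concrete with a toy ranking rule. This is a minimal sketch, not an operational method. It assumes each report has already been tagged with an infrastructure node, a signal type from the list above, and a day number. The names `WeakSignal` and `rank_nodes` and the 14-day window are hypothetical. The design choice is to rank nodes by the number of *distinct* signal types they attract, using raw volume only as a tiebreaker.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class WeakSignal:
    node_id: str      # infrastructure node, e.g. a rail junction or substation
    signal_type: str  # e.g. "photography", "drone", "gps_anomaly"
    day: int          # days since an arbitrary reference date


def rank_nodes(signals, window_days=14, as_of_day=None):
    """Rank nodes by how many *distinct* signal types reached them recently.

    Returns (node_id, distinct_types, total_signals) tuples. Distinct types
    dominate the ordering; raw volume only breaks ties, so a node needs
    corroboration from several kinds of evidence to rank highly.
    The window length is a hypothetical default, not a calibrated value.
    """
    if as_of_day is None:
        as_of_day = max((s.day for s in signals), default=0)
    by_node = defaultdict(list)
    for s in signals:
        # Keep only signals inside the look-back window.
        if as_of_day - window_days < s.day <= as_of_day:
            by_node[s.node_id].append(s.signal_type)
    ranking = [(node, len(set(types)), len(types)) for node, types in by_node.items()]
    ranking.sort(key=lambda r: (r[1], r[2]), reverse=True)
    return ranking
```

Under this rule, ten drone reports at one depot rank below a junction that saw one drone sighting, one GPS anomaly and one photography report in the same window. That is the kind of corroboration the list above describes.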

Where AI fits: making weak signals add up to a clear picture

AI doesn’t replace investigators or intelligence analysts. It reduces the odds that a coordinated campaign hides inside bureaucratic seams. The best deployments I’ve seen focus on three jobs: correlation, prioritization, and speed.

1) Multi-source fusion: one picture, not ten dashboards

Hybrid threats cross domains—physical, cyber, electromagnetic, financial, and informational. AI is most valuable when it helps fuse:

- OSINT (news, public incident reports, ship/rail data where lawful)

- Industrial telemetry (SCADA alerts, sensor anomalies, access logs)

- Cybersecurity signals (phishing, intrusion attempts, lateral movement)

- Aviation/navigation interference data (GPS jamming reports)

- Law enforcement case data (arson clusters, suspicious logistics)

A practical deliverable is a shared risk map that updates daily: which nodes are being probed, which corridors are experiencing interference, and which incidents look statistically linked.
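Deciding which incidents “look statistically linked” usually starts with something simpler than statistics: a space-time proximity rule. The sketch below assumes the fused feed already gives each incident coordinates and a day number. The 50 km and 7-day thresholds and the names `Incident` and `link_clusters` are hypothetical. Two incidents are linked if they fall within both thresholds, and clusters are the connected components of those links, found with a small union-find.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    incident_id: str
    lat: float
    lon: float
    day: int  # days since a reference date


def haversine_km(a, b):
    """Great-circle distance between two incidents in kilometres."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(a.lat), math.radians(b.lat)
    dp = p2 - p1
    dl = math.radians(b.lon - a.lon)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(h))


def link_clusters(incidents, max_km=50.0, max_days=7):
    """Group incidents into clusters using union-find over space-time links."""
    parent = list(range(len(incidents)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    # Pairwise check: link two incidents if they are close in time AND space.
    for i in range(len(incidents)):
        for j in range(i + 1, len(incidents)):
            a, b = incidents[i], incidents[j]
            if abs(a.day - b.day) <= max_days and haversine_km(a, b) <= max_km:
                parent[find(i)] = find(j)

    clusters = {}
    for i, inc in enumerate(incidents):
        clusters.setdefault(find(i), []).append(inc.incident_id)
    # Return only multi-incident clusters, largest first.
    return sorted((c for c in clusters.values() if len(c) > 1), key=len, reverse=True)
```

Because this is single-linkage clustering, a chain of nearby incidents can merge into one long cluster, and the pairwise loop is quadratic in the number of incidents. Both are tolerable for a daily batch but would need revisiting at scale. A real fusion layer would also weigh incident type and source reliability.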

2) Pattern-of-life and anomaly detection for critical infrastructure

Most organizations still treat physical security and cybersecurity as separate worlds. Sabotage campaigns don’t.

AI-based anomaly detection can flag:

- Access attempts outside normal shift patterns

- New “maintenance” behaviors that don’t match historical baselines

- Sensor readings consistent with tampering (doors, fences, vibration)

- Drone activity correlated with pre-incident reconnaissance windows

The point isn’t to produce a perfect “sabotage” label. The point is to raise the confidence that an incident is part of a cluster, so decision-makers can respond proportionally.
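The first item above, access outside normal shift patterns, is pattern-of-life baselining in its simplest form. This is a minimal sketch. It assumes badge logs have been reduced to (badge ID, hour of day) pairs. The function names and the `min_share` and `min_history` thresholds are hypothetical. It learns how often each badge has been used in each hour and flags new events in hours that badge rarely or never uses.

```python
from collections import Counter, defaultdict


def build_baseline(history):
    """history: iterable of (badge_id, hour) pairs from past access logs."""
    counts = defaultdict(Counter)
    for badge, hour in history:
        counts[badge][hour] += 1
    return counts


def flag_unusual(baseline, events, min_share=0.05, min_history=20):
    """Flag events whose hour is rare for that badge.

    An event is flagged if its hour accounts for less than min_share of the
    badge's past accesses. Badges with fewer than min_history past events are
    flagged as well, since there is no reliable baseline for them yet.
    Both thresholds are hypothetical defaults.
    """
    flagged = []
    for badge, hour in events:
        hist = baseline.get(badge, Counter())  # .get avoids creating entries
        total = sum(hist.values())
        if total < min_history:
            flagged.append((badge, hour, "insufficient history"))
        elif hist[hour] / total < min_share:
            flagged.append((badge, hour, "outside usual hours"))
    return flagged
```

This is deliberately crude. It ignores weekdays, shift rotations and holidays, all of which a real baseline would need, and it flags badges with little history rather than silently trusting them.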

3) Language AI for recruitment detection (with strict oversight)

Encrypted apps and multilingual recruitment make manual monitoring hard. But there are viable, lawful approaches:

- Analyze reported recruitment attempts and tip-line messages

- Build multilingual classifiers for recurring tasking templates (e.g., “take photos of…”, “deliver package…”, “count vehicles…”)

- Identify escalation markers (requests for incendiaries, timers, rail clamps, thermite-like materials)

Guardrails matter. These systems should be audited, bias-tested, and constrained so they don’t turn into broad population surveillance. You want focused threat detection tied to clear legal authorities and oversight.
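Before training multilingual classifiers, a team might start with a transparent rule-based baseline that reviewers can audit line by line. This is a minimal sketch. It assumes English-only text from reported messages or tip-line submissions. The pattern lists are illustrative placeholders based on the examples above, not a vetted lexicon, and `triage_message` is a hypothetical name. It returns the matched patterns along with the tier, so an analyst can see exactly why a message was flagged.

```python
import re

# Illustrative patterns only (hypothetical); a real lexicon would be curated
# per language and reviewed under the oversight arrangements described above.
TASKING_PATTERNS = [
    r"\btake (?:photos?|pictures?) of\b",
    r"\bdeliver (?:a |the )?package\b",
    r"\bcount (?:the )?vehicles\b",
]
ESCALATION_PATTERNS = [
    r"\bincendiar(?:y|ies)\b",
    r"\btimers?\b",
    r"\brail clamps?\b",
    r"\bthermite\b",
]


def triage_message(text):
    """Return (tier, matched_patterns) for one reported message.

    Tier 2: escalation markers present, route to an analyst immediately.
    Tier 1: low-level tasking language only, queue for review.
    Tier 0: no match, no action.
    """
    lowered = text.lower()
    tasking = [p for p in TASKING_PATTERNS if re.search(p, lowered)]
    escalation = [p for p in ESCALATION_PATTERNS if re.search(p, lowered)]
    if escalation:
        tier = 2
    elif tasking:
        tier = 1
    else:
        tier = 0
    return tier, tasking + escalation
```

Keyword rules miss paraphrases and other languages and will produce false positives. In practice they serve as a baseline to compare trained classifiers against, and as a check during bias testing.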

4) Faster attribution without pretending attribution is easy

Attribution is political as much as technical. AI can help the technical side by connecting:

- Device signatures and component sourcing patterns

- Handler workflows and reuse of instructions

- Timing clusters (incidents that occur around key policy events)

- Geographic overlaps with known hostile intelligence activity

But AI can’t solve the hardest piece: what a government chooses to say publicly, and when.

A better objective is to reduce the time to high-confidence internal attribution, so that response options aren’t delayed by weeks of uncertainty.
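One technical signal listed above, timing clusters around key policy events, can be checked with a base-rate comparison. The question is what share of incidents fell within a few days of a listed event, compared with the share expected if incidents were spread evenly over the period. This is a minimal sketch. It assumes incidents and events are given as integer day numbers within one observation period. The ±3-day window and the function name `timing_lift` are hypothetical.

```python
def timing_lift(incident_days, event_days, period_days, window=3):
    """Compare the observed share of incidents near events with a uniform baseline.

    Returns (observed_share, expected_share, lift). Days are integers in
    [0, period_days). Overlapping event windows are counted once, because
    "near" days are collected in a set.
    """
    near = set()
    for e in event_days:
        for d in range(e - window, e + window + 1):
            if 0 <= d < period_days:
                near.add(d)
    # Share of the period covered by event windows = expected share under uniform timing.
    expected = len(near) / period_days
    hits = sum(1 for d in incident_days if d in near)
    observed = hits / len(incident_days) if incident_days else 0.0
    lift = observed / expected if expected else float("nan")
    return observed, expected, lift
```

A lift well above 1 is a lead for analysts, not evidence of coordination on its own. A real analysis would add a significance test and account for reporting delays.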

“Below Article 5” doesn’t mean “below consequences”

Gray zone campaigns thrive when the defender believes the only meaningful response is escalation. That’s a trap.

There’s a middle ground that works: clear thresholds, pre-agreed response menus, and visible resilience. AI supports all three.

Response menu: proportional, fast, and repeatable

When every incident becomes an ad hoc debate, attackers gain tempo. Mature defenders pre-wire responses, such as:

- Temporary hardening of specific rail/logistics corridors based on risk scores

- Surge inspections and targeted counterintelligence operations

- Diplomatic and legal actions tied to attribution confidence levels

- Coordinated public messaging that explains disruption without amplifying panic

- Selective sanctions and asset actions against enabling networks

AI’s job here is to justify prioritization: where to surge, what to protect, and what can wait.
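Pre-wiring responses means the mapping from risk to action is agreed before an incident rather than debated during it. This is a minimal sketch. It assumes an upstream triage model already assigns each corridor a risk score between 0 and 1. The tier boundaries, action strings and function names are hypothetical stand-ins for whatever a government or operator actually pre-approves.

```python
from bisect import bisect_right

# Hypothetical tier boundaries: scores below 0.3 are tier 0,
# [0.3, 0.6) tier 1, [0.6, 0.85) tier 2, 0.85 and above tier 3.
TIER_BOUNDS = [0.3, 0.6, 0.85]

# Hypothetical pre-approved actions per tier.
PLAYBOOK = {
    0: ["routine monitoring"],
    1: ["increase patrol frequency"],
    2: ["temporary corridor hardening", "surge inspections"],
    3: ["temporary corridor hardening", "surge inspections",
        "escalate to national coordination cell"],
}


def tier_for(score):
    """Map a 0..1 risk score to a tier index using the fixed boundaries."""
    return bisect_right(TIER_BOUNDS, score)


def prioritize(corridor_scores):
    """Return (corridor, tier, actions) sorted from highest to lowest risk."""
    ranked = sorted(corridor_scores.items(), key=lambda kv: kv[1], reverse=True)
    result = []
    for name, score in ranked:
        tier = tier_for(score)
        result.append((name, tier, PLAYBOOK[tier]))
    return result
```

Keeping the boundaries and playbook as plain data means legal and operations staff can review and change them without touching the scoring model.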

Resilience is deterrence when sabotage is the strategy

If the strategy is disruption, then rapid recovery is deterrence.

For critical infrastructure operators, that means:

- Redundancy in routing (rail alternatives, port alternatives, data reroutes)

- Faster mean-time-to-repair via predictive maintenance and spare planning

- Backup navigation procedures where GPS jamming is frequent

- Exercises that combine cyber incident response with physical sabotage scenarios

A good metric to track isn’t just “number of incidents.” It’s:

How many hours of operational impact did incidents cause this quarter—and is that number going down?

AI can help drive that number down by predicting likely target sets, so that repair crews, spares, and security resources can be pre-positioned.
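The impact-hours metric is straightforward to compute once each incident records a date and the hours of operational impact it caused. This is a minimal sketch that assumes that record format. `impact_hours_by_quarter` and `is_improving` are hypothetical names. Summing hours rather than counting incidents is what makes the metric reward resilience: faster recovery lowers the total even if the attacker keeps trying.

```python
from collections import defaultdict
from datetime import date


def quarter_of(d):
    """Return a label such as '2025-Q4' for a date."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


def impact_hours_by_quarter(incidents):
    """incidents: iterable of (date, impact_hours). Returns {quarter: hours}, oldest first."""
    totals = defaultdict(float)
    for d, hours in incidents:
        totals[quarter_of(d)] += hours
    # Labels like '2025-Q4' sort chronologically as strings for 4-digit years.
    return dict(sorted(totals.items()))


def is_improving(totals):
    """True if each quarter's impact hours are strictly lower than the previous quarter's."""
    values = list(totals.values())
    return all(later < earlier for earlier, later in zip(values, values[1:]))
```

The strict quarter-on-quarter check is deliberately simple. A single bad quarter will break it, so a trend over a longer window may be more informative.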

A practical blueprint: an AI-enabled hybrid threat cell

Most companies and agencies get this wrong by buying tools before designing the workflow.

Here’s a field-tested structure that scales across public-private boundaries:

- Hybrid Threat Cell (HTC): a standing team combining security, cyber, legal, comms, and operations.

- Common data model: define what constitutes an “incident,” a “probe,” and a “cluster.”

- Fusion layer: ingest multi-source data with strict access control.

- AI triage: score incidents for link probability and operational risk.

- Human adjudication: analysts validate, document, and decide escalation.

- Response playbooks: pre-approved actions mapped to risk tiers.

- After-action learning: continuously update models using confirmed cases.

If you want one sentence to guide the investment: use AI to shorten the path from weak signal to coordinated action.
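The blueprint separates AI triage from human adjudication. That split can be enforced in the data model itself, so nothing is attached to a cluster (and therefore to a response playbook) without an analyst decision and a note for the audit trail. This is a minimal sketch. It assumes a link probability supplied by some upstream model. All class names, fields and statuses are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Kind(Enum):
    INCIDENT = "incident"  # disruption or damage occurred
    PROBE = "probe"        # reconnaissance or tampering without disruption


class Status(Enum):
    TRIAGED = "triaged"      # scored by the model, awaiting an analyst
    CONFIRMED = "confirmed"  # analyst linked it to a cluster
    DISMISSED = "dismissed"  # analyst judged it unrelated


@dataclass
class Record:
    record_id: str
    kind: Kind
    link_probability: float  # model output, 0..1
    status: Status = Status.TRIAGED
    cluster_id: Optional[str] = None
    analyst_note: str = ""


@dataclass
class Cluster:
    cluster_id: str
    members: list = field(default_factory=list)


def review_queue(records):
    """Records awaiting human review, highest link probability first."""
    pending = [r for r in records if r.status is Status.TRIAGED]
    return sorted(pending, key=lambda r: r.link_probability, reverse=True)


def adjudicate(record, cluster, confirm, note):
    """The only step allowed to attach a record to a cluster; requires a note."""
    if not note:
        raise ValueError("an analyst note is required for the audit trail")
    record.analyst_note = note
    if confirm:
        record.status = Status.CONFIRMED
        record.cluster_id = cluster.cluster_id
        cluster.members.append(record.record_id)
    else:
        record.status = Status.DISMISSED


def training_labels(records):
    """Adjudicated records become (record_id, is_linked) labels for model updates."""
    return [(r.record_id, r.status is Status.CONFIRMED)
            for r in records if r.status is not Status.TRIAGED]
```

The `training_labels` helper reflects the after-action step. Only adjudicated records, confirmed or dismissed, feed back into model updates.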

What leaders should do in Q1 2026

This wave of sabotage reporting is arriving at a predictable moment: winter logistics strain, tight defense industrial timelines, and political debates across Europe about budgets and war fatigue. The operational incentives for more gray zone pressure are obvious.

If you’re responsible for defense, national security, or critical infrastructure, three steps are immediately worthwhile:

- Build a shared incident taxonomy across agencies and operators so correlation is possible.

- Stand up a fusion-and-triage pipeline (even a minimal one) that produces a weekly cluster assessment.

- Run one cross-domain exercise: GPS jamming + warehouse fire + disinformation rumor + supply reroute.
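A shared taxonomy only enables correlation once each organization’s local labels are mapped onto it. This is a minimal sketch. It assumes two hypothetical sources (`rail_operator` and `police`) with their own label sets, and every category and label here is illustrative. Unmapped labels are returned rather than dropped, because gaps in the mapping are exactly where cross-agency correlation fails.

```python
# Hypothetical shared categories and per-source label mappings.
SHARED = {"arson", "cable_cut", "gnss_interference", "drone_sighting", "recon"}

MAPPINGS = {
    "rail_operator": {
        "fire_trackside": "arson",
        "signal_cable_damage": "cable_cut",
        "uav_observed": "drone_sighting",
    },
    "police": {
        "arson": "arson",
        "suspicious_photography": "recon",
        "drone_report": "drone_sighting",
    },
}


def normalize(source, label):
    """Return the shared category for a source-specific label, or None if unmapped."""
    category = MAPPINGS.get(source, {}).get(label)
    return category if category in SHARED else None


def normalize_batch(reports):
    """reports: iterable of (source, label). Returns (normalized, unmapped) lists."""
    normalized, unmapped = [], []
    for source, label in reports:
        category = normalize(source, label)
        if category is None:
            unmapped.append((source, label))  # surface gaps instead of dropping them
        else:
            normalized.append((source, category))
    return normalized, unmapped
```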

None of this requires science fiction. It requires coordination, disciplined data handling, and leadership that treats hybrid warfare as an operational reality—not a headline.

Europe’s core challenge isn’t a lack of information. It’s turning scattered disruptions into a coherent picture quickly enough to respond. AI, applied carefully, is one of the few tools that can do that at the speed gray zone campaigns demand.

If the next year brings a “cluster” of incidents rather than one spectacular attack, the most important question won’t be whether Europe can attribute them eventually. It’ll be whether Europe can see the pattern early enough to blunt the impact before the attacker sets the tempo.