AI can strengthen counter-drug ops in the Caribbean by improving maritime awareness, reducing misidentification, and creating audit-ready targeting evidence.

AI Counter-Drug Ops: Lessons From Venezuela Risks

The U.S. says it’s targeting narcotrafficking in Caribbean waters. The operational reality looks bigger: a major naval buildup, long-range bomber flights, joint exercises within miles of Venezuela’s shoreline, and reported strikes that have destroyed 21 boats and killed 83 crew members. Those numbers don’t just signal intensity—they signal escalation risk.

Here’s what I think most people miss: counter-narcotics in 2025 is an information problem before it’s a firepower problem. When identification, attribution, and intent are uncertain, every tactical action becomes strategically fragile. That’s where AI and advanced analytics belong in the story—not as a shiny add-on, but as the discipline that can keep interdiction operations lawful, proportional, and defensible.

This post uses the Venezuela-Caribbean moment as a case study for the AI in Defense & National Security series: how AI can improve maritime domain awareness, reduce misidentification, and create the audit trails democratic governments need when operations turn politically and legally contentious.

The Venezuela case shows what happens when “targeting” isn’t provable

When a government claims it’s striking “narco-trafficking boats,” its credibility hinges on one question: how do you know? Not “how confident are you,” but what evidence shows that a vessel was engaged in criminal activity and that lethal force was justified.

In the reporting this post draws on, a central tension surfaces plainly during a U.S. Senate hearing: U.S. Coast Guard leadership distinguishes law enforcement authority (interdict, disable engines, board, seize, arrest, prosecute) from military strikes that destroy vessels and kill crews. That distinction matters because it determines:

- Which legal authorities apply (domestic law, maritime law, rules of engagement)

- What standards of evidence are required (probable cause vs. military necessity)

- What oversight mechanisms exist (courts, Congress, inspectors general)

When operations move from interdiction to destruction, the burden shifts from “we stopped contraband” to “we applied lethal force correctly.” If the government can’t show its work, it risks more than backlash: it risks strategic isolation and an escalatory loop with Venezuela.

AI doesn’t solve policy. But it can solve a recurring operational failure: insufficiently explainable targeting.

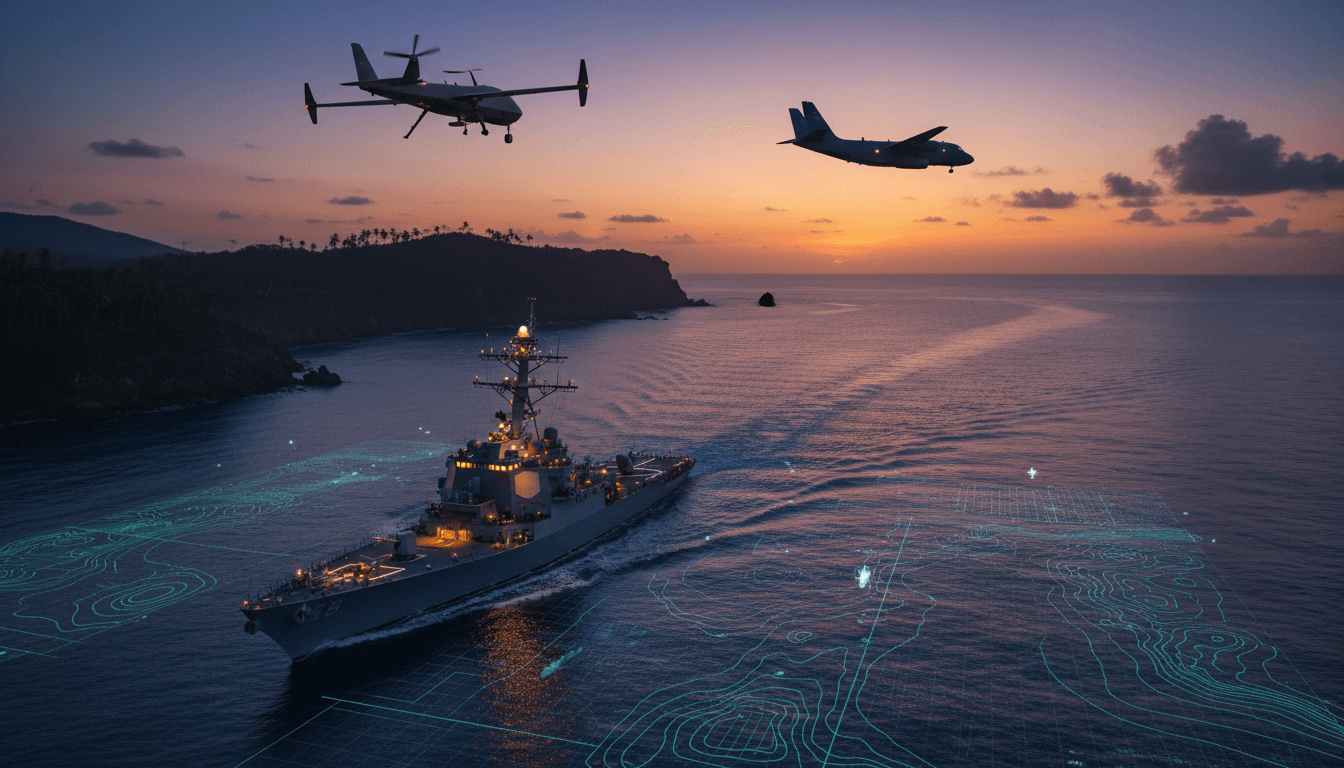

AI-enabled maritime domain awareness is the real force multiplier

The fastest way to improve counter-narcotics outcomes without spiraling into armed conflict is to treat the Caribbean as an analytics theater.

Maritime domain awareness (MDA) is the fused picture of vessels, aircraft, signals, patterns, and context that lets commanders answer: who is out there, what are they doing, and what will they do next?

What AI adds that “more ships” can’t

More hulls in the water increase presence, but they don’t automatically increase clarity. AI-driven MDA can:

- Fuse multi-source sensor data
  - Satellite imagery (SAR and EO)
  - AIS and RF emissions
  - Airborne radar tracks
  - Signals intelligence metadata
  - Weather and sea-state context

- Detect behavior patterns associated with trafficking
  - “Going dark” behavior (AIS off in predictable corridors)
  - Rendezvous patterns and loitering in low-traffic zones
  - High-speed profiles consistent with go-fast craft
  - Course changes that mirror historical smuggling routes

- Generate probability-based risk scores with transparent features
  - Not just “target = high risk,” but “high risk because: AIS off + prior route similarity + suspicious rendezvous + anomalous speed profile.”
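The “high risk because” idea maps naturally onto a model whose score decomposes into per-feature contributions. The sketch below assumes a simple logistic model; the feature names, weights, and bias are hypothetical placeholders, not calibrated values from any fielded system. Because the log-odds are a plain sum, each listed reason is an exact account of how far that feature moved the score, not a post-hoc approximation.

```python
import math
from dataclasses import dataclass

# Hypothetical, illustrative weights (log-odds per unit); NOT calibrated values.
WEIGHTS = {
    "ais_off_hours": 0.35,      # hours of AIS silence in the observation window
    "route_similarity": 2.0,    # 0..1 similarity to historical trafficking routes
    "rendezvous_events": 0.8,   # count of suspected at-sea meetings
    "speed_anomaly_z": 0.5,     # z-score of speed vs. local pattern-of-life baseline
}
BIAS = -4.0  # hypothetical prior log-odds for an unremarkable track


@dataclass
class RiskExplanation:
    probability: float
    contributions: dict[str, float]  # additive log-odds contribution per feature

    def reasons(self, top_k: int = 3) -> list[str]:
        """Return the features that pushed the score up, largest first."""
        ranked = sorted(self.contributions.items(), key=lambda kv: kv[1], reverse=True)
        return [f"{name} (+{value:.2f} log-odds)" for name, value in ranked[:top_k] if value > 0]


def score_track(features: dict[str, float]) -> RiskExplanation:
    """Logistic risk score whose log-odds decompose exactly into per-feature terms."""
    contributions = {name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    return RiskExplanation(probability, contributions)
```

For a track with six hours of AIS silence, 0.9 route similarity, one rendezvous, and a speed z-score of 2.5, this returns a probability of about 0.88, with AIS silence, route similarity, and the speed anomaly as the top three reasons. The trade-off is deliberate: a linear score is less expressive than a deep model, but every number in it can be read back to an analyst or a reviewer.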

If you’ve ever watched how quickly tactical narratives collapse under public scrutiny, you’ll appreciate this: AI’s value isn’t only prediction—it’s documentation. If an operation ends in detainees, seized narcotics, and a prosecution, the evidence trail is a strength. If it ends in deaths, that trail becomes essential to legitimacy.

Practical model outputs that matter in the Caribbean

For teams building defense AI systems, “accurate model” is too vague. The useful outputs are operational:

- Interdiction windows: predicted time/area where interception is most feasible

- Asset-tasking recommendations: which aircraft/ship should shadow which track

- Confidence intervals: where the model is guessing vs. knowing

- Collateral-risk estimates: likelihood the contact is fishing/legitimate transit

A blunt stance: if your system can’t express uncertainty clearly, it’s not ready for lethal-adjacent missions.
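One way to express “guessing vs. knowing” is to attach an interval to the historical confirmation rate of alerts in a given score band. A minimal sketch, assuming the Wilson score interval for a binomial proportion; the confirmation counts in the usage note are hypothetical.

```python
import math


def wilson_interval(confirmed: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval (default ~95%) for the confirmation rate of past alerts in one score band."""
    if total == 0:
        return (0.0, 1.0)  # no history at all: report total uncertainty explicitly
    p_hat = confirmed / total
    denom = 1 + z**2 / total
    center = (p_hat + z**2 / (2 * total)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / total + z**2 / (4 * total**2))
    return (max(0.0, center - half_width), min(1.0, center + half_width))
```

Eight confirmations out of ten past alerts and eighty out of a hundred share the same 80% point estimate, but the first interval runs from roughly 0.49 to 0.94 while the second is roughly 0.71 to 0.87. Displaying both bounds is what makes thin evidence visible to the decision-maker.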

The hard part: AI must support lawful, attributable decisions

The reporting also spotlights concerns about legal authority and the possibility of still-secret legal interpretations. Even setting politics aside, operational teams face a real constraint: interdiction actions must be justifiable in whatever forum comes next, whether Congress, the courts, allies, public opinion, or all four.

Explainable AI is not a buzzword in rules-of-engagement environments

In counter-narcotics, AI is often pitched as “faster targeting.” That’s backwards. In contentious maritime operations, AI should be pitched as better justification.

An explainable targeting workflow should preserve:

- Data provenance: what sensors produced the signals, when, and with what error bars

- Chain of custody for digital artifacts (imagery, tracks, intercept metadata)

- Human-in-the-loop decision records: who approved, under what authority

- Model audit logs: model version, thresholds, feature importance, confidence

Those elements turn “trust us” into “here’s the record.” And in a scenario with high escalation risk near Venezuela, that record is deterrence in its own right.
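A hash chain is one lightweight way to make the record tamper-evident: each entry commits to the one before it, so editing any earlier entry breaks verification. The sketch below is an illustration under assumptions: the record fields (model version, threshold, confidence, approver, authority, sensor provenance) are whatever the caller supplies, and a real deployment would also need write-once storage and external anchoring, because a hash chain detects tampering but does not prevent it.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the record plus the previous hash."""
    body = json.dumps({"record": record, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def append(self, record: dict) -> str:
        """Add a JSON-serializable record (model output or human decision) to the chain."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _digest(record, prev_hash)
        self.entries.append({"record": record, "prev_hash": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every link; any edited, reordered, or removed entry fails."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True
```

In practice the model's output record and the human decision record that follows it would be appended in order, so the log shows both what the system said and who acted on it. Editing any earlier field, such as a confidence value, makes `verify()` return `False`.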

A realistic operating concept: “AI recommends, humans justify”

For high-risk maritime operations, the best structure is:

- AI proposes candidate interdictions and ranks them.

- Analysts validate with independent sources (multi-INT confirmation).

- Legal/command authorities review whether the action fits the mission and authority.

- Operators interdict with minimum necessary force, escalating only when lawful.

- Evidence and audit trails are packaged automatically for oversight/prosecution.

This is slower than a strike. It’s also far less likely to trigger a strategic crisis.
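The sequence above can be enforced in software rather than left to habit. A minimal sketch, assuming a hypothetical four-stage workflow in which every step needs a named human actor and a stated basis, and validation requires at least two independent sources (the multi-INT confirmation step). Stage names, the two-source rule, and all identifiers are illustrative assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    PROPOSED = 1          # AI ranked this candidate
    VALIDATED = 2         # analysts confirmed it with independent sources
    LEGALLY_REVIEWED = 3  # legal review confirmed the action fits mission and authority
    APPROVED = 4          # command authority signed off


# Only forward, one-step transitions are allowed; no stage can be skipped.
NEXT = {
    Stage.PROPOSED: Stage.VALIDATED,
    Stage.VALIDATED: Stage.LEGALLY_REVIEWED,
    Stage.LEGALLY_REVIEWED: Stage.APPROVED,
}


@dataclass
class Candidate:
    track_id: str
    stage: Stage = Stage.PROPOSED
    history: list[tuple[str, str, str]] = field(default_factory=list)  # (stage, actor, basis)

    def advance(self, actor: str, basis: str, independent_sources: int = 0) -> None:
        """Move to the next stage, recording who approved it and why."""
        if self.stage not in NEXT:
            raise ValueError(f"{self.track_id} is already {self.stage.name}")
        if not actor or not basis:
            raise ValueError("every step needs a named human actor and a stated basis")
        target = NEXT[self.stage]
        if target is Stage.VALIDATED and independent_sources < 2:
            raise ValueError("validation requires at least two independent sources")
        self.stage = target
        self.history.append((target.name, actor, basis))
```

A candidate backed by a single SAR hit cannot leave `PROPOSED`, and the `history` list doubles as the decision record that oversight bodies would ask for.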

Using AI to reduce escalation risk (not increase it)

The reporting describes a worrying dynamic: increased U.S. regional presence, Venezuelan mobilization (reported at 200,000 soldiers), and political signaling that blurs counternarcotics with coercive pressure.

When both sides read intent through military posture, mistakes get expensive fast.

De-escalation features AI programs should include

If you’re modernizing defense planning with AI, build for de-escalation by design:

- Blue/red “intent dashboards”: show what observable actions would be interpreted as escalation by the other side

- Anomaly alerts for accidental provocation: aircraft transponder behavior, near-coast patrol density, proximity thresholds

- Course-of-action simulation: estimate second-order effects (retaliatory mobilization, diplomatic rupture, partner-nation blowback)
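A proximity-threshold alert can be as simple as checking patrol positions against a standoff distance. The sketch assumes the coastline is approximated by a few sampled reference points and uses the haversine great-circle distance on a spherical Earth; the reference points and threshold are hypothetical inputs, and a production system would use proper coastline polygons and agreed standoff rules.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def proximity_alerts(patrol_track, reference_points, threshold_km):
    """Flag (time, distance_km) for each patrol position closer than threshold_km to any reference point.

    patrol_track: iterable of (time, lat, lon); reference_points: iterable of (lat, lon),
    e.g. hypothetical points sampled along a coastline.
    """
    alerts = []
    for t, lat, lon in patrol_track:
        nearest = min(haversine_km(lat, lon, rlat, rlon) for rlat, rlon in reference_points)
        if nearest < threshold_km:
            alerts.append((t, round(nearest, 1)))
    return alerts
```

Run against a planned route before a sortie, the same check becomes a planning aid: it shows which legs would read as provocation before anyone flies them.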

Counterintuitively, the strongest AI contribution here is not tactical. It’s strategic decision support: preventing leaders from confusing activity with progress.

The myth that lethal action “solves” trafficking

Trafficking networks are adaptive systems. When you remove one route, they:

- shift corridors

- fragment shipments

- increase use of semi-submersibles or containerized methods

- recruit expendable crews

If a campaign measures success by destroyed boats instead of disrupted networks and prosecutions, it invites a cycle of higher lethality for diminishing returns.

AI can help by tracking network adaptation:

- link analysis on facilitators, financiers, and logistics nodes

- predictive disruption planning (which node removal causes the biggest slowdown)

- measuring displacement effects (where traffic moved after an operation)
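“Which node removal causes the biggest slowdown” can be approximated by asking which node’s removal severs the most supplier-to-buyer paths. The sketch below swaps in a deliberately simple proxy, reachability counting over a directed graph, instead of a flow or time-based slowdown model; the graph and node names are hypothetical.

```python
from collections import deque


def reachable(graph, start, removed=None):
    """Nodes reachable from start by breadth-first search, skipping the removed node."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def connected_pairs(graph, sources, sinks, removed=None):
    """Count (source, sink) pairs that still have at least one path between them."""
    sink_set = set(sinks)
    return sum(len(reachable(graph, s, removed) & sink_set) for s in sources)


def disruption_ranking(graph, sources, sinks):
    """Rank intermediate nodes by how many source-to-sink pairs their removal severs."""
    baseline = connected_pairs(graph, sources, sinks)
    nodes = set(graph) | {m for targets in graph.values() for m in targets}
    intermediates = nodes - set(sources) - set(sinks)
    impact = {n: baseline - connected_pairs(graph, sources, sinks, removed=n) for n in intermediates}
    # Highest impact first; ties broken alphabetically for stable output.
    return sorted(impact.items(), key=lambda kv: (-kv[1], kv[0]))
```

In a toy network where one facilitator feeds two staging sites, but a second supplier can bypass the facilitator to reach one of them, the facilitator and the bypass site tie at the top while the redundant site scores zero. That is the point of the exercise: removing a visible node does little if the network already routes around it.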

What an AI-powered counternarcotics stack looks like in practice

A modern counter-drug architecture doesn’t start with autonomy. It starts with integration.

Layer 1: Sensing and collection

- SAR satellite cueing for night/all-weather detection

- EO confirmation passes for classification

- Maritime patrol aircraft radar tracks

- Passive RF mapping (where emissions appear/disappear)
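Mapping where emissions appear and disappear starts with simple checks on consecutive AIS reports: long silences (possible “going dark”) and position jumps that imply impossible speeds (possible spoofing). A minimal sketch, assuming reports are time-sorted `(seconds, lat, lon)` tuples; the one-hour gap and 60-knot limits are hypothetical defaults. A gap is a cue, not evidence: receiver coverage alone can produce one.

```python
import math

EARTH_RADIUS_NM = 3440.065  # mean Earth radius (6371 km) in nautical miles


def haversine_nm(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in nautical miles between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    a = (math.sin(math.radians(lat2 - lat1) / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(math.radians(lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_NM * math.asin(math.sqrt(a))


def ais_anomalies(reports, max_gap_s=3600, max_speed_kn=60.0):
    """Return (kind, t_start, t_end) findings for reporting gaps and physically implausible jumps."""
    findings = []
    for (t0, la0, lo0), (t1, la1, lo1) in zip(reports, reports[1:]):
        dt = t1 - t0
        if dt > max_gap_s:
            findings.append(("gap", t0, t1))
        elif dt > 0:  # skip duplicate timestamps
            implied_speed_kn = haversine_nm(la0, lo0, la1, lo1) / (dt / 3600)
            if implied_speed_kn > max_speed_kn:
                findings.append(("implausible_jump", t0, t1))
    return findings
```

Keeping the two findings separate matters downstream: a gap suggests the transmitter was off or out of coverage, while an implausible jump suggests the reported positions themselves cannot be trusted.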

Layer 2: Fusion and analytics

- Track fusion (resolving duplicates, smoothing noisy tracks)

- Pattern-of-life baselines for legitimate traffic

- Risk scoring with explainable features
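Track fusion in its simplest static form means deciding which reports belong to the same contact and then combining them. A minimal sketch, assuming positions are already projected into a local flat frame in kilometres and each sensor reports a 1-sigma error; it gates reports around the most precise one and takes an inverse-variance weighted mean. This is a stand-in for illustration, not a Kalman-filter tracker, and sensor names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Report:
    sensor: str
    x_km: float      # east offset in an assumed local tangent-plane frame
    y_km: float      # north offset
    sigma_km: float  # 1-sigma position error reported by the sensor


def fuse(reports, gate_km=5.0):
    """Fuse reports of one contact; return ((x, y, sigma), rejected_sensor_names)."""
    anchor = min(reports, key=lambda r: r.sigma_km)  # most precise report anchors the gate

    def within_gate(r):
        return math.hypot(r.x_km - anchor.x_km, r.y_km - anchor.y_km) <= gate_km

    gated = [r for r in reports if within_gate(r)]
    rejected = [r.sensor for r in reports if not within_gate(r)]
    weights = [1.0 / r.sigma_km ** 2 for r in gated]  # inverse-variance weights
    total = sum(weights)
    x = sum(w * r.x_km for w, r in zip(weights, gated)) / total
    y = sum(w * r.y_km for w, r in zip(weights, gated)) / total
    sigma = math.sqrt(1.0 / total)  # fused error is no larger than the best single input
    return (x, y, sigma), rejected
```

Returning the rejected sensors is as important as the fused position: a report that falls outside the gate is either a second contact or a deception, and either way an analyst should see it.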

Layer 3: Mission planning and interdiction support

- Optimal intercept geometry

- Fuel/time modeling for aircraft and cutters

- Dynamic tasking when targets change routes

Layer 4: Evidence, oversight, and accountability

- Auto-generated case files for seizures/arrests

- Immutable audit logs (who saw what, when, and what they decided)

- Post-operation assessments that measure network disruption, not headlines
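Displacement can be quantified by comparing how detections are distributed across corridors before and after an operation. A minimal sketch, assuming detections are already binned into hypothetical named corridors; the total variation distance summarizes how much the distribution moved, independent of whether the overall count went down.

```python
def corridor_shares(counts: dict[str, int]) -> dict[str, float]:
    """Convert raw detection counts per corridor into shares of the total."""
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()} if total else {}


def displacement_report(before: dict[str, int], after: dict[str, int]) -> dict:
    """Compare corridor usage before and after an operation."""
    corridors = set(before) | set(after)
    p, q = corridor_shares(before), corridor_shares(after)
    shifts = {c: q.get(c, 0.0) - p.get(c, 0.0) for c in corridors}
    return {
        "total_before": sum(before.values()),
        "total_after": sum(after.values()),
        "share_shift": dict(sorted(shifts.items())),              # + means traffic moved in
        "total_variation": 0.5 * sum(abs(s) for s in shifts.values()),  # 0 = no shift, 1 = complete
    }
```

With illustrative counts of 60/30/10 across three corridors before an operation and 20/30/40 after, total detections fall only 10% while roughly 38% of the traffic share changes corridors, which is exactly what a boats-destroyed metric hides. Detections are not ground truth, so changes in sensor coverage should be ruled out before reading a shift as adaptation.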

If you’re leading an AI program in defense, this is the maturity test: Can your system help operators do the right thing and prove they did it?

Actionable guidance for defense and security leaders

If your organization is exploring AI for maritime security or counter-narcotics, here’s what works in the real world.

- Prioritize identification over interception speed
  - Faster decisions aren’t better if they’re wrong.

- Set explicit confidence thresholds tied to actions
  - Example: below threshold = shadow; above threshold = interdict; only under strict conditions = lethal escalation.

- Invest in “evidence-grade” data engineering
  - If it can’t stand up to oversight, it won’t survive a crisis.

- Measure outcomes that matter
  - Arrests, prosecutions, seizures, and network disruption, plus displacement tracking.

- Red-team your models for deception
  - Smugglers spoof AIS, change routes, and use decoys. Assume your model will be gamed.
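Tying thresholds to actions can be made explicit in code so that no one has to infer the policy from a dashboard color. A minimal sketch, assuming a calibrated probability and a count of independent confirming sources; the threshold values are hypothetical. Consistent with “AI recommends, humans justify,” the ladder deliberately stops at recommending interdiction: any escalation beyond that remains a human command and legal decision outside the model.

```python
from enum import Enum


class Action(Enum):
    MONITOR = "monitor"
    SHADOW = "shadow"
    RECOMMEND_INTERDICTION = "recommend interdiction (human approval required)"


# Hypothetical thresholds for illustration only; real values would come from
# calibration data and the governing rules of engagement.
SHADOW_THRESHOLD = 0.4
INTERDICT_THRESHOLD = 0.8


def recommend(probability: float, independent_sources: int) -> Action:
    """Map a calibrated risk probability to the highest action the system may suggest."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be a calibrated value in [0, 1]")
    # High confidence alone is not enough: require multi-source confirmation too.
    if probability >= INTERDICT_THRESHOLD and independent_sources >= 2:
        return Action.RECOMMEND_INTERDICTION
    if probability >= SHADOW_THRESHOLD:
        return Action.SHADOW
    return Action.MONITOR
```

A 0.9 score backed by a single sensor yields `SHADOW`, not an interdiction recommendation, which encodes “prioritize identification over interception speed” directly in the decision logic.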

Where this goes next for AI in Defense & National Security

The Venezuela-Caribbean episode is a live demonstration of a broader truth: AI in defense is inseparable from governance. Better sensors and smarter models can improve maritime interdiction, but the strategic payoff comes from keeping operations credible, accountable, and less escalatory.

If your goal is to reduce narcotrafficking while avoiding war, the path is clear: build an AI-enabled maritime domain awareness capability that produces actionable leads, transparent confidence, and audit-ready records.

If you’re evaluating AI for counter-narcotics operations—or trying to modernize intelligence analysis and mission planning in politically sensitive theaters—this is the moment to get serious about systems that produce proof, not just predictions. What would change in your operation if every high-stakes decision came with a defensible evidence trail by default?