Inside the Army’s island war games: 75 tech tests show how AI-enabled C2, autonomy, and faster fires reshape readiness—and where bottlenecks remain.

AI-Driven Army Training: Lessons from Island War Games

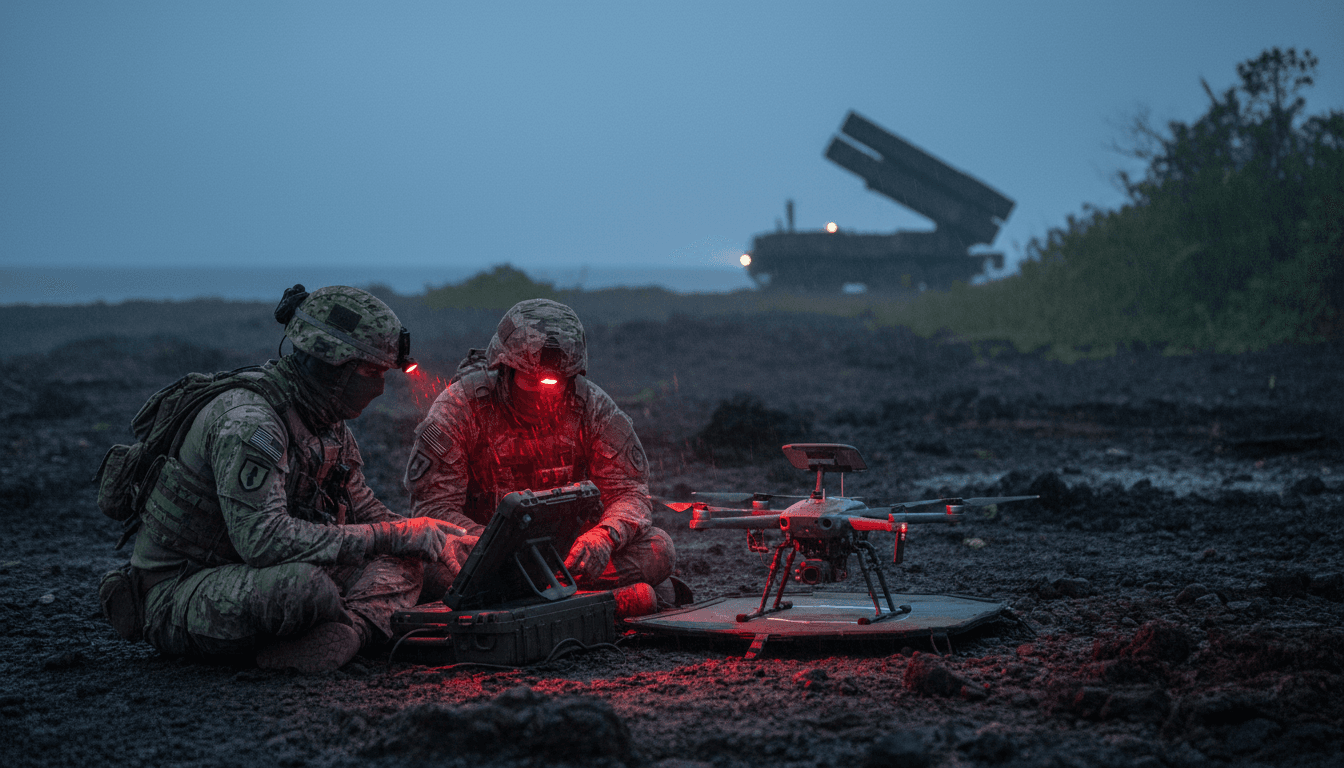

A modern Army division just ran a two-week “island fight” rehearsal with 75 separate technology experiments—and the most interesting part wasn’t the drones, rockets, or lasers. It was the uncomfortable moment when leaders realized the tech can move faster than the permission structure.

That gap—between what new systems can do and what organizations allow them to do—is where AI in defense readiness either becomes a decisive advantage or an expensive distraction. The Army’s Joint Pacific Multinational Readiness Center (JPMRC) rotation in Hawaii, featuring the 25th Infantry Division, exposed both sides: a force learning to see farther, decide faster, and strike at longer range… while still wrestling with approval workflows, cognitive overload, and the basics of staying connected when things get chaotic.

This post is part of our “AI in Defense & National Security” series, where we track how AI shows up in the real world: command-and-control, autonomous systems, intelligence analysis, cybersecurity, and mission planning. The JPMRC exercise is a clean case study because it’s not a lab demo—it’s soldiers, mud, time pressure, partner forces, and a simulated enemy trying to break your network.

The real story: AI readiness is a training problem, not a tech problem

The fastest path to “AI-enabled warfighting” isn’t buying a model or adding a sensor. It’s changing how units train, evaluate decisions, and recover when the network degrades.

At JPMRC, the Army ran a multi-domain scenario: island defense, maritime threats, long-range fires, partner nations, and multiple U.S. service branches. That matters because AI doesn’t add much value if the problem is simple. AI earns its keep when the environment is noisy—too many signals, too many options, too little time.

Here’s what this kind of exercise does better than slide decks:

- It forces systems integration: sensors, radios, drones, fires, and command posts have to work together.

- It produces decision data: timing, handoffs, approvals, and delays become measurable.

- It exposes “process debt”: the Army can add new tools quickly, but the bureaucracy around them can lag for years.

One of the most revealing observations from the exercise: targeting data moved faster, but fire approval sometimes took about an hour due to risk posture and outdated processes. That’s not a bandwidth issue. That’s a human-and-policy bottleneck.

AI doesn’t fix slow decision-making if your organization still requires yesterday’s approvals for today’s timelines.
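The claim that timing becomes measurable can be made concrete. This is a minimal sketch that assumes a hypothetical handoff log, in which each entry records a stage name and the time that stage completed. The stage names and timestamps are illustrative and are not drawn from the JPMRC rotation. Subtracting consecutive timestamps shows where the minutes actually go.

```python
from datetime import datetime

# Hypothetical handoff log for one request: (stage, time the stage completed).
# Stage names and timestamps are illustrative, not exercise data.
events = [
    ("detected", "2025-01-01T10:00:00"),
    ("reported", "2025-01-01T10:02:00"),
    ("request_sent", "2025-01-01T10:05:00"),
    ("approved", "2025-01-01T11:03:00"),
]


def stage_durations(log):
    """Return minutes elapsed between each stage and the one before it."""
    times = [(stage, datetime.fromisoformat(ts)) for stage, ts in log]
    durations = {}
    for (_, prev_t), (stage, t) in zip(times, times[1:]):
        durations[stage] = (t - prev_t).total_seconds() / 60
    return durations


durations = stage_durations(events)
bottleneck = max(durations, key=durations.get)
print(durations)   # {'reported': 2.0, 'request_sent': 3.0, 'approved': 58.0}
print(bottleneck)  # approved
```

With numbers shaped like these, a faster data link would save a few minutes, while the approval stage would still account for most of the delay.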

“Transformation in Contact” shows what rapid modernization looks like

The Army’s Transformation in Contact initiative is the background engine here. Instead of waiting for perfect requirements and multi-year procurement cycles, the Army is pushing new capabilities into operational units, then iterating quickly based on soldier feedback.

The JPMRC rotation showcased what “rapid learning” can mean in practice:

- New launched effects (unmanned reconnaissance gliders and drone-like systems)

- Loitering munitions and one-way attack drones

- Counter-drone measures, including a laser engagement

- HIMARS integration, including air movement scenarios that simulate raids and rapid repositioning

- Power and sustainment improvements at the tactical edge (charging laptops, drones, satellite terminals, and comms gear)

Why this matters for AI in national security: most AI benefits aren’t delivered by a single platform. They come from a system-of-systems that turns observations into decisions, and decisions into effects, quickly.

The island fight is a stress test for AI-enabled mission planning

Island and archipelago scenarios punish the basics:

- Long ranges make resupply and comms harder.

- Distributed units increase the need for shared situational awareness.

- Air and maritime threats compress reaction time.

That’s exactly the environment where AI-assisted mission planning and decision support are most valuable—if (and it’s a big if) commanders can trust what they’re seeing and the network stays usable.

Next-generation command and control is the make-or-break layer

The clearest signal from the exercise wasn’t “more drones.” It was senior leaders emphasizing next-generation command and control (C2).

C2 is the layer that decides whether AI becomes:

- an assistant that improves speed and confidence, or

- a dashboard that creates noise and fragility.

The Army’s long-standing problem is painfully relatable: units start a mission with good shared understanding, and then that understanding degrades as soon as people move, terrain changes, comms get contested, or systems don’t talk to each other.

That’s why the focus on introducing next-gen C2 into full divisions (not just special demo events) is a big deal. AI-enabled C2 isn’t just about fancy interfaces. It’s about:

- Resilient connectivity (multiple paths, graceful degradation)

- Data fusion (one picture, not ten partial ones)

- Decision support (highlight what matters now)

- Speed with accountability (faster decisions without losing control)

A practical way to say it: AI that can’t survive contested comms will fail in the missions that matter most.
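To make "one picture, not ten partial ones" concrete, here is a minimal fusion sketch. It assumes every feed already tags objects with a shared ID and a shared clock, which is the hard part in real systems. `Report`, `fuse`, and the feed contents are hypothetical. The rule is deliberately simple: keep the newest report per object and remember which sources saw it.

```python
from dataclasses import dataclass


@dataclass
class Report:
    object_id: str    # assumes all feeds share one object ID scheme
    source: str       # which feed produced the report
    time: float       # seconds on an assumed shared clock
    position: tuple   # (x, y) on an assumed common grid


def fuse(feeds):
    """Merge partial feeds into one picture: the newest report per object."""
    picture = {}   # object_id -> newest Report
    sources = {}   # object_id -> set of feeds that reported it
    for feed in feeds:
        for report in feed:
            sources.setdefault(report.object_id, set()).add(report.source)
            current = picture.get(report.object_id)
            if current is None or report.time > current.time:
                picture[report.object_id] = report
    return picture, sources


feed_a = [Report("obj-1", "drone", 100.0, (3, 4)), Report("obj-2", "drone", 90.0, (7, 1))]
feed_b = [Report("obj-1", "radar", 120.0, (3, 5))]

picture, sources = fuse([feed_a, feed_b])
print(picture["obj-1"].source, picture["obj-1"].position)  # radar (3, 5)
print(sorted(sources["obj-1"]))                            # ['drone', 'radar']
```

Keeping the contributing sources next to the latest report lets a user see when two feeds agree, rather than trusting a single sensor by default.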

What “think faster, shoot faster” really requires

The exercise highlighted a hard truth: faster sensors and faster targeting tools don’t automatically yield faster outcomes.

To compress timelines safely, units need:

- Clear authorities (who can approve what, under which conditions)

- Pre-planned decision thresholds (when the machine’s recommendation is enough)

- Trusted data lineage (where the information came from and how fresh it is)

- Rehearsed fallback behaviors (what happens when the model or network goes away)

AI helps most when it’s paired with training doctrine that treats the decision loop as a weapon system.
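The "trusted data lineage" requirement can be sketched as data that carries its own provenance and age. In this sketch, the `Observation` class, the hop names, and the 60-second and 300-second thresholds are illustrative assumptions, not Army standards. Each relay appends itself to the lineage, and a simple age check produces the kind of freshness label a confidence indicator could display.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    value: str
    observed_at: float                           # seconds on an assumed shared clock
    lineage: list = field(default_factory=list)  # ordered hops, origin first

    def hop(self, node):
        """Return a copy with `node` appended, so provenance travels with the data."""
        return Observation(self.value, self.observed_at, self.lineage + [node])


def freshness(obs, now, fresh_s=60, stale_s=300):
    """Label data by age; the thresholds are illustrative placeholders."""
    age = now - obs.observed_at
    if age <= fresh_s:
        return "fresh"
    if age <= stale_s:
        return "aging"
    return "stale"


obs = Observation("sensor report", observed_at=1000.0, lineage=["sensor-7"])
relayed = obs.hop("relay-2").hop("command-post")
print(relayed.lineage)                 # ['sensor-7', 'relay-2', 'command-post']
print(freshness(relayed, now=1030.0))  # fresh
print(freshness(relayed, now=1400.0))  # stale
```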

Autonomy at the edge creates a new risk: cognitive overload

The Army saw a very real downside of rapid tech insertion: cognitive overload.

When units add drones, electronic warfare kits, satellite terminals, counter-drone tools, digital fires workflows, and new C2 applications all at once, leaders can end up managing gear instead of commanding.

One commander’s field observation says a lot: when power fails or systems go dark, you still reach for the map to find out what was real.

That isn’t nostalgia. It’s a warning.

A useful rule: “AI should remove decisions, not add screens”

If your AI program increases the number of dashboards, chat rooms, and alerts a leader must monitor, you’re doing it wrong.

AI-enabled units should instead build toward:

- Fewer interfaces with better prioritization

- Exception-based reporting (only interrupt when something changes mission outcomes)

- Role-based automation (don’t show everyone everything)

- Standardized tactical drills for data loss and deception

And deception is the key point. As autonomy expands, adversaries will target the trust layer: spoofing, jamming, decoys, and data poisoning. That’s why cognitive load is also a cybersecurity issue.
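Exception-based reporting and role-based automation reduce to a filter on what reaches each person. This is a minimal sketch that assumes each alert already carries a topic and a flag saying whether it changes mission outcomes. Setting that flag correctly is the genuinely hard problem. The role names and the topic mapping are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    topic: str              # e.g. "comms", "logistics", "air"
    changes_mission: bool   # assumed to be set upstream; the hard part in practice
    text: str


# Hypothetical mapping: each role is interrupted only for topics it owns.
ROLE_TOPICS = {
    "commander": {"comms", "logistics", "air"},
    "signal_officer": {"comms"},
    "logistics_officer": {"logistics"},
}


def inbox(alerts, role):
    """Return only mission-changing alerts in this role's lane."""
    topics = ROLE_TOPICS.get(role, set())
    return [a for a in alerts if a.changes_mission and a.topic in topics]


alerts = [
    Alert("comms", False, "Relay battery at 80%"),
    Alert("comms", True, "Primary satellite link lost"),
    Alert("logistics", True, "Resupply delayed 6 hours"),
    Alert("air", False, "Scheduled drone sortie launched"),
]

print([a.text for a in inbox(alerts, "signal_officer")])  # ['Primary satellite link lost']
print(len(inbox(alerts, "commander")))                     # 2
```

In a fuller system, filtered-out alerts would still be logged for later review. They just would not interrupt anyone.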

The future of fires: rockets, tubes, and “special effects” working together

Modern fires aren’t becoming “all drones” or “all rockets.” They’re becoming mixed and adaptive.

The JPMRC experimentation reflected a broader shift: artillery organizations are turning into effects organizations that employ a portfolio—traditional tubed artillery, rockets like HIMARS, loitering munitions, reconnaissance drones, electronic warfare payloads, and decoys.

This matters for AI in defense because the selection problem becomes harder:

- Which effect is optimal for this target?

- What’s the probability of kill under jamming?

- How much signature do we expose?

- What’s the resupply cost per outcome?

AI decision support is well-suited to these trade-offs, but only if the data is available and the decision rights are clear.

A concrete benchmark leaders are watching

Real-world, high-intensity conflicts have shown sustained artillery demand on the order of thousands of 155mm rounds per day, which adds up to tens of thousands of rounds per month. The implication is straightforward: even as autonomy grows, industrial capacity and logistics still shape combat power.

A smart modernization approach doesn’t pick “old vs. new.” It engineers a force that can:

- surge traditional fires when needed,

- use autonomous systems for scouting and precision,

- and keep operating when the spectrum is contested.

What defense tech teams should learn from this exercise

If you’re building AI for defense readiness, mission planning, ISR, or autonomous systems, JPMRC-style rotations tell you what will actually get adopted.

1) Build for degraded operations by default

Assume limited bandwidth, intermittent comms, GPS disruption, and contested RF.

Design requirements should include:

- offline modes,

- local inference options where appropriate,

- delayed synchronization,

- and clear “confidence” indicators.
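Offline modes and delayed synchronization often come down to a store-and-forward queue. This is a minimal sketch that assumes a transport function returning `True` when a message goes through. `Outbox` and `fake_send` are hypothetical names, and the simulated link stands in for real radios or satellite terminals.

```python
from collections import deque


class Outbox:
    """Store-and-forward queue: hold messages while the link is down, send in order later."""

    def __init__(self, send, max_len=1000):
        self.send = send                       # callable: returns True if the message went out
        self.pending = deque(maxlen=max_len)   # when full, appending drops the oldest message

    def submit(self, msg):
        self.pending.append(msg)
        self.flush()

    def flush(self):
        while self.pending:
            if not self.send(self.pending[0]):
                return                         # link still down; keep the message for later
            self.pending.popleft()


# Simulated link for illustration only.
link_up = False
delivered = []


def fake_send(msg):
    if link_up:
        delivered.append(msg)
    return link_up


box = Outbox(fake_send)
box.submit("position report 1")
box.submit("position report 2")
print(len(box.pending), delivered)  # 2 []
link_up = True
box.flush()
print(len(box.pending), delivered)  # 0 ['position report 1', 'position report 2']
```

The bounded `deque` is a design choice. During a long outage, once the buffer fills, the oldest messages are dropped first. Some traffic, such as a latest position, tolerates that. Other traffic would need persistence to disk or a priority scheme.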

2) Treat approval workflows as part of the product

Fire approvals that take an hour can erase the advantage of fast sensors.

AI programs need:

- documented authorities,

- auditable recommendations,

- and tools that reduce perceived risk for commanders (not increase it).
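Auditable recommendations can start with an append-only log. This is a minimal sketch of a hash chain built on Python's standard `hashlib` and `json` modules. The record fields (`recommendation`, `model`, `decided_by`, `decision`) and their values are hypothetical. Each entry's hash covers the previous entry's hash, so editing an earlier record breaks verification.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record, prev_hash):
    body = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log; each entry's hash covers the previous hash, so edits are detectable."""

    def __init__(self):
        self.entries = []

    def append(self, record):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = dict(record)  # shallow copy so later caller edits don't alter the log
        entry = {"record": record, "prev": prev_hash, "hash": _digest(record, prev_hash)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"recommendation": "reroute resupply via route B", "model": "planner-v0",
            "decided_by": "S4", "decision": "accepted"})
log.append({"recommendation": "shift relay to hill 210", "model": "planner-v0",
            "decided_by": "S6", "decision": "rejected"})
print(log.verify())  # True

log.entries[0]["record"]["decision"] = "rejected"  # simulate tampering with history
print(log.verify())  # False
```

A chain like this only reveals tampering if the latest hash is also stored somewhere an editor cannot reach. Otherwise, someone could rewrite history and recompute every hash.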

3) Prioritize interoperability over novelty

AI that can’t ingest data from common tactical systems—or can’t output in formats units can use—won’t survive contact with real exercises.

4) Measure what matters: time, trust, and outcomes

The best AI demos optimize accuracy. The best AI field systems optimize:

- time-to-decision (minutes matter),

- operator trust (do people follow it under stress?),

- mission outcome impact (did it change the result?).
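The three measures above can be computed from ordinary exercise records. This minimal sketch assumes a hypothetical record format: decision time in minutes, whether the operator followed the tool's recommendation, a stress flag, and whether the task succeeded. The five rows are invented for illustration and are far too few to support any conclusion.

```python
from statistics import median

# Invented records for illustration only: one row per decision during an exercise.
records = [
    {"tool": True,  "minutes": 4,  "followed": True,  "stress": True,  "success": True},
    {"tool": True,  "minutes": 6,  "followed": False, "stress": True,  "success": False},
    {"tool": True,  "minutes": 3,  "followed": True,  "stress": False, "success": True},
    {"tool": False, "minutes": 12, "followed": None,  "stress": True,  "success": True},
    {"tool": False, "minutes": 15, "followed": None,  "stress": False, "success": False},
]


def rate(rows, key):
    """Fraction of rows where `key` is truthy; None if there are no rows."""
    return sum(bool(r[key]) for r in rows) / len(rows) if rows else None


def summarize(rows):
    with_tool = [r for r in rows if r["tool"]]
    without = [r for r in rows if not r["tool"]]
    return {
        # Time-to-decision: median minutes, with vs. without the tool.
        "median_minutes_with_tool": median(r["minutes"] for r in with_tool),
        "median_minutes_without": median(r["minutes"] for r in without),
        # Trust: how often operators followed the tool when under stress.
        "follow_rate_under_stress": rate([r for r in with_tool if r["stress"]], "followed"),
        # Outcome: success rate, with vs. without the tool.
        "success_with_tool": rate(with_tool, "success"),
        "success_without": rate(without, "success"),
    }


summary = summarize(records)
print(summary["median_minutes_with_tool"], summary["median_minutes_without"])  # 4 13.5
print(summary["follow_rate_under_stress"])                                     # 0.5
```

Comparing runs with and without the tool is what separates "did it change the result?" from "was the model accurate?". A real evaluation would still need many more runs under matched conditions.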

5) Train the humans like they’re part of the system

AI readiness is a human performance problem.

Units need training that covers:

- recognizing spoofing and deception,

- validating information under pressure,

- switching to manual processes without losing tempo,

- and leading teams through tech complexity.

What happens next: continuous transformation becomes the new normal

The Army is signaling that modernization won’t come in “versions” anymore. It’s moving toward continuous transformation, where exercises produce fixes in weeks, not years.

For national security leaders and defense innovators, that’s the opportunity: if you can deliver AI capabilities that hold up in messy, multi-domain training—where the enemy jams, the network breaks, and leaders get overloaded—you’re building something that can scale.

The bigger question for 2026 planning cycles is simple: will AI be integrated as a decision advantage, or bolted on as another layer of complexity? The difference won’t be determined in acquisition documents. It’ll be determined in exercises like JPMRC, where the force finds out what it can actually do when it’s tired, contested, and on the clock.

If you’re evaluating AI for mission planning, autonomous systems, or next-gen C2, focus less on the model and more on the system: data flow, decision rights, resilience, and training. That’s where readiness is built.