AI in OT breaks when trust and lifecycle controls are missing. Learn a practical roadmap for safer AI-driven detection without adding new plant-floor risk.

Securing AI in OT: What Breaks and How to Fix It

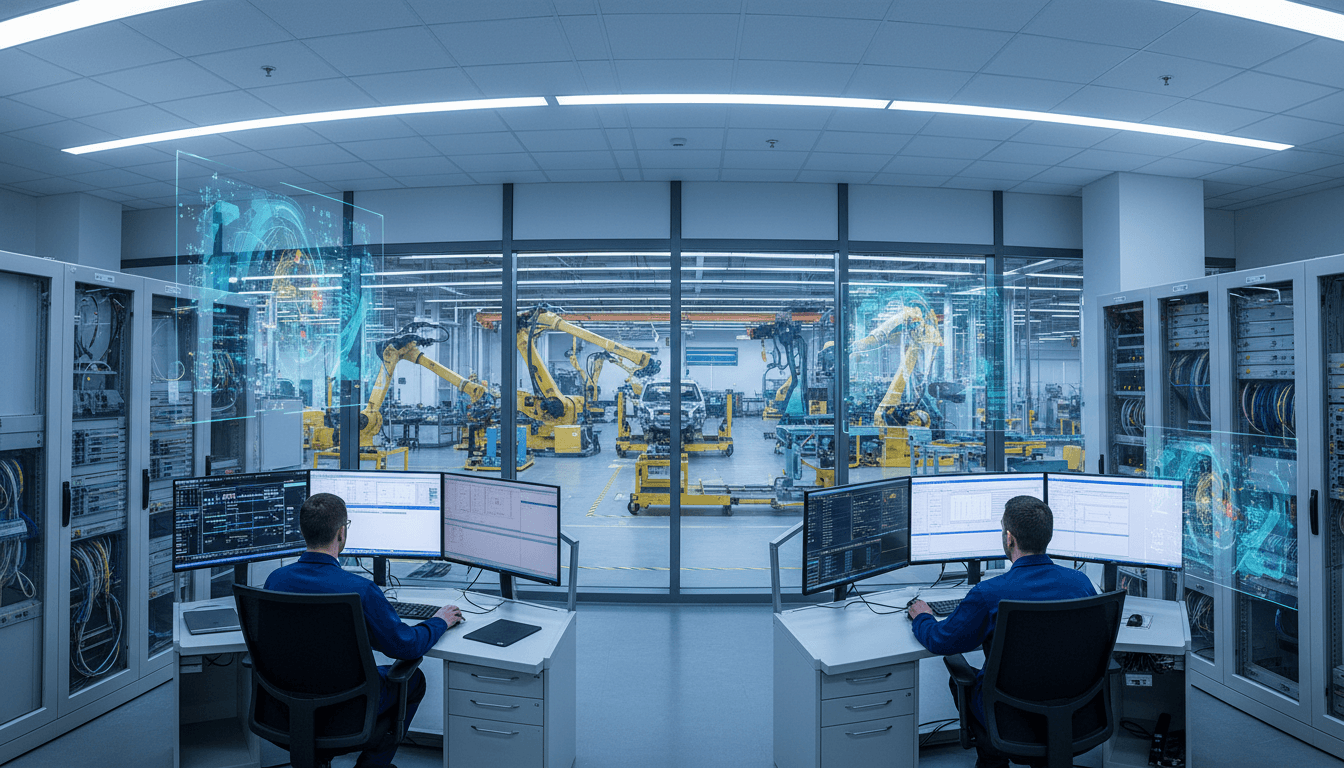

A control room doesn’t care that your AI demo looked amazing in a board meeting. It cares that outputs are predictable, explainable, and safe—every minute of every shift. That’s why AI in operational technology (OT) is tricky: many modern AI systems are built for probabilistic reasoning and fast iteration, while OT environments are built for deterministic behavior and long asset lifecycles.

The timing is telling. In December 2025, a joint advisory from government partners laid out principles for secure AI integration in OT—understand AI, assess AI use, establish governance, and embed safety and security. I agree with the direction. I also think most organizations are underestimating the work required to make those principles real on a plant floor.

This post is part of our AI in Cybersecurity series, focused on how AI can strengthen threat detection and prevention. Here’s the stance I’ll defend: AI can help secure OT—but only after you build trust in your data and your devices, and only when you treat models as safety-relevant components with lifecycle controls.

The core mismatch: nondeterministic AI vs deterministic OT

AI in OT fails first at the seams: OT expects repeatability; many AI models don’t guarantee it. Large language models (LLMs) and agentic systems can produce different outputs for the same prompt, can “hallucinate,” and can degrade over time through drift. That’s normal in consumer and IT contexts. In OT, it’s a problem.

If your AI is advising on:

- chemical dosing rates,

- pressure thresholds,

- machine torque or feed rates,

- safety interlocks or maintenance overrides,

…then “usually correct” isn’t good enough. You need bounded behavior.

Drift is not a bug—it’s a property

OT processes evolve slowly, but they do evolve: new suppliers, different ambient conditions, equipment wear, minor control tuning, even seasonal variation. AI models, especially those trained on historical distributions, can drift out of alignment with the live process without anyone touching the model itself.

A practical rule I’ve found useful: if you can’t specify how you’ll detect drift and what you’ll do when it happens, you’re not ready to deploy the model.
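
One way to make that rule concrete is to pair a drift score with actions agreed in advance. The sketch below uses the Population Stability Index (PSI), a common distribution-shift measure. The 0.1 and 0.25 cutoffs are widely quoted rules of thumb, not values from this post, and the action names are hypothetical placeholders for whatever your site procedure says.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp into the edge bins
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-4) for c in counts]

    b, c = fractions(baseline), fractions(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


def drift_action(score: float, warn: float = 0.1, act: float = 0.25) -> str:
    """Map a drift score to a pre-agreed action (names are illustrative)."""
    if score >= act:
        return "fall_back_to_static_thresholds"
    if score >= warn:
        return "flag_for_review"
    return "ok"
```

The point is less the statistic than the pairing: every score range has an owner and an action before the model goes live.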

Explainability isn’t academic in OT

OT operators need to understand why an alert fired. If they can’t interpret it quickly, they’ll ignore it—or worse, take the wrong action.

In safety-adjacent environments, explainability becomes operational:

- What sensor or tag drove the alert?

- What baseline is being used?

- What’s the confidence and what’s the failure mode?

- What’s the safe action if the model is wrong?

If your AI can’t answer those questions, it shouldn’t be in the loop.
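
Those four questions can be enforced as a schema check before an alert ever reaches an operator. This is a minimal sketch, assuming a hypothetical `OtAlert` record; the field names are mine, not from any product.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class OtAlert:
    driving_tag: str    # which sensor or tag drove the alert
    baseline: str       # which baseline the model compared against
    confidence: float   # model confidence, expected in [0.0, 1.0]
    failure_mode: str   # how this detector is known to be wrong
    safe_action: str    # what the operator should do if the model is wrong


def unanswered(alert: OtAlert) -> List[str]:
    """Return the names of fields that leave an operator question unanswered."""
    gaps = []
    for f in fields(alert):
        value = getattr(alert, f.name)
        if isinstance(value, str) and not value.strip():
            gaps.append(f.name)
    if not 0.0 <= alert.confidence <= 1.0:
        gaps.append("confidence")
    return gaps
```

An alert with a non-empty result from `unanswered` would be held back or routed to the security team rather than shown in the control room.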

Trust starts with devices: AI is only as reliable as its inputs

Most AI discussions start with models. OT security reality starts with device identity and data integrity.

AI depends on trustworthy telemetry: sensor readings, PLC states, historian logs, engineering workstation changes, and network traffic. If an attacker can spoof any of that, AI becomes an amplifier for bad decisions.

Here’s the uncomfortable truth: many OT environments still struggle with basic authenticity controls—firmware verification, signed updates, attestation, and asset identity at scale. When those foundations are missing, “AI governance” becomes paperwork.

Minimum trust foundation for AI in OT

If you’re planning AI-driven threat detection or AI-assisted operations in OT, treat these as gating requirements:

- Cryptographic device identity

  - Unique identity per device (not shared credentials)

  - Strong provenance from manufacturing through commissioning

- Signed and verifiable firmware and updates

  - Code signing enforced where possible

  - Controlled update paths (including vendor tooling)

- Attestation (when feasible)

  - Evidence a device is running known-good firmware/config

  - Detects tampering that could poison telemetry

- Lifecycle governance for keys and credentials

  - Rotation policies that match OT realities (planned outages)

  - Automated inventory of certificates/keys to avoid manual drift

- Supply chain verification artifacts

  - Software bills of materials (SBOMs)

  - Cybersecurity bills of materials (CBOMs)

These aren’t “nice to haves.” They’re how you keep AI from learning from lies.
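
To illustrate the "rotation policies that match OT realities" item, here is a rough sketch that lines up certificate expiry dates with planned outages. The device names and dates in the usage example are invented, and picking the last outage before expiry is one possible policy, not a recommendation from any standard.

```python
from datetime import date
from typing import Dict, List, Optional


def plan_rotations(expiry: Dict[str, date], outages: List[date],
                   today: date) -> Dict[str, Optional[date]]:
    """Map each device to the last planned outage before its certificate expires.

    None means no planned outage falls between today and expiry, so the
    rotation needs an out-of-cycle window.
    """
    plan: Dict[str, Optional[date]] = {}
    for device, expires in expiry.items():
        usable = [o for o in outages if today <= o < expires]
        plan[device] = max(usable) if usable else None
    return plan
```

For example:

```python
plan = plan_rotations(
    {"plc-01": date(2026, 10, 1), "rtu-07": date(2026, 2, 1)},
    outages=[date(2026, 3, 1), date(2026, 9, 1)],
    today=date(2026, 1, 10),
)
# plc-01 is scheduled for the September outage; rtu-07 has no usable window.
```

The devices that come back as `None` are the ones an automated inventory is meant to surface early.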

Snippet-worthy truth: In OT, the AI problem is often a device identity problem wearing a new label.

Cloud-dependent AI collides with OT connectivity (and governance)

A lot of AI products assume continuous outbound access for updates, telemetry export, and model improvements. OT networks often can’t support that—and in many facilities, they never should.

This creates three hard constraints:

1) You can’t rely on always-on vendor update paths

Even if your AI is deployed “on-prem,” many solutions still depend on cloud services for retraining, tuning, or feature updates. OT teams may not allow persistent outbound connectivity, and change windows can be quarterly or annual.

2) Model lifecycle must match asset lifecycle

OT assets can run for decades. AI models typically don’t. If you deploy a model, you need a plan for:

- retraining cadence,

- validation and rollback,

- version pinning,

- long-term support,

- knowledge transfer when staff changes.

If the vendor can’t support that, you’ll inherit long-lived architectural debt.
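
Version pinning and rollback do not need heavy tooling to get started. The sketch below is a hypothetical in-memory registry (the class and method names are mine): a model artifact can only be activated if its SHA-256 digest matches what was approved, and rollback returns to the previously active version.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModelRegistry:
    pinned: Dict[str, str] = field(default_factory=dict)  # version -> approved SHA-256
    active: List[str] = field(default_factory=list)       # activation history, newest last

    def approve(self, version: str, artifact: bytes) -> None:
        """Record the digest of an artifact that passed validation."""
        self.pinned[version] = hashlib.sha256(artifact).hexdigest()

    def activate(self, version: str, artifact: bytes) -> None:
        """Activate a version only if the artifact matches its pinned digest."""
        digest = hashlib.sha256(artifact).hexdigest()
        if self.pinned.get(version) != digest:
            raise ValueError(f"artifact for {version} does not match pinned digest")
        self.active.append(version)

    def rollback(self) -> str:
        """Drop the current version and return the one that is active again."""
        if len(self.active) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.active.pop()
        return self.active[-1]
```

A digest check is an integrity control, not a signature. In a real deployment the approved digests would themselves need to be signed or stored somewhere an attacker on the OT network cannot rewrite.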

3) Governance must include data boundaries

AI governance in OT can’t just be about “acceptable use.” It must define:

- what data leaves the plant (if any),

- how it’s anonymized or minimized,

- who can query the model,

- how prompts and outputs are logged,

- how incident response works when AI is involved.

If you can’t audit it, you can’t trust it.
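
For the "how prompts and outputs are logged" item, one simple pattern is a hash-chained log, where each entry commits to the one before it so later edits are detectable. This is a minimal sketch using only the standard library; the entry fields are assumptions, and a production log would also need protected storage and timestamps.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64
_FIELDS = ("user", "prompt", "output", "prev")


def _digest(entry: Dict[str, str]) -> str:
    """Hash the entry body (everything except the stored hash itself)."""
    body = {k: entry[k] for k in _FIELDS}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, str]], user: str, prompt: str, output: str) -> None:
    """Append a prompt/output record linked to the previous entry's hash."""
    entry = {"user": user, "prompt": prompt, "output": output,
             "prev": log[-1]["hash"] if log else GENESIS}
    entry["hash"] = _digest(entry)
    log.append(entry)


def verify(log: List[Dict[str, str]]) -> bool:
    """Return True only if every entry is intact and correctly chained."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(entry):
            return False
        prev = entry["hash"]
    return True
```

Editing any earlier prompt or output breaks verification for that entry and everything after it, which is the property incident responders need when AI was involved in a decision.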

How attackers will use AI against OT (and what defenders should do now)

Attackers don’t need to “hack the AI” to benefit from AI. They can use AI to:

- scale vulnerability research,

- improve phishing and social engineering against engineering teams,

- generate malware variants faster,

- automate reconnaissance and lateral movement playbooks,

- craft convincing operator-facing artifacts (reports, tickets, even fake runbooks).

The OT-specific nightmare scenario is subtle: attacker actions masked by normal-looking operator screens. This is not hypothetical. Stuxnet, long before today’s AI tooling, replayed recorded normal readings to operators while it manipulated the process underneath.

Defensive posture: assume AI increases attacker speed

If threat actors can use AI to accelerate discovery and exploitation, defenders need more layers—especially in critical infrastructure.

A realistic, high-value stack looks like this:

- Prevention: network segmentation, allowlisting where possible, secure remote access, hardened engineering workstations

- Detection: OT network detection and response (NDR), passive monitoring, integrity monitoring of critical endpoints

- Response: practiced playbooks, offline procedures, safe shutdown paths

- Deception: decoy assets and honeytokens tuned to OT protocols

- Recovery: tested backups, known-good images, and restoration processes aligned to safety requirements

One-liner you can reuse internally: If AI makes attackers faster, your controls need to be layered—not just smarter.

Where AI helps in OT without adding reckless risk

AI has a place in OT security, but it’s not where vendors often start.

The safest, most practical win is anomaly detection built on traditional machine learning and deployed in passive monitoring. Passive matters because a listen-only deployment reduces the chance you’ll disrupt control operations or introduce new attack paths.

A pragmatic “start here” use case: passive anomaly detection

Done well, ML-based anomaly detection can spot:

- new or rare OT protocol commands,

- unexpected PLC program downloads,

- unusual engineering workstation behavior,

- lateral movement between OT zones,

- changes in historian data patterns that suggest spoofing or manipulation.

This aligns tightly with the broader AI in cybersecurity theme: using AI to analyze anomalies and automate triage, not to improvise decisions in safety-critical loops.
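
The first item on that list, new or rare protocol commands, can be illustrated without any ML at all. The sketch below is plain frequency baselining, which I am using as a stand-in for a trained model; the `Command` fields and the `rare_below` cutoff are assumptions for illustration.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Command(NamedTuple):
    src: str        # source host
    dst: str        # destination controller
    function: str   # protocol function, e.g. a read, write, or program download


def build_baseline(history: Iterable[Command]) -> Counter:
    """Count how often each (src, dst, function) combination was seen passively."""
    return Counter(history)


def classify(cmd: Command, baseline: Counter, rare_below: int = 3) -> str:
    """Label a command as new, rare, or known relative to the baseline."""
    seen = baseline[cmd]  # Counter returns 0 for unseen keys
    if seen == 0:
        return "new"
    return "rare" if seen < rare_below else "known"
```

Because it only reads mirrored traffic, a check like this never sends a packet toward a controller. A real detector would add time windows and protocol-aware parsing on top.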

How to keep anomaly detection from burning out operators

OT teams already deal with alarm fatigue. AI alerts that can’t be explained quickly will get tuned out.

Design requirements that reduce operational friction:

- Alert on “new, risky, and actionable,” not “different.”

- Attach context: affected tags, protocol, source/destination, change history, comparable prior events.

- Provide a recommended action tier: observe, verify, contain, escalate.

- Make it easy to suppress known-benign patterns with approval and audit trails.

If your AI can’t reduce workload, it isn’t helping.
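
The action tiers are easier to argue about once they are written down as rules. Here is a deliberately simple sketch; the three inputs and the mapping are hypothetical and would need to come from your own risk assessment.

```python
from enum import Enum


class Tier(Enum):
    OBSERVE = "observe"
    VERIFY = "verify"
    CONTAIN = "contain"
    ESCALATE = "escalate"


def recommend_tier(is_new: bool, touches_control_logic: bool, crosses_zone: bool) -> Tier:
    """Pick an action tier from three yes/no facts about the event."""
    if not is_new:
        return Tier.OBSERVE  # previously seen behaviour: keep watching, do not page anyone
    risk = int(touches_control_logic) + int(crosses_zone)
    return (Tier.VERIFY, Tier.CONTAIN, Tier.ESCALATE)[risk]
```

Rules this blunt are easy to explain in a shift handover, which is the property that matters most here.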

A workable roadmap: secure AI integration for OT teams

Most companies get this wrong by starting with model selection. Start with scope and safety.

Step 1: Classify the AI’s role (advisory, in-the-loop, or on-the-loop)

Write it down in plain language:

- Is the AI advisory (suggests, flags, summarizes)?

- Is it in-the-loop (influences settings, tickets, or operator actions)?

- Is it on-the-loop (can act, but under strict supervision and rollback)?

If it’s anywhere near in-the-loop for control decisions, require formal safety review.

Step 2: Define trust boundaries and data quality gates

Before training or deployment:

- Identify authoritative data sources.

- Specify how you’ll detect spoofing or tampering.

- Set minimum quality thresholds (missingness, latency, outliers).

- Log and quarantine suspect inputs.
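
The quality thresholds above can be sketched as a gate that runs on each window of data before it reaches the model. The default limits here are placeholders, not recommendations, and the outlier test (distance from the median in units of median absolute deviation) is one reasonable choice among several.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class Gate:
    max_missing_fraction: float = 0.05
    max_latency_s: float = 2.0
    max_outlier_fraction: float = 0.01
    outlier_mads: float = 6.0  # how many MADs from the median counts as an outlier


def check_window(values: Sequence[Optional[float]], latencies_s: Sequence[float],
                 gate: Gate = Gate()) -> List[str]:
    """Return the names of the quality gates this window fails (empty means pass)."""
    failures = []
    present = [v for v in values if v is not None]
    if 1 - len(present) / len(values) > gate.max_missing_fraction:
        failures.append("missingness")
    if max(latencies_s) > gate.max_latency_s:
        failures.append("latency")
    if present:
        med = median(present)
        mad = median(abs(v - med) for v in present) or 1e-9  # avoid divide-by-zero
        outliers = sum(abs(v - med) / mad > gate.outlier_mads for v in present)
        if outliers / len(present) > gate.max_outlier_fraction:
            failures.append("outliers")
    return failures
```

A window that fails any gate would be logged and quarantined rather than scored, so the model never sees it.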

Step 3: Build model lifecycle controls like you would for safety systems

Your AI system needs lifecycle discipline:

- versioned models and datasets,

- test suites against known scenarios,

- drift monitoring and retraining triggers,

- rollback procedures,

- change management aligned to OT maintenance windows.
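
The "test suites against known scenarios" item works like a regression gate: a candidate model is only promoted if it still behaves correctly on every scenario you already understand. A minimal sketch, assuming a model is any callable that takes a feature dictionary and returns whether it would alert:

```python
from typing import Callable, Dict, List, Sequence, Tuple

Scenario = Tuple[str, Dict[str, float], bool]  # (name, input features, alert expected?)
Model = Callable[[Dict[str, float]], bool]


def failed_scenarios(model: Model, scenarios: Sequence[Scenario]) -> List[str]:
    """Return the names of scenarios where the model disagrees with the expected result."""
    return [name for name, features, expected in scenarios
            if model(features) != expected]


def may_promote(model: Model, scenarios: Sequence[Scenario]) -> bool:
    """A candidate is promotable only if it passes every known scenario."""
    return not failed_scenarios(model, scenarios)
```

The scenario list becomes a shared asset between OT and security: every incident or near miss adds a case the next model version has to pass.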

Step 4: Test for “operator trust,” not just accuracy

Accuracy metrics won’t save you if the control room doesn’t trust the output.

Run tabletop exercises and live pilots focused on:

- time-to-interpret,

- false positive cost,

- failure mode behavior,

- clarity of recommended actions,

- handoff between OT, IT, and security.

Step 5: Make segmentation and access control non-negotiable

AI won’t compensate for flat networks.

At minimum:

- segment OT zones and conduits,

- tightly control remote access,

- restrict vendor pathways,

- monitor east-west traffic,

- separate AI monitoring infrastructure from control paths.

This is where AI supports the program: it helps you detect when segmentation is being tested or bypassed.
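
Detecting a bypass starts with knowing which zone-to-zone paths are allowed. The sketch below checks observed flows against a conduit allowlist; the zone names, hosts, and allowlist in the usage example are invented.

```python
from typing import Dict, List, Set, Tuple

Flow = Tuple[str, str]  # (source host, destination host)


def conduit_violations(flows: List[Flow], zone_of: Dict[str, str],
                       allowed: Set[Tuple[str, str]]) -> List[Flow]:
    """Return flows that cross zones over a conduit that is not on the allowlist."""
    violations = []
    for src, dst in flows:
        src_zone = zone_of.get(src, "unknown")
        dst_zone = zone_of.get(dst, "unknown")
        if src_zone == dst_zone and src_zone != "unknown":
            continue  # traffic inside one known zone is out of scope for this check
        if (src_zone, dst_zone) not in allowed:
            violations.append((src, dst))
    return violations
```

For example:

```python
zone_of = {"hist1": "dmz", "scada1": "supervisory", "plc1": "control"}
allowed = {("dmz", "supervisory"), ("supervisory", "control")}
flows = [("hist1", "scada1"), ("scada1", "plc1"), ("hist1", "plc1"), ("laptop9", "plc1")]
conduit_violations(flows, zone_of, allowed)
# flags the DMZ-to-control flow and the flow from the unknown laptop
```

Hosts missing from the inventory are treated as violations on purpose, which ties this check back to the asset identity work described earlier.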

What to do next (if you’re trying to buy or build this in 2026)

AI in OT is a double-edged sword: it can strengthen threat detection, but it can also introduce new attack surfaces and new kinds of operational failure.

If you’re evaluating AI for OT security this quarter, I’d start with two questions:

- Can we prove the integrity of the data feeding the model?

- Can operators understand and trust outputs under pressure?

If the answer to either is “not yet,” focus on foundations—identity, signing, segmentation, passive monitoring—then deploy AI where it reduces risk rather than creates it.

The bigger question for the year ahead: Will your OT AI strategy create measurable resilience, or will it become another long-lived system you’re afraid to touch?