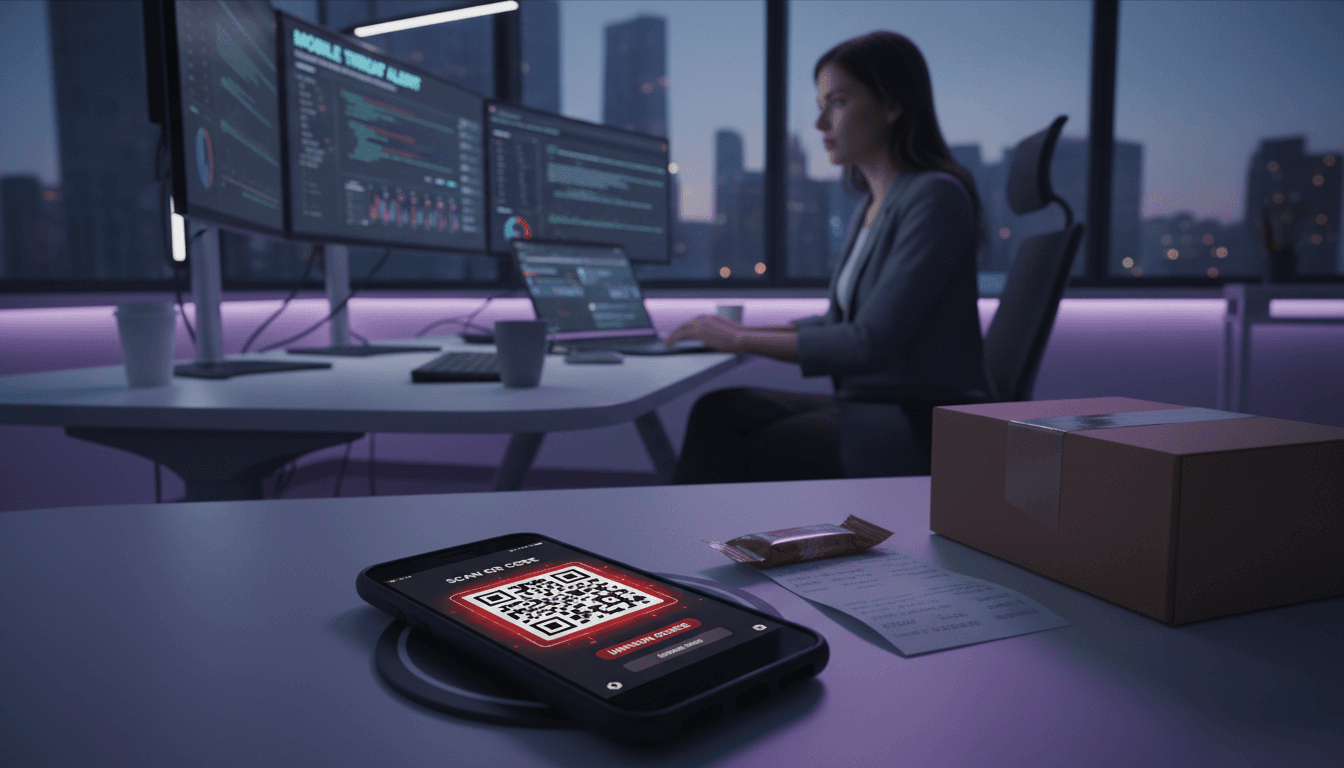

QR phishing is fueling DocSwap Android malware via fake delivery apps. Learn how AI-powered threat detection spots QR lures and suspicious app behavior early.

AI Defense Against QR Phishing and Fake Delivery Apps

QR codes have quietly become one of the most reliable ways to move people from skeptic mode to tap-install mode. That’s exactly why the North Korea–linked threat actor Kimsuky is pushing DocSwap Android malware through QR phishing pages disguised as delivery tracking and “security verification” flows.

Most security programs still treat QR codes like harmless shortcuts to a website. Attackers treat them like a channel switch: get a user to open a link on a desktop, then nudge them onto a mobile device where controls are weaker, visibility is lower, and “just install the app” feels normal—especially during the December shipping rush.

This post breaks down what’s happening in the DocSwap campaign and, more importantly, how AI-powered threat detection can spot QR phishing patterns, deceptive Android app behavior, and state-sponsored tradecraft early enough to stop the damage.

What the DocSwap campaign gets right (from an attacker’s view)

Answer first: DocSwap succeeds because it mixes QR redirection, fake “identity checks,” and realistic delivery-app decoys to make risky Android installation steps feel legitimate.

The campaign attributed to Kimsuky uses phishing sites that mimic a major South Korean logistics brand, then steers victims into installing an Android APK that ultimately loads a second, encrypted payload—an updated DocSwap variant.

A few details matter for defenders:

QR codes aren’t the payload—they’re the steering wheel

The QR code is doing something subtle: it’s not merely linking to a malicious download. It’s creating a handoff:

- A user reaches a booby-trapped URL (often via smishing or phishing).

- If the user is on desktop, the page shows a QR code to scan.

- Scanning moves the user to an Android device where the attacker can pressure them to install an app.

That’s a visibility killer. Many email security tools see the original URL but don’t follow the QR-based branch. Many SOC processes also struggle to correlate “desktop click” + “mobile install” as a single attack chain.

The “security module” trick targets human instincts

Android warns users when installing from unknown sources. Kimsuky’s response is a story that sounds official: install this module to verify your identity due to customs security policies. That’s not sophisticated malware engineering—it’s sophisticated persuasion.

The malware behavior is designed to look normal while it escalates

The malicious delivery app uses decoys like OTP-style screens and then opens a legitimate tracking page in a WebView to reduce suspicion. Meanwhile, it connects to attacker infrastructure and enables a wide range of remote access commands—keystrokes, audio capture, camera usage, file ops, location, and harvesting contacts/SMS/call logs.

The reality? If you’re only looking for “known bad APK hashes,” you’re late.

Why QR phishing is spiking—and why December is prime time

Answer first: QR phishing works because it exploits attention scarcity, mobile habits, and the logistics-and-delivery context people already trust.

December is a perfect storm:

- Employees are traveling and working from personal devices more often.

- Delivery notifications are constant, so messages blend into the noise.

- IT support coverage is thinner around holidays.

Add the attacker’s QR tactic and you get a clean bypass of classic “hover over the link” advice. On a phone camera screen, there’s no hovering. There’s scanning and acting.

A stance I’ll defend: QR codes should be treated as untrusted input by default, the same way you treat email attachments.
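Treating QR codes as untrusted input starts with checking the decoded payload before anyone acts on it. Here is a minimal Python sketch. It assumes your scanner or mail gateway has already decoded the QR image to a string. `example-logistics.com` is a placeholder allowlist entry, and the checks are illustrative heuristics rather than a complete filter:

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical allowlist: replace with the carrier and brand domains you actually trust.
TRUSTED_DOMAINS = {"example-logistics.com"}


def triage_qr_payload(payload: str) -> list[str]:
    """Return reasons a decoded QR payload is risky; an empty list means no flags."""
    parsed = urlparse(payload.strip())
    if parsed.scheme not in ("http", "https"):
        # intent://, sms:, tel:, etc. are never expected from a delivery notice
        return [f"non-web scheme: {parsed.scheme or 'none'}"]

    reasons = []
    if parsed.scheme == "http":
        reasons.append("plain http")
    host = (parsed.hostname or "").lower()
    try:
        ipaddress.ip_address(host)
        reasons.append("raw IP host")
    except ValueError:
        pass  # not an IP literal, which is the normal case
    if not any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS):
        reasons.append("domain not on allowlist")
    if parsed.port not in (None, 80, 443):
        reasons.append(f"unusual port {parsed.port}")
    if parsed.path.lower().endswith(".apk"):
        reasons.append("direct APK download")
    return reasons
```

With this sketch, `http://203.0.113.7:8080/track/app.apk` trips all five URL checks. An HTTPS link to an allowlisted tracking host returns an empty list.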

Where AI-powered threat detection actually helps (and where it doesn’t)

Answer first: AI helps most when it’s used for correlation and behavior—connecting scattered signals into one story and flagging app actions that don’t match the user’s intent.

“Use AI” is not a plan. Here’s what AI does well against campaigns like DocSwap.

1) AI correlation: stitching desktop-to-mobile attack paths

The DocSwap chain is fragmented by design. You might see:

- An inbound smishing or email

- A short-lived phishing domain

- A desktop visit that shows a QR code

- A mobile visit that triggers an APK download

- Post-install network beacons to a command server

AI-driven correlation (often built into modern XDR and email security platforms) can connect these into a single incident graph:

- Same recipient + same lure theme (“delivery”, “tracking”, “customs verification”)

- Same domain family / hosting patterns

- Same infrastructure (IP, TLS fingerprints, ASN patterns)

- Similar redirect logic (User-Agent checks, tracking.php-style gating)

That correlation is the difference between “a user installed an app” and “this is an active QR phishing campaign with malware staging.”
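You can sketch the stitching as union-find over shared indicators: any two events that share a linking key end up in the same incident. The event fields (`domain`, `ip`, `recipient`, `lure`) and all example values below are hypothetical. A real XDR platform would also weight keys instead of treating every match as a hard link:

```python
from collections import defaultdict

# Hypothetical linking keys. Real platforms also use TLS fingerprints, ASNs, and redirect logic.
LINK_KEYS = {
    "domain": lambda e: e.get("domain"),
    "ip": lambda e: e.get("ip"),
    "recipient+lure": lambda e: (
        (e["recipient"], e["lure"]) if e.get("recipient") and e.get("lure") else None
    ),
}


def correlate(events: list[dict]) -> list[list[str]]:
    """Group events into incidents: events that share any linking key are merged."""
    parent = {e["id"]: e["id"] for e in events}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    first_seen = {}  # (key name, key value) -> first event id carrying it
    for e in events:
        for name, extract in LINK_KEYS.items():
            value = extract(e)
            if value is None:
                continue
            if (name, value) in first_seen:
                union(first_seen[(name, value)], e["id"])
            else:
                first_seen[(name, value)] = e["id"]

    groups = defaultdict(list)
    for e in events:
        groups[find(e["id"])].append(e["id"])
    return list(groups.values())


# Hypothetical events from different telemetry sources.
events = [
    {"id": "sms-1", "recipient": "alice", "lure": "delivery", "domain": "parcel-check.example"},
    {"id": "desktop-1", "recipient": "alice", "domain": "parcel-check.example", "ip": "198.51.100.10"},
    {"id": "mobile-apk-1", "ip": "198.51.100.10"},
    {"id": "beacon-1", "ip": "198.51.100.10"},
    {"id": "other-1", "recipient": "bob", "lure": "invoice", "domain": "other.example"},
]
print(correlate(events))
# [['sms-1', 'desktop-1', 'mobile-apk-1', 'beacon-1'], ['other-1']]
```

The SMS, the desktop visit, the APK download, and the beacon collapse into one incident because they share a domain or an IP. The unrelated invoice lure stays separate.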

2) Behavioral analysis: the app doesn’t behave like a delivery app

A real delivery app doesn’t need to:

- Request permissions to manage external storage immediately

- Attempt to install additional packages

- Register persistent background services that keep running after the user stops interacting with the app

- Connect to a suspicious high-port command channel

- Expose broad RAT-like command surfaces

AI-based mobile threat defense (MTD) and endpoint behavioral engines can flag intent mismatch:

“If the app’s declared purpose is tracking parcels, but it activates microphone/camera routines, enumerates SMS, and maintains a background service, treat it as hostile.”

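This is where ML models trained on benign vs malicious Android behavior tend to hold up better than rule-only approaches. The advantage is clearest when attackers rename packages and rotate droppers, because the models key on what the app does rather than what it is called.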
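Part of that intent check can run statically on the app’s manifest. The Python sketch below assumes you already have a decoded, plain-text AndroidManifest.xml. APKs ship the manifest in binary form, so you need a decoder such as apktool first. The permission list and the threshold of three are illustrative choices rather than a vetted rule. The sketch does not cover runtime behavior such as background services:

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "{http://schemas.android.com/apk/res/android}"

# Illustrative list: permissions a parcel-tracking app rarely needs, and what each enables.
OUT_OF_CHARACTER = {
    "android.permission.REQUEST_INSTALL_PACKAGES": "install additional packages",
    "android.permission.MANAGE_EXTERNAL_STORAGE": "broad file access",
    "android.permission.RECORD_AUDIO": "microphone",
    "android.permission.CAMERA": "camera",
    "android.permission.READ_SMS": "read SMS",
    "android.permission.READ_CALL_LOG": "read call logs",
    "android.permission.READ_CONTACTS": "read contacts",
    "android.permission.RECEIVE_BOOT_COMPLETED": "start at boot (persistence)",
}


def intent_mismatch(manifest_xml: str, threshold: int = 3) -> tuple[bool, list[str]]:
    """Flag a 'delivery tracking' app whose requested permissions exceed what tracking needs."""
    root = ET.fromstring(manifest_xml)
    # android:name is a namespaced attribute, so ElementTree exposes it as "{ns}name".
    requested = {el.get(ANDROID_NS + "name") for el in root.iter("uses-permission")}
    findings = [f"{perm} ({why})" for perm, why in OUT_OF_CHARACTER.items() if perm in requested]
    return len(findings) >= threshold, findings
```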

3) Phishing detection: QR pages have fingerprints

Even when domains rotate, QR phishing pages often reuse:

- Template layouts and scripts

- Redirect trees and server-side User-Agent logic

- Repeated social-engineering phrases (“security module”, “verification”, “policy requirement”)

NLP models and computer vision models can classify these pages based on page text, structure, and even screenshot similarity.
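Text similarity is the easiest of these signals to illustrate. This Python sketch uses word-shingle Jaccard similarity as a simple stand-in for the NLP and screenshot models mentioned above. It assumes you keep the extracted text of previously confirmed phishing pages as templates. The template name and the 0.5 threshold are hypothetical:

```python
import re

# Phrases quoted in this post; extend with what your own incident history shows.
LURE_PHRASES = ("security module", "verification", "policy requirement")


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Word k-shingles of lower-cased page text (captures phrasing, not just vocabulary)."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match_template(page_text: str, templates: dict[str, str], threshold: float = 0.5):
    """Return (best matching template name or None, similarity score, lure phrases present)."""
    page = shingles(page_text)
    best_name, best_score = None, 0.0
    for name, template_text in templates.items():
        score = jaccard(page, shingles(template_text))
        if score > best_score:
            best_name, best_score = name, score
    phrases = [p for p in LURE_PHRASES if p in page_text.lower()]
    return (best_name if best_score >= threshold else None), best_score, phrases
```

Three-word shingles reward shared phrasing. Small edits to a reused kit lower the score only slightly, while pages that merely share a few keywords score near zero.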

A practical approach I’ve found works: treat “QR presented after desktop visit” as a high-risk feature and increase scrutiny automatically.
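One way to encode that rule is a fixed boost to whatever risk score a page already gets. In this Python sketch, the field names, the 0.3 boost, the thresholds, and the action names are all hypothetical tuning choices:

```python
from dataclasses import dataclass


@dataclass
class PageVisit:
    url: str
    device: str          # "desktop" or "mobile" (hypothetical field)
    shows_qr_code: bool  # output of whatever page analysis you already run
    base_score: float    # 0.0 to 1.0 from your existing URL/page classifier


def scrutiny_level(visit: PageVisit, qr_boost: float = 0.3) -> str:
    """Map a page visit to an action, treating a desktop-served QR code as high risk."""
    score = visit.base_score
    if visit.device == "desktop" and visit.shows_qr_code:
        score = min(1.0, score + qr_boost)  # the desktop-to-mobile handoff raises risk on its own
    if score >= 0.7:
        return "block_and_open_incident"
    if score >= 0.4:
        return "detonate_mobile_branch"
    return "allow"
```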

Where AI won’t save you by itself

AI won’t help if your organization:

- Allows unmanaged Android devices to access email and corporate chat

- Has no mobile app install controls

- Has no telemetry from DNS, email, and endpoints to correlate

- Treats “user-installed app” as a helpdesk issue rather than a security incident

AI needs signals. No signals, no detection.

A practical defense plan for QR phishing and Android malware

Answer first: Combine policy controls, mobile telemetry, and AI-assisted triage so QR lures get contained before installs spread.

This is the part security teams can implement without waiting for a “big platform refresh.”

1) Put QR codes into your phishing playbooks

Most phishing training still focuses on email links. Update guidance to include:

- Don’t scan QR codes from unexpected delivery/payment notices

- If you must verify a shipment, use the official app store listing or type the company name directly

- Treat “install a security module” as a red flag, always

Also add a SOC runbook step: when a phishing page shows a QR code, analyze both desktop and mobile branches.
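To analyze both branches, you can request the same URL once as a desktop browser and once as an Android browser, then compare what comes back. This standard-library Python sketch compares only the final URL and Content-Type, which is a simplification of full page diffing. The User-Agent strings are ordinary examples. Live requests to phishing infrastructure belong in an isolated sandbox:

```python
import urllib.request
from typing import Callable

# Ordinary example User-Agent strings; any realistic desktop/Android pair will do.
DESKTOP_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36")
ANDROID_UA = ("Mozilla/5.0 (Linux; Android 14; Pixel 8) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/120.0.0.0 Mobile Safari/537.36")
APK_MIME = "application/vnd.android.package-archive"

Fetcher = Callable[[str, str], tuple[str, str]]


def fetch(url: str, user_agent: str) -> tuple[str, str]:
    """Return (final URL after redirects, Content-Type). Run only from a sandbox."""
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.geturl(), resp.headers.get("Content-Type", "")


def compare_branches(url: str, fetcher: Fetcher = fetch) -> dict:
    """Fetch a URL as desktop and as Android; report UA gating and APK delivery."""
    desktop = fetcher(url, DESKTOP_UA)
    mobile = fetcher(url, ANDROID_UA)
    return {
        "ua_gated": desktop != mobile,
        "apk_on_mobile": APK_MIME in mobile[1] or mobile[0].lower().endswith(".apk"),
        "desktop": desktop,
        "mobile": mobile,
    }
```

Passing a stub `fetcher` keeps the comparison logic testable without touching live infrastructure.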

2) Reduce the blast radius with Android controls

If your workforce uses Android for work (BYOD or corporate-owned), enforce:

- Blocking installs from unknown sources (and preventing user override where possible)

- Play Protect and device integrity checks

- App allowlists for corporate profiles

- MDM/MAM policy that blocks “side-loaded” apps from accessing corporate email/chat

DocSwap relies on the user pushing through warnings. Don’t let policy be optional.
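Taken together, these controls amount to a conditional-access decision. Here is a minimal Python sketch of that gate. The `DeviceState` fields and package names are hypothetical stand-ins for what your MDM/MTD inventory reports. Real enforcement belongs in the MDM/MAM or identity provider, not in a script:

```python
from dataclasses import dataclass, field


@dataclass
class DeviceState:
    # Hypothetical fields; map them from whatever your MDM/MTD inventory actually reports.
    managed: bool
    unknown_sources_allowed: bool
    play_protect_enabled: bool
    integrity_passed: bool
    work_profile_apps: set[str] = field(default_factory=set)


def corporate_access_decision(device: DeviceState, allowlist: set[str]) -> tuple[bool, list[str]]:
    """Return (allow corporate email/chat, reasons for denial)."""
    reasons = []
    if not device.managed:
        reasons.append("unmanaged device")
    if device.unknown_sources_allowed:
        reasons.append("unknown-source installs enabled")
    if not device.play_protect_enabled:
        reasons.append("Play Protect disabled")
    if not device.integrity_passed:
        reasons.append("device integrity check failed")
    unapproved = sorted(device.work_profile_apps - allowlist)
    if unapproved:
        reasons.append("non-allowlisted apps in work profile: " + ", ".join(unapproved))
    return not reasons, reasons
```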

3) Watch for “decoy + RAT” patterns on mobile

DocSwap uses a decoy OTP flow and then opens a legitimate tracking page while a background service connects out. Detection opportunities include:

- Suspicious background services registered soon after install

- High-frequency permission prompts inconsistent with app category

- Network beacons to rare destinations and unusual ports

- WebView opening a legitimate domain immediately after entering a “delivery number”
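You can check those indicators against a per-app event timeline. This Python sketch assumes your MTD or EDR agent exposes install-relative events of roughly this shape. The event kinds, the five-minute window, the prompt limit, and the five-second WebView gap are hypothetical values to tune. “Unusual port” is simplified to anything other than 80 or 443:

```python
from dataclasses import dataclass


@dataclass
class Event:
    t: float       # seconds since the app was installed
    kind: str      # hypothetical kinds: service_registered, permission_prompt, connect, text_input, webview_load
    detail: str = ""
    port: int = 0  # only meaningful for "connect" events

COMMON_PORTS = {80, 443}


def decoy_rat_signals(events: list[Event], window: float = 300.0, prompt_limit: int = 3) -> list[str]:
    """Return which decoy + RAT indicators appear within `window` seconds of install."""
    early = sorted((e for e in events if e.t <= window), key=lambda e: e.t)
    signals = []
    if any(e.kind == "service_registered" for e in early):
        signals.append("background service soon after install")
    if sum(e.kind == "permission_prompt" for e in early) > prompt_limit:
        signals.append("burst of permission prompts")
    if any(e.kind == "connect" and e.port not in COMMON_PORTS for e in early):
        signals.append("outbound connection on unusual port")
    # Decoy pattern: user types a "delivery number", then a WebView immediately loads a real site.
    for i, e in enumerate(early):
        if e.kind == "text_input" and any(
            n.kind == "webview_load" and 0 <= n.t - e.t <= 5 for n in early[i + 1:]
        ):
            signals.append("WebView opened right after delivery-number entry")
            break
    return signals
```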

AI-driven anomaly detection can reduce noise here by learning normal mobile app behavior in your environment (what’s typical for your users, your region, your carrier networks).
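A simple, non-ML stand-in for that learned baseline is destination prevalence: how many devices in your fleet contacted each destination during a baseline period. In this Python sketch, the 1% cutoff and the example destinations are hypothetical. A production model would also account for factors such as region and carrier network:

```python
from collections import defaultdict


def learn_prevalence(connections: list[tuple[str, str]]) -> dict[str, int]:
    """Count how many distinct devices contacted each destination during the baseline period."""
    devices = defaultdict(set)
    for device_id, destination in connections:
        devices[destination].add(device_id)
    return {dest: len(ids) for dest, ids in devices.items()}


def rare_destinations(
    new_connections: list[tuple[str, str]],
    prevalence: dict[str, int],
    fleet_size: int,
    min_fraction: float = 0.01,
) -> list[str]:
    """Destinations contacted by fewer than min_fraction of the fleet in the baseline."""
    cutoff = max(1, int(fleet_size * min_fraction))
    return sorted({dest for _, dest in new_connections if prevalence.get(dest, 0) < cutoff})
```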

4) Automate response: contain first, investigate second

QR phishing campaigns move fast. If your SOC waits for full confirmation, you’ll be chasing infections across personal devices.

Good automation targets:

- Quarantine the message (email/SMS gateway where possible)

- Block the domain and known redirect infrastructure at DNS

- Push a mobile advisory to affected users (“If you installed X, disconnect and contact IT”)

- Trigger device-level scans via MTD/EDR

- Force credential resets for users who entered credentials into lookalike portals

Automation doesn’t replace analysts—it buys them time.
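The contain-first ordering can be written as a short orchestration loop that runs every step and records failures instead of stopping. In this Python sketch, the incident fields and action names are hypothetical. Each action would wrap your own email/SMS gateway, DNS, MTD/EDR, and identity APIs:

```python
from typing import Callable


def contain_qr_campaign(incident: dict, actions: dict[str, Callable[[list], None]]) -> list[str]:
    """Run containment steps in order; log failures but never stop early."""
    steps = [  # hypothetical incident fields feeding each containment action
        ("quarantine_message", incident.get("message_ids", [])),
        ("block_domains", incident.get("domains", [])),
        ("notify_users", incident.get("recipients", [])),
        ("scan_devices", incident.get("device_ids", [])),
        ("reset_credentials", incident.get("users_with_credential_entry", [])),
    ]
    log = []
    for name, targets in steps:
        if not targets:
            log.append(f"{name}: skipped (no targets)")
            continue
        try:
            actions[name](targets)
            log.append(f"{name}: ok ({len(targets)})")
        except Exception as exc:  # keep containing; analysts review failures afterwards
            log.append(f"{name}: FAILED ({exc})")
    return log
```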

“People also ask” quick answers (for busy teams)

How do you detect QR phishing in the enterprise?

Detect QR phishing by combining email/SMS analysis, URL redirection tracing, and page classification. AI helps by correlating desktop visits with later mobile downloads and spotting reused phishing templates.
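You can sketch redirect tracing with the standard library by disabling automatic redirects and following `Location` headers one hop at a time. This Python sketch only sees HTTP 3xx redirects; JavaScript and meta-refresh hops need a headless browser. The domains in the example are placeholders, and the code should run from a sandbox:

```python
import urllib.error
import urllib.request
from typing import Callable, Optional
from urllib.parse import urljoin


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # surface 3xx responses as HTTPError instead of following them


def location_of(url: str, user_agent: str = "Mozilla/5.0") -> Optional[str]:
    """Return the Location header of a 3xx response, or None if the URL does not redirect."""
    opener = urllib.request.build_opener(_NoRedirect)
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with opener.open(req, timeout=10):
            return None
    except urllib.error.HTTPError as err:
        if 300 <= err.code < 400:
            return err.headers.get("Location")
        raise


def trace_redirects(
    url: str,
    get_location: Callable[[str], Optional[str]] = location_of,
    max_hops: int = 10,
) -> list[str]:
    """Follow redirects hop by hop and return the full chain, stopping on loops."""
    chain = [url]
    for _ in range(max_hops):
        nxt = get_location(chain[-1])
        if not nxt:
            break
        nxt = urljoin(chain[-1], nxt)  # Location headers may be relative
        chain.append(nxt)
        if nxt in chain[:-1]:
            break  # redirect loop
    return chain
```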

Why are fake delivery apps so effective?

They match a real, frequent user behavior: tracking packages. Attackers exploit urgency and normalize installing apps outside official stores, especially when messages mimic local logistics brands.

What’s the fastest way to reduce risk from Android malware like DocSwap?

Block unknown-source installs, enforce MDM/MAM controls for corporate accounts, and deploy mobile threat defense telemetry that can flag RAT-like behavior immediately after installation.

What this means for the “AI in Cybersecurity” series

DocSwap is a clean example of why modern defense isn’t just about “better signatures.” Attackers are optimizing the workflow—how a person moves from message → device → install → trust.

AI in cybersecurity earns its keep when it does two things reliably: connects signals across channels and flags behavior that violates intent. QR phishing and fake delivery apps hit both of those strengths.

If you’re tightening budgets for 2026, here’s my blunt take: prioritize AI-powered detection where you have the biggest visibility gaps—mobile endpoints, redirect chains, and cross-channel correlation. That’s where campaigns like this thrive.

What would change in your organization if every QR-driven install attempt automatically opened an incident and triggered containment within five minutes?