GPT-5 bug bounty signals how AI security works in SaaS: tool permissions, data governance, and robust platforms. Use this checklist to harden AI services.

GPT-5 Bug Bounty Lessons for AI Security in SaaS

A “Just a moment…” page and a 403 Forbidden error doesn’t sound like cybersecurity news. But it’s a useful reminder: the most advanced AI systems in the U.S. still live inside ordinary web infrastructure—CDNs, WAF rules, authentication layers, rate limits, and bot defenses. And that’s exactly why a GPT-5 bio bug bounty call (even when the public page is briefly inaccessible) is a meaningful signal for anyone building digital services.

Bug bounties are one of the few security practices that consistently scale with product growth. They invite outside scrutiny, force crisp thinking about threat models, and build a habit that matters even more in AI: you don’t get to choose how people will try to break your system.

This post is part of our AI in Cybersecurity series, focused on how AI detects threats, prevents fraud, and automates security operations. Here, we’ll treat the GPT-5 bio bug bounty moment as a case study in AI security for SaaS: what it implies, why U.S. tech leaders keep investing in bounties, and how to run an AI bug bounty program that produces real risk reduction (not just PR).

Why an AI bug bounty matters more than a typical bounty

An AI bug bounty matters because the attack surface isn’t just code—it’s also behavior.

Traditional bug bounties look for issues like XSS, SSRF, IDOR, auth bypass, privilege escalation, and data exposure. Those still apply. But AI products add a second category: model-integrated risks. That includes prompt injection, tool/plugin abuse, data leakage through retrieval, and workflow manipulation in agentic systems.

The reality? Many teams treat these as “model problems” when they’re often product security problems:

- A prompt injection that triggers a tool call is basically an authorization failure.

- A model that reveals sensitive info from a retrieval index is often a data governance failure.

- An agent that can be tricked into emailing a file is typically a workflow isolation failure.

A bio-focused bounty (as implied by “bio bug bounty”) raises the bar even higher. It suggests special attention to misuse pathways where failures aren’t just embarrassing—they’re high-consequence.

Snippet-worthy point: AI security is application security plus behavior security, tied together by governance.

The “403 Forbidden” lesson: robust AI still depends on boring security

A 403 on a public page usually means access is blocked by a policy: a web application firewall rule, geofencing, bot mitigation, misconfigured permissions, or rate limiting. That’s not the core story, but it’s instructive.

AI services are increasingly delivered as U.S.-based SaaS platforms—APIs, consoles, and enterprise admin layers. Those platforms don’t just need model safety. They need the fundamentals:

- Identity and access management (SSO, MFA, least privilege)

- Network protections (WAF, DDoS controls)

- Secure API gateways and abuse throttling

- Logging, anomaly detection, and incident response

If you’re running AI features in production, this should sound familiar: model risk gets attention, while the “boring” web stack gets postponed. Most companies get this wrong. Attackers won’t.

AI security teams are blending disciplines

In 2025, the strongest U.S. security programs for AI products look less like a single “AI safety” group and more like a tight partnership among:

- Product security

- ML engineering

- Trust & safety

- Governance, risk, and compliance (GRC)

- Security operations (SecOps)

Bug bounties become the shared language between these groups because they produce concrete artifacts: proof-of-concepts, reproduction steps, and severity rationales.

What a “bio” AI bounty implies about risk and governance

A bio-oriented AI bug bounty implies a sharper threat model. In practical terms, it usually means the program is looking for weaknesses that could:

- Enable unsafe instructions or restricted guidance

- Bypass policy enforcement (“jailbreaks” are the simplistic label; enforcement bypass is the real issue)

- Exfiltrate sensitive information (system prompts, hidden rules, proprietary data)

- Misuse tools or agents to take actions beyond intent

- Degrade monitoring and detection (e.g., evasion of abuse classifiers)

This is where AI governance stops being a slide deck and becomes engineering work. If you can’t clearly define:

- what the system must refuse,

- what it may do with tools,

- what data it can access,

- and how you’ll detect misuse,

then you can’t meaningfully reward findings because you can’t reliably score their impact.

The best AI bug bounties pay for “failure chains,” not tricks

A high-quality report rarely looks like “I got it to say something weird.” It looks like a chain:

- Prompt injection leads to a hidden instruction being followed.

- That triggers an external tool call.

- The tool call is insufficiently scoped.

- The output is stored or shared in a way that leaks data.

That chain is a measurable product risk. It’s the kind of thing bounties should reward heavily.

Snippet-worthy point: Pay for exploit paths that cross boundaries—model → tool → data → action.

How U.S. digital service leaders design AI bug bounty programs

An AI bug bounty program works when it’s treated as a pipeline into engineering, not as an inbox.

Here’s what I’ve found works in real teams: tight scope, clear rules, and fast triage. Otherwise, you’ll drown in low-signal submissions.

1) Define scope like an engineer, not a marketer

Researchers need to know exactly what’s in scope:

- Specific products (API, chat UI, enterprise console)

- Environments (prod vs. staging)

- Model families and features (memory, file upload, browsing, tool use)

- Data boundaries (what counts as sensitive)

If the program is bio-focused, the abuse scope should be explicit too: what misuse scenarios are allowed to test, what’s prohibited, and how to report safely.

2) Write severity guidelines that fit AI

CVSS alone doesn’t capture “the model followed a hidden instruction and emailed a file.” You need a severity rubric that accounts for:

- Impact (data exposure, unauthorized action, policy bypass)

- Exploitability (repeatable, scalable, works across accounts)

- Blast radius (single session vs. multi-tenant)

- Detectability (would your monitoring catch it?)

A practical approach is to map AI issues into familiar buckets:

- Authorization failures (tooling scope, permission checks)

- Data exposure (RAG indices, logs, transcripts)

- Integrity failures (tampering with workflows or outputs)

- Abuse enablement (ways to scale harmful use)

3) Engineer “secure-by-default” tool use

Agentic AI is where bounty findings get expensive fast.

If a model can call tools (email, file systems, ticketing, cloud resources), treat every tool call like an API request from an untrusted client:

- Require explicit user confirmation for high-impact actions

- Use least-privileged, per-tenant credentials

- Implement allowlists for destinations (domains, repositories, buckets)

- Log every action with a traceable

request_id - Add rate limits and anomaly detection per user and per org

If you do this well, prompt injection becomes annoying—but not catastrophic.

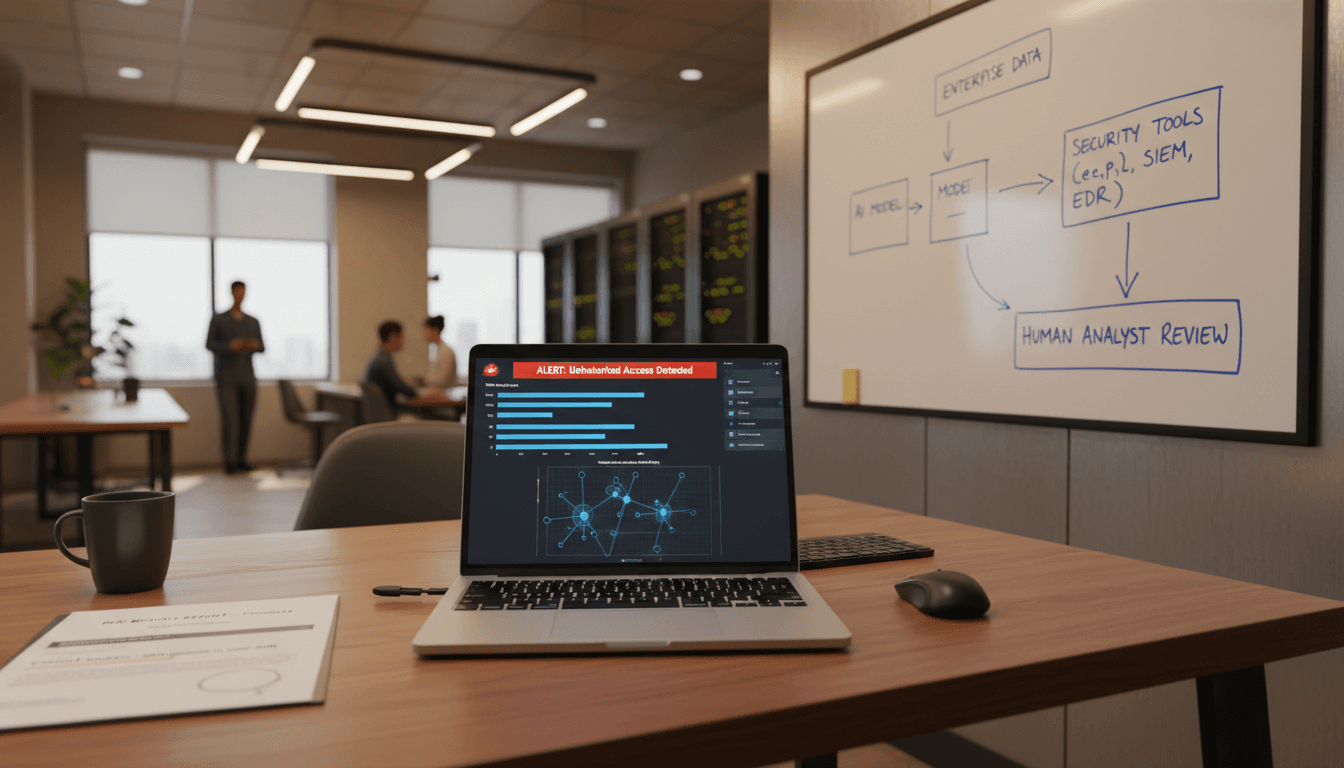

4) Automate triage with AI, but keep humans on the decisions

Yes, AI in cybersecurity can help triage bounty submissions:

- Cluster duplicate reports

- Extract reproduction steps

- Flag likely policy violations

- Route to the right owner based on components mentioned

But payout decisions, researcher communication, and final risk acceptance need a human. Otherwise, you’ll create new incentives for researchers to “optimize for the classifier.”

Actionable checklist: hardening AI products against bounty-class findings

If you run a U.S.-based SaaS product with AI features, these are the controls that reduce the findings you don’t want to discover the hard way.

Security controls you can implement this quarter

- Prompt and instruction boundary controls: clearly separate system/developer/user content; sanitize tool inputs; prevent hidden instruction persistence.

- Tool permissioning: per-action authorization checks; scoped tokens; deny-by-default tool access.

- RAG and memory governance: tenant isolation; document-level ACLs; redact secrets; avoid indexing sensitive tokens.

- Abuse monitoring: detect high-frequency prompts, repeated refusals, suspicious tool-call patterns, and anomalous data access.

- Secure logging: store prompts and tool outputs with redaction; restrict access; set retention windows.

Testing practices that actually catch issues

- Build an internal “red team harness” that replays known attack patterns (prompt injection templates, tool misuse attempts).

- Add regression tests for safety policies the same way you test auth.

- Use canary tenants and synthetic data to validate isolation.

Snippet-worthy point: If your AI feature can take actions, you need authorization tests, not just safety prompts.

People also ask: practical questions about AI bug bounties

Do bug bounties work for AI security?

Yes—when the scope is tight and the payout rubric rewards real impact. Bounties are especially effective at uncovering edge-case interactions between UI, APIs, and model behavior that internal teams miss.

What’s the difference between a model jailbreak and a real vulnerability?

A jailbreak is a symptom. A vulnerability is a repeatable bypass that causes unauthorized access, unauthorized actions, or measurable policy enforcement failure at scale.

Should mid-sized SaaS companies run an AI bug bounty?

If you have public-facing AI features, file upload, tool use, or enterprise tenants, a small program can pay for itself quickly. Start private (invite-only), tune triage, then expand.

What this means for AI-powered digital services in the United States

The broader trend is clear: U.S. tech companies aren’t treating AI security as a side quest anymore. They’re operationalizing it—through governance, monitoring, and programs like bug bounties that bring outside pressure to internal controls.

And that’s the real lesson behind a GPT-5 bio bug bounty call: strong AI products require platform robustness. You need the fundamentals (auth, logging, isolation) and the AI-specific guardrails (tool scope, instruction boundaries, abuse detection). Neglect either side and researchers—and attackers—will find the gap.

If you’re building or buying AI for your organization, your next step is straightforward: inventory every place your model touches data or takes actions, then decide what you’d be willing to pay a researcher to prove wrong. That number is often a good proxy for what you should fix first.

What would your bug bounty reveal about your AI product: a few prompt oddities—or a chain that crosses tools, data, and real-world actions?