AI-driven vulnerability management prioritizes what attackers target, not just what scanners find. Reduce exposure windows with threat intelligence and automation.

AI-Prioritized Vulnerability Management That Cuts Risk

A typical enterprise vulnerability scan doesn’t fail because it misses issues. It fails because it finds too many.

In 2024, more than 40,000 CVEs were published. That pace has turned “patch everything critical” into a fantasy, especially when attackers can start exploiting new flaws within about 15 days of disclosure. If your vulnerability management program still runs on periodic scans, static CVSS scores, and a long spreadsheet of tickets, you’re not managing risk. You’re managing backlog.

This post is part of our AI in Cybersecurity series, and I’m going to take a clear stance: modern vulnerability management is a prioritization problem, and AI is the most practical way to solve it—when it’s fed with real threat intelligence and wired into your workflows.

Why traditional vulnerability management overwhelms teams

Traditional vulnerability management (VM) produces a mountain of findings and then asks humans to triage them with limited context. The output looks “comprehensive,” but the decision-making is often guesswork.

CVSS isn’t a patch plan

CVSS is a severity score, not a likelihood score. It tells you how bad a vulnerability could be if exploited—not whether it will be exploited against you this week.

That creates a common failure mode:

- Teams chase the highest CVSS issues first.

- Attackers exploit something with a lower CVSS that’s widely weaponized.

- The organization still gets burned, even while “patching critical.”

A 9.8 that nobody is targeting isn’t the same as a 7.5 that’s actively used in ransomware campaigns. Treating them as equal is how patch programs get busy but not safer.

The backlog grows because remediation capacity is finite

Even strong IT teams can’t patch everything quickly. Maintenance windows are limited. Legacy systems can’t always be updated safely. Some fixes require testing, vendor coordination, or change-management approvals.

The constraint is almost never “finding vulnerabilities.” It’s remediation throughput. That’s why prioritization is the whole game.

Siloed teams create a dangerous disconnect

When threat intelligence and VM operate separately, two predictable problems show up:

- Threat intel teams see early signals—proof-of-concept code, exploit chatter, active scanning—but those insights don’t make it into the patch queue.

- VM teams see thousands of findings but don’t know what attackers actually care about.

The result is a lot of effort spent on the wrong work at the wrong time.

What changes when threat intelligence drives prioritization

Threat intelligence adds the missing ingredient: real-world attacker behavior.

Instead of asking “How severe is this vulnerability in theory?” you start asking “How likely is this to be used against us, on systems that matter?” That shift is where risk-based vulnerability management becomes real.

Threat context turns vulnerability data into an action list

Threat intelligence can tell you things VM scanners can’t, such as:

- Is there an exploit in the wild?

- Is it being used in ransomware or botnet campaigns?

- Are threat actors discussing it or trading exploit code?

- Is it being mass-scanned on the internet?

When you enrich your vulnerability findings with those signals, your patch queue stops being a raw list and becomes a ranked plan.
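To make the enrichment step concrete, here is a minimal sketch in Python. The `ThreatSignals` fields mirror the four questions above; the record layout, the `rank_findings` helper, and the placeholder CVE IDs are assumptions for illustration, not any scanner's or intel feed's real schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatSignals:
    """Hypothetical per-CVE signals, one flag per question above."""
    exploited_in_wild: bool = False
    used_in_ransomware: bool = False
    exploit_code_traded: bool = False
    mass_scanned: bool = False

    def count(self) -> int:
        # Number of signals that are currently true for this CVE.
        return sum([self.exploited_in_wild, self.used_in_ransomware,
                    self.exploit_code_traded, self.mass_scanned])


def rank_findings(findings, intel):
    """Sort (host, cve_id, cvss) tuples by threat signals first, CVSS second."""
    no_signals = ThreatSignals()

    def key(finding):
        _host, cve_id, cvss = finding
        return (intel.get(cve_id, no_signals).count(), cvss)

    return sorted(findings, key=key, reverse=True)


# Placeholder CVE IDs, not real vulnerabilities.
findings = [
    ("web-01", "CVE-0000-0001", 9.8),
    ("web-02", "CVE-0000-0002", 7.5),
    ("db-01", "CVE-0000-0003", 8.1),
]
intel = {
    "CVE-0000-0002": ThreatSignals(exploited_in_wild=True, used_in_ransomware=True),
}
ranked = rank_findings(findings, intel)
```

With this toy data, the 7.5 with two live signals moves ahead of the 9.8 that nobody is targeting. Counting signals is deliberately crude; a real program would likely weight them differently.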

A good VM program measures exposure. A modern VM program measures exposure that attackers are actively trying to use.

Risk-based prioritization aligns security work with business risk

Risk-based vulnerability management combines external threat likelihood with internal importance.

A practical risk model weighs four factors:

- Asset criticality: Is it customer-facing? Does it touch money, identity, or regulated data?

- Exploitability signals: Known exploitation, exploit maturity, weaponization speed.

- Exposure: Internet-facing, reachable from low-trust zones, common attack paths.

- Compensating controls: WAF rules, segmentation, EDR coverage, hardening.

Threat intelligence is what makes the “exploitability signals” component trustworthy—because it’s based on what attackers are doing, not what a score says they could do.
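One way to turn those four factors into a number is a simple multiplicative score. This is a sketch under stated assumptions: every input is normalized to the 0 to 1 range, the function name `risk_score` is hypothetical, and the multiplicative form is my choice for illustration rather than an industry standard.

```python
def risk_score(asset_criticality: float, exploitability: float,
               exposure: float, control_strength: float) -> float:
    """Return a 0-100 risk score from four factors, each normalized to 0..1.

    control_strength is the assumed fraction of risk removed by
    compensating controls (WAF rules, segmentation, EDR, hardening).
    """
    factors = {
        "asset_criticality": asset_criticality,
        "exploitability": exploitability,
        "exposure": exposure,
        "control_strength": control_strength,
    }
    for name, value in factors.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")

    unmitigated = asset_criticality * exploitability * exposure
    return round(100 * unmitigated * (1.0 - control_strength), 1)


# Actively exploited flaw on an exposed crown-jewel system, partial controls.
active_high = risk_score(1.0, 0.9, 1.0, 0.25)

# Severe-on-paper flaw with little exploitation evidence on an internal system.
quiet_critical = risk_score(0.5, 0.05, 0.5, 0.0)
```

Multiplying means a near-zero value in any factor pulls the whole score down, which matches the intuition that an unreachable or untargeted flaw is less urgent. If that is too aggressive for your environment, a weighted sum is a reasonable alternative.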

Faster MTTR where it matters

Mean time to remediation (MTTR) shouldn’t be a single number across all vulnerabilities. That’s how teams end up optimizing the average while leaving the real landmines untouched.

Threat-informed prioritization pushes you toward the metric that actually matters:

- MTTR for actively exploited vulnerabilities on critical assets

That’s the number leadership should care about, and it’s the number that reduces breach probability.
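The gap between the blended average and the segment that matters is easy to show with a few lines of Python. The record fields (`detected`, `remediated`, `actively_exploited`, `tier`) and the sample dates are assumptions for illustration.

```python
from datetime import datetime
from statistics import mean


def mttr_days(records, *, exploited_only=False, max_tier=None):
    """Mean days from detection to remediation over the selected records.

    Only remediated records are counted; returns None if nothing matches.
    """
    durations = [
        (r["remediated"] - r["detected"]).total_seconds() / 86400
        for r in records
        if r["remediated"] is not None
        and (not exploited_only or r["actively_exploited"])
        and (max_tier is None or r["tier"] <= max_tier)
    ]
    return mean(durations) if durations else None


start = datetime(2025, 1, 1)
records = [
    {"detected": start, "remediated": datetime(2025, 1, 3), "actively_exploited": True, "tier": 0},
    {"detected": start, "remediated": datetime(2025, 1, 21), "actively_exploited": True, "tier": 1},
    {"detected": start, "remediated": datetime(2025, 1, 2), "actively_exploited": False, "tier": 3},
    {"detected": start, "remediated": datetime(2025, 1, 2), "actively_exploited": False, "tier": 2},
]

overall = mttr_days(records)                                    # 6.0 days
critical = mttr_days(records, exploited_only=True, max_tier=1)  # 11.0 days
```

In this sample the blended MTTR looks healthy at 6 days while the exploited, critical-asset segment sits at 11. Note that the sketch ignores still-open vulnerabilities, which flatters both numbers; a production metric should account for them.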

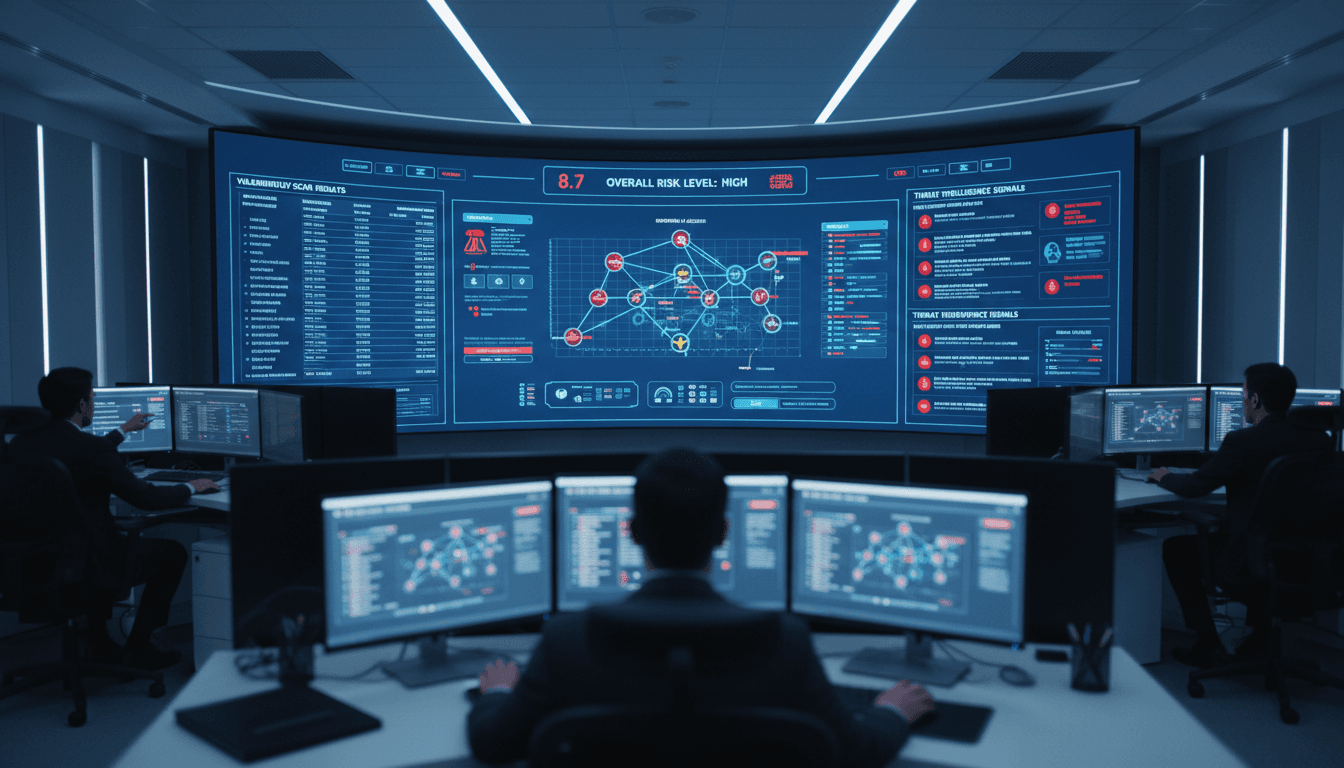

Where AI fits: from intelligence to automated decisions

Threat intelligence is valuable, but it can also be noisy—multiple sources, changing signals, inconsistent quality. This is where AI earns its keep.

AI helps by doing two jobs at once: (1) continuous analysis at scale, and (2) consistent prioritization logic that doesn’t get tired.

AI-powered vulnerability prioritization: what it actually means

AI in vulnerability management isn’t magic. In practice, it’s usually a combination of:

- Machine learning models that predict exploitation probability using features like exploit publication, scanning activity, adversary chatter, and historical patterns

- Anomaly detection that flags sudden spikes in exploitation indicators (for example, a CVE that suddenly becomes a top target)

- Automated risk scoring that updates continuously as new intelligence arrives

This is the bridge between “we have intel” and “we changed what we patch today.”
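As a rough illustration of the anomaly-detection piece, the sketch below flags a CVE whose daily exploitation-indicator count jumps well above its recent baseline. It uses a plain z-score as a stand-in for whatever model a real platform runs; the function name, threshold, and minimum history length are all assumptions.

```python
from statistics import mean, stdev


def is_spike(history, today, threshold=3.0, min_history=7):
    """Return True if today's count is unusually high versus recent history.

    history: daily counts of exploitation indicators for one CVE
             (scan hits, exploit attempts, intel mentions).
    threshold: number of standard deviations that counts as a spike.
    """
    if len(history) < min_history:
        return False  # Not enough baseline to judge.

    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat baseline: treat any increase as a spike.
        return today > baseline
    return (today - baseline) / spread >= threshold


last_week = [2, 3, 1, 2, 4, 2, 3]
sudden_interest = is_spike(last_week, today=40)  # True
normal_day = is_spike(last_week, today=3)        # False
```

A spike by itself is a trigger for rescoring, not a verdict. Feeding the result into the risk score keeps one noisy source from reshuffling the whole queue.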

A concrete example: reprioritizing the patch queue in hours

Here’s a realistic scenario I’ve seen play out in mature programs:

- A weekly scan identifies 600 vulnerabilities, including 35 rated “critical” by CVSS.

- Midweek, threat intelligence reports active exploitation of one CVE that affects a middleware component.

- AI-driven scoring boosts that CVE to the top because:

  - exploitation is confirmed,

  - it’s trending across multiple telemetry sources,

  - your asset inventory shows it’s present on two internet-facing systems.

- The system auto-creates high-priority tickets for the specific affected hosts and routes them to the right owners.

- SOC monitoring adds temporary mitigations (virtual patching, WAF rules, detection logic) while IT schedules the emergency change.

The key isn’t that AI “found” the vulnerability. The key is that AI compressed decision time from days of meetings into minutes of automated triage.

AI also improves the quality of conversations with leadership

Executives don’t want a chart showing “vulnerabilities patched this month.” They want to know:

- What’s most likely to hurt us?

- What are we doing about it?

- What’s the residual risk?

Threat intelligence plus AI-driven scoring gives you language leaders understand:

- “This vulnerability is being used in ransomware campaigns right now.”

- “It exists on systems that process payments.”

- “We can reduce exposure within 24 hours by patching these 12 hosts first.”

That’s how security teams get approval for downtime, emergency change windows, and extra remediation resources.

A practical integration roadmap (no rebuild required)

You don’t need to rip and replace your VM platform to integrate threat intelligence and AI prioritization. Most organizations can get meaningful gains in 30–60 days with the right sequence.

Step 1: Map the workflow you actually use

Answer three blunt questions:

- Where do vulnerability findings enter the system?

- Who decides priority today, and using what rules?

- Where does work get stuck (ownership, change windows, testing, approvals)?

If you can’t draw your end-to-end flow on one page, automation will amplify chaos instead of reducing it.

Step 2: Normalize asset criticality (or nothing else will work)

AI scoring is only as useful as the asset context behind it. You need at least a tiering model:

- Tier 0: crown jewels (identity, payment, sensitive data)

- Tier 1: customer-facing production

- Tier 2: internal business systems

- Tier 3: dev/test and low-impact systems

If you skip this, your program will “optimize” patching on the wrong machines.
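A tiering model can start as a small rule set over asset tags. The sketch below assumes your inventory exposes free-form tags per asset; the tag names and the `assign_tier` helper are hypothetical.

```python
from enum import IntEnum


class Tier(IntEnum):
    CROWN_JEWEL = 0      # identity, payment, sensitive data
    CUSTOMER_FACING = 1  # customer-facing production
    INTERNAL = 2         # internal business systems
    LOW_IMPACT = 3       # dev/test and low-impact systems


def assign_tier(tags: set[str]) -> Tier:
    """Map inventory tags to a tier; the most critical matching rule wins."""
    if tags & {"identity", "payment", "sensitive-data"}:
        return Tier.CROWN_JEWEL
    if {"customer-facing", "production"} <= tags:
        return Tier.CUSTOMER_FACING
    if tags & {"dev", "test"}:
        return Tier.LOW_IMPACT
    # Untagged or unrecognized assets default to internal, not low impact,
    # so missing inventory data does not silently lower priority.
    return Tier.INTERNAL


payment_api = assign_tier({"payment", "production"})       # Tier.CROWN_JEWEL
storefront = assign_tier({"customer-facing", "production"})  # Tier.CUSTOMER_FACING
sandbox = assign_tier({"dev"})                             # Tier.LOW_IMPACT
```

Checking the crown-jewel rule first means a dev box that somehow holds payment data still lands in Tier 0, which is usually the safer failure mode.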

Step 3: Use threat-informed scoring, not just more dashboards

Pick a scoring approach that updates as threat conditions change. Many programs combine:

- CVSS (technical impact)

- KEV-style known exploitation flags (exploitation reality)

- EPSS-like probability signals (likelihood)

- Internal exposure and asset value (business impact)

The goal is a single ranked queue your remediation teams trust.
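Here is one hedged way to merge those inputs into a single queue. Known exploitation acts as an override that always sorts first; within each group, an EPSS-like probability is scaled by asset tier, and CVSS only breaks ties. The `Finding` fields, the tier weights, and the placeholder CVE IDs are assumptions for illustration.

```python
from dataclasses import dataclass

# Hypothetical weights: how much a tier amplifies likelihood.
TIER_WEIGHT = {0: 1.0, 1: 0.8, 2: 0.5, 3: 0.2}


@dataclass(frozen=True)
class Finding:
    cve_id: str
    host: str
    cvss: float            # technical impact, 0-10
    known_exploited: bool  # KEV-style flag
    epss: float            # EPSS-like probability, 0-1
    tier: int              # asset tier, 0 (most critical) to 3


def queue_key(f: Finding):
    # sorted() is ascending, so negate values where "higher is more urgent".
    return (
        not f.known_exploited,          # False (exploited) sorts first
        -(f.epss * TIER_WEIGHT[f.tier]),
        -f.cvss,
    )


def build_queue(findings):
    return sorted(findings, key=queue_key)


queue = build_queue([
    Finding("CVE-0000-0001", "hr-01", 9.8, False, 0.02, 0),
    Finding("CVE-0000-0002", "shop-01", 7.5, True, 0.60, 1),
    Finding("CVE-0000-0003", "wiki-01", 8.8, False, 0.40, 2),
])
order = [f.cve_id for f in queue]
```

In this sample the known-exploited 7.5 lands first and the 9.8 with almost no exploitation likelihood lands last. The strict override is a design choice; some teams prefer to fold the flag into the weighted score instead.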

Step 4: Automate routing and ticketing

Prioritization without execution is just reporting.

Wire your intelligence-driven scores into your workflow tools so that:

- the right team gets the ticket (server, app, network, cloud)

- the ticket includes context (exploit status, affected products, mitigations)

- SLAs differ by risk tier (not one-size-fits-all)
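The three routing rules above can be sketched as a single function that builds a ticket payload. Everything here is hypothetical: the queue names, the SLA durations, and the dictionary layout stand in for whatever your ticketing tool actually expects.

```python
from datetime import datetime, timedelta

# Hypothetical SLA per asset tier.
SLA_BY_TIER = {
    0: timedelta(hours=24),
    1: timedelta(days=3),
    2: timedelta(days=14),
    3: timedelta(days=30),
}

# Hypothetical owning queue per asset type.
QUEUE_BY_ASSET_TYPE = {
    "server": "server-ops",
    "app": "app-engineering",
    "network": "network-ops",
    "cloud": "cloud-platform",
}


def build_ticket(finding: dict, now: datetime) -> dict:
    """Turn a scored finding into a routed ticket with a risk-based due date."""
    sla = SLA_BY_TIER[finding["tier"]]
    if finding["known_exploited"]:
        # Active exploitation caps the SLA at 24 hours regardless of tier.
        sla = min(sla, timedelta(hours=24))

    return {
        "queue": QUEUE_BY_ASSET_TYPE.get(finding["asset_type"], "security-triage"),
        "title": f"{finding['cve_id']} on {finding['host']}",
        "due": now + sla,
        "context": {
            "known_exploited": finding["known_exploited"],
            "affected_product": finding["product"],
            "mitigations": finding["mitigations"],
        },
    }


now = datetime(2025, 1, 6, 9, 0)
ticket = build_ticket(
    {"cve_id": "CVE-0000-0002", "host": "shop-01", "tier": 2,
     "asset_type": "cloud", "known_exploited": True,
     "product": "example-middleware", "mitigations": ["temporary WAF rule"]},
    now,
)
```

Unknown asset types fall back to a triage queue rather than being dropped, so a gap in the inventory shows up as work for someone instead of silence.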

Step 5: Build a feedback loop that improves the model

Treat this like an engineering system, not a compliance ritual. After major patch cycles or incidents, ask:

- Did our scoring correctly predict what became urgent?

- Which “critical” issues stayed unexploited for months?

- Which lower-scored issues became active threats?

That feedback is what makes AI prioritization more accurate over time.
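Those three questions map onto a small retrospective check: compare what the scoring put at the top of the queue with what was later observed being exploited. The function name and the set-based inputs are assumptions; precision and recall are standard measures, applied here in a deliberately simple way.

```python
def evaluate_scoring(prioritized: set[str], exploited: set[str]) -> dict:
    """Compare prioritized CVE IDs against those later seen exploited.

    prioritized: CVEs the scoring ranked as urgent during the review period.
    exploited:   CVEs with confirmed exploitation during the same period.
    """
    hits = prioritized & exploited
    return {
        # Share of our urgent calls that turned out to be exploited.
        "precision": len(hits) / len(prioritized) if prioritized else 0.0,
        # Share of exploited CVEs that we had flagged as urgent.
        "recall": len(hits) / len(exploited) if exploited else 0.0,
        # Lower-scored issues that became active threats.
        "missed": sorted(exploited - prioritized),
        # Urgent calls that stayed unexploited.
        "unexploited_priorities": sorted(prioritized - exploited),
    }


report = evaluate_scoring(
    prioritized={"CVE-A", "CVE-B", "CVE-C", "CVE-D"},
    exploited={"CVE-B", "CVE-C", "CVE-E"},
)
```

The `missed` list is the one to study first, since each entry is a signal the scoring should have weighted more heavily. An unexploited priority is not automatically a mistake; patching quickly may be the reason it never became an incident.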

Metrics that prove you’re reducing risk (not just closing tickets)

If your metrics reward volume, you’ll get volume. Measure outcomes that reflect attacker reality.

Track these five:

- MTTR for known exploited vulnerabilities (especially on Tier 0/1 assets)

- Count of exploitable vulnerabilities on internet-facing systems

- Exposure window: time between “weaponized” signal and mitigation/patch

- Reopen rate: vulnerabilities that reappear due to config drift or failed deployments

- Patch SLA adherence by risk tier (not by CVSS category)
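Two of these metrics, exposure window and reopen rate, are simple enough to compute from ticket timestamps and counts. A minimal sketch, with hypothetical function names and sample values:

```python
from datetime import datetime


def exposure_window_hours(weaponized_at: datetime, mitigated_at: datetime) -> float:
    """Hours between the 'weaponized' signal and the mitigation or patch.

    Returns 0.0 if the fix landed before the signal arrived.
    """
    delta = mitigated_at - weaponized_at
    return max(0.0, delta.total_seconds() / 3600)


def reopen_rate(closed_count: int, reopened_count: int) -> float:
    """Fraction of closed vulnerabilities that later reappeared."""
    return reopened_count / closed_count if closed_count else 0.0


window = exposure_window_hours(
    weaponized_at=datetime(2025, 1, 1, 8, 0),
    mitigated_at=datetime(2025, 1, 2, 20, 0),
)  # 36.0 hours

rate = reopen_rate(closed_count=200, reopened_count=10)  # 0.05
```

Tracking the median exposure window per tier, rather than a single overall figure, keeps this metric aligned with the tiered SLAs described earlier.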

A mature AI-driven vulnerability management program usually shows a clear pattern: fewer emergency fire drills, faster remediation of the issues that matter, and a shrinking set of high-risk exposures.

Where this is heading in 2026: continuous, intelligence-driven VM

The next step in the AI in Cybersecurity narrative is straightforward: vulnerability management becomes continuous and predictive. Scans and spreadsheets won’t disappear overnight, but the direction is clear.

- Prioritization will update in near real time.

- Controls will be applied dynamically (temporary mitigations first, patches next).

- Security teams will spend less time arguing about priority and more time reducing exposure.

If you want a practical next move, start small: pick one business-critical environment (identity, VPN, remote access, payment, customer portal) and build an intelligence-driven “fast lane” for it. Prove the MTTR improvement. Then scale.

You’ll know you’re doing it right when your patch queue stops looking like a backlog and starts looking like a plan. What would change for your team if the top 20 items on that list were truly the ones attackers are chasing this week?