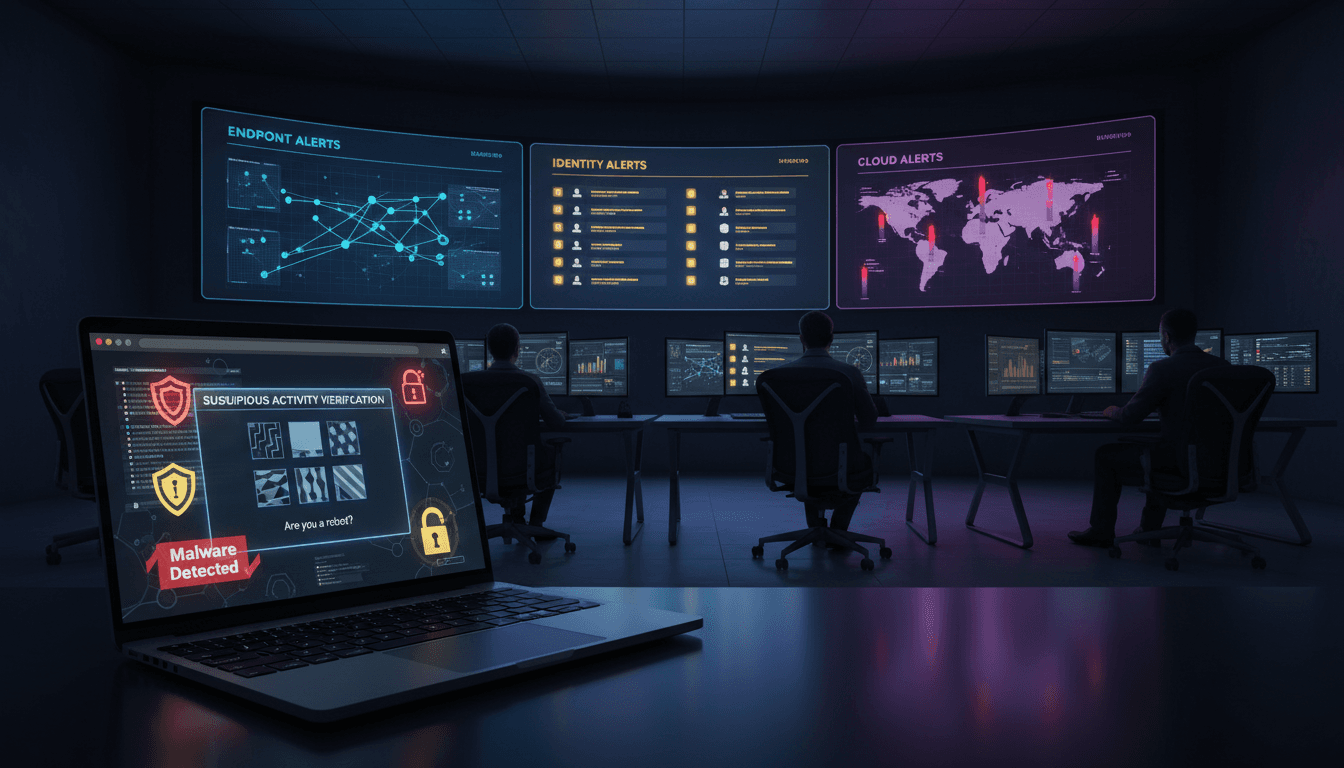

A fake CAPTCHA led to a 42-day Akira ransomware compromise. Learn how AI threat detection can spot the quiet middle—lateral movement, staging, and exfiltration.

AI Threat Detection vs Fake CAPTCHA Ransomware

Most companies still treat CAPTCHA prompts as “website stuff,” not as a security control that can be abused. That blind spot is exactly what made a recent Akira ransomware incident so costly: one employee clicked a fake CAPTCHA on a compromised website, and the attackers stayed inside for 42 days.

The uncomfortable part isn’t the initial trick. Social engineering works. The real failure is what happened after: the environment logged the attacker’s activity but didn’t act on it. This post is part of our AI in Cybersecurity series, and I’m going to take a firm stance—ransomware dwell time is now primarily a detection engineering problem, and AI-powered detection is the fastest way to shrink it.

Below is the story arc of the Akira intrusion, what it reveals about modern ransomware operations, and how AI-driven threat detection and response can catch the “quiet middle” of the breach—when attackers are moving laterally, staging data, and setting up the final disruption.

The fake CAPTCHA is the new “enable macros”

Answer first: Fake CAPTCHA attacks work because they blend into normal browsing, and traditional controls often don’t treat “web interaction” as a high-signal security event.

In the incident analyzed by Unit 42, the chain started with an employee visiting a compromised car dealership website. The page displayed a familiar “prove you’re human” prompt. But it wasn’t a legitimate bot check. It was a ClickFix-style social engineering lure designed to push a malicious payload.

Here’s why that matters in late 2025:

- Users have been trained to comply with friction screens: CAPTCHAs, MFA prompts, cookie banners, “verify” dialogs.

- Attackers increasingly package malware delivery as routine web hygiene.

- Security teams often focus training on email phishing, while web-based social engineering gets less attention.

The payload in this case was SectopRAT, a .NET-based remote access Trojan that gives attackers remote control, stealthy command execution, and a foothold to expand access.

What AI can spot at the CAPTCHA stage

Security teams often ask, “How do you stop a user from clicking?” My answer: assume they will, then instrument everything around the click.

AI-powered threat detection can reduce risk at this earliest phase by correlating weak signals that humans miss, such as:

- A user launching a suspicious child process after a browser interaction (browser → scripting host → downloader behavior)

- Rare domain patterns and URL characteristics across your org’s browsing history

- The first appearance of an unknown remote access tool across endpoints

- Mismatched browser reputation vs. on-endpoint behavior (the site “looks normal,” but the endpoint acts abnormal)

The value isn’t magic. It’s speed and correlation: AI models can compare “this looks like the last 10,000 sessions” vs. “this looks like the 12 sessions that preceded malware.”
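The first signal in the list above (browser → scripting host) can be sketched as a simple parent/child check over process-creation events. This is a minimal illustration, not a product rule: the `ProcessEvent` record, its field names, and the two process-name sets are assumptions made for the example, and it only covers the case where the browser is the direct parent. Lures that have the user launch the command themselves would show a different parent and are not covered here.

```python
from dataclasses import dataclass

# Illustrative process-name sets (assumptions); a real deployment would tune these.
BROWSERS = {"chrome.exe", "msedge.exe", "firefox.exe"}
SCRIPT_HOSTS = {"powershell.exe", "mshta.exe", "wscript.exe", "cscript.exe", "cmd.exe"}


@dataclass(frozen=True)
class ProcessEvent:
    """Hypothetical, simplified process-creation record."""
    host: str
    pid: int
    parent_pid: int
    image: str  # executable name, e.g. "powershell.exe"


def find_browser_script_chains(events):
    """Return (host, browser, script_host) for each script host whose direct parent is a browser."""
    # Index events by (host, pid) so a parent can be looked up per machine.
    by_pid = {(e.host, e.pid): e for e in events}
    hits = []
    for e in events:
        if e.image.lower() not in SCRIPT_HOSTS:
            continue
        parent = by_pid.get((e.host, e.parent_pid))
        if parent is not None and parent.image.lower() in BROWSERS:
            hits.append((e.host, parent.image, e.image))
    return hits
```

A hit from a check like this is a weak signal on its own. Its value comes from correlating it with what the same endpoint does next, such as downloads or new outbound connections.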

What a 42-day ransomware compromise actually looks like

Answer first: The longest part of most ransomware incidents is not encryption—it’s quiet expansion: credential theft, reconnaissance, lateral movement, and data staging.

After the SectopRAT foothold, the attackers (tracked as Howling Scorpius, associated with Akira distribution) established command-and-control and mapped the environment. Then they did what disciplined ransomware crews always do: they went after privileged identities.

Over the 42-day window, they compromised multiple privileged accounts, including domain admin-level access, and moved laterally using familiar administrative protocols:

- RDP

- SSH

- SMB

They accessed domain controllers, staged large archives with WinRAR across file shares, and pivoted between environments—moving from a business unit domain into corporate infrastructure and then into cloud resources.

This is the “quiet middle” that defenders underestimate. It’s not flashy. It’s operational.

The two moments that should terrify you

Two details from this incident should change how you prioritize controls:

- They targeted backups before encryption. Attackers deleted cloud storage containers holding backups and compute resources. If your backup strategy isn’t isolated, immutable, and monitored, ransomware becomes a business-stopping event rather than an IT incident.

- They exfiltrated about 1 TB before detonation. Double extortion isn’t a bonus tactic anymore. It’s standard operating procedure.

AI in cybersecurity is especially valuable here because exfiltration and backup tampering generate patterns—unusual authentication paths, atypical data movement, new tooling—that AI-driven analytics can flag faster than static rules.

“We had EDR” isn’t a defense strategy

Answer first: Logging without alerting is functionally the same as not seeing the attack.

The organization had two enterprise EDR tools deployed. The tools recorded the malicious activity—connections, lateral movement, data staging—but produced very few alerts.

That’s not an EDR problem. That’s an operational problem:

- Coverage gaps (some systems not fully instrumented)

- Misconfigured policies (telemetry on, prevention off)

- No tuned detections for “hands-on-keyboard” behavior

- Alert fatigue leading to aggressive suppression

The incident aligns with a broader pattern highlighted in Unit 42’s 2025 incident response findings: in 75% of investigated cases, evidence of compromise existed in logs but went unnoticed.

If your security program depends on an analyst noticing a perfect alert at the perfect time, you don’t have detection—you have hope.

Where AI-driven detection actually helps (and where it doesn’t)

AI doesn’t replace your SOC. It changes the workload.

It helps by:

- Connecting weak signals across identity, endpoint, network, and cloud

- Detecting behavioral anomalies (rare admin access paths, unusual RDP fan-out, odd SMB traversal patterns)

- Prioritizing incidents based on blast radius (e.g., “this endpoint touched a domain controller”)

- Generating investigations faster (entity timelines, stitched sessions, related indicators)

It doesn’t help if:

- Telemetry isn’t collected consistently

- Identity logs are incomplete

- Your org refuses to act on high-confidence detections (“we’ll review next week”)

AI is an amplifier. It amplifies what you feed it.

A practical AI-powered control map for this exact attack chain

Answer first: You stop 42-day compromises by placing AI-assisted detections on the transitions—where attackers change hosts, identities, or data states.

Here’s a control map you can apply this quarter, without rewriting your entire security program.

1) Web-to-endpoint transition (fake CAPTCHA → malware)

Goal: catch the first execution and persistence behaviors.

- Detect unusual process trees originating from browsers

- Block unsigned or rare binaries downloaded from newly-seen domains

- Use AI-based endpoint analytics to flag RAT-like behavior (beaconing patterns, remote control artifacts)
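One of the behaviors named above, beaconing, can be approximated without any model at all by checking how regular the gaps between outbound connections are. The sketch below is a simple statistical stand-in for the AI-based analytics the list describes. The function names, the `min_events` default, and the `max_cv` threshold are assumptions chosen for illustration, and implants that add jitter to their check-ins would need a looser test.

```python
from statistics import mean, pstdev


def beacon_score(timestamps, min_events=6):
    """Coefficient of variation of the gaps between connections.

    timestamps: connection times in seconds, sorted ascending, for one
    (endpoint, destination) pair. Returns None when there is too little data.
    """
    if len(timestamps) < min_events:
        return None
    intervals = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    average = mean(intervals)
    if average <= 0:
        return None
    # Low variation relative to the mean means machine-like regularity.
    return pstdev(intervals) / average


def looks_like_beacon(timestamps, max_cv=0.1):
    """True when connection gaps are nearly constant (threshold is an assumption)."""
    score = beacon_score(timestamps)
    return score is not None and score <= max_cv
```

For example, ten connections spaced exactly 60 seconds apart would be flagged, while a handful of irregular human-driven visits to the same destination would not.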

2) Privilege escalation and credential theft

Goal: identify identity misuse early.

- Alert on first-time privileged group membership changes

- Detect abnormal Kerberos patterns and suspicious ticket activity

- Monitor service account use, especially activity involving high-value accounts such as KRBTGT and unusual domain controller access patterns
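The first item in this list, first-time privileged group membership changes, comes down to remembering who has been in each sensitive group before. Here is a minimal sketch under stated assumptions: the group names in `PRIVILEGED_GROUPS`, the `(timestamp, group, account)` tuple shape, and the `known_members` baseline are all hypothetical inputs that a real pipeline would derive from directory audit logs.

```python
# Group names are illustrative; adjust to the groups that matter in your directory.
PRIVILEGED_GROUPS = {"Domain Admins", "Enterprise Admins", "Administrators"}


def first_time_privileged_adds(changes, known_members):
    """Return additions of accounts never before seen in a privileged group.

    changes: iterable of (timestamp, group, account) additions in time order.
    known_members: dict mapping group -> set of accounts seen there historically.
    """
    # Copy the baseline so the caller's data is not mutated.
    seen = {group: set(members) for group, members in known_members.items()}
    alerts = []
    for timestamp, group, account in changes:
        if group not in PRIVILEGED_GROUPS:
            continue
        members = seen.setdefault(group, set())
        if account not in members:
            alerts.append((timestamp, group, account))
            members.add(account)  # alert once per account per group
    return alerts
```

Alerting only on the first appearance keeps volume low, which matters for a signal that should page someone rather than sit in a queue.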

3) Lateral movement with “legit” protocols (RDP/SSH/SMB)

Goal: distinguish admin work from attacker movement.

- Use behavioral baselines: which admins RDP where, and when

- Flag “RDP fan-out” (one host touching many hosts in a short window)

- Correlate lateral movement with preceding malware on the origin endpoint
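The “RDP fan-out” idea above (one host touching many hosts in a short window) maps naturally onto a sliding-window count of distinct destinations per source. The sketch below assumes connection records arrive as `(timestamp, source_host, dest_host)` tuples sorted by time. The 10-minute window and the threshold of 5 are placeholder values, not recommendations from the incident report.

```python
from collections import defaultdict, deque
from datetime import timedelta


def rdp_fan_out(connections, window=timedelta(minutes=10), threshold=5):
    """Flag sources that reach `threshold` or more distinct hosts within `window`.

    connections: iterable of (timestamp, source_host, dest_host), sorted by
    timestamp (datetime objects). Returns {source_host: peak distinct-destination count}.
    """
    recent = defaultdict(deque)  # source -> deque of (timestamp, dest) inside the window
    flagged = {}
    for timestamp, source, dest in connections:
        queue = recent[source]
        queue.append((timestamp, dest))
        # Drop connections that have aged out of the sliding window.
        while queue and timestamp - queue[0][0] > window:
            queue.popleft()
        distinct = len({d for _, d in queue})
        if distinct >= threshold:
            flagged[source] = max(flagged.get(source, 0), distinct)
    return flagged
```

A fixed threshold is the crude version. The behavioral-baseline approach in the list would replace it with a per-admin or per-host norm, so a jump server that legitimately touches dozens of machines is not flagged every morning.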

4) Data staging and compression

Goal: catch the prep work for exfiltration.

- Alert on mass file read + archive creation on file servers

- Detect uncommon use of compression tools on servers

- Use AI to score “data access sessions” by volume, novelty, and destination
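Scoring a data access session by volume, novelty, and destination can start as a transparent heuristic before any model is involved. The sketch below is a hand-weighted stand-in for that idea: the `AccessSession` fields, the baseline inputs, and the weights (1, 2, and 3) are all assumptions picked for readability, not tuned values.

```python
import math
from dataclasses import dataclass


@dataclass
class AccessSession:
    """Hypothetical summary of one account's file activity over a period."""
    account: str
    bytes_read: int
    shares_touched: set
    destination: str  # where the data was written or sent


def score_session(session, baseline_bytes, known_shares, known_destinations):
    """Higher score means more unusual. Weights are illustrative only."""
    # Volume: how many doublings above the account's usual read volume.
    ratio = max(session.bytes_read, 1) / max(baseline_bytes, 1)
    volume = max(0.0, math.log2(ratio))
    # Novelty: fraction of touched shares this account has never used before.
    if session.shares_touched:
        novelty = len(session.shares_touched - known_shares) / len(session.shares_touched)
    else:
        novelty = 0.0
    # Destination: 1 if the data went somewhere not previously seen.
    new_destination = 0.0 if session.destination in known_destinations else 1.0
    return volume + 2.0 * novelty + 3.0 * new_destination
```

A session that matches its baseline on all three dimensions scores zero, so the output can be ranked directly. Roughly 1 TB read from unfamiliar shares toward a new destination, as in this incident, would land at the top of that ranking.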

5) Exfiltration and backup sabotage

Goal: prevent the point of no return.

- Monitor cloud storage deletion events and unusual lifecycle operations

- Detect new tools used for transfer (portable clients, unusual user agents)

- Set hard guardrails: immutable backups, separate credentials, and monitored admin actions
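Monitoring deletion events can be sketched as two checks over cloud audit records: any delete against a backup resource by an identity outside an approved list, and any identity issuing a burst of deletes. Everything named below is hypothetical. The operation names in `DELETE_OPERATIONS`, the event dictionary keys, and the `burst_threshold` default are placeholders, since real audit logs use provider-specific names.

```python
from collections import Counter

# Placeholder operation names; real audit logs use provider-specific values.
DELETE_OPERATIONS = {"DeleteContainer", "DeleteBucket", "DeleteSnapshot"}


def review_deletions(events, backup_resources, approved_actors, burst_threshold=3):
    """Return findings for risky deletions in a batch of audit events.

    events: iterable of dicts with "actor", "operation", and "resource" keys.
    backup_resources: set of resource names known to hold backups.
    approved_actors: identities allowed to delete backups (e.g. a retention job).
    """
    findings = []
    deletes_per_actor = Counter()
    for event in events:
        if event["operation"] not in DELETE_OPERATIONS:
            continue
        deletes_per_actor[event["actor"]] += 1
        if event["resource"] in backup_resources and event["actor"] not in approved_actors:
            findings.append(("backup_delete", event["actor"], event["resource"]))
    # Second pass: any identity deleting many resources in one batch.
    for actor, count in deletes_per_actor.items():
        if count >= burst_threshold:
            findings.append(("delete_burst", actor, count))
    return findings
```

Detection like this complements the hard guardrails rather than replacing them: immutable storage stops the deletion, and the alert tells you someone tried.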

If you build detections around these transitions, ransomware crews lose their biggest advantage: time.

The response lesson: speed wins, but preparation wins more

Answer first: Incident response is faster when your telemetry is unified and your detections are already mapped to attacker behaviors.

In this case, Unit 42 helped by rapidly deploying tooling to establish comprehensive visibility and “stitching” together evidence across server logs, cloud logs, firewall traffic, SIEM data, and security tools. With a full picture of the attacker’s path, they recommended concrete remediation steps:

- Network segmentation to isolate critical infrastructure and restrict admin access pathways

- Credential rotation and privileged access tightening, including rolling critical Kerberos-related accounts to invalidate attacker persistence techniques

- Endpoint hardening (patching, removal of end-of-life systems, detection configuration)

- Cloud monitoring and backup strategy improvements

They also handled ransom negotiation and reportedly reduced the initial demand by about 68%—useful, but still not a “win.” The win is preventing the attacker from reaching that stage.

What to do next (especially before year-end change freezes)

Answer first: The most effective pre-ransomware work is boring: validate coverage, tune detections, and rehearse response.

December is a risky month for many orgs—staffing is thinner, change freezes are common, and attackers know it. If you want a pragmatic checklist you can run in the next 2–3 weeks, start here:

- Run an “alertability” audit: pick 10 known bad behaviors (RDP fan-out, WinRAR staging, suspicious cloud deletions) and confirm you get an alert—not just a log.

- Baseline admin behavior: you can’t detect anomalies if everything is “normal.”

- Harden backups like they’re production systems: separate identities, immutable storage, and monitored delete operations.

- Instrument the web-to-endpoint seam: fake CAPTCHAs and ClickFix lures live here.

- Adopt AI-assisted correlation where humans are weakest: cross-domain stitching, entity timelines, and prioritization by blast radius.
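The alertability audit in the first item is easy to track as a small report that separates “we alerted” from “we only logged” from “we saw nothing.” The sketch below assumes you record each simulated behavior with two booleans. The dictionary shape and the bucket names are illustrative choices, not a standard format.

```python
def alertability_gaps(results):
    """Bucket simulated behaviors by how visible they were.

    results: dict mapping behavior name -> {"logged": bool, "alerted": bool}.
    Returns a dict with "alerted", "logged_only", and "invisible" lists.
    """
    report = {"alerted": [], "logged_only": [], "invisible": []}
    for behavior, outcome in results.items():
        if outcome["alerted"]:
            report["alerted"].append(behavior)
        elif outcome["logged"]:
            # Telemetry exists but nobody would have been told.
            report["logged_only"].append(behavior)
        else:
            report["invisible"].append(behavior)
    return report


audit = alertability_gaps({
    "rdp_fan_out": {"logged": True, "alerted": False},
    "winrar_staging": {"logged": True, "alerted": True},
    "cloud_backup_delete": {"logged": False, "alerted": False},
})
print(audit["logged_only"])
```

The `logged_only` bucket is the one this incident was about: the evidence was recorded, but nothing turned it into a decision.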

The AI in Cybersecurity theme keeps repeating across incidents: attackers scale with automation; defenders need automation to keep up. If your security stack collects data but doesn’t generate clear, timely decisions, you’ll keep reliving the same story—with a different ransomware name.

If a fake CAPTCHA can buy attackers 42 days, what could they do in your environment with 14 days… or even 7?