Gogs CVE-2025-8110 hit 700+ servers. Learn how AI-driven anomaly detection and automated response can contain zero-days before patches arrive.

AI Detection for Gogs Zero-Day: Stop Exploits Fast

More than 700 internet-exposed Gogs servers have already shown signs of compromise from an unpatched zero-day (CVE-2025-8110, CVSS 8.7). That’s the headline. The operational lesson is harsher: when attackers don’t need a public fix to move, your detection and response speed becomes the control that matters most.

This post is part of our AI in Cybersecurity series, and I’m going to use the Gogs incident as a real case study for a practical point: AI-driven threat detection is most valuable in the “no-patch-yet” window, when teams are forced to rely on monitoring, anomaly detection, and automated containment.

If you run any self-hosted Git service, CI/CD tooling, or developer infrastructure that touches production credentials, treat this as a wake-up call. Git platforms aren’t “just dev tools.” They’re a path to your cloud control plane.

What happened with the Gogs zero-day (and why it spread)

Answer first: The Gogs zero-day is being exploited because it enables arbitrary file overwrite via API behavior + symbolic links, which can be chained into remote code execution—and many instances are directly reachable from the internet.

Wiz researchers reported widespread exploitation of CVE-2025-8110, a flaw tied to improper symbolic link handling in Gogs’ PutContents API. The core issue isn’t exotic; it’s a familiar class of bug: a write primitive that becomes dangerous when attackers can steer it outside expected boundaries.

Here’s the sequence at a high level (simplified from the technical write-up):

- Attacker creates a repo

- Commits a symlink pointing outside the repo to a sensitive target

- Uses the file update API to write “through” the symlink to an arbitrary server path

- Overwrites Git config (notably sshCommand) to execute attacker-controlled commands
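To make the "write through the symlink" step concrete, here is a minimal Python sketch of the kind of containment check that this class of bug lacks. It is not Gogs code (Gogs is written in Go), and the names `resolves_inside` and `demo` are hypothetical. The idea is simply to resolve symlinks first and then confirm the final path is still under the repository root.

```python
import os
import tempfile


def resolves_inside(repo_root: str, relative_path: str) -> bool:
    """Return True only if the path, after following symlinks, stays under repo_root."""
    root = os.path.realpath(repo_root)
    target = os.path.realpath(os.path.join(root, relative_path))
    return os.path.commonpath([root, target]) == root


def demo() -> tuple[bool, bool]:
    """Build a throwaway repo with one normal file and one escaping symlink."""
    with tempfile.TemporaryDirectory() as base:
        repo = os.path.join(base, "repo")
        outside = os.path.join(base, "outside")
        os.makedirs(repo)
        os.makedirs(outside)
        # A normal file inside the repo.
        with open(os.path.join(repo, "README.md"), "w") as fh:
            fh.write("hello")
        # A symlink stored in the repo that points outside of it.
        os.symlink(os.path.join(outside, "config"), os.path.join(repo, "link"))
        return resolves_inside(repo, "README.md"), resolves_inside(repo, "link")


if __name__ == "__main__":
    print(demo())
```

A write API that ran a check like this before opening the file would refuse the symlinked path. The sketch assumes a POSIX-style filesystem where creating symlinks is permitted.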

Two details matter for defenders:

- This was described as a bypass of a prior Gogs RCE (CVE-2024-55947). In practice, that’s a reminder that “patched once” doesn’t mean “fixed forever.” Attackers love retesting the same surfaces.

- The campaign appears broad and opportunistic (“smash-and-grab”), with indicators like random 8-character repo names across compromised instances.

If your team assumes “nobody targets our internal dev server,” the Gogs story is the counterexample.

Why “just patch it” fails during active zero-day exploitation

Answer first: During an unpatched zero-day, the defensive playbook shifts from patch management to exposure reduction + high-signal detection + rapid containment.

When there’s no vendor fix, security teams get stuck in the gap between:

- engineering reality (you can’t hotfix a third-party app correctly under pressure), and

- attacker reality (they can automate exploitation across hundreds of targets).

That gap is where most organizations lose time. And time is the currency of incident scope.

The hidden cost: developer infrastructure is a credential factory

Git services hold:

- deployment keys and SSH access paths

- CI/CD pipeline definitions

- build artifacts and config files

- often, secrets that shouldn’t be there but are

Even if a Git server compromise doesn’t immediately hit production, it’s frequently a stepping stone to:

- source code theft

- supply chain tampering

- cloud credential access

- persistence via CI workflows

The same reporting also highlighted attackers targeting leaked GitHub Personal Access Tokens (PATs) to discover secret names and run malicious workflows. The connection is straightforward: attackers want the platform that mints and routes secrets, not just the app server.

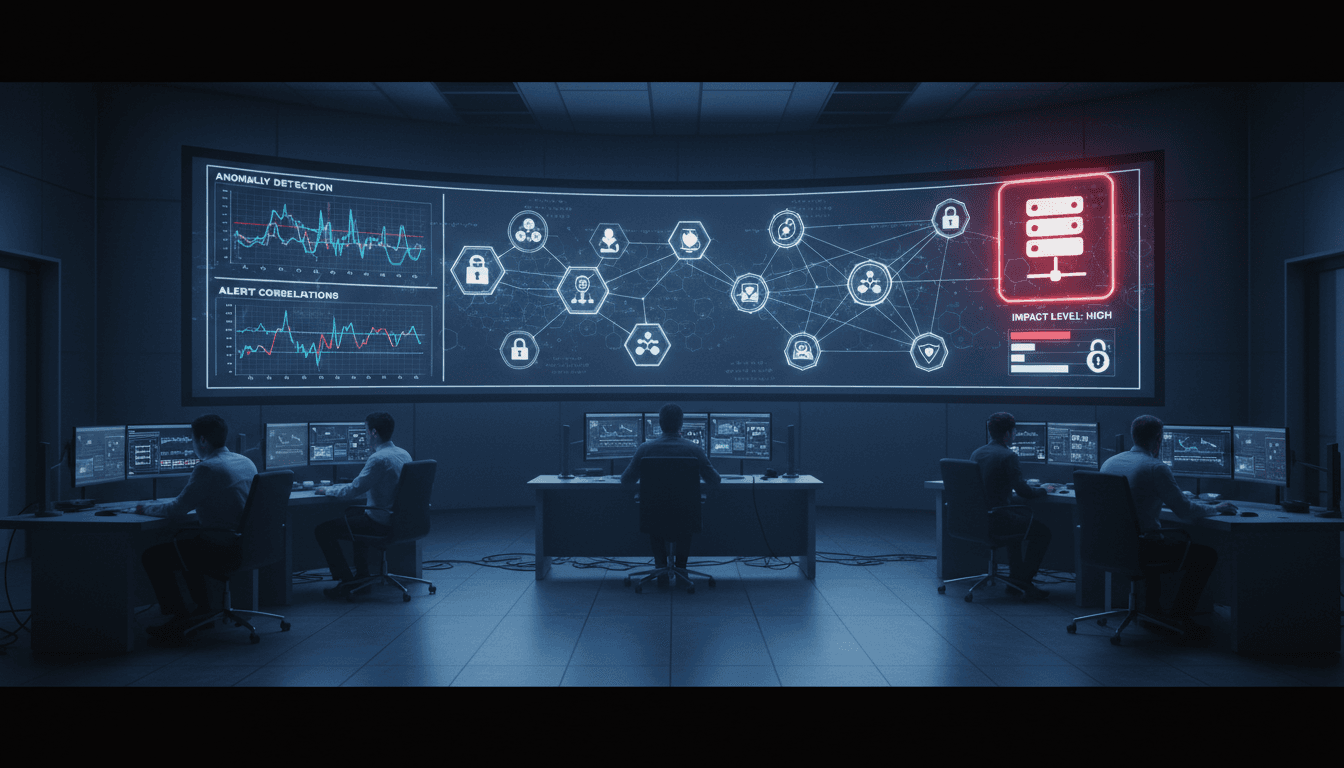

How AI-driven anomaly detection could have stopped this earlier

Answer first: AI helps most when it’s tuned to detect behavioral deviations—new repos, unusual API writes, symlink abuse patterns, and post-exploitation command execution—then trigger containment automatically.

I’ve found that teams over-rotate on AI as “better alerts.” The better framing is: AI is a compression engine for messy security telemetry, turning a flood of low-quality signals into a small set of high-confidence incidents you can act on.

For the Gogs case, an AI detection strategy would focus on four layers.

1) Detect the pre-exploit setup: repo creation patterns

The campaign left behind repos with 8-character random names and similar creation timing. That’s a gift to defenders.

High-signal detections:

- burst of new repositories created by a single account/IP

- repo owner/repo names matching high-entropy patterns (e.g., 8 random chars)

- repo visibility changes (public/private flips) that don’t match normal workflows

Where AI fits: Unsupervised models can baseline “normal repo creation behavior” per org, per team, and per IP range—then flag the outliers without you hand-writing 50 brittle rules.
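As a starting point before any model is trained, the two signals above can be sketched with plain heuristics. This is a fixed-threshold stand-in, not an unsupervised model, and every threshold, the alphanumeric charset, and the event tuple shape are assumptions. Entropy on an 8-character string is a weak signal on its own (ordinary words can score high), which is why the sketch only flags an account when several random-looking names appear in a short burst.

```python
import math
import re
from collections import Counter, defaultdict


def shannon_entropy(text: str) -> float:
    """Shannon entropy of the character distribution, in bits per character."""
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_random(name: str, length: int = 8, min_entropy: float = 2.5) -> bool:
    """Candidate filter: exactly `length` alphanumeric chars with high entropy."""
    if len(name) != length or not re.fullmatch(r"[A-Za-z0-9]+", name):
        return False
    return shannon_entropy(name) >= min_entropy


def burst_accounts(events, window_seconds=600, threshold=3):
    """events: iterable of (timestamp_seconds, account, repo_name) tuples.

    Returns accounts that created `threshold` or more random-looking repos
    within any `window_seconds` span.
    """
    by_account = defaultdict(list)
    for ts, account, name in events:
        if looks_random(name):
            by_account[account].append(ts)

    flagged = set()
    for account, stamps in by_account.items():
        stamps.sort()
        start = 0
        for end in range(len(stamps)):
            # Shrink the window from the left until it fits.
            while stamps[end] - stamps[start] > window_seconds:
                start += 1
            if end - start + 1 >= threshold:
                flagged.add(account)
                break
    return flagged
```

A per-org baseline would replace the hard-coded `threshold` and `window_seconds` with values learned from normal repo creation activity.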

2) Detect the exploit path: unusual PutContents API usage

API-level telemetry is often underused in self-hosted tooling.

High-signal detections:

- PutContents (or equivalent) writes that touch unexpected file paths

- write attempts following a new symlink commit

- mismatched user-agent patterns (automation frameworks tend to be consistent)

- repeated 4xx/5xx patterns that look like exploit probing

Where AI fits: Sequence models can spot suspicious chains like:

“new repo” → “symlink commit” → “API write” → “config change”

That chain matters more than any single event.
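The chain can be illustrated without a learned sequence model. The sketch below is a simple rule-based state machine, swapped in for clarity. The event kinds, the `Event` shape, and the 15-minute span are assumptions about what your log pipeline could emit, not fields Gogs is known to produce.

```python
from dataclasses import dataclass

# Hypothetical normalized event kinds, in the order the attack needs them.
CHAIN = ("repo_created", "symlink_committed", "api_write", "config_changed")


@dataclass(frozen=True)
class Event:
    ts: float   # seconds since some epoch
    repo: str
    kind: str


def repos_matching_chain(events, chain=CHAIN, max_span=900.0):
    """Return repos whose events contain `chain` in order within max_span seconds."""
    progress = {}  # repo -> (index of next expected step, timestamp of first step)
    matched = []
    for ev in sorted(events, key=lambda e: e.ts):
        idx, started = progress.get(ev.repo, (0, ev.ts))
        if idx > 0 and ev.ts - started > max_span:
            idx = 0  # partial chain went stale; start over
        if ev.kind == chain[idx]:
            if idx == 0:
                started = ev.ts
            idx += 1
        if idx == len(chain):
            matched.append(ev.repo)
            idx = 0
        progress[ev.repo] = (idx, started)
    return matched
```

Unrelated events between steps are ignored, so a noisy repo still matches as long as the four steps occur in order inside the time span.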

3) Detect post-exploitation: Git config and SSH command anomalies

The described technique mentions overwriting .git/config and manipulating sshCommand. If you only monitor Linux processes at the host level, you’ll still catch this—but you’ll catch it late.

High-signal detections:

- .git/config modifications outside standard git operations

- ssh spawned by the service account in unusual contexts

- outbound connections to rare destinations (especially from a repo server)

Where AI fits: AI-based EDR/NDR approaches reduce false positives by correlating:

- process lineage (what spawned SSH)

- network rarity (new ASN / new geo / new destination)

- timing (immediately after a web/API write)

This is the kind of correlation humans do well… when they have two hours and three tools open. AI does it continuously.
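A rough sketch of that three-way correlation follows. The `SshSpawn` fields, the expected parent list, and the 120-second window are illustrative assumptions, and a real EDR/NDR product would weight these signals rather than count them.

```python
from dataclasses import dataclass


@dataclass
class SshSpawn:
    ts: float             # when the process started
    parent_process: str   # name of the process that spawned it
    destination: str      # remote address it connected to


def score_ssh_spawn(spawn, known_destinations, last_api_write_ts,
                    expected_parents=("git",), window=120.0):
    """Count how many of the three signals fire and list the reasons."""
    reasons = []
    if spawn.parent_process not in expected_parents:
        reasons.append("unexpected parent process")
    if spawn.destination not in known_destinations:
        reasons.append("rare destination")
    if (last_api_write_ts is not None
            and 0 <= spawn.ts - last_api_write_ts <= window):
        reasons.append("shortly after API write")
    return len(reasons), reasons
```

Treating a score of 3 as containment-worthy and a score of 1 as log-only is one reasonable policy; the right cutoffs depend on how noisy each signal is in your environment.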

4) Detect lateral movement risk: secrets and CI/CD manipulation

Once a Git platform is compromised, the next move is usually “turn code into access.”

High-signal detections:

- new workflows/pipelines added outside change windows

- build scripts modified to exfiltrate env vars

- credentials used from new hosts shortly after repo server anomalies

Where AI fits: Behavioral analytics can flag “impossible travel” patterns for tokens, new execution environments, and suspicious workflow edits that resemble known attacker playbooks.
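The "credentials used from new hosts shortly after repo server anomalies" signal reduces to a small join, sketched below. The tuple layout, the `known_hosts` mapping, and the one-hour window are assumptions about how token usage logs might be normalized.

```python
def suspicious_token_uses(uses, known_hosts, anomaly_ts, window=3600.0):
    """Return token uses from never-before-seen hosts soon after an anomaly.

    uses: iterable of (timestamp_seconds, token_id, host) tuples.
    known_hosts: dict mapping token_id -> set of hosts seen historically.
    anomaly_ts: time of the repo server anomaly being investigated.
    """
    return [
        (ts, token, host)
        for ts, token, host in uses
        if host not in known_hosts.get(token, set())
        and 0 <= ts - anomaly_ts <= window
    ]
```

Tokens with no history at all are treated as having no known hosts, so any use inside the window is reported.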

Practical containment steps while waiting for a patch

Answer first: If you run Gogs (or similar self-hosted Git services), your immediate goal is to reduce exposure and shrink blast radius, then hunt for compromise using concrete indicators.

Based on the incident details, here’s what I’d do in priority order.

1) Reduce exposure (fastest risk reduction)

- Remove public internet access to the Git service. Put it behind VPN, SSO proxy, or private network access.

- Disable open registration and review all admin accounts.

- Restrict API access paths at the reverse proxy/WAF level if feasible.

If you need one sentence for leadership: “Internet-exposed developer infrastructure is an incident, not a convenience.”

2) Hunt for compromise using Gogs-specific clues

Start with what the campaign already showed:

- search for repositories with 8-character random owner/repo names

- list repositories created around the same period (campaigns often cluster)

- review API logs for unusual PutContents behavior

- inspect .git/config for tampering, especially around SSH settings
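The last check can be scripted. The sketch below walks a directory tree and reports any sshCommand setting found in a Git config file. The function name is hypothetical, the repository root is whatever your instance is configured to use, and a hit is a lead to investigate rather than proof of compromise, since sshCommand has legitimate uses.

```python
import re
from pathlib import Path

# Git config keys are case-insensitive, so match sshCommand in any casing.
SSH_COMMAND = re.compile(r"^\s*sshCommand\s*=\s*(.+)$", re.IGNORECASE | re.MULTILINE)


def find_ssh_command_overrides(root):
    """Return (config_path, value) pairs for every sshCommand setting under root.

    Covers both bare repos (name.git/config) and working copies (.git/config).
    """
    findings = []
    for config in sorted(Path(root).rglob("config")):
        if not config.is_file() or not config.parent.name.endswith(".git"):
            continue
        text = config.read_text(errors="replace")
        for match in SSH_COMMAND.finditer(text):
            findings.append((str(config), match.group(1).strip()))
    return findings
```

Run it against a copy or snapshot of the repository storage where possible, so the hunt does not alter timestamps on a host you may need for forensics.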

3) Assume credential exposure and rotate accordingly

If a repo server is compromised, treat the following as potentially exposed until proven otherwise:

- SSH keys used for deployments

- CI/CD secrets stored in the platform

- cloud credentials present on the host

Then rotate in a sensible order:

1. tokens/keys that can touch cloud control plane

2. deployment keys

3. developer tokens

4) Add temporary guardrails that AI can enforce automatically

Even without a patch, you can make exploitation harder and detection faster:

- alert on any symlink commits to sensitive paths (or block them if policy allows)

- rate-limit suspicious API endpoints

- auto-quarantine the service if it starts making rare outbound connections

This is where AI-driven SOAR-style workflows earn their keep: containment can be triggered by a high-confidence chain of signals, not a single noisy alert.
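As one example of such a guardrail, the sketch below keeps a frequency baseline of outbound destinations and recommends quarantine when several rare ones show up together. The class and function names, `min_seen`, and `max_rare` are assumptions, and the quarantine action itself (firewall rule, service stop, network isolation) is left to whatever your SOAR tooling provides.

```python
from collections import Counter


class OutboundBaseline:
    """Counts how often each destination has been seen during normal operation."""

    def __init__(self, min_seen=5):
        self.counts = Counter()
        self.min_seen = min_seen

    def learn(self, destination):
        self.counts[destination] += 1

    def is_rare(self, destination):
        # Destinations never seen before count as zero and are therefore rare.
        return self.counts[destination] < self.min_seen


def should_quarantine(baseline, recent_destinations, max_rare=2):
    """Recommend quarantine when at least max_rare distinct rare destinations appear."""
    rare = {d for d in recent_destinations if baseline.is_rare(d)}
    return len(rare) >= max_rare, sorted(rare)
```

Requiring more than one rare destination is a deliberate trade-off: it avoids isolating the server over a single new package mirror, at the cost of reacting slightly later.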

What this teaches security leaders about AI in cybersecurity

Answer first: The Gogs incident shows why AI belongs in security operations: it shortens the time between “first abnormal event” and “containment,” especially during zero-days.

Most companies get this wrong by treating AI as a bolt-on analytics layer. The better approach is to treat AI as a decision engine for three things:

- prioritization: which anomaly actually matters right now

- correlation: which 12 low-level events form one real incident

- orchestration: what gets contained automatically versus escalated

If you’re building your 2026 security plan (and yes, this December timing matters), focus less on “do we have an AI tool” and more on “do we have AI-powered response paths for zero-day windows.” Those windows are getting more common, not less.

Snippet-worthy stance: When patches don’t exist yet, detection and containment are your only real controls.

A quick FAQ teams ask after incidents like this

“If our Git service isn’t production, why is it high risk?”

Because it’s a bridge to production. Attackers don’t need prod access on day one—they need a place to steal secrets, change code, or hijack pipelines.

“Can AI really detect a brand-new exploit?”

AI won’t “recognize” the CVE name. It will detect the behavioral footprints: unusual API write patterns, repo creation bursts, rare outbound connections, and suspicious process chains.

“What’s the minimum AI-driven capability we should add?”

Start with anomaly detection + automated containment for:

- internet-facing dev tools

- identity and token usage

- CI/CD workflow changes

That combination catches the most damaging follow-on moves.

Where to go from here

The Gogs zero-day exploitation across 700+ instances is a clean example of a modern truth: attackers scale faster than manual security operations. The fix isn’t panic. It’s building a security posture that assumes a “no-patch-yet” period and still responds decisively.

If you’re evaluating AI in cybersecurity tools, use this incident as your test case. Ask vendors (or your internal team) to walk through a real workflow:

- How would we detect the repo creation and symlink pattern?

- How would we correlate that with API writes and host behavior?

- What containment happens automatically in the first 5 minutes?

What would your team want to happen if the next zero-day hits on a Friday night during holiday change-freeze—alert fatigue, or an automated quarantine that buys you time?