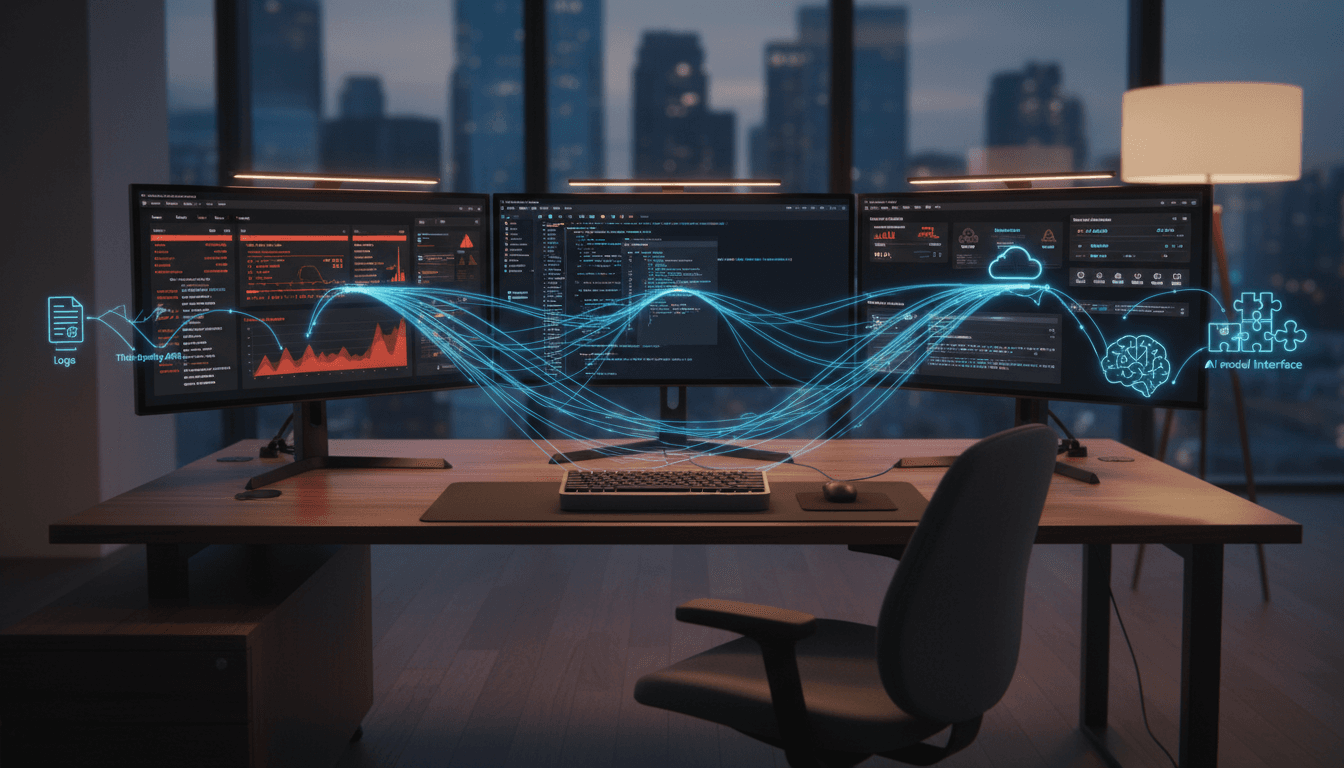

AI-powered code scanning prevents privacy leaks before deploy by tracking sensitive data flows into logs, third parties, and LLM prompts.

AI Security Starts in Code: Stop Leaks Before Deploy

A lot of security programs still treat privacy and data protection like something you “check” after an app ships. That model is breaking—fast. AI-assisted coding is pumping out more code, in more repos, with more integrations than most security and privacy teams can realistically review.

Here’s the uncomfortable truth: most data security and privacy incidents aren’t caused by exotic attacks. They’re caused by normal code paths doing unsafe things—logging too much, sending data to third parties without guardrails, or quietly wiring in AI tooling that no one approved. If you only detect the problem in production, you’re already in cleanup mode.

This post is part of our AI in Cybersecurity series, and the stance is simple: the highest ROI place to apply AI for data security is inside the development workflow—where you can prevent risky data flows before they become real incidents, real regulatory exposure, and real customer churn.

Why “detect it in production” is a losing strategy

Answer first: Production-only detection finds problems after the blast radius exists, which makes remediation slower, more expensive, and harder to verify.

Traditional stacks—runtime DLP, post-deployment privacy platforms, SIEM alerts—are useful, but they’re structurally reactive. By the time they fire:

- The data has already been collected, copied, or transmitted.

- Logs have already been shipped to multiple systems.

- Third-party SDKs have already received payloads.

- LLM prompts have already been formed and sent.

That’s why teams end up spending weeks on “data archaeology”: hunting where the sensitive data went, which services ingested it, and how to scrub or rotate it. The code fix is often trivial. The cleanup almost never is.

A better approach is to treat source code as the earliest and most complete record of intent. If you can see sensitive data flows before merge, you can stop a leak before it becomes an incident ticket, a customer escalation, or a regulator letter.

What changed in 2025: development velocity outpaced governance

AI app generation and AI coding assistants have made it normal for teams to:

- Spin up new services in hours

- Add integrations in a single PR

- Copy/paste patterns that include risky logging or prompt construction

Security headcount didn’t double to match that. So coverage drops unless you automate.

The three privacy failures that keep repeating (and how code-level AI catches them)

Answer first: The most common privacy failures show up as code patterns—logging oversharing, undocumented data flows, and ungoverned AI/third-party integrations.

The source article highlights three pain points that recur across organizations. Here’s how they look in practice, and why code scanning matters.

1) Sensitive data in logs: the “slow bleed” incident

Logging is the most underestimated data breach vector because it’s rarely malicious—just sloppy.

Common patterns:

- Dumping a full user object during debugging (`console.log(user)`)

- Logging request headers that contain tokens

- Logging exception objects that include payloads

- Logging “helpful context” that includes email, phone, address, or identifiers

Once sensitive data hits logs, it usually propagates:

- Observability platforms

- Managed log storage

- Data lakes

- Incident tooling

- Vendor support exports

Teams then scramble to mask, delete, or rotate data—often across systems that don’t support precise deletion. This is where you burn hundreds (or thousands) of engineering hours.

What works better: code-level detection that flags tainted data flows (PII, PHI, tokens, card data) reaching log sinks before merge. That’s a prevention win, not a detection win.
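
As a rough illustration of flagging sensitive values at log sinks before merge, the sketch below walks a Python syntax tree and reports log calls whose arguments mention sensitive-looking names. The hint list, the set of log method names, and the sample code are all assumptions made for this example; a real scanner would classify data far more carefully than by variable name.

```python
import ast

# Hypothetical name fragments that suggest a sensitive value (assumption for this sketch).
SENSITIVE_HINTS = ("user", "email", "token", "password", "ssn", "card")
# Method names treated as log sinks (assumption: logger-style objects).
LOG_METHODS = {"debug", "info", "warning", "error", "exception", "critical"}


def is_log_call(node: ast.Call) -> bool:
    """True for print(...) or <anything>.info(...)-style calls."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id == "print"
    return isinstance(func, ast.Attribute) and func.attr in LOG_METHODS


def find_sensitive_log_calls(source: str) -> list:
    """Return sorted (line, variable_name) pairs for suspicious log arguments."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and is_log_call(node):
            for arg in node.args:
                for name in ast.walk(arg):
                    if isinstance(name, ast.Name) and any(
                        hint in name.id.lower() for hint in SENSITIVE_HINTS
                    ):
                        findings.append((node.lineno, name.id))
    return sorted(findings)


# Sample code under review; it is only parsed, never executed.
SAMPLE = '''
import logging
logger = logging.getLogger("app")

def handle(user, order_id):
    logger.info("processing order %s", order_id)
    logger.debug("user=%s", user)
    logger.error("auth failed for %s", user.email)
'''

findings = find_sensitive_log_calls(SAMPLE)
for line, name in findings:
    print(f"line {line}: '{name}' reaches a log sink")
```

Name matching like this is deliberately crude and will produce false positives and negatives; it only shows where such a check would sit in review.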

2) Data maps that drift: compliance documentation turns into fiction

If your org is subject to GDPR, HIPAA, or modern US state privacy laws, you’re expected to maintain accurate records of:

- What personal data you collect

- Where it’s stored

- What third parties receive it

- Why you process it (legal basis / purpose)

- Retention and minimization behavior

The operational reality is ugly: privacy teams run interviews, chase app owners, and maintain spreadsheets or GRC entries that are outdated the moment a new SDK is added.

The root cause: production-based mapping can’t reliably see what code could do—especially when SDKs, abstractions, and feature flags hide the actual flow.

What works better: continuously generated, evidence-backed data maps derived from the code itself. If the map updates when the code changes, it stays real.
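
One way to picture a code-derived data map is as a set of detected flows that gets compared against what the documentation claims. In the sketch below, the flow tuples, repo name, and destination names are hypothetical stand-ins for scanner output, not the format of any particular tool.

```python
from collections import defaultdict

# Hypothetical scanner output: (repo, data_type, destination).
detected_flows = [
    ("checkout", "email", "postgres"),
    ("checkout", "card_number", "payments-sdk"),
    ("checkout", "email", "analytics-sdk"),
]

# What the privacy team's records currently say (assumed for illustration).
documented = {
    "checkout": {("email", "postgres"), ("card_number", "payments-sdk")},
}


def build_data_map(flows):
    """Group detected flows by repository."""
    data_map = defaultdict(set)
    for repo, data_type, destination in flows:
        data_map[repo].add((data_type, destination))
    return dict(data_map)


def find_drift(code_map, documented_map):
    """Return flows present in code but missing from the documentation."""
    drift = {}
    for repo, flows in code_map.items():
        undocumented = flows - documented_map.get(repo, set())
        if undocumented:
            drift[repo] = sorted(undocumented)
    return drift


drift = find_drift(build_data_map(detected_flows), documented)
print(drift)
```

Run on every change, a diff like this would surface the new analytics flow at review time rather than during the next interview cycle.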

3) Shadow AI inside repos: “it’s just a small experiment”

Many companies now restrict which AI services can be used and what data can be sent to them. But experiments spread.

A pattern I’ve seen repeatedly: a developer adds an LLM SDK “just to test something,” then it becomes a dependency, then it’s used in a customer-facing flow, and suddenly you’re sending support tickets, chat transcripts, or user profiles into prompts.

The source content reports that scans commonly find AI SDKs in 5%–10% of repositories. That sounds small until you have 2,000 repos, where it works out to 100–200 repositories with AI integrations to govern.

The actual risk isn’t “AI exists.” The risk is:

- Unapproved model providers

- Unclear data handling terms

- Oversharing in prompts

- Missing user notice/consent alignment

- No technical enforcement of policies

What works better: treat AI integrations as a governed data egress path. Detect LLM prompt construction, trace which data types flow into prompts, and enforce allowlists at code review time.
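
A first step toward treating AI integrations as governed egress is simply knowing where they are. The sketch below finds direct imports of a few well-known LLM packages in Python source. The package list is illustrative and incomplete, and the approved set is a made-up policy for the example.

```python
import ast

# Illustrative, incomplete list of LLM-related package roots.
AI_PACKAGES = {"openai", "anthropic", "langchain"}
# Hypothetical organizational allowlist of approved SDKs.
APPROVED = {"openai"}


def find_ai_imports(source: str) -> set:
    """Return the AI package roots imported directly by the given source."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            modules = [node.module]  # absolute "from x import y" only
        else:
            continue
        for module in modules:
            root = module.split(".")[0]
            if root in AI_PACKAGES:
                found.add(root)
    return found


# Sample file contents; parsed only, so the packages need not be installed.
SAMPLE = """
import os
import anthropic
from openai import OpenAI
from langchain.chains import LLMChain
"""

found = find_ai_imports(SAMPLE)
unapproved = sorted(found - APPROVED)
print("unapproved AI SDKs:", unapproved)
```

This only sees direct imports in source files. Transitive dependencies would need lockfile or package-manifest analysis on top.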

What “privacy-by-design” looks like in a modern AI development pipeline

Answer first: Privacy-by-design in 2025 means automated controls in IDE + CI that detect sensitive data flows, block risky sinks, and generate compliance evidence continuously.

A lot of teams say they want privacy-by-design. Fewer teams operationalize it.

Here’s a practical blueprint that scales without turning engineers into paperwork machines.

Step 1: Classify data types the way engineers actually work

Engineers don’t think in legal terms; they think in variables and payloads.

Define detection categories that match how data appears in code:

- Authentication secrets (API keys, OAuth tokens, session IDs)

- Direct identifiers (email, phone, national IDs)

- Financial (cardholder data)

- Health-related (PHI)

- Location and device identifiers

Good implementations track 100+ sensitive data types and treat them differently. A token in logs is not the same risk as a city name.
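
To make the idea of treating data types differently concrete, here is a minimal sketch that maps field names to a category and a severity. The categories follow the list above; the patterns and severity levels are assumptions chosen for illustration, and substring matching on names is a heuristic that will misfire on some identifiers.

```python
import re
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Illustrative rules only: (category, severity, name pattern). First match wins.
RULES = [
    ("auth_secret", Severity.HIGH, re.compile(r"api_?key|token|session_?id|password")),
    ("direct_identifier", Severity.HIGH, re.compile(r"email|phone|national_?id")),
    ("financial", Severity.HIGH, re.compile(r"card_?number|cvv")),
    ("health", Severity.HIGH, re.compile(r"diagnosis|medication")),
    ("location_device", Severity.MEDIUM, re.compile(r"latitude|longitude|device_?id")),
    ("coarse_location", Severity.LOW, re.compile(r"city|country")),
]


def classify(field_name: str):
    """Return (category, severity) for a field name, or None if nothing matches."""
    lowered = field_name.lower()
    for category, severity, pattern in RULES:
        if pattern.search(lowered):
            return category, severity
    return None


for name in ("apiKey", "billing_city", "order_total"):
    print(name, "->", classify(name))
```

Keeping severity as an ordered value lets later policy steps say "block at HIGH, warn at MEDIUM" without re-deriving what each data type means.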

Step 2: Track data flow, not just patterns

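Regex-only scanning catches obvious strings, such as a hard-coded key, but misses leaks where a sensitive value passes through variables, helper functions, or object fields before it reaches a sink.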

What you want is data flow analysis:

- Follow data through functions and files

- Understand transformations (hashing, redaction, truncation)

- Recognize sanitization logic

- Identify when sensitive data reaches a sink

This matters a lot for AI prompts because the prompt often gets built across multiple variables and helper functions.
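
The sketch below shows the core idea of taint tracking in a heavily simplified form: taint spreads through assignments, a sanitizer call clears it, and a finding is raised when a tainted variable reaches a sink. The source, sanitizer, and sink names are hypothetical, and the analysis only handles straight-line top-level statements. Following data across functions and files, as described above, needs a much more capable engine.

```python
import ast

SOURCES = {"email", "ssn", "token"}      # hypothetical sensitive variable names
SANITIZERS = {"redact", "hash_value"}    # hypothetical sanitizer functions
SINKS = {"send_prompt", "log"}           # hypothetical sink functions


def names_in(node: ast.AST) -> set:
    """All variable names referenced anywhere inside an expression."""
    return {n.id for n in ast.walk(node) if isinstance(n, ast.Name)}


def is_sanitized(node: ast.AST) -> bool:
    """True when the whole expression is a call to a known sanitizer."""
    return (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id in SANITIZERS
    )


def find_tainted_sinks(source: str) -> list:
    """Return (line, sink, tainted_names) for each sink call fed tainted data."""
    tainted = set(SOURCES)
    findings = []
    for stmt in ast.parse(source).body:
        if isinstance(stmt, ast.Assign) and isinstance(stmt.targets[0], ast.Name):
            target = stmt.targets[0].id
            if not is_sanitized(stmt.value) and names_in(stmt.value) & tainted:
                tainted.add(target)      # taint propagates through the assignment
            else:
                tainted.discard(target)  # sanitized or clean value
        elif isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Call):
            call = stmt.value
            if isinstance(call.func, ast.Name) and call.func.id in SINKS:
                leaked = set()
                for arg in call.args:
                    if not is_sanitized(arg):
                        leaked |= names_in(arg) & tainted
                if leaked:
                    findings.append((stmt.lineno, call.func.id, sorted(leaked)))
    return findings


# Sample code under review; parsed only, never executed.
SAMPLE = '''
greeting = "Hello " + email
prompt = f"Summarize this ticket for {greeting}"
safe_prompt = redact(prompt)
send_prompt(prompt)
send_prompt(safe_prompt)
'''

findings = find_tainted_sinks(SAMPLE)
print(findings)
```

Note that a regex looking for the word "email" near `send_prompt` would miss this case entirely, because the value arrives through two intermediate variables.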

Step 3: Put guardrails where developers live: IDE + CI

If the first time an engineer sees a privacy issue is after deployment, you’ve trained them to ignore privacy.

Put feedback in:

- IDE extensions (fast, local feedback)

- Pre-commit hooks (optional, but effective)

- CI checks (non-negotiable enforcement)

- PR annotations (actionable, reviewable)

A practical policy:

- Warn in the IDE

- Block on merge for high-severity flows (tokens to logs, PII to unapproved third parties, sensitive fields to LLM prompts)
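
The warn-then-block policy can be expressed as a small decision function. This is a sketch under assumed names: the `Finding` shape, the stage labels, and the high-severity pairs are illustrative, taken from the examples in the policy above rather than from any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    data_type: str  # e.g. "token", "pii", "sensitive_field"
    sink: str       # e.g. "log", "unapproved_third_party", "llm_prompt"


# High-severity (data_type, sink) pairs, mirroring the policy examples above.
HIGH_SEVERITY = {
    ("token", "log"),
    ("pii", "unapproved_third_party"),
    ("sensitive_field", "llm_prompt"),
}


def decide(finding: Finding, stage: str) -> str:
    """Return 'warn' or 'block' for a finding at a given pipeline stage."""
    if stage == "ide":
        return "warn"  # fast local feedback, never blocking
    if stage == "ci":
        key = (finding.data_type, finding.sink)
        return "block" if key in HIGH_SEVERITY else "warn"
    raise ValueError(f"unknown stage: {stage}")


leak = Finding("token", "log")
print(decide(leak, "ide"), decide(leak, "ci"))
```

A CI job could translate any "block" decision into a non-zero exit code so the merge check fails, while warnings are posted as PR annotations.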

Step 4: Generate compliance evidence automatically

Privacy teams need artifacts like:

- Records of Processing Activities (RoPA)

- Privacy Impact Assessments (PIA)

- Data Protection Impact Assessments (DPIA)

Instead of interviews and spreadsheets, generate drafts pre-filled with detected flows:

- Data categories collected

- Storage locations

- Third-party recipients

- AI integrations involved

- Identified risks and suggested controls

That turns compliance from a quarterly fire drill into a continuous process.
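
As a sketch of what a pre-filled draft might look like, the function below turns detected flows into a RoPA-style record with the fields listed above. The `Flow` structure, the system name, and the destinations are hypothetical, and the output is explicitly a draft for human review, not a finished compliance artifact.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    data_category: str
    destination: str
    kind: str  # "storage", "third_party", or "ai" (assumed taxonomy)


def draft_ropa(system: str, flows: list) -> dict:
    """Build a draft record of processing from detected flows."""

    def destinations(kind: str) -> list:
        return sorted({f.destination for f in flows if f.kind == kind})

    risks = sorted(
        f"{f.data_category} sent to AI service {f.destination}"
        for f in flows
        if f.kind == "ai"
    )
    return {
        "system": system,
        "data_categories": sorted({f.data_category for f in flows}),
        "storage_locations": destinations("storage"),
        "third_party_recipients": destinations("third_party"),
        "ai_integrations": destinations("ai"),
        "identified_risks": risks,
        "status": "DRAFT: requires privacy team review",
    }


flows = [
    Flow("email", "postgres", "storage"),
    Flow("email", "analytics-sdk", "third_party"),
    Flow("support_ticket", "llm-provider", "ai"),
]

record = draft_ropa("helpdesk", flows)
for field, value in record.items():
    print(f"{field}: {value}")
```

Legal basis and retention still need a human answer; the point is that the factual sections start from evidence in the code instead of from an interview.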

How AI-driven code scanning supports AI governance (without slowing teams)

Answer first: AI governance becomes enforceable when you can automatically detect AI SDKs, map prompt-bound data, and apply policy allowlists at pull request time.

A lot of “AI governance” programs stop at policy PDFs. Policies don’t stop data from entering prompts.

To make governance real, implement three controls:

- AI integration inventory: detect direct and indirect LLM/agent frameworks across repos (including hidden dependencies).

- Prompt-boundary protection: treat an LLM prompt as a sensitive sink, like a network egress.

- Allowlist policies: define which data types are allowed for which AI services (and block everything else by default).

If you do this well, engineers still move fast. They just stop making the same avoidable mistakes.

A useful one-liner for leadership: “We don’t govern AI by banning it—we govern it by controlling which data can reach it.”
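
The allowlist control described above can be sketched as a default-deny lookup: a data type may reach an AI service only if it is explicitly listed for that service. The service name and data type labels here are invented for the example.

```python
# Hypothetical policy: which data types each AI service may receive.
ALLOWLIST = {
    "approved-llm": {"product_name", "ticket_category"},
}


def check_prompt_flow(service: str, data_types: list) -> list:
    """Return the data types that are NOT allowed to reach the given service.

    Unknown services have an empty allowlist, so everything is denied.
    """
    allowed = ALLOWLIST.get(service, set())
    return sorted(set(data_types) - allowed)


violations = check_prompt_flow("approved-llm", ["ticket_category", "email"])
unknown = check_prompt_flow("experimental-llm", ["product_name"])
print(violations, unknown)
```

Because unlisted services and unlisted data types both fail the check, a new experiment has to be added to the policy before it can ship, which is where the ownership conversation happens.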

Practical checklist: ship faster and reduce privacy risk

Answer first: Start with a small set of high-impact rules that prevent the most expensive classes of incidents.

If you’re rolling this out in Q1 planning, don’t start with 200 policies. Start with 8–12 rules that map to real incident cost.

Here’s a strong starter set:

- Block plaintext secrets (API keys, tokens) from logs

- Block PII objects from debug logging

- Block sensitive fields from analytics events unless explicitly approved

- Block PHI from being written to local disk or client storage

- Flag new third-party SDK additions that receive user data

- Detect AI SDKs/frameworks and require ownership tags

- Block sensitive data types from LLM prompts by default

- Require explicit sanitization functions for prompt inputs

- Generate an updated data map on every release

- Auto-create audit evidence packets for high-risk systems

If you implement only the first, second, and seventh rules well (secrets out of logs, PII out of debug logging, and sensitive data out of LLM prompts by default), you’ll prevent a surprising number of real-world incidents.

What to do next if you want fewer incidents (and fewer compliance surprises)

Most teams don’t need another dashboard. They need earlier control.

If you’re leading AppSec, privacy engineering, GRC, or platform engineering, a solid next step is to run a short pilot that answers three questions in under two weeks:

- How much sensitive data is flowing into logs today (from code paths)?

- Which repos contain AI integrations, and what data types reach prompts?

- How far off is your current data map from what the code actually does?

Once you can measure those, it gets much easier to justify budget and to set policy that engineers won’t hate.

Security teams keep saying they want to “shift left.” I think the better framing is: put prevention where the change happens. Code is where the change happens.

What would change in your org if every pull request had to prove, automatically, that it doesn’t leak sensitive data—or send it to an unapproved AI service?