A $0 authorization can be an early warning for cyber-espionage. Learn how AI-driven anomaly detection links card testing to downstream attacks.

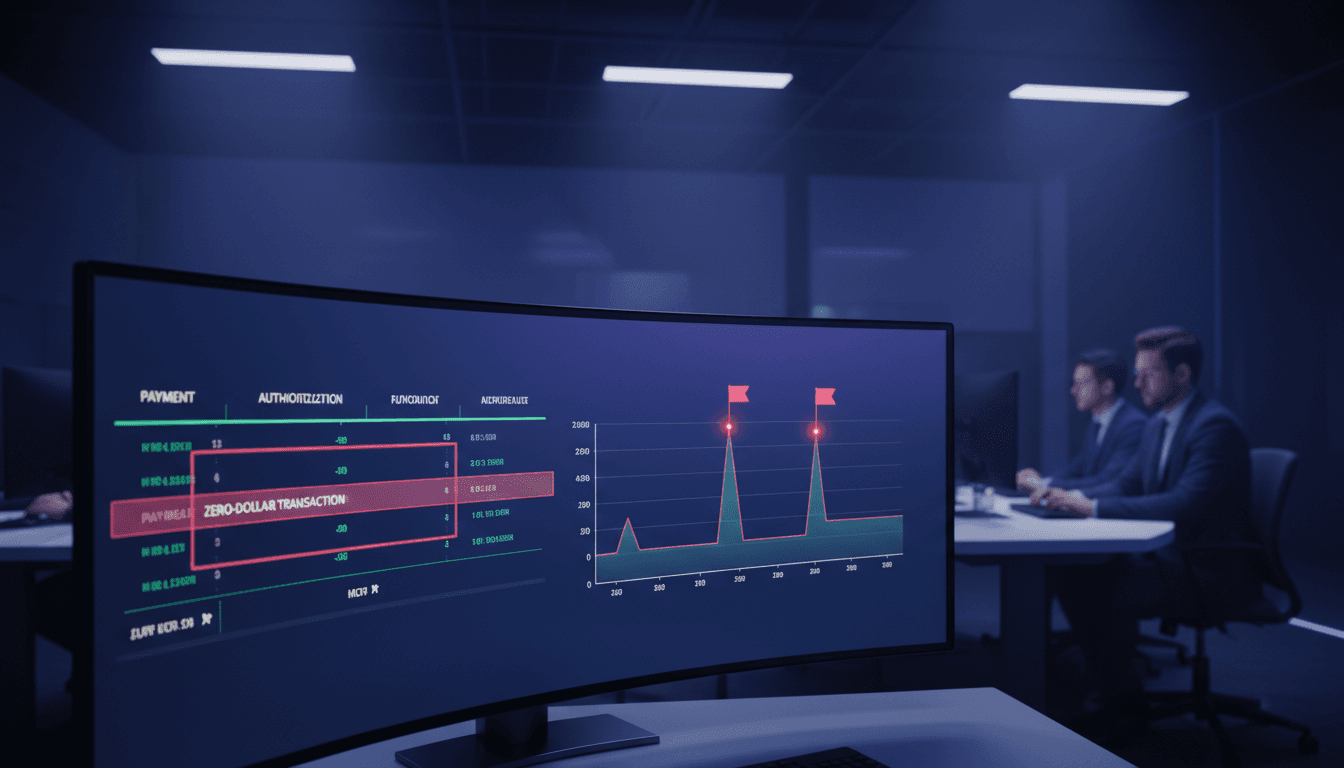

The $0 Transaction AI Flags Before It’s Too Late

A $0 payment authorization doesn’t look like an attack. It looks like noise.

Yet in late 2025, a pattern of zero-dollar and low-signal card activity appeared before an attempted purchase of access to an AI platform, and that activity aligned with public reporting on a Chinese state-sponsored espionage effort. The point isn’t that every $0 transaction equals a nation-state operation. The point is sharper: tiny anomalies in payment rails can be early-warning signals for high-impact cyber campaigns, especially when AI platforms and cloud services are the end target.

This post is part of our AI in Cybersecurity series, and it covers a practical lesson many security teams still miss: fraud telemetry (like card testing) is security telemetry. Treat it that way and you can catch threats earlier, sometimes weeks earlier when attackers let validated cards age before using them.

Why a $0 transaction is a serious security signal

A $0 authorization is often used for legitimate reasons—card validation, account verification, or checking whether a card is active. Attackers use the same mechanism for a different purpose: confirming stolen cards work before spending them.

Here’s what makes that security-relevant, not just fraud-relevant: a validated payment method can be used to create accounts, buy access, rent infrastructure, and mask attribution. When the downstream objective is cyber-espionage or intrusion enablement, the payment event is no longer an isolated fraud incident; it’s a step in the attack chain.

In the reported case, analysts observed activity consistent with card-testing services (including Chinese-operated testing infrastructure) preceding an attempted purchase on an AI platform. Even though the platform detected and blocked the attempt, the timeline highlights something defenders can operationalize:

Card-testing patterns can function as leading indicators for downstream cyber operations.

That’s an AI-in-cybersecurity story in a nutshell: small anomalies, correlated across datasets, revealing intent.

The fraud kill chain maps cleanly to the cyber kill chain

Payment fraud tends to follow repeatable mechanics. And repeatability is exactly what detection models love.

The observed progression: compromise → validation → resale → cashout

The source incident described a “textbook” progression:

- Compromised card appears in an authorization at a merchant known to be abused for testing

- Aging period, then another test (attackers check that the card is still active)

- Additional tests (often consistent with resale and buyers verifying the card works)

- Attempted purchase on a target platform (in this case, an AI platform)

What matters for security leaders: the first 2–3 steps happen before the organization sees the final “bad” transaction. That gives you a window to act.
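The progression above can be expressed as an ordered pattern over card events. The sketch below is a minimal illustration, assuming you already label each event as either a tester-merchant authorization or a purchase; `CardEvent`, `early_warning`, the `kind` labels, and the one-day `min_age` are hypothetical names and values, not details from the source reporting. It flags a card when a retest follows the first test after an aging gap and before any purchase, which is the window to act described here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class CardEvent:
    card_id: str    # tokenized card reference (hypothetical field)
    ts: datetime    # when the authorization or purchase happened
    kind: str       # "tester_auth" or "purchase" (hypothetical labels)

def early_warning(events, min_age=timedelta(days=1)):
    """Return card_ids that show test -> aging gap -> retest before any purchase."""
    by_card = {}
    for ev in sorted(events, key=lambda e: e.ts):
        by_card.setdefault(ev.card_id, []).append(ev)
    flagged = set()
    for card_id, evs in by_card.items():
        first_test = None
        for ev in evs:
            if ev.kind == "purchase":
                break  # the pre-purchase window has closed for this card
            if ev.kind != "tester_auth":
                continue
            if first_test is None:
                first_test = ev.ts
            elif ev.ts - first_test >= min_age:
                flagged.add(card_id)  # retest after an aging period
                break
    return flagged
```

Two tests an hour apart do not trigger it, and neither does a retest that only happens after the purchase; both are deliberate, so the flag fires only while there is still time to intervene.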

Where AI detection fits (and where it usually fails)

Most organizations still rely on a narrow lens:

- Fraud teams look at payments.

- Security teams look at logins, endpoints, and network.

- Risk teams look at compliance and geography.

Attackers don’t respect org charts. They move across systems.

AI-based anomaly detection is most effective when you feed it cross-domain signals:

- Payment attempts (including $0 auths)

- Account creation and identity proofing signals

- IP reputation and hosting patterns

- Device fingerprint changes

- Velocity indicators (how quickly behaviors escalate)

The failure mode I see most: companies deploy “AI” inside one tool (say, a SIEM or a fraud engine) and expect magic. The lift comes from correlation, not just classification.
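Correlation starts with merging each tool's signals into one timeline per entity. A minimal sketch, assuming every tool can emit records keyed by a common account ID; the `Signal` record, the `source` and `name` values, and the two-domain cut-off are hypothetical choices for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Signal:
    account_id: str
    source: str   # domain that produced it, e.g. "payments", "identity", "network"
    name: str     # e.g. "zero_auth", "datacenter_ip"
    ts: float     # epoch seconds

def correlate(streams):
    """Merge per-tool signal streams into one time-ordered list per account."""
    merged = defaultdict(list)
    for stream in streams:
        for sig in stream:
            merged[sig.account_id].append(sig)
    for sigs in merged.values():
        sigs.sort(key=lambda s: s.ts)
    return dict(merged)

def cross_domain_accounts(timelines, min_sources=2):
    """Accounts whose anomalies span at least `min_sources` distinct domains."""
    return {acct for acct, sigs in timelines.items()
            if len({s.source for s in sigs}) >= min_sources}
```

An account with a $0 auth in the payment stream and a datacenter IP in the network stream surfaces here, even though neither tool alone would rank it highly.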

How nation-state operators use the fraud ecosystem to reach AI platforms

The uncomfortable truth: the same carding ecosystem that fuels consumer fraud also supports advanced cyber operations. It’s cheap, scalable, and already industrialized.

Why stolen cards help attackers beyond “making money”

Using compromised payment cards to access Western platforms can:

- Reduce attribution: payment identity isn’t the operator’s identity

- Bypass geo restrictions: payment methods can unlock region-gated services

- Enable persistent access: accounts can be created and funded without linking to known operator infrastructure

- Buy time: even a short-lived paid account can be enough to run research, generate content, or automate tasks

When the target is an AI platform, the value isn’t just the subscription. It’s what that access can support: tooling, automation, research, and operational scale.

The “autonomous AI” angle changes the pacing, not the fundamentals

The source reporting references a campaign conducted primarily by an autonomous AI system. Whether you call it “agentic AI” or “autonomous tasking,” the security implication is straightforward:

- Attacks can iterate faster.

- Trial-and-error becomes cheaper.

- Operators can run more parallel experiments.

That makes early signals—like card testing—more valuable, because the time between “setup” and “action” keeps shrinking.

What to monitor: practical indicators your team can use this quarter

You don’t need a moonshot program to benefit from this. You need a tighter feedback loop between fraud signals and security controls.

High-signal payment indicators (especially for AI/SaaS platforms)

If you run a platform (AI, SaaS, developer tools, cloud, data), these indicators are worth treating as security events:

- $0 or $1 authorization bursts tied to new accounts

- Repeated authorization failures followed by one success

- Multiple cards used on one account in a short period

- One card used across many accounts (spray pattern)

- Country/IP mismatch between account profile, IP geolocation, and card BIN region

- Unusual “merchant descriptor” patterns linked to known testing behavior (when your processor provides it)
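Most of these indicators reduce to simple batch detectors. This is a minimal sketch over one batch of authorization records, assuming the batch already covers a short period; the `Auth` record and its fields (including `card_fp` as a card fingerprint or token), the indicator names, and every threshold (`window`, `burst`, `max_cards`, `max_accounts`) are assumptions to tune on your own data, not processor-defined values. For brevity it compares only BIN country with IP country (not the account profile) and omits account age and merchant descriptors, whose format depends on your processor.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Auth:
    account_id: str
    card_fp: str        # card fingerprint or token (hypothetical field)
    amount_cents: int
    approved: bool
    ts: float           # epoch seconds
    bin_country: str    # issuer country derived from the card BIN
    ip_country: str     # geolocated country of the request IP

def payment_indicators(auths, window=3600, burst=3, max_cards=2, max_accounts=3):
    """Return {account_id: set of indicator names} for one batch of auths."""
    flags = defaultdict(set)
    by_account = defaultdict(list)
    accounts_per_card = defaultdict(set)
    for a in sorted(auths, key=lambda x: x.ts):
        by_account[a.account_id].append(a)
        accounts_per_card[a.card_fp].add(a.account_id)
        if a.bin_country != a.ip_country:
            flags[a.account_id].add("bin_geo_mismatch")
    for acct, items in by_account.items():
        # $0 or $1 authorization burst: `burst` auths inside `window` seconds
        micro = [a.ts for a in items if a.amount_cents <= 100]
        for i in range(len(micro) - burst + 1):
            if micro[i + burst - 1] - micro[i] <= window:
                flags[acct].add("micro_auth_burst")
                break
        # Repeated failures (two or more) followed by one success
        fails = 0
        for a in items:
            if not a.approved:
                fails += 1
            elif fails >= 2:
                flags[acct].add("fail_then_success")
                break
            else:
                fails = 0
        # Multiple cards used on one account
        if len({a.card_fp for a in items}) > max_cards:
            flags[acct].add("many_cards_one_account")
    # One card used across many accounts (spray pattern)
    for accts in accounts_per_card.values():
        if len(accts) >= max_accounts:
            for acct in accts:
                flags[acct].add("card_spray")
    return dict(flags)
```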

Account and identity indicators to correlate with payment anomalies

Payment anomalies become far more actionable when paired with:

- New account creation from datacenter or hosting IP ranges

- Device fingerprint instability (new device every session)

- Disposable email patterns and low-reputation domains

- High-velocity API usage soon after signup

- Unusual prompt/tool usage that suggests recon or automation (for AI platforms)

A $0 auth is rarely decisive alone. Correlated with identity and behavior signals, it becomes a strong trigger.
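To make that concrete, here is a minimal scoring sketch, assuming signals arrive as short string names. The signal names, weights, bonus, and threshold are all hypothetical; the only property the sketch encodes is the one stated above: a $0 auth by itself stays below the review threshold, while the same auth plus a corroborating identity or behavior signal crosses it.

```python
# Hypothetical weights and threshold, chosen so a $0 auth alone cannot
# trigger review; calibrate against your own labeled outcomes.
WEIGHTS = {
    "zero_auth": 0.15,
    "datacenter_ip": 0.20,
    "unstable_device": 0.15,
    "disposable_email": 0.10,
    "api_burst_after_signup": 0.25,
}
CORRELATION_BONUS = 0.20
REVIEW_THRESHOLD = 0.50

def correlated_risk(signals):
    """Score signal names; payment plus non-payment evidence earns a bonus."""
    observed = {s for s in signals if s in WEIGHTS}
    score = sum(WEIGHTS[s] for s in observed)
    if "zero_auth" in observed and len(observed) > 1:
        score += CORRELATION_BONUS  # corroborated by identity or behavior signals
    return min(1.0, score)
```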

Mitigations that work (without wrecking your customer experience)

Defenders often assume they must choose between stopping fraud and keeping conversion high. That’s a false trade when you design controls as graduated friction.
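Graduated friction can be as simple as mapping a risk score to the least disruptive control that still covers it. A minimal sketch, assuming a 0 to 1 score from whatever rules or model you run; the `Friction` tiers and their cut-offs are hypothetical.

```python
from enum import Enum

class Friction(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"            # e.g. 3D Secure or extra verification
    LIMITED = "limited_access"     # progressive trust: reduced capabilities
    REVIEW = "manual_review"

# Hypothetical cut-offs, highest first; tune against conversion and loss data.
TIERS = [(0.8, Friction.REVIEW), (0.6, Friction.LIMITED), (0.3, Friction.STEP_UP)]

def friction_for(score: float) -> Friction:
    """Return the first tier whose threshold the score meets, else ALLOW."""
    for threshold, action in TIERS:
        if score >= threshold:
            return action
    return Friction.ALLOW
```

Most legitimate users score low and never see a challenge; friction concentrates on the small share of sessions where the signals stack up.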

For financial institutions: treat tester merchants like compromise beacons

If you’re an issuer or work in fraud operations:

- Score cards that interact with known tester merchants as likely compromised

- Trigger step-up verification or temporary holds on high-risk attempts

- Proactively reissue cards when repeated tester patterns appear

- Feed confirmed tester interactions back into models as labeled fraud precursors

This is one of the rare areas where you can get high precision: tester-merchant interactions aren’t random.
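A minimal issuer-side sketch of the scoring and reissue controls, assuming you maintain your own set of merchant IDs known to be abused for testing. `KNOWN_TESTER_MERCHANTS`, the IDs in it, `IssuerAuth`, and the two-hit reissue rule are illustrative assumptions, not a published list or an industry standard.

```python
from dataclasses import dataclass

# Hypothetical set; in practice built from your own labeled fraud data
# or an intelligence feed of merchants known to be abused for card testing.
KNOWN_TESTER_MERCHANTS = {"m_tester_01", "m_tester_02"}

@dataclass
class IssuerAuth:
    card_id: str
    merchant_id: str
    amount_cents: int

def issuer_actions(auths, reissue_after=2):
    """Return {card_id: action} for cards seen at known tester merchants."""
    hits = {}
    for a in auths:
        if a.merchant_id in KNOWN_TESTER_MERCHANTS:
            hits[a.card_id] = hits.get(a.card_id, 0) + 1
    actions = {}
    for card_id, n in hits.items():
        # One hit: treat as likely compromised and add step-up or a hold.
        # Repeated hits match the retest/resale pattern: reissue proactively.
        actions[card_id] = "reissue" if n >= reissue_after else "step_up_or_hold"
    return actions
```

The `hits` counts are also natural labels to feed back into models as fraud precursors.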

For AI platforms and merchants: add friction where it counts

If your product can be misused (and most powerful platforms can), these controls are practical and effective:

- 3D Secure / step-up authentication when risk is elevated

- Progressive trust: limit capabilities for brand-new accounts until signals look clean

- BIN + geo policy: not hard blocks, but risk boosts when card region and access region diverge

- Stronger velocity controls on signups, payment retries, and card changes

- Account linking detection: catch “constellations” of accounts sharing device traits, IP blocks, or payment fingerprints

Here’s what works in practice: don’t punish everyone. Punish the patterns.
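Account linking is a connected-components problem: two accounts that share any trait belong to the same constellation, and links are transitive. The sketch below uses union-find, assuming each account's traits are already normalized into strings; `link_accounts` and the `dev:`/`ip:`/`pay:` prefixes are hypothetical. It treats every trait as equally strong, which real deployments usually should not (a shared IP block can be carrier NAT, while a shared payment fingerprint is much stronger evidence).

```python
def link_accounts(accounts):
    """Group accounts that share any trait; return clusters of size > 1.

    `accounts` maps account_id -> set of trait strings, for example
    "dev:abc", "ip:203.0.113.0/24", "pay:fp123" (hypothetical encoding).
    """
    parent = {a: a for a in accounts}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    first_owner = {}  # trait -> first account seen with it
    for acct, traits in accounts.items():
        for t in traits:
            if t in first_owner:
                union(first_owner[t], acct)
            else:
                first_owner[t] = acct

    clusters = {}
    for acct in accounts:
        clusters.setdefault(find(acct), set()).add(acct)
    return [c for c in clusters.values() if len(c) > 1]
```

Here `a1` and `a4` share nothing directly, yet they end up in one cluster through `a3`, which is exactly the kind of indirect link analysts miss when reviewing accounts one at a time.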

Where AI improves these mitigations

Rule-based controls are necessary but brittle. Attackers adapt.

AI helps in three specific ways:

- Sequence detection: recognizing order and timing (test → age → retest → spend)

- Entity resolution: linking “different” accounts that are actually one operator (or one operation)

- Risk scoring with context: weighing payment signals alongside login, device, and behavioral features

If you’re building or buying AI-powered cybersecurity tooling, ask a blunt question: Can it model sequences and relationships, or does it only classify single events? The first catches campaigns. The second catches leftovers.

A simple playbook: turning fraud telemetry into security outcomes

Security teams love alerts. Business teams love approvals. You need a workflow that respects both.

Step 1: Define “payment anomalies” as security-relevant events

Create a shared taxonomy so fraud ops and SecOps mean the same thing when they say “tester.” Include:

- $0 auth

- micro-charge patterns

- retry velocity

- BIN/geo mismatch
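One way to make the taxonomy genuinely shared is to define it once in code that both fraud and SecOps tooling import. A minimal sketch; the `PaymentAnomaly` values, the $1 (100-cent) micro-charge ceiling, and the retry limit are hypothetical defaults.

```python
from enum import Enum

class PaymentAnomaly(Enum):
    ZERO_AUTH = "zero_auth"
    MICRO_CHARGE = "micro_charge"
    RETRY_VELOCITY = "retry_velocity"
    BIN_GEO_MISMATCH = "bin_geo_mismatch"

def classify(amount_cents, retries_last_hour, bin_country, ip_country,
             micro_max_cents=100, retry_limit=3):
    """Tag one payment attempt with every taxonomy label it matches."""
    labels = set()
    if amount_cents == 0:
        labels.add(PaymentAnomaly.ZERO_AUTH)
    elif amount_cents <= micro_max_cents:
        labels.add(PaymentAnomaly.MICRO_CHARGE)
    if retries_last_hour > retry_limit:
        labels.add(PaymentAnomaly.RETRY_VELOCITY)
    if bin_country != ip_country:
        labels.add(PaymentAnomaly.BIN_GEO_MISMATCH)
    return labels
```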

Step 2: Build a correlation rule before you build a model

Before you invest in ML, start with correlation that’s easy to explain:

- Payment anomaly + new account + datacenter IP → step-up / queue for review

- Payment anomaly + API burst within 30 minutes → throttle / require verification

- Payment anomaly + multiple accounts per device → block cluster and investigate
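These three rules fit in an ordered decision function, which keeps them explainable and auditable. A minimal sketch, assuming an upstream job has already computed the context fields; `AccountContext`, its fields, the 24-hour definition of a new account, and the action strings are assumptions. Rules are checked from most to least severe so each account gets exactly one action, and anomalies that match no rule are only logged, so they still accumulate as labeled examples for later modeling.

```python
from dataclasses import dataclass
from typing import Optional

NEW_ACCOUNT_HOURS = 24  # hypothetical definition of a "new" account

@dataclass
class AccountContext:
    payment_anomaly: bool
    account_age_hours: float
    datacenter_ip: bool
    minutes_to_api_burst: Optional[float]  # None if no API burst was seen
    accounts_on_device: int

def correlation_action(ctx: AccountContext) -> str:
    """Apply the three correlation rules, most severe first."""
    if not ctx.payment_anomaly:
        return "allow"
    if ctx.accounts_on_device > 1:
        return "block_cluster_and_investigate"
    if ctx.minutes_to_api_burst is not None and ctx.minutes_to_api_burst <= 30:
        return "throttle_and_require_verification"
    if ctx.account_age_hours < NEW_ACCOUNT_HOURS and ctx.datacenter_ip:
        return "step_up_or_queue_for_review"
    return "log_only"  # uncorroborated anomaly: keep it as labeled data
```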

You’ll get value fast, and you’ll generate labeled data for better modeling later.

Step 3: Close the loop with measurable outcomes

Whatever the business goal, you still need internal proof that this works. Track:

- Time from first tester signal to intervention (hours/days)

- Reduction in downstream chargebacks or abuse tickets

- Number of abusive accounts prevented per 1,000 signups

- Analyst time saved through automated clustering and enrichment
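The first three metrics reduce to simple arithmetic once incidents are timestamped and counted. A minimal sketch with hypothetical function names; using the median for time-to-intervention is a design choice (it resists a few long-running outliers), not a requirement, and analyst time saved is left out because it depends on how your team logs work.

```python
from datetime import datetime
from statistics import median
from typing import Iterable, Tuple

def median_hours_to_intervention(cases: Iterable[Tuple[datetime, datetime]]) -> float:
    """Median hours from first tester signal to intervention across incidents."""
    hours = [(done - first).total_seconds() / 3600 for first, done in cases]
    return median(hours)

def pct_reduction(before: int, after: int) -> float:
    """Percent drop in chargebacks or abuse tickets between two equal periods."""
    return 100.0 * (before - after) / before

def prevented_per_1000(prevented: int, signups: int) -> float:
    """Abusive accounts prevented per 1,000 signups."""
    return 1000.0 * prevented / signups
```

For example, 12 abusive accounts blocked out of 8,000 signups works out to 1.5 per 1,000.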

When you can say “we stopped 40 abusive accounts before they accessed premium capabilities,” budget conversations get easier.

What this means for 2026: defenders can’t ignore “small” signals anymore

The $0 transaction story is really about signal interpretation. Attackers use boring infrastructure because it blends in. Defenders win by treating cross-domain anomalies as a single narrative, not separate tickets.

AI in cybersecurity earns its keep here: it’s not replacing analysts; it’s connecting dots at machine speed—especially where payments, identity, and access intersect.

If you run an AI platform, a SaaS product, or any service that can be operationally useful to attackers, now’s the moment to combine fraud intelligence with security analytics. The next campaign won’t announce itself with malware. It may start with a $0 authorization.

Where do you have “boring” telemetry—payments, retries, verifications—that your security program still treats as someone else’s problem?