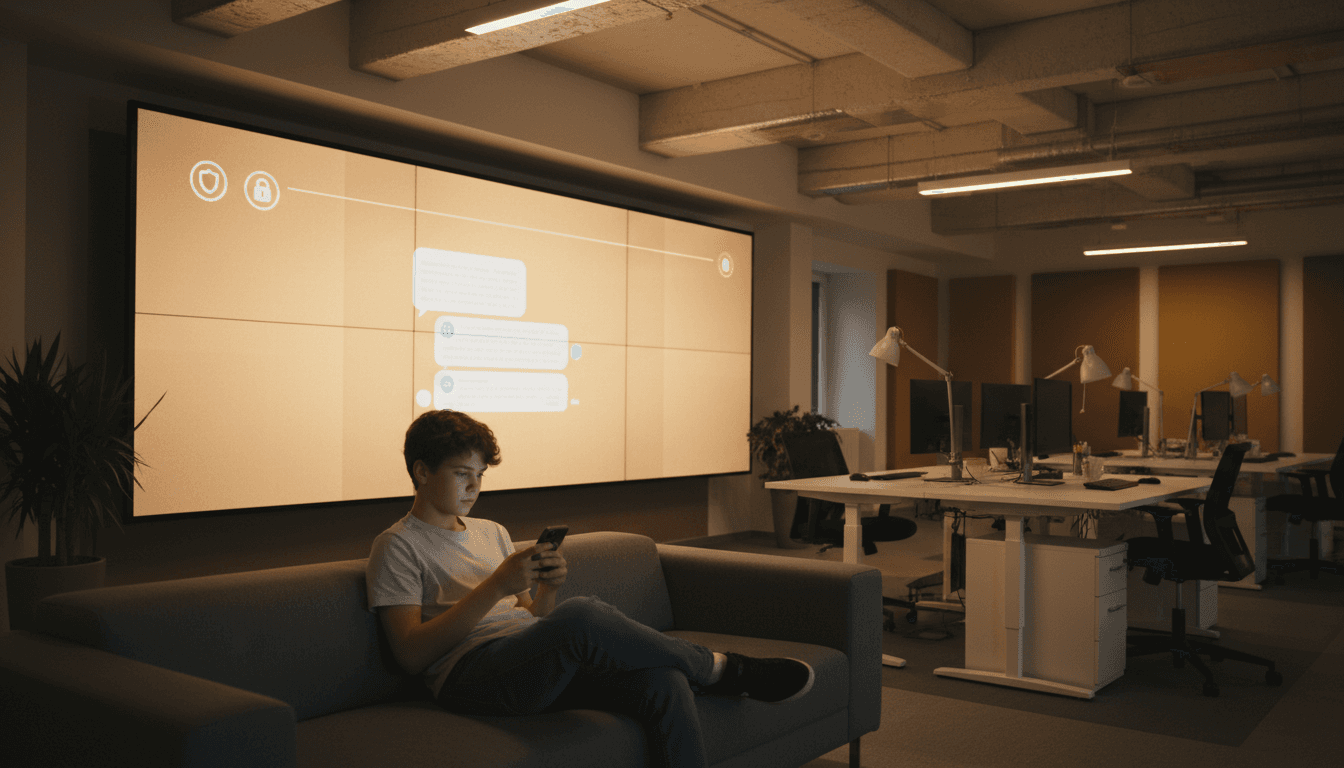

Teen AI chatbot habits expose safety risks—and lessons—for customer service chatbots. Build responsible personalization without creating harmful engagement loops.

Teen AI Chatbot Habits: Safety Lessons for CX Teams

Three in 10 U.S. teens use AI chatbots every day. That single behavior shift—daily, habitual, conversational use—should make anyone running a contact center or customer support chatbot sit up straighter.

That’s because teens aren’t treating chatbots like “search with nicer sentences.” They’re treating them like a relationship surface: always available, responsive, personalized, and increasingly sticky. The same design patterns that keep a teen talking to a chatbot can also keep a customer inside your support experience longer than necessary or, worse, lead them to trust it too much.

If you’re building AI in customer service & contact centers, this is the moment to get serious about responsible personalization. Not the kind that pads engagement metrics, but the kind that earns trust, reduces risk, and still delivers fast resolutions.

What teen daily chatbot use really signals

Daily teen usage isn’t a novelty; it’s a product signal that conversational AI has crossed into “default behavior.” When a technology becomes a daily habit, users stop treating it as a tool and start expecting something closer to a companion-like utility.

For customer service, that expectation is already showing up in three ways:

- Customers expect memory and continuity. They want the bot to remember context across sessions, like it’s picking up a conversation.

- Customers expect empathy on demand. Not therapy. But they do expect language that feels understanding and human.

- Customers will disclose more than you think. Teens often start with basic questions, then drift into personal topics. Adults do this too—especially when stressed, angry, or embarrassed.

Here’s the stance I’ll take: If your support bot is good enough to feel “safe,” it’s good enough to become addictive—or dangerously over-trusted—unless you design guardrails.

The engagement trap: “helpful” can quietly become sticky

Most chatbot teams optimize for containment rate, average handle time, deflection, and CSAT. Reasonable. But the teen pattern highlights a blind spot: systems that are pleasant and always available can create habitual usage even when the user doesn’t need them.

In media and entertainment, personalization is often built to maximize watch time. In contact centers, the analog is maximizing “self-service time.” That’s not inherently bad—until your bot starts behaving like it’s trying to keep the user chatting.

A practical metric shift for CX leaders: track Time-to-Resolution (lower is better) separately from Time-in-Chat (higher is not automatically better). If “time in chat” rises while resolution stays flat, you may be drifting into engagement-for-engagement’s-sake.
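Here’s a minimal Python sketch that makes the split concrete by comparing two periods. It assumes you can export one record per issue, with total minutes in chat and hours to resolution (None if still open). The `Issue` record, the `summarize` and `engagement_drift` helpers, and the 10% / 2-point thresholds are all hypothetical and should be tuned to your own baselines.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Issue:
    # Hypothetical export format: one record per customer issue.
    minutes_in_chat: float                # total chat time spent on this issue
    hours_to_resolution: Optional[float]  # None if the issue is still open


def summarize(issues: List[Issue]) -> dict:
    """Report time-in-chat and resolution metrics side by side, never blended into one score."""
    resolved = [i.hours_to_resolution for i in issues if i.hours_to_resolution is not None]
    return {
        "median_minutes_in_chat": median(i.minutes_in_chat for i in issues),
        "resolution_rate": len(resolved) / len(issues),
        "median_hours_to_resolution": median(resolved) if resolved else None,
    }


def engagement_drift(before: List[Issue], after: List[Issue],
                     chat_growth: float = 0.10, min_resolution_gain: float = 0.02) -> bool:
    """True when time-in-chat rose noticeably but the resolution rate stayed roughly flat."""
    b, a = summarize(before), summarize(after)
    chat_rose = a["median_minutes_in_chat"] > b["median_minutes_in_chat"] * (1 + chat_growth)
    resolution_flat = a["resolution_rate"] - b["resolution_rate"] < min_resolution_gain
    return chat_rose and resolution_flat
```

The drift stays visible because the two metrics are reported next to each other instead of being folded into a single “engagement” number.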

Why safety concerns are growing (and how that maps to CX risk)

Safety concerns around teen chatbot use tend to cluster into three buckets: privacy, psychological dependence, and harmful guidance. In a support setting, those map neatly to risks you can actually govern.

1) Privacy: oversharing is the default in chat

People reveal sensitive information in conversational interfaces because it feels like a private moment—even when it’s not. Teens are particularly prone to this; adults under stress aren’t far behind.

In a contact center, this creates predictable failure modes:

- Users paste account numbers, passwords, medical details, or addresses into a chat window.

- Bots trained on conversation logs accidentally ingest sensitive data.

- Agents receive escalations containing more personal context than they need.

Design move that works: build PII-aware redaction and “just-in-time” warnings.

A good support chatbot doesn’t just avoid collecting sensitive data—it actively nudges customers away from sharing it.

Examples of microcopy that reduces risk without feeling scolding:

- “For your security, don’t share passwords or full card numbers here.”

- “I can help with your order using the last 4 digits—no need to paste the full number.”
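Here’s a minimal Python sketch of redaction plus a just-in-time warning, using only the standard library. It relies on two illustrative rules. Digit runs that pass a Luhn checksum are treated as card numbers, and phrases like “password is X” are treated as stated passwords. The pattern names and the `redact` helper are hypothetical, and real PII detection needs far broader coverage than this.

```python
import re
from typing import List, Optional, Tuple

# Illustrative patterns only; production PII detection needs much broader coverage.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13-19 digits, optional space/dash separators
STATED_PASSWORD = re.compile(r"(?i)\b(password|passcode)(\s*(?:is\b|:|=)\s*)\S+")

WARNING = "For your security, don't share passwords or full card numbers here."


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to skip digit runs (like order numbers) that can't be card numbers."""
    total = 0
    for position, ch in enumerate(reversed(digits)):
        value = int(ch)
        if position % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def redact(message: str) -> Tuple[str, Optional[str]]:
    """Return (text safe to log or hand off, warning to show the user or None)."""
    hits: List[str] = []

    def mask_card(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group(0))
        if not luhn_valid(digits):
            return match.group(0)  # leave non-card numbers untouched
        hits.append("card")
        return f"[card ending {digits[-4:]}]"  # keep only the last 4 digits

    safe = CARD_CANDIDATE.sub(mask_card, message)
    safe, password_hits = STATED_PASSWORD.subn(r"\1\2[REDACTED]", safe)
    warning = WARNING if hits or password_hits else None
    return safe, warning
```

The redacted string is what goes to logs, training data, and agent handoffs. The warning is what the customer sees, right after they overshare.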

2) Dependence: when the bot becomes the coping mechanism

Coverage of the trend notes that teens may start with basic questions, but the relationship can become addictive. The mechanism isn’t mysterious: instant response + personalized tone + zero social friction.

In customer service, dependence shows up differently, but it’s still real:

- Customers return repeatedly to the bot for reassurance rather than resolution (“Is my refund really coming?” “Are you sure?”).

- The bot becomes the default emotional outlet for anger or anxiety.

- Users avoid human escalation even when it’s clearly needed.

Design move that works: implement confidence-based escalation and friction where appropriate.

If the model’s confidence is low, or the issue is high impact (refunds, cancellations, billing disputes), the bot should switch from “chatty helper” to “get this solved quickly.” That means:

- Shorter responses

- Clear options

- A prominent “talk to a person” path
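Here’s a minimal Python sketch of that switch. It assumes your NLU layer returns an intent label and a confidence score between 0 and 1. The intent names, the 0.7 threshold, and the `ResponseMode` settings are hypothetical placeholders, not values from any specific platform.

```python
from dataclasses import dataclass

# Hypothetical intent labels for the high-impact issues named in the text.
HIGH_IMPACT_INTENTS = {"refund", "cancellation", "billing_dispute"}


@dataclass(frozen=True)
class ResponseMode:
    max_sentences: int          # cap on reply length
    show_quick_options: bool    # render clear buttons/options instead of open-ended prose
    human_path_prominent: bool  # pin the "talk to a person" option at the top


CONVERSATIONAL = ResponseMode(max_sentences=5, show_quick_options=False, human_path_prominent=False)
RESOLVE_FAST = ResponseMode(max_sentences=2, show_quick_options=True, human_path_prominent=True)


def choose_mode(intent: str, confidence: float, min_confidence: float = 0.7) -> ResponseMode:
    """Switch from 'chatty helper' to 'get this solved quickly' on low confidence or high stakes."""
    if confidence < min_confidence or intent in HIGH_IMPACT_INTENTS:
        return RESOLVE_FAST
    return CONVERSATIONAL
```

Treating high-impact intents as “resolve fast” even at high confidence is deliberate. The cost of a chatty wrong answer on a refund is much higher than on an order-status check.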

3) Harm: unsafe advice, manipulation, and boundary confusion

Teen safety concerns often include chatbots giving bad advice, adopting an overly intimate tone, or failing to set boundaries. In CX, this can become:

- Incorrect policy guidance (“Yes, you can return that after 90 days”) that creates cost and customer rage.

- Over-promising outcomes (“I guarantee your refund today”) that the business can’t honor.

- Boundary confusion (“I’m always here for you”) that feels comforting, but is inappropriate for a commercial support setting.

Design move that works: add policy grounding and language boundaries.

A customer support chatbot should be explicit about what it can and can’t do:

- “I can help explain the policy and start a return. I can’t approve exceptions without a specialist.”

- “I’m here to help with your account. If you need urgent personal support, please contact local services.”
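Language boundaries are the easiest part to enforce mechanically. Here’s a minimal Python sketch that screens a draft reply before it’s sent, assuming you keep a reviewed list of phrases the bot must never use. The two rules come from the examples above; the rule names and the `boundary_violations` helper are hypothetical. This covers only phrasing. Policy grounding against your knowledge base is a separate check.

```python
import re
from typing import List

# Hypothetical rules based on the examples in the text; a real list should be reviewed by policy/legal.
BOUNDARY_RULES = {
    "faux_intimacy": re.compile(r"\b(?:i'?m|i am) always here for you\b", re.IGNORECASE),
    "over_promise": re.compile(r"\bi guarantee\b", re.IGNORECASE),
}


def boundary_violations(draft: str) -> List[str]:
    """Return the names of boundary rules that a draft reply breaks (empty list if none)."""
    normalized = draft.replace("\u2019", "'")  # treat curly apostrophes like straight ones
    return [name for name, pattern in BOUNDARY_RULES.items() if pattern.search(normalized)]
```

When a draft trips a rule, one reasonable option is to regenerate with the rule named in the prompt, or to fall back to an approved template, rather than sending it anyway.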

Responsible personalization: how to keep it helpful, not habit-forming

Personalization is the point—customers hate repeating themselves. But personalization without guardrails can mimic the same “sticky” dynamics we see in teen chatbot usage.

Here’s a practical framework I’ve used to evaluate responsible AI personalization in customer service.

The “3R” framework: Remember, Reduce, Refer

Remember (only what helps resolve issues).

- Store preferences like language choice, channel preference, accessibility needs.

- Store task context like product model, plan tier, warranty status.

- Avoid storing emotional disclosures or irrelevant personal details.
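One way to enforce “remember only what helps” is an allowlist applied before anything is written to the customer profile. Here’s a minimal Python sketch; the field names are hypothetical stand-ins for the preference and task-context examples above.

```python
from typing import Any, Dict

# Hypothetical allowlist mirroring the "Remember" examples: preferences plus task context.
REMEMBERABLE_FIELDS = frozenset({
    "language", "preferred_channel", "accessibility_needs",  # preferences
    "product_model", "plan_tier", "warranty_status",         # task context
})


def filter_memory(candidate: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only allowlisted fields; anything else (e.g., emotional disclosures) is never stored."""
    return {key: value for key, value in candidate.items() if key in REMEMBERABLE_FIELDS}
```

An allowlist fails closed. A kind of disclosure the team never anticipated is dropped by default instead of stored by default.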

Reduce (steps, time, and cognitive load).

- Use the remembered context to shorten flows.

- Offer next-best actions (“Want me to start a replacement or troubleshoot first?”).

- Summarize before escalation (“Here’s what I understood…”).
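Here’s a minimal Python sketch of the pre-escalation recap. It assumes the bot tracks a few confirmed facts in a structured object rather than passing the raw transcript along. `HandoffContext` and `handoff_summary` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HandoffContext:
    # Hypothetical structured state the bot has confirmed during the chat.
    issue: str
    steps_tried: List[str] = field(default_factory=list)
    customer_goal: str = ""


def handoff_summary(ctx: HandoffContext) -> str:
    """Build the "Here's what I understood..." recap shown before escalating."""
    tried = ", ".join(ctx.steps_tried) if ctx.steps_tried else "nothing yet"
    lines = [
        "Here's what I understood:",
        f"- Issue: {ctx.issue}",
        f"- Already tried: {tried}",
    ]
    if ctx.customer_goal:
        lines.append(f"- You'd like: {ctx.customer_goal}")
    return "\n".join(lines)
```

Showing the same recap to the customer and the agent lets the customer correct it before the handoff. It also keeps extra personal context out of the agent’s view.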

Refer (to humans or verified sources at the right moment).

- Escalate when confidence is low.

- Escalate when the customer signals frustration.

- Route to specialists when the issue is high-risk.
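The three Refer rules translate into a small routing function. This Python sketch assumes an upstream classifier supplies an issue type, a confidence score, and a sentiment score in [-1, 1]. The high-risk categories, the request phrases, and both thresholds are hypothetical and would need tuning against your own escalation data.

```python
from enum import Enum


class Route(Enum):
    BOT = "bot"
    HUMAN_AGENT = "human_agent"
    SPECIALIST = "specialist"


# Hypothetical values; tune against real escalation outcomes.
HIGH_RISK_ISSUES = {"fraud_report", "account_security", "legal_complaint"}
HUMAN_REQUEST_PHRASES = ("talk to a person", "speak to a human", "real person")


def route(issue_type: str, confidence: float, sentiment: float, message: str,
          min_confidence: float = 0.6, frustration_threshold: float = -0.5) -> Route:
    """Decide who handles the next turn: the bot, a general agent, or a specialist."""
    if issue_type in HIGH_RISK_ISSUES:
        return Route.SPECIALIST  # high-risk issues skip the bot regardless of confidence
    asked_for_human = any(phrase in message.lower() for phrase in HUMAN_REQUEST_PHRASES)
    frustrated = sentiment <= frustration_threshold or asked_for_human
    if confidence < min_confidence or frustrated:
        return Route.HUMAN_AGENT
    return Route.BOT
```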

This is the key: personalization should reduce time-to-resolution, not increase time-on-platform.

Guardrail checklist for customer service chatbots

If you’re planning an AI contact center roadmap for 2026, use this as a baseline:

- Data minimization: collect the smallest set of fields needed for the task.

- PII detection + redaction: protect logs, transcripts, agent handoffs.

- Policy grounding: responses constrained to approved policy and knowledge base.

- Tone constraints: no romantic/overly intimate language; no “dependency” cues.

- Escalation triggers: low confidence, repeated turns, strong negative sentiment.

- Auditability: conversation IDs, model versioning, and review workflows.

- User controls: clear “delete my chat,” “export transcript,” and “opt out” options.

What media & entertainment can teach CX about teen chatbot behavior

The media lens matters here: teen chatbot use is an audience behavior story. Media companies have spent years learning what increases retention (autoplay, endless scroll, personalized feeds), and some of those patterns can quietly slip into support experiences through “engagement metrics.”

Two transferable lessons:

Lesson 1: Personalization shapes identity, not just preferences

For teens, a chatbot that mirrors language and offers constant validation can become part of their self-narrative. In customer service, a bot that mirrors frustration can escalate conflict, while one that sounds falsely cheerful can feel dismissive.

A better practice is calibrated empathy:

- Validate the situation (“That’s frustrating—refund timing matters.”)

- Stay task-focused (“I can check the status and send an update.”)

- Avoid faux intimacy (“I’m always here for you”).

Lesson 2: Engagement loops don’t belong in support

In entertainment, engagement loops are the product. In customer support, the product is resolution.

If your bot is optimized like a feed, you’ll see:

- Too many follow-up prompts

- Overly long responses

- “By the way” tangents

- Suggesting extra features when someone is trying to cancel
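Those symptoms are concrete enough to lint for during QA review. Here’s a minimal Python sketch that flags feed-like replies. The word and question limits, the cue phrases, and the `cancellation` intent label are all hypothetical and would need calibrating against your own transcripts.

```python
from typing import List

# Hypothetical limits and cue phrases; calibrate against your own transcripts.
MAX_WORDS = 120
MAX_QUESTIONS = 1
TANGENT_CUES = ("by the way", "btw", "fun fact")
UPSELL_CUES = ("upgrade", "premium", "add-on", "new feature")


def feed_like_flags(reply: str, intent: str) -> List[str]:
    """Flag reply patterns that optimize for engagement rather than resolution."""
    text = reply.lower()
    flags = []
    if len(reply.split()) > MAX_WORDS:
        flags.append("too_long")
    if reply.count("?") > MAX_QUESTIONS:
        flags.append("too_many_follow_ups")
    if any(cue in text for cue in TANGENT_CUES):
        flags.append("tangent")
    if intent == "cancellation" and any(cue in text for cue in UPSELL_CUES):
        flags.append("upsell_during_cancel")
    return flags
```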

If you need a one-line principle to align the team:

The best support conversation is the one that ends quickly with trust intact.

Practical Q&A CX leaders are asking right now

Should we block teens from using customer service chatbots?

Blocking rarely works and often backfires. A better approach is age-aware experiences where regulations require it, plus universal safety design (PII redaction, escalation, tone boundaries) that protects everyone.

How do we measure whether our chatbot is becoming “addictive” or overused?

Add two operational metrics:

- Repeat-contact rate within 24–48 hours for the same issue

- Turns-to-resolution (number of back-and-forth messages before completion)

If those rise without improvements in successful resolution, your bot is creating friction—or fostering reassurance loops.
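Both metrics fall out of a plain contact log. Here’s a minimal Python sketch. It assumes each contact record carries a customer ID, a normalized issue label, a start time, a turn count, and a resolved flag. The `Contact` record and helper names are hypothetical. The window defaults to 48 hours but can be set to 24.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Contact:
    customer_id: str
    issue_key: str       # hypothetical normalized issue label, e.g. "refund_status"
    started_at: datetime
    turns: int           # back-and-forth messages in this contact
    resolved: bool


def repeat_contact_rate(contacts: List[Contact], window: timedelta = timedelta(hours=48)) -> float:
    """Share of contacts followed by another contact for the same customer and issue within the window."""
    ordered = sorted(contacts, key=lambda c: (c.customer_id, c.issue_key, c.started_at))
    repeats = 0
    for prev, nxt in zip(ordered, ordered[1:]):
        same_issue = (prev.customer_id, prev.issue_key) == (nxt.customer_id, nxt.issue_key)
        if same_issue and nxt.started_at - prev.started_at <= window:
            repeats += 1
    return repeats / len(contacts) if contacts else 0.0


def mean_turns_to_resolution(contacts: List[Contact]) -> Optional[float]:
    """Average turns across resolved contacts; None if nothing was resolved."""
    resolved = [c.turns for c in contacts if c.resolved]
    return sum(resolved) / len(resolved) if resolved else None
```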

What’s the safest way to personalize without creeping people out?

Use transparent personalization tied to the task:

- “I’m using your last order to pull the right troubleshooting steps.”

- “Want me to use your default shipping address?”

Avoid hidden inference (“It sounds like you’re anxious”) unless the user explicitly states it and you’re responding minimally and helpfully.

Where this leaves AI contact centers going into 2026

Teen daily AI chatbot usage is a warning label and a roadmap. The warning label: conversational AI can become habit-forming when it feels personal, always-on, and emotionally responsive. The roadmap: customers will expect that same fluency from AI customer support—and they’ll punish brands that treat safety as an afterthought.

If you’re building in the AI in Customer Service & Contact Centers space, aim your personalization at fewer steps, faster answers, and cleaner handoffs. Bake in boundaries that keep the bot in its lane. Your CSAT will thank you, and so will your legal team.

The next question worth asking: when your support chatbot gets really good at sounding human, will your organization be equally good at acting responsible?