Three practical lessons to build a sustainable AI advantage in contact centers: better data, human-in-the-loop workflows, and compounding evaluation.

Sustainable AI Advantage for Contact Centers: 3 Lessons

Most customer service teams in the U.S. are already “using AI” and are still getting beaten on speed, quality, and cost.

That’s because the advantage doesn’t come from adding a chatbot or turning on agent-assist. It comes from building an operating system for AI in customer service that keeps improving after the launch buzz fades. If your AI support automation works for six weeks and then stalls (or quietly gets worse), you don’t have an AI strategy—you have a pilot.

This post is part of our AI in Customer Service & Contact Centers series, and it’s focused on one question: What creates a sustainable AI advantage in support and contact centers—especially for U.S. SaaS and digital service providers? I’ll share three lessons I’ve seen separate teams that ship value every quarter from teams that keep “replatforming” the same ideas.

Lesson 1: Treat your support data like a product (not exhaust)

A sustainable AI advantage starts with support data quality, because an AI system can only be as accurate as the content and data you feed it, and customers feel the gaps immediately.

Most teams try to fix AI accuracy with bigger models or more prompts. That can help, but it doesn’t scale. The scalable path is to make your customer support content and interaction data reliable enough that your AI can ground answers, route correctly, and take actions without hallucinating.

What “support data” actually includes

In contact centers, “data” isn’t just a knowledge base article. It’s a messy ecosystem:

- Knowledge base (public + internal), release notes, policy docs

- Past tickets, chat logs, call transcripts, dispositions

- CRM fields, entitlements, contracts, plan limits

- Product telemetry (errors, usage events), incident history

- Macros, templates, agent notes, escalation reasons

If those sources contradict each other, an AI customer service agent will confidently produce contradictions too.

The fastest way to improve AI accuracy: a quality loop

Here’s what works in practice:

- Define a single “source of truth” per topic (refund policy, SLA, security, billing). If you have three versions, choose one and deprecate the rest.

- Instrument AI answers with citations to internal sources (even if customers don’t see them). No citation, no trust.

- Create a “defect taxonomy” for AI failures: wrong policy, outdated info, missed edge case, bad handoff, tone issue.

- Review a fixed sample weekly (for example, 50 AI-handled conversations across top intents) and assign defects.

- Fix the upstream cause: update the doc, adjust routing, add a structured field, or change the escalation rule.

A memorable rule: If you can’t measure why the bot was wrong, you can’t make it reliably right.
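To make the weekly review step concrete, here is a minimal sketch of tallying defects from a reviewed sample. The defect names mirror the taxonomy above; the `ReviewedConversation` record, its fields, and the sample data are assumptions for illustration, not part of any particular tool.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Defect categories taken from the taxonomy in the list above.
DEFECT_TYPES = {
    "wrong_policy", "outdated_info", "missed_edge_case", "bad_handoff", "tone_issue",
}


@dataclass
class ReviewedConversation:
    conversation_id: str
    intent: str
    defect: Optional[str] = None  # None means the reviewer found no defect


def summarize_defects(reviews):
    """Return (defect_rate, Counter of defect types) for one weekly sample."""
    for review in reviews:
        if review.defect is not None and review.defect not in DEFECT_TYPES:
            raise ValueError(f"unknown defect type: {review.defect}")
    counts = Counter(r.defect for r in reviews if r.defect is not None)
    rate = sum(counts.values()) / len(reviews) if reviews else 0.0
    return rate, counts


# Hypothetical four-conversation sample (a real sample would be closer to 50).
sample = [
    ReviewedConversation("c1", "billing", "wrong_policy"),
    ReviewedConversation("c2", "billing"),
    ReviewedConversation("c3", "cancellation", "outdated_info"),
    ReviewedConversation("c4", "login"),
]

defect_rate, defect_counts = summarize_defects(sample)
print(f"defect rate: {defect_rate:.0%}", dict(defect_counts))
```

Rejecting unknown defect labels is a deliberate choice here: if reviewers can invent categories freely, the weekly numbers stop being comparable.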

Contact center example: billing and cancellations

Billing is where support automation usually breaks first. Policies change. Entitlements vary by plan. Agents use tribal knowledge.

A sustainable approach is to store policy as:

- A clear written policy (human-readable)

- A structured policy table (machine-readable): cancellation window, proration rules, region/state constraints, plan exceptions

- A decision record: “policy updated on date X, reason Y”

When your AI support tool can reference both narrative and structured policy, you get fewer “creative” answers and more consistent outcomes.
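As a rough illustration of the machine-readable side, a structured policy table could look like the sketch below. Every plan name, window, region code, date, and field name is a made-up placeholder, and `refund_eligible` is a hypothetical helper rather than an API from any billing system.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CancellationPolicy:
    plan: str
    cancellation_window_days: int  # days after purchase in which a refund is allowed
    prorated: bool                 # whether refunds are prorated
    excluded_regions: frozenset    # regions where this rule does not apply
    updated_on: date               # decision record: when the policy changed
    update_reason: str             # decision record: why it changed


# Placeholder entries; real values come from your single source of truth.
POLICIES = {
    "starter": CancellationPolicy(
        "starter", 14, False, frozenset(), date(2025, 1, 15), "example entry"
    ),
    "pro": CancellationPolicy(
        "pro", 30, True, frozenset({"XX"}), date(2025, 3, 1), "example entry"
    ),
}


def refund_eligible(plan: str, region: str, days_since_purchase: int) -> bool:
    """Return True only when the structured policy clearly allows a refund."""
    policy = POLICIES[plan]
    if region in policy.excluded_regions:
        # Not automatically eligible; such cases would go to a human.
        return False
    return days_since_purchase <= policy.cancellation_window_days


print(refund_eligible("pro", "US-CA", 10))
```

The AI can then quote the narrative policy for tone while checking eligibility against the table, so the number of days is never something the model has to guess.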

Lesson 2: Design for “human-in-the-loop” from day one

Sustainable AI advantage means accepting a reality: contact center automation is never fully done. Human-in-the-loop isn’t a safety net—it’s the engine.

Teams often bolt on human review after something goes wrong. The better approach is to design workflows where humans teach the system continuously, with minimal friction.

Where humans add the most value (and where they don’t)

Humans should intervene where the business risk is high or the context is complex:

- Refunds, credits, charge disputes

- Account access and identity verification

- Privacy requests and data deletion

- Outage communications and incident-specific guidance

- Contract/SLA exceptions for enterprise accounts

Humans shouldn’t be spending time on:

- Password reset flows

- Status checks

- Basic how-to questions already answered in docs

- Ticket tagging and routing (once the AI handles them reliably)

The point is not “more automation.” It’s automation with reliable boundaries.

A practical approval model for AI in customer support

I like a simple three-tier model that scales:

- Tier A (Auto-resolve): Low risk, high volume. AI can answer and close.

- Tier B (Suggest + agent sends): Medium risk. AI drafts; agent approves.

- Tier C (Assist only): High risk. AI summarizes, retrieves sources, proposes next steps.

You can move intents between tiers over time based on measurable performance.
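One way to sketch the three tiers and the "move intents over time" idea in code is shown below. The intent names, the 0.95 threshold, and the `promote_if_ready` helper are assumptions chosen for illustration; your own thresholds should come from your evaluation data.

```python
from enum import Enum


class Tier(Enum):
    AUTO_RESOLVE = "A"  # AI answers and closes
    SUGGEST = "B"       # AI drafts, agent approves and sends
    ASSIST_ONLY = "C"   # AI summarizes and retrieves, agent decides


# Hypothetical intent-to-tier assignments.
INTENT_TIERS = {
    "password_reset": Tier.AUTO_RESOLVE,
    "order_status": Tier.AUTO_RESOLVE,
    "plan_change": Tier.SUGGEST,
    "refund_request": Tier.ASSIST_ONLY,
}

# Promotion path toward more automation: C -> B -> A.
NEXT_TIER = {Tier.ASSIST_ONLY: Tier.SUGGEST, Tier.SUGGEST: Tier.AUTO_RESOLVE}


def route(intent: str) -> Tier:
    """Unknown intents fall back to the most restrictive tier."""
    return INTENT_TIERS.get(intent, Tier.ASSIST_ONLY)


def promote_if_ready(intent: str, pass_rate: float, threshold: float = 0.95) -> Tier:
    """Move an intent one tier toward automation if its pass rate clears the threshold."""
    current = route(intent)
    if pass_rate >= threshold and current in NEXT_TIER:
        INTENT_TIERS[intent] = NEXT_TIER[current]
    return route(intent)


print(route("refund_request"), route("never_seen_intent"))
```

In practice you would probably pin money, access, and compliance intents so they can never be promoted automatically, and make demotion just as easy as promotion.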

The KPI that matters: containment with quality gates

Containment rate is popular, but it can hide damage if quality drops.

A better standard for AI contact center metrics is:

- Verified containment: count containment only when post-chat CSAT holds steady (or improves) and the recontact rate does not rise against its baseline.

If your bot “solves” issues by ending chats quickly, you’ll see it in reopens, chargebacks, escalations, and social complaints.
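A simple way to express the quality gates is sketched below. The tolerance values and parameter names are assumptions, and "stable" is interpreted here as "within a small tolerance of a baseline period", which is only one reasonable reading.

```python
def verified_containment(
    contained: int,
    total: int,
    csat_now: float,
    csat_baseline: float,
    recontact_now: float,
    recontact_baseline: float,
    csat_tolerance: float = 0.1,        # allowed CSAT drop (assumed 1-5 scale)
    recontact_tolerance: float = 0.02,  # allowed rise in recontact rate
):
    """Return (raw_containment_rate, verified) for one reporting period.

    The raw rate is only marked as verified when CSAT has not dropped
    and the recontact rate has not risen beyond the given tolerances.
    """
    raw_rate = contained / total if total else 0.0
    csat_ok = csat_now >= csat_baseline - csat_tolerance
    recontact_ok = recontact_now <= recontact_baseline + recontact_tolerance
    return raw_rate, csat_ok and recontact_ok


# Hypothetical week: containment looks fine, but recontacts more than doubled.
rate, verified = verified_containment(600, 1000, 4.5, 4.4, 0.20, 0.09)
print(f"raw containment {rate:.0%}, verified: {verified}")
```

Reporting both numbers side by side keeps the popular metric visible while making it obvious when it should not be trusted.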

Winter reality check: holiday volume and staffing

It’s December, and many U.S. support orgs are juggling holiday traffic, staffing gaps, and end-of-year renewals. This is exactly when AI can either prove its value or torch trust.

A sustainable setup is to:

- Expand Tier A only for well-tested intents

- Increase Tier B coverage to keep throughput high without risking policy mistakes

- Tighten Tier C guardrails for anything involving money, access, or compliance

The teams that do this don’t panic-hire in January.

Lesson 3: Build compounding advantages, not one-time features

A sustainable AI advantage comes from compounding systems: feedback, evaluation, and change management that make the AI better every month.

Buying a tool can give you baseline capabilities. What competitors can’t easily copy is your internal machine for improving AI performance with your customers, your product, and your workflows.

The “AI moat” in customer service is process, not models

For U.S. SaaS and digital services, the defensible edge typically comes from:

- Proprietary conversation patterns (your customers’ real questions)

- Deep product context (telemetry + incidents + known issues)

- Fast policy updates (billing, security, compliance)

- Operational integration (CRM, ticketing, identity, status, orders)

When these pieces connect, AI becomes a throughput multiplier.

Put an evaluation harness in place (or you’ll argue forever)

If your team debates AI quality based on anecdotes, progress will stall.

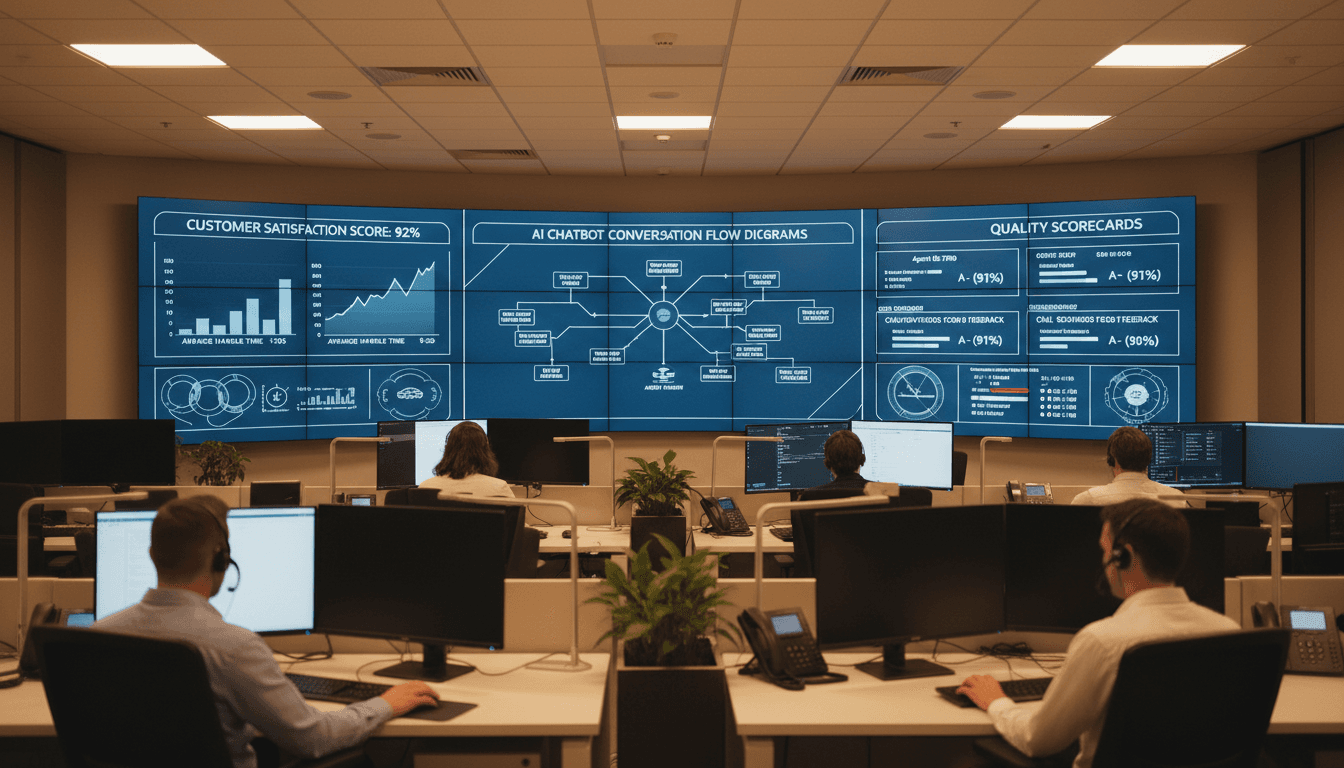

A lightweight evaluation harness for customer service AI includes:

- A curated set of 200–500 real conversations across top intents

- A rubric with pass/fail criteria: correctness, policy compliance, tone, escalation timing

- A weekly scorecard and trendline (not a one-off test)

- Regression tests: “did we break anything after the last change?”

This is where sustainable advantage is built: not in the demo, but in the discipline.
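For a sense of how small such a harness can start, here is a minimal sketch of the scorecard and regression check. The rubric keys follow the criteria listed above; the data shapes, function names, and sample grades are assumptions, and the grading itself (by a human reviewer or another process) is left out.

```python
from collections import defaultdict

# Pass/fail criteria from the rubric above.
RUBRIC = ("correctness", "policy_compliance", "tone", "escalation_timing")


def passes(grades: dict) -> bool:
    """A conversation passes only if every rubric criterion passed."""
    return all(grades.get(criterion, False) for criterion in RUBRIC)


def pass_rate_by_intent(results):
    """results: iterable of (intent, grades) pairs -> {intent: pass rate}."""
    totals, passed = defaultdict(int), defaultdict(int)
    for intent, grades in results:
        totals[intent] += 1
        passed[intent] += passes(grades)
    return {intent: passed[intent] / totals[intent] for intent in totals}


def regressions(previous: dict, current: dict, tolerance: float = 0.0):
    """Intents whose pass rate fell (or vanished) since the previous run."""
    return sorted(
        intent for intent, rate in previous.items()
        if current.get(intent, 0.0) < rate - tolerance
    )


ALL_PASS = {criterion: True for criterion in RUBRIC}

# Hypothetical graded results for this week's run.
this_week = [
    ("billing", ALL_PASS),
    ("billing", {**ALL_PASS, "policy_compliance": False}),
    ("login", ALL_PASS),
]
last_week_scores = {"billing": 0.75, "login": 1.0}

scores = pass_rate_by_intent(this_week)
print(scores, "regressed:", regressions(last_week_scores, scores))
```

Storing each week's scores gives you the trendline, and running `regressions` after every prompt or workflow change answers the "did we break anything?" question with data instead of anecdotes.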

Don’t skip change management (agents will tell you the truth)

Your agents are your highest-signal sensor network.

Make it easy for them to:

- Flag bad AI suggestions with one click

- Tag the reason (wrong policy, missing context, outdated doc)

- Add the “correct answer” or best macro

Then reward the behavior. If agents think feedback disappears into a black hole, they’ll stop giving it—right when you need it most.

A concrete operating cadence (what to do every week)

If you want AI support automation that keeps improving, run a weekly cadence:

- Monday: review top defect types + top escalation drivers

- Tuesday: update sources of truth (KB/policies), improve routing, adjust tool permissions

- Wednesday: ship prompt/workflow changes + add regression tests

- Thursday: shadow new flows with a small agent group and capture feedback

- Friday: publish a simple internal “AI release note” so everyone knows what changed

Small increments beat quarterly rewrites.

People also ask: what makes AI in contact centers “sustainable”?

Q: Does sustainable AI advantage require training a custom model?

No. Many U.S. SaaS teams get strong results using capable foundation models with good retrieval, workflow design, and evaluation. Custom training helps in narrow cases, but it’s not the starting point.

Q: What’s the biggest mistake teams make with AI customer service chatbots?

They optimize for a launch metric (like containment) instead of building a quality loop that prevents regressions and policy drift.

Q: How do you keep AI answers up to date as the product changes?

Treat documentation and policy updates as part of the release process. If product ships weekly, support knowledge has to ship weekly too—ideally with structured fields that AI can reliably use.
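One lightweight way to enforce this is a release-time check that flags articles last reviewed before the feature they cover last shipped. The sketch below assumes you can tag each article with a feature and a review date; the article IDs, feature names, and dates are invented for illustration.

```python
from datetime import date


def stale_articles(articles: dict, releases: dict) -> list:
    """Return IDs of articles reviewed before their feature's latest release.

    articles: {article_id: (feature, last_reviewed_date)}
    releases: {feature: latest_release_date}
    """
    return sorted(
        article_id
        for article_id, (feature, last_reviewed) in articles.items()
        if feature in releases and last_reviewed < releases[feature]
    )


# Hypothetical knowledge base metadata and release log.
ARTICLES = {
    "kb-101": ("billing", date(2025, 3, 1)),
    "kb-202": ("sso", date(2025, 5, 10)),
}
RELEASES = {"billing": date(2025, 4, 2), "sso": date(2025, 5, 1)}

print(stale_articles(ARTICLES, RELEASES))
```

Run as part of the release checklist, a check like this turns "keep the docs current" from a good intention into a list of specific articles with owners.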

What to do next if you want a durable edge

A sustainable AI advantage in contact centers isn’t mysterious. It’s built on three repeatable lessons: productize your support data, design human-in-the-loop workflows, and create compounding improvement systems (evaluation + feedback + change management).

If you’re a U.S. tech company or SaaS provider, this is the difference between “we tried AI” and “AI is now a core part of how we deliver service.” The second group doesn’t just reduce cost per ticket—they respond faster during incidents, retain customers during renewals, and scale without burning out agents.

If you’re planning your 2026 roadmap, ask your team this: Which part of our AI support system gets measurably better every week—and who owns that improvement?