Multilingual AI can scale language support without sacrificing trust. See where it works, where it fails, and how to deploy it responsibly.

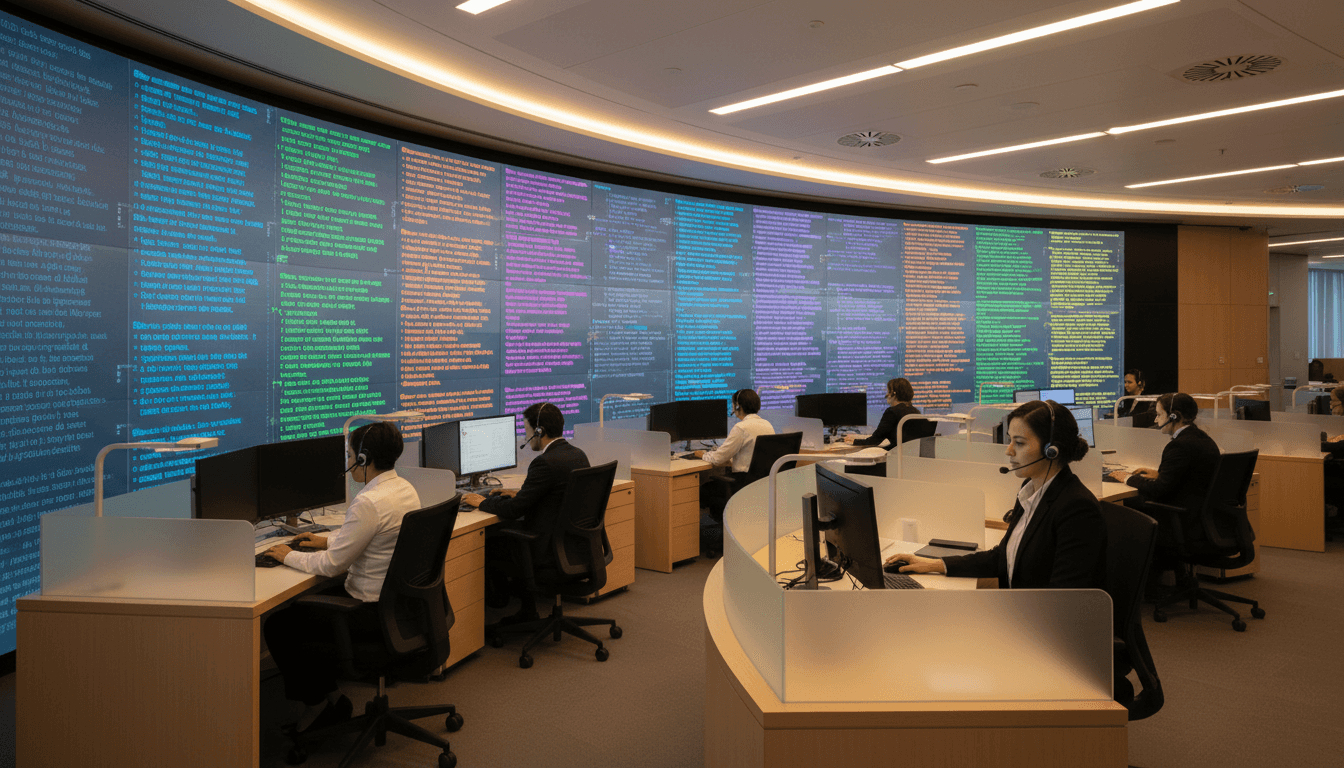

Multilingual AI Support That Customers Actually Trust

December is when language gaps hit hardest. Returns spike, shipping deadlines get tense, and customers who were willing to “make do” in a second language the rest of the year suddenly want help in the one they’re most comfortable with—fast.

Most companies respond the same way: they add a chatbot, turn on “auto-translate,” and hope for the best. And then they’re shocked when customer satisfaction drops even though average handle time improves.

Here’s the thing about multilingual AI in customer service: the technology is good enough to scale language support, but the operating model is where most contact centers fail. The winning approach in 2025 isn’t “bot-first.” It’s human-first with AI doing the heavy lifting—translation, intent capture, routing, and after-call work—while agents stay accountable for outcomes.

Multilingual AI works—if you don’t ask it to do the wrong job

AI translation is practical for customer service today, but it’s not a replacement for judgment, empathy, or cultural context. It excels at high-volume, low-ambiguity interactions and struggles when the customer’s real problem is emotional, complex, or full of implied meaning.

Industry practitioners are largely aligned on a simple split:

- Great fits for AI: FAQs, order status, password resets, appointment scheduling, form filling, identity verification flows, and collecting structured details before escalation.

- Risky fits for AI: billing disputes, cancellations, complaints, fraud, medical/insurance explanations, and anything where tone and nuance decide whether you keep or lose the customer.

This is the core lesson for any “AI in Customer Service & Contact Centers” program: automate the predictable; protect the relationship.

What multilingual AI is genuinely good at

When multilingual AI is deployed with guardrails, it can deliver three concrete outcomes:

- Coverage across more languages without hiring bottlenecks. AI translation can support dozens—or hundreds—of languages across chat, email, and voice.

- Consistency across channels. Customers get a similar quality bar whether they contact you via chat, messaging, email, or phone.

- Faster resolution for simple tasks. When the interaction is transactional, real-time translation and intent detection reduce back-and-forth.

One practical example from the field: in at least one implementation, targeted language customization (not just generic translation), paired with AI-assisted tools in languages such as Spanish and Tagalog, was associated with a 20% drop in call volume while customer satisfaction stayed high. That’s what “automation at scale” is supposed to look like.

Where multilingual AI breaks down

Language is more than words. The hardest problems aren’t vocabulary; they’re meaning.

Multilingual AI still has trouble with:

- Idioms and slang (“I’m done with this” vs. “I’m done for today”)

- Regional dialects (the same word can be polite in one region and rude in another)

- Code-switching (customers mixing languages mid-sentence)

- Emotion and implied intent (sarcasm, frustration, fear)

If you deploy AI as the primary “front door” and force customers through it, these failure modes show up as repeat contacts, escalations, and churn.

Responsible AI starts with the first 30 seconds of the interaction

Responsible AI in multilingual support means customers feel helped—not processed. That’s not philosophical. It’s operational.

A human-first pattern consistently outperforms a bot-first pattern for high-stakes service:

- Start with a real greeting and confirmation of language preference (agent or human-supervised flow).

- Use AI for real-time translation and structured intake (issue type, account lookup, intent, constraints).

- Keep a clear escape hatch to a human at every step. No “dead ends.”

This approach respects the customer and protects the brand. It also avoids the trap many teams fall into: treating AI chatbots as a cost-cutting moat between customers and agents.

A strong multilingual AI strategy should make it easier to reach a human, not harder.

The trust problem: customers don’t like being tricked

Customers will use automation when it saves time. They don’t use it when it feels deceptive.

If you’re rolling out AI-driven translation in voice or chat, bake in two trust signals:

- Disclosure that translation assistance is being used (simple, not legalistic)

- A fast “talk to an agent” option in the customer’s preferred language

One opinion I’ll stand by: if your AI experience hides the human option, you’re not doing customer experience—you’re doing customer deflection.

Designing multilingual AI for contact centers: a practical blueprint

The best multilingual AI deployments look like orchestration, not replacement. You’re building a system where AI handles language mechanics and agents handle accountability.

Below is a model that works across voice, chat, and messaging.

Step 1: Capture language preference early (and store it)

Don’t make customers repeat themselves every time they contact you.

- Ask for preferred language in IVR, web, and app settings

- Detect language automatically (with confirmation)

- Store preference in CRM for future routing

Success metric: percentage of interactions routed in the customer’s preferred language on the first attempt.
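A minimal sketch of the preference logic and its success metric. It assumes a stored CRM preference always wins over auto-detection, and that a detected language is used only after the customer confirms it. The `Interaction` record, its fields, `resolve_language`, and the `"en"` default are hypothetical names and values for illustration, not any specific CRM’s API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    """Hypothetical record of one contact; field names are illustrative."""
    preferred_lang: Optional[str]  # language stored in the CRM, if any
    first_routed_lang: str         # language of the first queue or agent reached


def resolve_language(stored: Optional[str], detected: Optional[str],
                     confirmed: bool, default: str = "en") -> str:
    """Pick a routing language: stored preference first, then a confirmed detection."""
    if stored:
        return stored
    if detected and confirmed:
        return detected
    # Assumption: with nothing reliable, fall back to a default and ask explicitly.
    return default


def first_attempt_match_pct(interactions: list[Interaction]) -> float:
    """Percentage of interactions (with a known preference) first routed in that language."""
    known = [i for i in interactions if i.preferred_lang]
    if not known:
        return 0.0
    hits = sum(1 for i in known if i.first_routed_lang == i.preferred_lang)
    return 100.0 * hits / len(known)
```

For example, `resolve_language(None, "tl", confirmed=True)` returns `"tl"`, while the same call with `confirmed=False` falls back to the default instead of trusting an unconfirmed guess.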

Step 2: Use multilingual AI for routing, not just translation

Translation is helpful, but intent detection + routing is where you win handle time and satisfaction.

Examples:

- Detect “billing dispute” vs. “refund status” vs. “service outage” in the customer’s language

- Route to the right queue with the right permissions

- Attach a short AI-generated interaction summary to reduce re-explaining

Success metric: transfer rate and time-to-correct-queue.
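The routing idea can be sketched as a lookup from detected intent to queue, with a human fallback whenever the classifier is unsure. This assumes an upstream model returns a language-independent intent label plus a confidence score; the intent labels, queue names, and the 0.7 threshold are hypothetical values chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical mapping from language-independent intent labels to queues.
INTENT_QUEUES = {
    "billing_dispute": "billing_specialists",
    "refund_status": "orders_self_service",
    "service_outage": "technical_support",
}
FALLBACK_QUEUE = "general_human_agents"  # assumed catch-all staffed by people
MIN_CONFIDENCE = 0.7                     # illustrative threshold; tune per language


@dataclass
class RoutingDecision:
    queue: str
    language: str  # customer's language, carried into the target queue
    summary: str   # short AI-generated summary attached so the customer need not repeat
    

def route(intent: str, confidence: float, language: str, summary: str) -> RoutingDecision:
    """Route by detected intent; uncertain or unknown intents go to a human queue."""
    if confidence < MIN_CONFIDENCE or intent not in INTENT_QUEUES:
        queue = FALLBACK_QUEUE
    else:
        queue = INTENT_QUEUES[intent]
    return RoutingDecision(queue=queue, language=language, summary=summary)


def transfer_rate(transfers_per_contact: list[int]) -> float:
    """Fraction of contacts that needed at least one transfer after initial routing."""
    if not transfers_per_contact:
        return 0.0
    return sum(1 for t in transfers_per_contact if t > 0) / len(transfers_per_contact)
```

Sending low-confidence detections to people rather than guessing keeps misrouting (and the transfers it causes) out of the metric you are trying to improve.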

Step 3: Give agents real-time support (without overwhelming them)

If you want agents to embrace multilingual AI, it has to reduce cognitive load.

What works in practice:

- Real-time translated transcript (two columns: customer / agent)

- Suggested replies that match your brand tone

- Terminology controls for product names, legal phrases, and regulated language

- One-click insertion of approved explanations for fees, policies, and consent

What doesn’t work: dumping raw model output into the agent desktop and calling it “assistance.”

Success metric: agent adoption rate plus quality scores on translated interactions.

Step 4: Build a “do not automate” list

Every contact center needs explicit boundaries. Create a list of interaction types that require human ownership, even if AI assists.

Common “human-owned” categories:

- cancellations/retention

- escalations and complaints

- vulnerable customer situations

- fraud or account takeover

- complex payment arrangements

Success metric: reduction in repeat contact for these categories.
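One way to make the boundary explicit is to encode the list as data and check it before any automated reply is sent. The human-owned categories below mirror the list above; the category labels, the automatable subset (taken from the “great fits” examples earlier), and the `vulnerability_flag` parameter are hypothetical choices for illustration.

```python
from enum import Enum


class Ownership(Enum):
    AI_MAY_RESOLVE = "ai_may_resolve"                  # automation can close the contact
    HUMAN_OWNED_AI_ASSISTS = "human_owned_ai_assists"  # AI translates/summarizes; an agent owns the outcome


# Categories from the "do not automate" list (labels are hypothetical).
HUMAN_OWNED = frozenset({
    "cancellation_retention",
    "escalation_complaint",
    "vulnerable_customer",
    "fraud_account_takeover",
    "complex_payment_arrangement",
})

# Low-risk intents that automation may resolve end to end (illustrative subset).
AUTOMATABLE = frozenset({"faq", "order_status", "password_reset", "appointment_scheduling"})


def ownership_for(category: str, vulnerability_flag: bool = False) -> Ownership:
    """Decide who owns the outcome; anything not explicitly automatable goes to a human."""
    if vulnerability_flag or category in HUMAN_OWNED:
        return Ownership.HUMAN_OWNED_AI_ASSISTS
    if category in AUTOMATABLE:
        return Ownership.AI_MAY_RESOLVE
    return Ownership.HUMAN_OWNED_AI_ASSISTS  # safe default for unknown categories
```

Defaulting unknown categories to a human keeps new or misclassified intents on the safe side of the boundary; the trade-off is that the automatable list has to be grown deliberately.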

Dialects, idioms, and brand voice: the details that separate good from painful

Multilingual AI that ignores dialects creates “technically correct” conversations that still feel wrong.

If you support Spanish, for example, “neutral Spanish” often isn’t enough. Customers notice when your phrasing sounds foreign, overly formal, or regionally mismatched—especially in emotionally charged moments like disputes or service outages.

Three practical moves:

1) Maintain a multilingual terminology glossary

Create an approved glossary for:

- product names

- plan tiers

- legal/consent terms

- shipping and returns language

- common troubleshooting steps

This reduces inconsistent translations and prevents the AI from inventing phrasing that conflicts with policy.
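A glossary only helps if it is enforced. A minimal sketch, assuming the glossary maps each source term to its approved rendering per target language: after translation, flag any reply where a source term appeared but its approved target term did not. The product name `ShipFast` is a hypothetical placeholder, and plain substring matching is a deliberate simplification; a real check would need tokenization and handling of inflected forms.

```python
from __future__ import annotations

# Hypothetical glossary: source term -> {target language: approved rendering}.
GLOSSARY = {
    "ShipFast": {"es": "ShipFast", "fr": "ShipFast"},  # product name: never translated
    "return label": {"es": "etiqueta de devolución", "fr": "étiquette de retour"},
}


def glossary_violations(source: str, translation: str, target_lang: str) -> list[str]:
    """List glossary terms present in the source whose approved rendering is missing."""
    src, tgt = source.lower(), translation.lower()
    violations = []
    for term, renderings in GLOSSARY.items():
        approved = renderings.get(target_lang)
        if approved is None:
            continue  # no approved rendering for this language yet
        if term.lower() in src and approved.lower() not in tgt:
            violations.append(f"{term!r} should be rendered as {approved!r}")
    return violations
```

Flagged replies can be held for agent review, and recurring violations point to glossary entries the translation layer is not picking up.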

2) Tune for politeness and formality levels

Some languages encode formality directly. The wrong tone can feel disrespectful.

Set guidelines for:

- formal vs. informal address

- apology language

- escalation language

- refusal language (saying “no” without sounding dismissive)

3) QA multilingual conversations like you QA English

If you don’t measure it, you won’t fix it.

A workable QA approach:

- sample translated chats/calls weekly by language

- check for meaning preservation (not word-for-word)

- flag cultural mismatches and update your glossary

- track top failure intents (where translation breaks)
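The weekly sampling and failure tracking can be automated. A minimal sketch, assuming each transcript is a dict with a `lang` key and each QA review records an `intent` and a `meaning_preserved` verdict: it draws a fixed quota per language (so low-volume languages are not drowned out by high-volume ones) using a seeded generator so the sample can be reproduced for audit. The record shapes, quota, and seed are illustrative assumptions.

```python
from __future__ import annotations

import random
from collections import Counter, defaultdict


def weekly_qa_sample(transcripts: list[dict], per_language: int, seed: int) -> dict[str, list[dict]]:
    """Draw up to `per_language` transcripts for each language, reproducibly."""
    by_lang: dict[str, list[dict]] = defaultdict(list)
    for t in transcripts:
        by_lang[t["lang"]].append(t)
    rng = random.Random(seed)  # fixed seed -> same sample on re-run
    sample = {}
    for lang in sorted(by_lang):  # stable order keeps draws deterministic
        items = by_lang[lang]
        sample[lang] = rng.sample(items, min(per_language, len(items)))
    return sample


def top_failure_intents(reviews: list[dict], n: int = 3) -> list[tuple[str, int]]:
    """Intents where reviewers most often found meaning was not preserved."""
    failures = Counter(r["intent"] for r in reviews if not r["meaning_preserved"])
    return failures.most_common(n)
```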

Compliance is becoming a multilingual requirement, not a “nice to have”

Regulations and consumer expectations are pushing multilingual support from optional to mandatory in more regions. One concrete example contact center leaders are watching is Quebec, where the Charter of the French Language, as strengthened by Bill 96 in 2022, raises the bar on French-language customer communications.

Even outside regulated regions, this is the reality in 2025:

- Customers expect to self-serve and get support in their language across channels

- Brands are judged on inclusion, not just speed

- Data protection requirements apply regardless of language

If you’re using AI translation, treat it as part of your security and compliance surface area:

- restrict what data is sent for translation

- redact sensitive fields where possible

- log agent actions and handoffs

- define retention policies for transcripts
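To make “restrict what data is sent” concrete, here is a minimal sketch that masks obvious sensitive patterns before text leaves your systems for a translation service, and reports which patterns fired so the event can be logged. The two regular expressions are simplified assumptions: they will miss many formats, and the card pattern will also mask long order numbers. Production redaction usually combines pattern matching with allowlists of structured fields.

```python
from __future__ import annotations

import re

# Simplified, illustrative patterns (not exhaustive). Applied in insertion order.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits, optional spaces/dashes
}


def redact(text: str) -> tuple[str, list[str]]:
    """Mask sensitive spans; return the redacted text and the labels that fired."""
    fired = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            fired.append(label)
    return text, fired
```

For example, `redact("Card 4111 1111 1111 1111, email ana@example.com.")` returns `("Card [CARD], email [EMAIL].", ["EMAIL", "CARD"])`; only the redacted string would be sent for translation.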

What to measure: the multilingual AI scorecard that actually matters

If you only track cost savings, you’ll accidentally optimize for worse customer experiences. Use a balanced scorecard.

Here are metrics that show whether multilingual AI is helping or hurting:

- Containment rate (by language) with CSAT guardrails

- First contact resolution (FCR) by language

- Repeat contact rate within 7 days (a hidden translation-failure signal)

- Escalation and transfer rates

- Quality assurance scores on translated interactions

- Customer effort score (CES) for multilingual journeys

- Agent sentiment and adoption (burnout rises when tools create extra work)

A simple rule: If containment rises but repeat contacts also rise, your AI is “winning” the wrong battle.
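Both the repeat-contact signal and that rule can be computed from contact logs. A minimal sketch, assuming each contact record has a customer ID, language, timestamp, and a flag for whether automation contained it: a contact counts as “repeated” if the same customer comes back within 7 days. The `Contact` fields and the before/after comparison are illustrative assumptions, not a standard metric definition.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Contact:
    customer_id: str
    lang: str
    at: datetime
    contained: bool  # resolved by automation without reaching a human


def rates_by_language(contacts: list[Contact],
                      window: timedelta = timedelta(days=7)) -> dict[str, tuple[float, float]]:
    """Return {lang: (containment_rate, repeat_rate)} over the given contacts."""
    by_customer: dict[str, list[int]] = defaultdict(list)
    for idx, c in enumerate(contacts):
        by_customer[c.customer_id].append(idx)

    # Mark each contact that the same customer follows up on within the window.
    repeated: set[int] = set()
    for idxs in by_customer.values():
        idxs.sort(key=lambda i: contacts[i].at)
        for a, b in zip(idxs, idxs[1:]):
            if contacts[b].at - contacts[a].at <= window:
                repeated.add(a)

    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0, 0])  # [n, contained, repeated]
    for idx, c in enumerate(contacts):
        t = totals[c.lang]
        t[0] += 1
        t[1] += int(c.contained)
        t[2] += int(idx in repeated)
    return {lang: (t[1] / t[0], t[2] / t[0]) for lang, t in totals.items()}


def winning_wrong_battle(before: tuple[float, float], after: tuple[float, float]) -> bool:
    """True when containment rose between periods but repeat contacts rose too."""
    return after[0] > before[0] and after[1] > before[1]
```

Computing both rates per language matters: a healthy blended average can hide one language where containment is climbing only because customers give up and call back.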

Next steps: a low-risk way to start multilingual AI in your contact center

If you want leads, savings, and happier customers, start with a pilot that’s hard to mess up.

A practical 30–45 day pilot:

- Pick one channel (chat or email is easiest to QA)

- Pick one high-volume language (often Spanish in North America)

- Restrict scope to low-risk intents (status checks, simple updates, FAQs)

- Add human oversight and a clear escalation path

- Measure FCR, CSAT, and repeat contacts against a baseline

Once the pilot is stable, expand to voice with real-time transcription and translation—again, with agents in control.

Responsible multilingual AI isn’t about proving the model is smart. It’s about proving customers feel understood.

Where do you think your contact center has the biggest language friction today: routing, translation accuracy, or the handoff between automation and agents?