Automated agent monitoring in Amazon Connect shows where AI in customer service is headed: scalable QA, faster coaching, and smarter compliance.

Amazon Connect Agent Monitoring: What AI Changes Now

Most contact centers don’t have a “quality problem.” They have a coverage problem.

A typical QA team can review only a small slice of interactions, often 1–3% of calls and chats, because manual evaluation is slow and expensive. That means coaching is based on a tiny sample, trends are spotted late, and compliance issues can hide in the 97–99% nobody sees.

That’s why AWS adding automated agent monitoring to Amazon Connect matters. It’s not just another checkbox feature. It’s a clear signal of where modern customer service is headed: AI-powered quality management and performance analytics that operate across every interaction, not just a handful. In this post (part of our AI in Customer Service & Contact Centers series), I’ll break down what automated monitoring really changes, how to use it responsibly, and how to turn it into measurable outcomes—shorter handle times, better CSAT, and fewer avoidable escalations.

Automated agent monitoring fixes the “small sample” QA trap

Automated agent monitoring is valuable for one main reason: it scales quality oversight without scaling headcount.

Traditional QA programs are built around constrained human review. You pick a few calls per agent per month, score them against a rubric, and hope those calls represent what’s really happening. They usually don’t—especially in peak seasons like December, when:

- New hires ramp fast

- Policies change (returns, shipping exceptions, holiday promos)

- Customer emotions run hotter

When AI monitors a much larger share of interactions, you get a different operating model:

- Continuous visibility: You don’t wait weeks to notice a script is failing or an escalation reason is spiking.

- Consistent scoring signals: Humans vary; AI can apply the same detection criteria every time.

- More coaching moments: Instead of quarterly “performance surprises,” you can coach weekly with evidence.

Here’s the stance I’ll take: If your QA program can’t see at least 30–50% of interactions (directly or via analytics), it’s not a QA program—it’s a sampling exercise. Automated monitoring is how teams close that gap.
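If you want to know which side of that line your program falls on, the arithmetic is simple. Here’s a minimal Python sketch. The function name and the sample numbers (120 agents, 4 reviews each, 24,000 monthly contacts) are illustrative assumptions, not benchmarks.

```python
def qa_coverage(reviewed: int, total: int) -> float:
    """Share of interactions that received any QA review (0.0 to 1.0)."""
    if total <= 0:
        raise ValueError("total interactions must be positive")
    if not 0 <= reviewed <= total:
        raise ValueError("reviewed must be between 0 and total")
    return reviewed / total


# Illustrative numbers only: 120 agents, 4 manual reviews per agent per month.
agents = 120
reviews_per_agent = 4
monthly_interactions = 24_000

manual = qa_coverage(agents * reviews_per_agent, monthly_interactions)
print(f"Manual QA coverage: {manual:.1%}")  # prints: Manual QA coverage: 2.0%
```

Plug in your own volumes. If the result sits in the low single digits, you’re in the sampling range described above.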

What “monitoring” means in practice

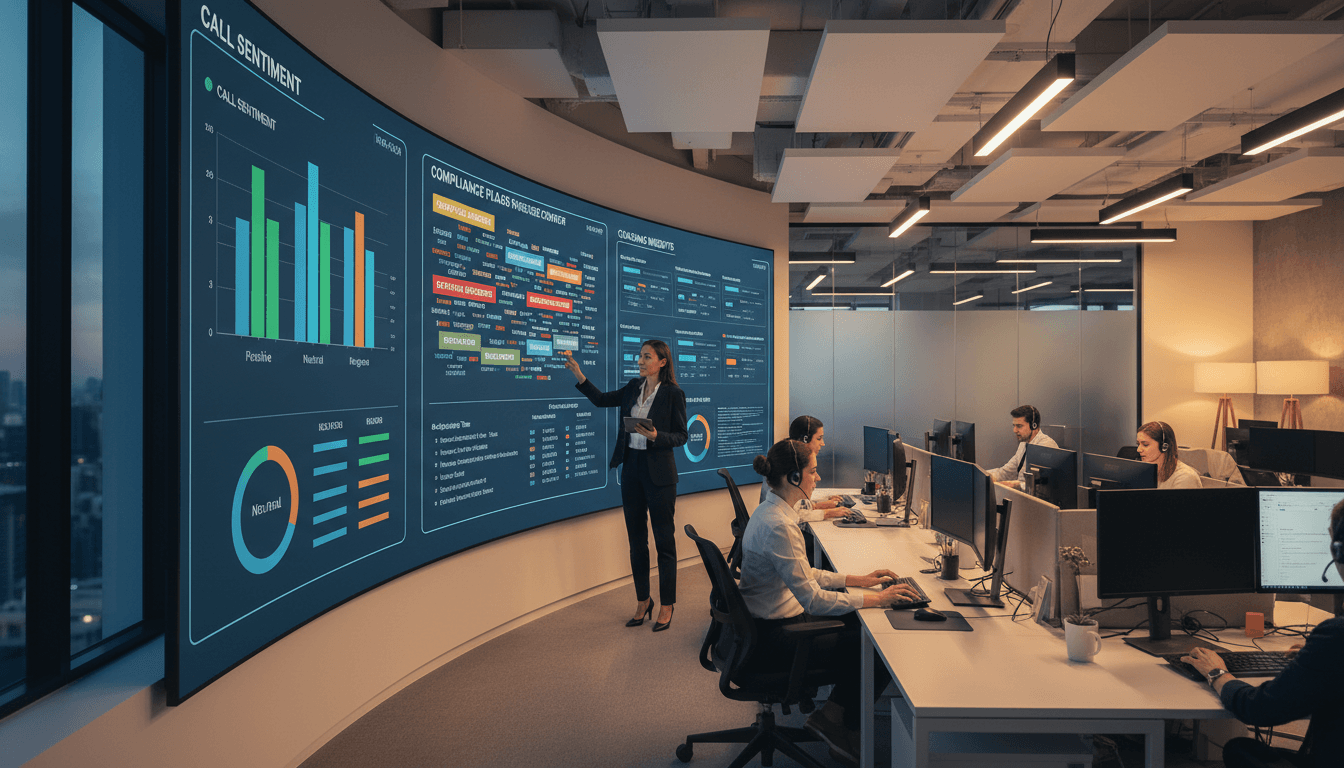

In modern cloud contact center platforms, automated monitoring typically includes capabilities like:

- Conversation transcription (for voice) and ingestion (for chat)

- Policy/script adherence detection (did they verify identity? mention disclosures?)

- Sentiment and tone signals (customer and agent)

- Risk and compliance flags (PII exposure, prohibited phrases)

- Topic and reason detection (why customers are contacting you)

- Coaching insights (patterns correlated with low CSAT or high transfers)

Even if AWS’s specific implementation details differ by configuration and region, the direction is consistent across the market: quality is becoming data-driven and near-real-time.

AWS is competing with enterprise SaaS—AI features are how it wins

Amazon Connect has always been more than “a phone system in the cloud.” AWS positioned it as a customer service platform that can plug into enterprise workflows, data systems, and analytics stacks.

But when you compete in enterprise customer service, you’re up against ecosystems like Salesforce and other established SaaS vendors. In that arena, AI-driven differentiation isn’t optional because buyers expect three things:

- Faster time to value (you can’t spend 9 months building a QA analytics layer)

- Operational proof (show me how this improves AHT, FCR, CSAT, compliance)

- Scale without fragility (holiday peaks, outage spikes, product launches)

Automated agent monitoring checks all three boxes when implemented well.

The strategic shift: from “contact center” to “contact center operating system”

Once monitoring is automated, the contact center stops being a black box and starts behaving like an observable system:

- You can measure the impact of a new policy within days.

- You can detect if a specific queue is creating avoidable transfers.

- You can see how certain phrases correlate with refunds or churn.

This is the same evolution we’ve watched in software engineering (logs → metrics → tracing). Customer service is following that path: interactions are becoming analyzable events.

Where automated monitoring actually improves outcomes (and where it doesn’t)

Automated agent monitoring produces value when you connect it to specific operational decisions—not when you treat it as surveillance.

Below are the highest-ROI use cases I see in AI-powered contact centers.

1) Quality management that doesn’t collapse under volume

Answer first: AI monitoring expands QA coverage and helps you prioritize human review.

The best pattern is a “hybrid QA” workflow:

- AI screens 100% (or as much as feasible) and flags interactions by risk, sentiment, or compliance triggers

- Human QA reviews the highest-impact set (for coaching nuance and fairness)

- Coaching focuses on the few behaviors that move outcomes

This is how teams stop wasting QA time on “perfect” calls while missing the interactions that create escalations, chargebacks, or regulatory risk.
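The hybrid pattern above can be sketched as a simple triage step. This is a rough illustration, not how Amazon Connect scores anything: the `Interaction` fields, the sentiment scale, and the weights are all assumptions you’d replace with whatever signals your platform actually exports.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    contact_id: str
    compliance_flag: bool  # assumed signal: AI detected a possible policy breach
    sentiment: float       # assumed scale: -1.0 (negative) to 1.0 (positive)
    transferred: bool


def review_priority(item: Interaction) -> float:
    """Higher score = more worth a human reviewer's time. Weights are illustrative."""
    score = 0.0
    if item.compliance_flag:
        score += 10.0
    if item.transferred:
        score += 2.0
    # Negative sentiment adds up to 5 points; positive sentiment adds nothing.
    score += max(0.0, -item.sentiment) * 5.0
    return score


def select_for_human_review(items: list[Interaction], capacity: int) -> list[Interaction]:
    """Return the `capacity` highest-priority interactions, highest first."""
    return sorted(items, key=review_priority, reverse=True)[:capacity]


interactions = [
    Interaction("c-001", compliance_flag=False, sentiment=0.8, transferred=False),
    Interaction("c-002", compliance_flag=True, sentiment=-0.2, transferred=False),
    Interaction("c-003", compliance_flag=False, sentiment=-0.9, transferred=True),
    Interaction("c-004", compliance_flag=False, sentiment=0.1, transferred=False),
]
queue = select_for_human_review(interactions, capacity=2)
print([i.contact_id for i in queue])  # prints: ['c-002', 'c-003']
```

The point of the `capacity` parameter is that human QA time stays fixed while the AI screen decides where that time goes.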

2) Faster agent ramp and better coaching

Answer first: Monitoring makes coaching more timely and more specific.

Coaching works when it’s anchored to real examples. Automated monitoring can surface:

- A trend in missed verification steps

- Repeated moments where agents talk over customers

- Specific parts of the call where confusion spikes (billing, plan changes, cancellations)

A practical coaching rhythm that works:

- Weekly 30-minute micro-coaching based on 2–3 flagged calls

- One behavior goal per week (not five)

- Re-check the same metrics once the agent has had a week to apply the goal

That’s how you tie AI-powered performance analytics to real behavior change.

3) Compliance and risk: fewer “unknown unknowns”

Answer first: AI monitoring reduces the chance that policy violations hide in unreviewed calls.

This matters most in regulated environments (finance, healthcare, insurance), but even retail and travel have compliance-like obligations: disclosures, refund policies, identity checks, and consent.

A smart approach is to classify monitoring outputs into tiers:

- Tier 1: Must-fix compliance breaches (immediate action)

- Tier 2: High-risk behaviors correlated with disputes/escalations

- Tier 3: Coaching opportunities (tone, clarity, empathy)
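Here’s one way the three tiers could be expressed in code. It’s a sketch under stated assumptions: the flag names are hypothetical placeholders, and your own compliance team would define which detections belong in which set.

```python
from enum import IntEnum
from typing import Optional


class Tier(IntEnum):
    MUST_FIX = 1   # compliance breach, immediate action
    HIGH_RISK = 2  # behavior correlated with disputes or escalations
    COACHING = 3   # tone, clarity, empathy


# Hypothetical flag names; substitute the detections your monitoring produces.
COMPLIANCE_BREACHES = {"missing_disclosure", "pii_exposed", "identity_not_verified"}
HIGH_RISK_BEHAVIORS = {"refund_outside_policy", "supervisor_requested"}


def classify(flags: set[str]) -> Optional[Tier]:
    """Map an interaction's flags to the most severe applicable tier."""
    if not flags:
        return None  # nothing flagged, nothing to route
    if flags & COMPLIANCE_BREACHES:
        return Tier.MUST_FIX
    if flags & HIGH_RISK_BEHAVIORS:
        return Tier.HIGH_RISK
    return Tier.COACHING


print(classify({"talked_over_customer", "pii_exposed"}).name)  # prints: MUST_FIX
print(classify({"talked_over_customer"}).name)                 # prints: COACHING
```

Checking the most severe set first means a call with both a tone issue and a compliance breach always lands in Tier 1.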

Where it doesn’t help much

Automated monitoring won’t fix:

- Broken policies (“the refund policy is inconsistent, so agents improvise”)

- Bad routing (“customers bounce queues, so sentiment tanks”)

- Low authority (“agents can’t actually solve the issue, so AHT rises”)

AI can reveal these problems quickly, but leadership still has to change the underlying system.

The hidden risk: turning monitoring into surveillance

If you deploy automated agent monitoring without guardrails, it backfires. Agents will feel watched, managers will overreact to noisy signals, and trust will collapse.

Here’s a sentence worth repeating internally:

Monitoring should create coaching and clarity, not fear and punishment.

Set policy guardrails before you turn it on

Before rolling out automated monitoring, document answers to:

- What is monitored? (calls, chats, screen actions, after-call work?)

- What’s the purpose? (coaching, compliance, process improvement—ranked)

- Who can see what? (agent, team lead, QA, compliance)

- How long is data retained? (and why)

- How are disputes handled? (agents must be able to challenge context)

If you can’t explain these rules in plain language to agents, you’re not ready.
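One way to make that readiness check concrete is to write the answers down as structured data and refuse to launch while any are blank. The sketch below is an assumption about how you might do that; `MonitoringPolicy` and its fields are hypothetical, not part of any Amazon Connect configuration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MonitoringPolicy:
    """Answers to the five guardrail questions. All field names are hypothetical."""
    monitored: tuple[str, ...] = ()
    purposes_ranked: tuple[str, ...] = ()
    visibility: dict[str, tuple[str, ...]] = field(default_factory=dict)
    retention_days: int = 0
    retention_reason: str = ""
    dispute_process: str = ""


def unanswered(policy: MonitoringPolicy) -> list[str]:
    """Return the guardrail questions that still lack an answer."""
    gaps = []
    if not policy.monitored:
        gaps.append("What is monitored?")
    if not policy.purposes_ranked:
        gaps.append("What's the purpose?")
    if not policy.visibility:
        gaps.append("Who can see what?")
    if policy.retention_days <= 0 or not policy.retention_reason.strip():
        gaps.append("How long is data retained?")
    if not policy.dispute_process.strip():
        gaps.append("How are disputes handled?")
    return gaps


draft = MonitoringPolicy(
    monitored=("calls", "chats"),
    purposes_ranked=("coaching", "compliance", "process improvement"),
    visibility={"agent": ("own interactions",), "team_lead": ("team interactions",)},
    retention_days=90,
    retention_reason="",  # the "and why" has not been documented yet
)
print(unanswered(draft))
# prints: ['How long is data retained?', 'How are disputes handled?']
```

Note that retention counts as unanswered until both the duration and the reason are filled in, which mirrors the “(and why)” in the list above.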

Avoid “single-score management”

One of the quickest ways to misuse AI monitoring is to convert multiple signals into a single agent score and manage to it.

Better: keep separate metrics for separate goals.

- Compliance: pass/fail requirements

- Quality: rubric categories with examples

- Customer outcomes: CSAT, FCR

- Efficiency: AHT (with context)

Managing to AHT alone, for example, can push agents to rush customers. AI should reduce that pressure by showing why calls are long (confusing policy, broken tooling, repeat contacts), not by punishing the agent for time.

How to implement automated monitoring in Amazon Connect (without chaos)

Answer first: Start narrow, prove impact, then scale coverage.

A rollout plan that tends to work in real contact centers:

Step 1: Pick one queue and one goal

Choose a queue with steady volume and consistent call types (billing, returns, password resets). Pick a goal that’s measurable in 30 days:

- Reduce transfers by 10%

- Improve QA adherence on two mandatory steps

- Cut repeat contacts for one issue category

Step 2: Define the rubric and “what good looks like”

AI needs clear definitions. Humans do too.

- Write 6–10 rubric items max

- Mark 2–3 as critical compliance

- Provide example phrases that count as “pass”

Step 3: Calibrate with humans for two weeks

Run automated monitoring outputs alongside human QA. Your aim is to answer:

- What’s accurate?

- What’s noisy?

- What’s missing context?

Expect to tune thresholds and categories. If you don’t calibrate, you’ll lose trust fast.
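During calibration, it helps to put numbers on “accurate,” “noisy,” and “missing.” A minimal sketch, assuming you have paired AI and human verdicts for the same interactions on one rubric item. The function name and sample data are illustrative.

```python
def calibration_report(ai_flags: list[bool], human_flags: list[bool]) -> dict[str, float]:
    """Compare AI flags against human QA verdicts for one rubric item.

    agreement: share of interactions where AI and human gave the same verdict
    precision: of the AI's flags, how many the human confirmed (low = noisy)
    recall:    of the human's flags, how many the AI caught (low = missing)
    """
    if not ai_flags or len(ai_flags) != len(human_flags):
        raise ValueError("need two non-empty lists of equal length")
    pairs = list(zip(ai_flags, human_flags))
    true_pos = sum(a and h for a, h in pairs)
    false_pos = sum(a and not h for a, h in pairs)
    false_neg = sum(h and not a for a, h in pairs)
    agree = sum(a == h for a, h in pairs)
    return {
        "agreement": agree / len(pairs),
        "precision": true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0,
        "recall": true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0,
    }


# Made-up verdicts for eight calls on "identity verified?" (True = violation flagged).
ai = [True, True, False, False, True, False, False, False]
human = [True, False, False, False, True, True, False, False]
report = calibration_report(ai, human)
print({name: round(value, 2) for name, value in report.items()})
# prints: {'agreement': 0.75, 'precision': 0.67, 'recall': 0.67}
```

Low precision suggests a threshold is too sensitive. Low recall suggests a category or phrase list is too narrow. Both are worth reviewing call by call before you change anything.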

Step 4: Operationalize the insights

Insights that don’t change anything become dashboard clutter.

Put the outputs into real workflows:

- Weekly coaching queues for team leads

- A compliance review lane for Tier 1 flags

- Monthly process review for recurring top issues

Step 5: Measure impact with a simple scorecard

Use a small set of metrics:

- QA compliance pass rate

- Escalation rate

- CSAT (or post-contact survey score)

- Transfer rate

- AHT (interpreted with the above)

If you can’t show movement, adjust the program—not the agents.
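The scorecard can be as simple as a before/after comparison. Here’s a hedged sketch: the `Scorecard` fields mirror the five metrics above, the numbers are invented for illustration, and AHT is deliberately reported without a verdict because it only means something alongside the other four.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Scorecard:
    qa_pass_rate: float     # share of reviewed contacts passing compliance items
    escalation_rate: float
    csat: float             # post-contact survey average
    transfer_rate: float
    aht_seconds: float


HIGHER_IS_BETTER = {"qa_pass_rate", "csat"}
CONTEXT_ONLY = {"aht_seconds"}  # reported, but never judged on its own


def compare(baseline: Scorecard, current: Scorecard) -> dict[str, tuple[float, str]]:
    """Return (delta, verdict) per metric; verdict is improved/worse/flat/context."""
    report = {}
    for f in fields(Scorecard):
        delta = getattr(current, f.name) - getattr(baseline, f.name)
        if f.name in CONTEXT_ONLY:
            verdict = "context"
        elif delta == 0:
            verdict = "flat"
        elif (delta > 0) == (f.name in HIGHER_IS_BETTER):
            verdict = "improved"
        else:
            verdict = "worse"
        report[f.name] = (round(delta, 4), verdict)
    return report


# Invented numbers for a 30-day pilot on one queue.
baseline = Scorecard(0.82, 0.12, 4.1, 0.20, 410.0)
current = Scorecard(0.90, 0.10, 4.2, 0.18, 425.0)
for metric, (delta, verdict) in compare(baseline, current).items():
    print(f"{metric:16} {delta:+8.2f}  {verdict}")
```

In this made-up pilot, AHT went up 15 seconds while every outcome metric improved, which is exactly the case where judging agents on AHT alone would send the wrong signal.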

People also ask: practical questions teams have right now

Will automated monitoring replace QA analysts?

No. It changes their job. The best teams use QA analysts for calibration, coaching design, edge-case review, and fairness checks—not for listening to random calls all day.

Does AI monitoring work for both voice and chat?

Yes, and chat is often easier because it’s already text. Voice depends on transcription quality and audio conditions. Expect better results when you have consistent call flows and good audio capture.

How do you keep it fair for agents?

Fairness comes from process, not promises:

- Transparent rules

- Human review for high-impact actions

- The right to challenge context

- Coaching metrics kept separate from disciplinary triggers

What this signals for 2026 contact center strategy

AWS adding automated agent monitoring to Amazon Connect is a validation of a bigger trend: AI in customer service is moving from “front door automation” (bots) to “operations automation” (quality, coaching, compliance, analytics). Bots get the headlines, but monitoring is where many teams find consistent ROI.

If you’re leading a contact center, here’s the bet I’d make going into 2026 planning: the winners won’t be the teams with the fanciest virtual agent—they’ll be the teams that can observe performance across every interaction and turn those signals into better coaching and better processes.

If you want to pressure-test your readiness, start with one question: If you had visibility into 80% of your interactions next month, what would you change first—coaching, routing, policy, or knowledge? The honest answer tells you where AI-powered monitoring will pay off fastest.