AI voice agents are ready for real contact center work in 2026. Learn the guardrails, roadmap, and ROI use cases to deploy safely and fast.

Stop Waiting: AI Voice Agents That Work in 2026

A lot of contact centers are making the same expensive mistake right now: they’re treating AI voice agents like an all-or-nothing bet. Either the AI can handle everything perfectly (including edge cases, sarcasm, obscure policy exceptions, and the dreaded “my situation is unique”), or they don’t deploy it at all.

Most companies get this wrong. The goal for 2026 isn’t “replace agents.” It’s to remove avoidable work: the repeatable, high-volume contacts that clog queues, inflate costs, and burn out your best people right when seasonal volume spikes hit.

The reality? AI in customer service is already good enough to produce measurable ROI in the contact center—if you design it like a system, not a demo. The teams that wait for “perfect AI” aren’t avoiding risk. They’re choosing a different risk: being outpaced on speed, cost, and customer experience.

The real cost of waiting isn’t theoretical

If you’re delaying AI because you’re worried about hallucinations, inaccurate answers, or brand risk, you’re not irrational. You’re just focusing on the wrong comparison.

The correct comparison isn’t:

- AI voice agent vs. flawless human service

It’s:

- AI voice agent with guardrails vs. your current reality (hold times, transfers, inconsistent answers, and agent churn)

Here’s what waiting usually costs in plain terms:

- Higher cost per contact because simple issues still hit live agents.

- Longer queues because peak load gets absorbed by humans only.

- Lower CSAT because customers experience delays and repeated identity/policy questions.

- Agent attrition because agents spend too much time on repetitive work and too little time on meaningful problem-solving.

One of the most “invisible” costs is what I’d call operational drag: old IVR trees, outdated knowledge articles, and brittle workflows that force customers to repeat themselves. A modern AI contact center approach reduces drag by routing, resolving, and summarizing interactions with far less friction.

AI voice agents are already solving the right problems

A practical way to judge whether AI is ready is to stop asking, “Can it handle everything?” and start asking, “Can it handle the top 10 contact reasons with reliable outcomes?”

In most contact centers, a small set of intents drives a huge share of volume:

- password resets and login help

- order status and delivery updates

- appointment scheduling and changes

- billing questions and payment arrangements

- address updates

- policy explanations and eligibility checks

- basic troubleshooting

These are exactly the interaction types that modern virtual agents (voice and digital) handle well—because success doesn’t require a philosophical conversation. It requires:

- fast identification of intent

- accurate retrieval of approved information

- structured workflows (verify identity, check status, complete action)

- graceful escalation when confidence drops

The strongest implementations don’t try to be charming. They try to be correct, quick, and accountable.

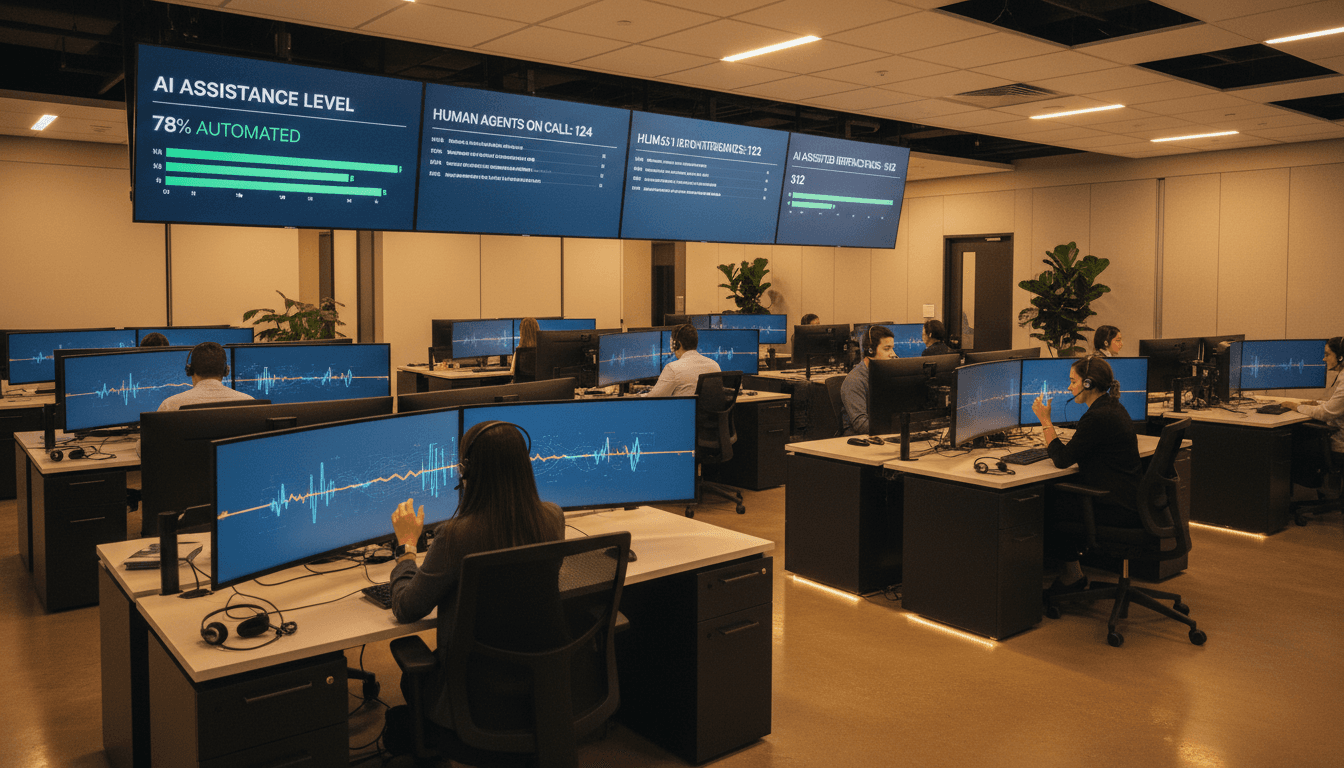

The hybrid model is the winning model

The most reliable operating pattern in 2026 is a hybrid contact center:

- AI handles routine and repeatable interactions end-to-end.

- Humans focus on exceptions, judgment calls, empathy-heavy situations, and revenue opportunities.

- AI supports humans with summaries, next-best actions, and real-time knowledge suggestions.

This matters because your best agents are too expensive—and too valuable—to spend their day reading policy paragraphs and checking order status.

“Hallucinations” are a design problem, not a reason to stall

Hallucinations sound scary because the worst-case stories are memorable. But in customer service automation, hallucinations are usually the result of poor architecture choices:

- letting a model answer from the open web or unvetted documents

- weak prompt constraints

- no confidence thresholds

- no escalation paths

- no monitoring or feedback loops

You don’t solve hallucinations by avoiding AI. You solve them by boxing the AI into the business truth.

Guardrails that actually work in contact centers

If you’re serious about deploying AI voice agents safely, these controls are non-negotiable:

- Grounded knowledge: Answers must come from curated sources (approved policies, current product catalog, CRM/OMS data).

- Confidence-based routing: If confidence is below a threshold, the bot escalates—immediately.

- Policy enforcement: Some intents should never be answered generatively (refund approvals, legal claims, medical advice). Use templates and deterministic flows.

- Conversation boundaries: The agent should explicitly state what it can and can’t do (“I can help you track an order or update your address”).

- Auditability: Store transcripts, resolution codes, and “why” signals so QA can spot patterns.

Hallucination risk doesn’t disappear by waiting. It disappears by building systems where the AI can’t invent.
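To make the first three controls concrete, here is a minimal sketch of confidence-based routing combined with policy enforcement and a grounding check. The threshold value, intent names, and the `Turn` structure are all assumptions made for illustration, not a vendor API; in practice the threshold would be tuned per intent against your own QA data.

```python
from dataclasses import dataclass

# Hypothetical values: tune the threshold and intent list for your own operation.
CONFIDENCE_THRESHOLD = 0.80
DETERMINISTIC_ONLY = {"refund_approval", "legal_claim", "medical_advice"}


@dataclass
class Turn:
    intent: str                   # label from the intent classifier
    confidence: float             # classifier confidence, 0.0 to 1.0
    grounded_sources: list[str]   # IDs of approved documents backing the answer


def route(turn: Turn) -> str:
    """Return 'escalate', 'deterministic_flow', or 'generative_answer'."""
    # Low confidence goes to a human immediately, whatever the intent.
    if turn.confidence < CONFIDENCE_THRESHOLD:
        return "escalate"
    # Sensitive intents are never answered generatively.
    if turn.intent in DETERMINISTIC_ONLY:
        return "deterministic_flow"
    # No approved source behind the answer means the bot must not answer.
    if not turn.grounded_sources:
        return "escalate"
    return "generative_answer"
```

The ordering is a design choice: checking confidence first means a shaky classification never reaches a templated refund flow by mistake.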

Legacy systems are the bottleneck (and AI exposes it fast)

Many contact centers say they’re “not ready for AI,” but what they really mean is:

- knowledge is outdated or contradictory

- customer data isn’t accessible in real time

- workflows require three systems and a swivel chair

- call reasons aren’t tagged cleanly

- ownership is split across CX, IT, ops, and compliance

AI doesn’t create those problems. It makes them impossible to ignore.

What modernization really means

Modernizing your contact center for AI isn’t just buying software. It’s aligning four layers:

- Data layer (customer identity, order/billing status, entitlements)

- Knowledge layer (approved answers, change control, versioning)

- Workflow layer (what steps resolve the issue, what systems are touched)

- Experience layer (voice, chat, messaging, consistent outcomes)

If your current IVR is a maze and your knowledge base is a graveyard, deploying AI forces a cleanup. That cleanup is a feature, not a drawback—because it improves human performance too.

A practical AI readiness roadmap (that doesn’t take a year)

If your leadership team is stuck between “move fast” and “don’t break trust,” here’s a roadmap that works without betting the farm.

Step 1: Pick two use cases with clear ROI

Choose intents with:

- high volume

- low variance

- low compliance risk

- clear success criteria

Common starting points:

- order status / appointment status

- billing balance / payment processing (with strict verification)

Define success like an operator, not a marketer:

- containment rate (resolved without live agent)

- transfer rate

- average handle time impact

- first contact resolution

- CSAT for AI-handled contacts vs. baseline
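The first two metrics are simple ratios, but teams often disagree on the denominator. The sketch below fixes one possible definition: both rates are measured over contacts the AI agent took first. The `Contact` fields are hypothetical and would map to whatever your reporting export provides.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    handled_by_ai: bool   # the AI agent took the contact first
    transferred: bool     # the contact was handed to a live agent
    resolved: bool        # the issue was closed on this contact


def containment_rate(contacts):
    """Share of AI-handled contacts resolved without a live agent."""
    ai = [c for c in contacts if c.handled_by_ai]
    if not ai:
        return 0.0
    contained = [c for c in ai if c.resolved and not c.transferred]
    return len(contained) / len(ai)


def transfer_rate(contacts):
    """Share of AI-handled contacts that were handed to a live agent."""
    ai = [c for c in contacts if c.handled_by_ai]
    if not ai:
        return 0.0
    return sum(c.transferred for c in ai) / len(ai)
```

Note that an unresolved contact that was never transferred counts against containment here. That is deliberate: a caller who hangs up in frustration should not be scored as a win.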

Step 2: Build your “truth set” before you build the bot

This is where projects win or die.

Create a small, maintained package of:

- approved responses (plain language)

- policies with effective dates

- system-of-record fields (what data is authoritative)

- escalation rules (what must go to humans)

If you do nothing else, do this. A contact center AI agent is only as good as the truth you feed it.
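One way to picture a truth set is as a small collection of versioned records that the agent looks up by intent and date. The following is a sketch under assumptions: the `PolicyEntry` fields and the `lookup` helper are hypothetical names, and a real deployment would probably keep this in a governed content store rather than in code.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class PolicyEntry:
    intent: str
    approved_response: str               # plain-language, pre-approved wording
    effective_from: date
    effective_to: Optional[date] = None  # None means still in force
    must_escalate: bool = False          # True if humans must handle this intent


def lookup(truth_set, intent, today):
    """Return the entry in force for `intent` on `today`, or None."""
    candidates = [
        e for e in truth_set
        if e.intent == intent
        and e.effective_from <= today
        and (e.effective_to is None or today <= e.effective_to)
    ]
    # If versions overlap, prefer the one that took effect most recently.
    return max(candidates, key=lambda e: e.effective_from, default=None)
```

Returning `None` for an unknown intent or an expired policy gives the caller an unambiguous signal to escalate instead of improvising an answer.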

Step 3: Design escalation like you mean it

Customers don’t hate automation. They hate getting stuck.

Escalation should feel like a feature:

- immediate handoff when confidence drops

- transcript + summary delivered to the agent

- the customer doesn’t have to repeat authentication or context

If your escalation forces repetition, your AI rollout will get blamed for problems that existed before.
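A handoff that avoids repetition comes down to what travels with the call. Here is a minimal sketch of such a payload, assuming a hypothetical `Handoff` record and a transcript stored as a list of `{"speaker", "text"}` dictionaries. The summary step is a stand-in: a production system would likely generate it with a summarization model.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Handoff:
    contact_id: str
    intent: str
    authenticated: bool           # carried over so the agent does not re-verify
    summary: str
    transcript: list = field(default_factory=list)
    reason: str = "low_confidence"


def build_handoff(contact_id, intent, authenticated, transcript,
                  reason="low_confidence"):
    # Placeholder summary: the customer's most recent utterance.
    customer_lines = [t["text"] for t in transcript if t["speaker"] == "customer"]
    summary = customer_lines[-1] if customer_lines else ""
    return Handoff(contact_id, intent, authenticated, summary, transcript, reason)


def to_json(handoff):
    """Serialize the handoff for delivery to the agent desktop."""
    return json.dumps(asdict(handoff))
```

Carrying the `authenticated` flag and the escalation `reason` alongside the transcript lets the live agent open with the problem rather than with security questions.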

Step 4: Instrument everything (or you’ll argue about anecdotes)

AI projects become political when measurement is weak.

Track:

- top intents and deflection/containment by intent

- hallucination events (defined as ungrounded or incorrect responses)

- time-to-resolution

- repeat contact rate within 7 days

- agent workload mix (routine vs. complex)

Then run weekly tuning. AI isn’t “set it and forget it.” But it also doesn’t require a PhD team—just disciplined ops.
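Repeat contact rate is the metric most likely to expose false containment, and it needs a precise definition before anyone reports it. The sketch below assumes one such definition: a contact is a repeat if the same customer raised the same intent within the window before it. The tuple layout and function name are illustrative.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def repeat_contact_rate(contacts, window=timedelta(days=7)):
    """contacts: iterable of (customer_id, intent, timestamp) tuples.

    Returns the number of repeat contacts divided by total contacts.
    """
    by_key = defaultdict(list)
    for customer_id, intent, ts in contacts:
        by_key[(customer_id, intent)].append(ts)

    total = 0
    repeats = 0
    for stamps in by_key.values():
        stamps.sort()
        total += len(stamps)
        # Compare each contact with the one immediately before it.
        for prev, curr in zip(stamps, stamps[1:]):
            if curr - prev <= window:
                repeats += 1
    return repeats / total if total else 0.0
```

Grouping by intent as well as customer is a judgment call. Dropping the intent from the key gives a stricter number that also catches customers who call back about a different symptom of the same problem.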

Step 5: Expand from voice to digital (or vice versa) with shared logic

The best contact centers don’t build separate brains for phone, chat, and messaging.

Create shared:

- knowledge and policy objects

- intent taxonomy

- workflow APIs

- compliance rules

Then deploy across channels. Customers will move between voice and digital anyway. Your automation should follow them.
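In code terms, "shared logic" means the channel only decides how an answer is delivered, never what the answer is. A minimal sketch, with a hypothetical workflow registry and rendering function standing in for real workflow APIs:

```python
# Channel-agnostic workflows, keyed by intent. The single entry is illustrative.
INTENT_WORKFLOWS = {
    "order_status": lambda ctx: f"Order {ctx['order_id']} is {ctx['status']}.",
}


def resolve(intent, ctx):
    """Run the shared workflow for an intent; None means no workflow exists."""
    workflow = INTENT_WORKFLOWS.get(intent)
    if workflow is None:
        return None
    return workflow(ctx)


def render(channel, message):
    """Only presentation differs by channel."""
    if channel == "voice":
        return {"type": "speech", "text": message}
    return {"type": "text", "text": message}


def handle(channel, intent, ctx):
    message = resolve(intent, ctx)
    if message is None:
        return {"type": "escalate"}
    return render(channel, message)
```

With this split, a policy change is made once in the workflow and reaches phone, chat, and messaging together.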

What buyers should ask vendors before signing anything

If you’re evaluating AI voice agents or chatbots for customer service, don’t get distracted by a slick demo voice.

Ask questions that reveal production readiness:

- How does the system ground responses? (Show me the knowledge sources and governance.)

- What happens when confidence is low? (Show escalation logic and thresholds.)

- How do you prevent policy drift? (How are updates approved and deployed?)

- What’s your approach to identity verification? (Voice + data checks + fraud controls.)

- Can you integrate with our CRM/CCaaS/WFM stack? (Not “can,” but “how long and with what effort.”)

- How do we measure containment vs. customer frustration? (Show reporting and QA workflows.)

A simple rule: If the vendor can’t explain their guardrails in one minute, you’ll be the one explaining the outage to leadership.

People also ask: “Will AI replace contact center agents?”

No—and betting on full replacement is a bad strategy.

Here’s the more accurate framing: AI will replace tasks, not people, and the centers that use AI well will redesign roles around exception handling, relationship building, and revenue retention.

You’ll still need humans for:

- ambiguous situations and policy exceptions

- high-emotion calls (fraud, cancellations, medical/financial distress)

- negotiation and retention

- complex troubleshooting across multiple systems

What changes is the workload mix. And that’s the whole point.

The stance I’ll take: if you’re waiting for “perfect AI,” you’re already late

Contact center automation is entering 2026 with a clear pattern: AI voice agents and chatbots are delivering value now, especially when they’re grounded in controlled knowledge, integrated into workflows, and paired with strong escalation.

If you’ve been following our AI in Customer Service & Contact Centers series, you’ve seen the same theme from multiple angles: the winners aren’t the teams with the fanciest model. They’re the teams that treat AI like operations: measured, governed, and continuously improved.

If you want a practical next step, do this in the next 30 days:

- Pull your top 20 contact reasons.

- Identify the first 2 intents you can automate safely.

- Define containment, escalation, and QA requirements before you pick a tool.

The question worth asking now isn’t whether AI belongs in your contact center. It’s whether your competitors will train their automation on your customers’ expectations before you do.