Create AI-powered super agents with safer agent-assist, better knowledge retrieval, and smarter workflows—without betting trust on chatbots.

Build Super Agents with AI (Without Bot Risk)

A lot of contact centers are finding out the hard way that “AI-first” customer service can become risk-first customer service.

When a chatbot confidently gives the wrong policy, the customer doesn’t blame the bot. They blame you. And in 2025, as more teams roll out generative AI across support channels, that gap between promised efficiency and real-world reliability is where trust goes to die.

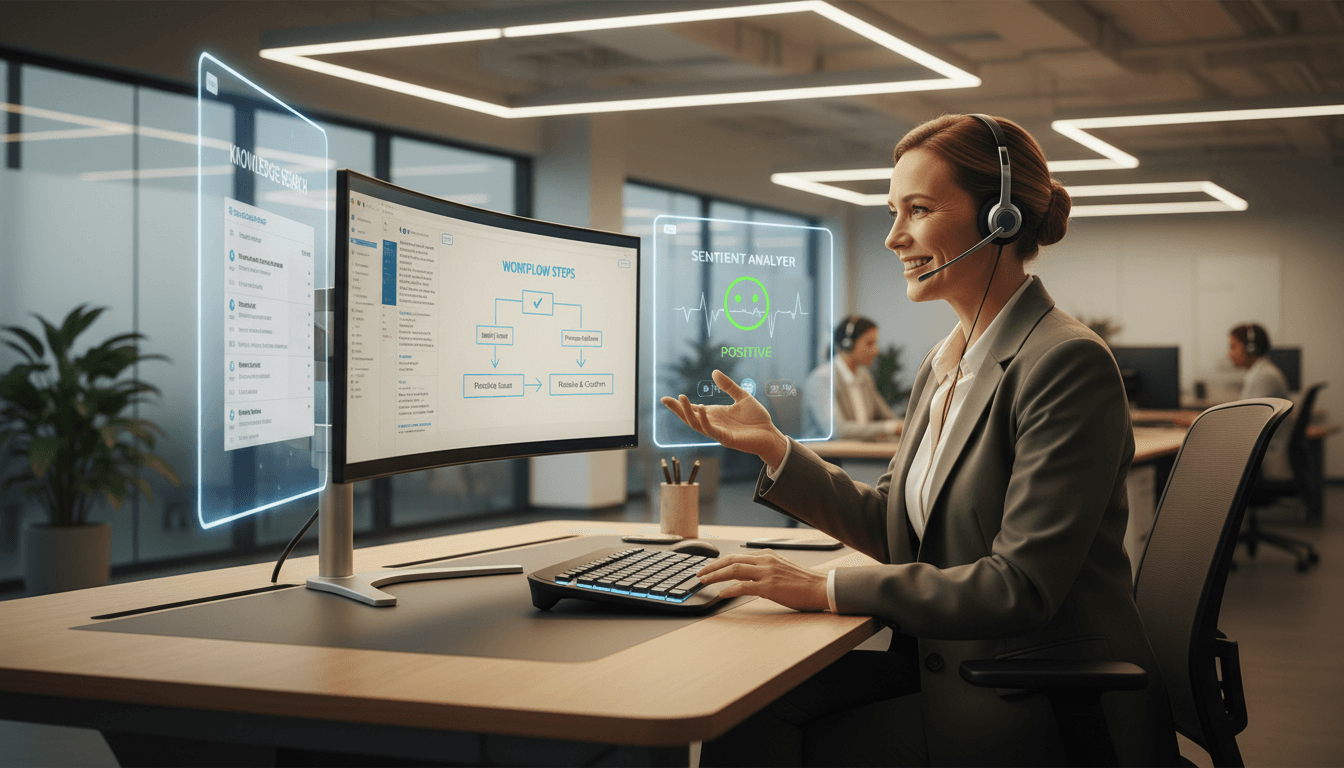

Here’s the better approach I keep seeing work: use AI to make your human agents faster, more accurate, and easier to train—while keeping customers shielded from direct AI answers when the stakes are high. That’s how you create super agents: agents who can handle more complexity with less stress, and resolve issues without the frantic tab-switching that drags down handle time.

“Super agents” are built, not hired

A super agent isn’t a mythical top performer you poach from a competitor. A super agent is what you get when your operation consistently gives agents three things:

- The right answer fast (even when policies change)

- The right workflow next (even when systems are fragmented)

- The right help at the right moment (even when a conversation turns)

AI in customer service is most valuable when it improves those three outcomes. The wins aren’t flashy demos; they’re boring and operational: fewer errors, shorter training time, and smoother resolution paths.

And yes: this is part of a broader shift in how AI is used across customer service and contact centers. The trend is moving away from “replace agents” toward “multiply agents.” That’s the strategy that scales without lighting your compliance team on fire.

The myth: chatbots are the main AI use case

Most companies still talk about contact center AI as if the end goal is to deflect every interaction into a bot. The reality is more nuanced:

- Customers will tolerate automation for simple tasks.

- Customers won’t tolerate automation when they’re stressed, confused, or dealing with money, medical issues, travel, or account access.

- Teams underestimate the cost of maintaining “accurate” bot behavior when policies, promos, and processes change weekly.

If you want scalable support, agent-assist is the safer starting point—and often the faster ROI.

Use AI to fix the hardest part of support: finding the truth

The single most common failure mode I see in contact centers isn’t rude agents or bad intent—it’s inconsistent information.

Your knowledge base grows like a junk drawer:

- Three articles for the same issue

- Conflicting versions of a process

- Old policy pages that never got retired

- “Temporary” exceptions that became permanent

Humans can’t reliably sort that out mid-call, under time pressure. AI can.

What “knowledge AI” should actually do

The goal isn’t to generate answers from scratch. The goal is to retrieve and rank what you already have, then present it clearly.

A high-performing agent-assist knowledge layer should:

- Search across sources (KB, CRM notes, policy docs, internal wiki)

- Identify the most current version of guidance

- Explain the answer with citations or references inside your systems

- Flag duplicates and obsolete articles for cleanup

That last point matters more than people admit. A knowledge base doesn’t stay clean because you buy software—it stays clean because the software makes decay visible.
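To make the retrieve-and-rank idea concrete, here is a minimal Python sketch. It assumes a hypothetical `Article` record pulled from your sources and uses plain word overlap as a stand-in for a real search index or embedding model. Retired articles are excluded, matches are ranked by relevance, ties go to the most recently updated version, and every hit carries a citation string the agent can check.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass
class Article:
    # Hypothetical record shape; real KB, CRM, and wiki exports will differ.
    article_id: str
    source: str
    title: str
    body: str
    updated: date
    retired: bool = False


def tokenize(text: str) -> set[str]:
    """Lowercased word set: a stand-in for a real search index or embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, articles: list[Article], top_k: int = 3) -> list[tuple[float, Article]]:
    """Rank live articles by query-term overlap; ties go to the newest version."""
    q = tokenize(query)
    if not q:
        return []
    scored = []
    for a in articles:
        if a.retired:
            continue  # retired pages should never reach the agent
        overlap = len(q & tokenize(a.title + " " + a.body))
        if overlap:
            scored.append((overlap / len(q), a))
    # Sort by (relevance, last-updated date), highest first.
    scored.sort(key=lambda pair: (pair[0], pair[1].updated), reverse=True)
    return scored[:top_k]


def cite(a: Article) -> str:
    """Reference the agent can click through to verify the answer."""
    return f"[{a.source}:{a.article_id}, updated {a.updated.isoformat()}]"


# Hypothetical example data.
kb = [
    Article("R-101", "kb", "Holiday return window", "Returns accepted within 30 days.", date(2024, 11, 1)),
    Article("R-214", "policy", "Holiday return window update",
            "Holiday orders can be returned within 45 days.", date(2025, 12, 1)),
    Article("R-007", "wiki", "Old return policy", "Returns accepted within 14 days.",
            date(2021, 3, 1), retired=True),
]

for score, article in search("holiday return window", kb):
    print(f"{score:.2f} {article.title} {cite(article)}")
```

In this toy data the 2025 policy update outranks the older 30-day article and the retired page never appears. Showing both live versions side by side is also what makes the conflict visible for cleanup.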

Practical example: policy changes during the holiday surge

It’s December. Your contact volume spikes. Returns, shipping delays, billing confusion, travel changes—pick your industry.

Now add a policy update (there’s always a policy update).

Without AI:

- Agents search manually

- Supervisors get flooded with “which article is right?” escalations

- Customers receive inconsistent answers

With agent-assist AI:

- The agent sees the latest guidance immediately

- The desktop suggests the correct disposition and next action

- Supervisors focus on true exceptions, not scavenger hunts

That’s how AI-powered agents become faster without becoming reckless.

Reduce handle time by removing the “six tabs” problem

Most contact centers still require agents to juggle six or seven applications to resolve one interaction: CRM, billing, order system, identity verification, knowledge base, ticketing, and a QA tool… at minimum.

The fix isn’t telling agents to “work smarter.” The fix is reducing unnecessary context switching.

Where RPA fits (and where it doesn’t)

Robotic process automation (RPA) works well when the process is:

- Repetitive

- Rule-based

- High-volume

- Low-exception

Pair AI with RPA and you get a strong pattern:

- AI identifies intent and what needs to happen next

- RPA completes the clicks, copy-pastes, and form fills

- The agent confirms and communicates

This is the underrated super-agent combo: AI for decisioning + RPA for execution.
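The decisioning-plus-execution split can be sketched as follows. Everything named here is hypothetical: keyword rules stand in for an intent model, `RPA_STEPS` stands in for bots in whatever RPA platform you use, and `agent_confirms` represents the agent’s approval click. The point is the shape of the flow: AI proposes, the agent approves, and only then do the mechanical steps run.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical keyword rules standing in for a trained intent model.
INTENT_KEYWORDS = {
    "address_change": ("address", "moved", "moving"),
    "refund_check": ("refund", "money back"),
    "plan_change": ("upgrade", "downgrade", "change my plan"),
}

# Hypothetical step names; each would trigger a bot in your RPA platform.
RPA_STEPS = {
    "address_change": ["open_crm_record", "update_address_fields", "sync_billing_address"],
    "refund_check": ["open_order", "check_return_window", "check_refund_eligibility"],
    "plan_change": ["open_account", "apply_plan_change", "recalculate_invoice"],
}


@dataclass
class Suggestion:
    intent: str
    steps: list[str]
    executed: list[str] = field(default_factory=list)


def detect_intent(utterance: str) -> Optional[str]:
    """AI decisioning (stubbed): return the first intent whose keywords appear."""
    text = utterance.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(k in text for k in keywords):
            return intent
    return None


def suggest(utterance: str) -> Optional[Suggestion]:
    intent = detect_intent(utterance)
    if intent is None:
        return None  # no match: the agent handles it manually
    return Suggestion(intent, list(RPA_STEPS[intent]))


def run_if_confirmed(s: Suggestion, agent_confirms: Callable[[Suggestion], bool]) -> Suggestion:
    """RPA execution, gated on the agent's approval."""
    if agent_confirms(s):
        for step in s.steps:
            # Placeholder: a real integration would call the RPA bot here.
            s.executed.append(step)
    return s


s = suggest("Hi, I moved last month and need to update my address")
run_if_confirmed(s, lambda sug: True)  # the agent clicks "approve"
print(s.intent, s.executed)
```

If the agent declines, nothing executes and the suggestion is still available for logging, which keeps the agent in control of every action taken on the account.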

A simple workflow worth automating first

If you’re deciding where to start, choose a workflow that causes both long average handle time (AHT) and high error rates. For many teams, that’s:

- Address changes

- Refund eligibility checks

- Plan changes / upgrades

- Password resets with verification steps

Automate the mechanical steps, not the customer conversation. That’s how you keep quality high while speeding up the boring parts.
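One way to apply the RPA criteria and the “long AHT plus high error rate” rule together is a simple scoring pass over candidate workflows. This is a sketch with assumed thresholds and made-up example numbers, not benchmarks. Workflows that aren’t rule-based, are low-volume, or have too many exceptions are filtered out; the rest are ranked by monthly agent-hours weighted by error rate.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    name: str
    monthly_volume: int
    aht_secs: float        # average handle time for this contact reason
    error_rate: float      # share of contacts needing rework (0 to 1)
    exception_rate: float  # share that falls outside documented rules (0 to 1)
    rule_based: bool


def rpa_suitable(w: Workflow, min_volume: int = 1000, max_exception_rate: float = 0.10) -> bool:
    """Rule-based, high-volume, low-exception. Thresholds are assumptions, not benchmarks."""
    return w.rule_based and w.monthly_volume >= min_volume and w.exception_rate <= max_exception_rate


def priority(w: Workflow) -> float:
    """Monthly agent-hours, weighted up by how often the work has to be redone."""
    hours = w.monthly_volume * w.aht_secs / 3600
    return hours * (1 + w.error_rate)


def rank_candidates(workflows: list[Workflow]) -> list[Workflow]:
    return sorted((w for w in workflows if rpa_suitable(w)), key=priority, reverse=True)


# Made-up numbers for illustration only.
candidates = [
    Workflow("address_change", 4000, 420, 0.08, 0.05, True),
    Workflow("refund_eligibility", 2500, 600, 0.15, 0.08, True),
    Workflow("plan_change", 1500, 540, 0.05, 0.20, True),
    Workflow("password_reset", 6000, 240, 0.02, 0.03, True),
]

for w in rank_candidates(candidates):
    print(f"{w.name}: {priority(w):.0f} weighted hours/month")
```

With these illustrative inputs, plan changes drop out because too many contacts are exceptions, even though their handle time is long. That is usually a sign the process needs cleanup before it needs a bot.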

Sentiment analysis: stop treating it like a score

Sentiment analysis is often sold as if AI can read emotions better than humans. In practice, humans are usually better at emotional nuance.

The real value of sentiment analysis in contact centers is operational:

- Detecting dissatisfaction trends across thousands of interactions

- Flagging calls that need supervisor intervention

- Identifying coaching opportunities based on patterns, not anecdotes

What good sentiment monitoring looks like

Use sentiment analysis as a signal, not a verdict. A practical implementation includes:

- Real-time alerts when sentiment drops quickly (a “call going off the rails” moment)

- Post-interaction trend reporting by queue, reason code, product, or policy

- Supervisor workflows that make it easy to barge in, message the agent, or offer suggested de-escalation language

And one opinionated take: if your sentiment tool produces a score but doesn’t produce an action, it’s a dashboard ornament.
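Here is a minimal sketch of the “call going off the rails” alert, assuming your sentiment model emits a per-utterance score between -1 and 1. The window size, threshold, and action name are illustrative assumptions. The monitor returns an action rather than just a number, and it fires once per call so supervisors aren’t paged repeatedly.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Alert:
    call_id: str
    drop: float
    action: str


class SentimentMonitor:
    """Flags a call when sentiment falls sharply within a short window.

    Assumes per-utterance scores in [-1, 1] from whatever sentiment model you use.
    Window size, threshold, and the action name are illustrative assumptions.
    """

    def __init__(self, call_id: str, window: int = 4, drop_threshold: float = 0.6):
        self.call_id = call_id
        self.scores = deque(maxlen=window)  # only the most recent utterances
        self.drop_threshold = drop_threshold
        self.alerted = False

    def observe(self, score: float) -> Optional[Alert]:
        self.scores.append(score)
        if self.alerted or len(self.scores) < 2:
            return None
        drop = max(self.scores) - self.scores[-1]  # recent peak minus current score
        if drop >= self.drop_threshold:
            self.alerted = True  # one alert per call
            return Alert(self.call_id, round(drop, 2), "notify_supervisor_with_deescalation_script")
        return None


monitor = SentimentMonitor("call-42")
for score in [0.4, 0.3, 0.1, -0.3, -0.5]:
    alert = monitor.observe(score)
    if alert:
        print(alert)
```

Measuring the drop from the recent peak, rather than the absolute score, is what separates a call that is deteriorating from a customer who was frustrated from the first second.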

Why “humans in the loop” is the safest AI strategy

Direct-to-customer generative AI has three problems that don’t go away with optimistic planning:

- Hallucinations (confidently incorrect answers)

- Security exposure (prompt injection and data leakage)

- Legal/privacy risk (recording, processing, and disclosure issues)

Several high-profile cases show these risks playing out in public:

- A travel brand was found liable after its chatbot gave incorrect policy information.

- A retailer faced legal action over allegations involving call recording and third-party AI processing.

Even if your company never becomes a headline, the damage can happen quietly:

- Refunds issued incorrectly

- Wrong commitments made to customers

- Regulatory complaints

- Trust erosion that shows up later as churn

The “agent shield” model

If you want a scalable and defensible approach, use AI behind the scenes:

- AI drafts responses, recommends next steps, and surfaces knowledge

- The agent approves, edits, and owns the final communication

- The system logs what AI suggested vs. what the agent sent

That final bullet is a big deal for compliance and continuous improvement. It creates an audit trail and helps you tune what the AI is allowed to suggest.
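The logging step of the agent shield model might look like the sketch below. It stores both texts plus a similarity ratio from Python’s standard `difflib.SequenceMatcher`, so a low ratio marks suggestions agents heavily rewrote. The record fields and the JSON-lines format are assumptions; your compliance requirements may call for additional fields.

```python
import difflib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AssistRecord:
    # Hypothetical audit fields; extend to match your compliance requirements.
    interaction_id: str
    agent_id: str
    ai_suggestion: str
    agent_sent: str
    logged_at: str
    similarity: float  # 1.0 = sent verbatim; lower = heavier agent edits


def log_assist(interaction_id: str, agent_id: str, suggestion: str, sent: str) -> AssistRecord:
    ratio = difflib.SequenceMatcher(None, suggestion, sent).ratio()
    return AssistRecord(
        interaction_id=interaction_id,
        agent_id=agent_id,
        ai_suggestion=suggestion,
        agent_sent=sent,
        logged_at=datetime.now(timezone.utc).isoformat(),
        similarity=round(ratio, 3),
    )


def to_json_line(record: AssistRecord) -> str:
    """One JSON object per line, suited to append-only audit storage."""
    return json.dumps(asdict(record))


record = log_assist(
    "INT-1001",
    "agent-17",
    "You're eligible for a full refund.",
    "I've submitted your refund request; a specialist will confirm eligibility.",
)
print(to_json_line(record))
```

Filtering these records for low similarity is a quick way to find where the AI keeps suggesting things agents won’t say to customers.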

The AI-enabled agent desktop: where most rollouts succeed or fail

AI doesn’t live in a vacuum. It lives inside the agent desktop.

If you add AI as “application number eight,” adoption drops. If you embed AI into the workflow agents already use, adoption rises.

What a unified desktop needs in 2026 planning

Most teams reviewing their 2026 roadmap should push for an agent desktop that unifies:

- Channels: voice, email, messaging, chat, video

- Context: customer history, open cases, recent orders, entitlements

- Knowledge: policies, procedures, troubleshooting steps

- Automation: RPA actions, forms, dispositioning

- Quality: call summaries, wrap-up assistance, QA flags

AI should feel like a helpful layer across the desktop—not a separate destination.

Metrics that prove you’re creating super agents

If you want proof (and a budget that renews), tie your contact center AI program to metrics leaders already care about:

- Time to competency: reduce new-hire ramp time by weeks, not days

- First contact resolution (FCR): increase by removing wrong answers and rework

- Average handle time (AHT): decrease through workflow automation and faster knowledge retrieval

- Error rate / rework rate: fewer credits, fewer repeat contacts

- Agent attrition: lower burnout by reducing cognitive load

One more: track tool friction.

If agents need to fight the desktop, your AI project becomes an expensive distraction.
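Two of the metrics listed above, AHT and FCR, can be computed directly from interaction records, as in this sketch. The `Contact` record is hypothetical, and FCR is approximated as “no repeat contact from the same customer for the same reason within seven days,” which is one common proxy. Substitute your own definition if it differs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Contact:
    customer_id: str
    reason: str          # contact reason / disposition code
    start: datetime
    handle_secs: int


def average_handle_time(contacts: list[Contact]) -> float:
    return sum(c.handle_secs for c in contacts) / len(contacts)


def first_contact_resolution(contacts: list[Contact], window_days: int = 7) -> float:
    """Share of contacts NOT followed by a repeat contact (same customer, same reason)
    within window_days. A common proxy for FCR; your definition may differ."""
    window = timedelta(days=window_days)
    timelines: dict[tuple[str, str], list[datetime]] = {}
    for c in contacts:
        timelines.setdefault((c.customer_id, c.reason), []).append(c.start)
    resolved = 0
    for starts in timelines.values():
        starts.sort()
        # Pair each contact with the next one for the same customer and reason.
        for current, nxt in zip(starts, starts[1:] + [None]):
            if nxt is None or nxt - current > window:
                resolved += 1
    return resolved / len(contacts)


# Hypothetical sample data.
contacts = [
    Contact("c1", "billing", datetime(2025, 12, 1, 9, 0), 300),
    Contact("c1", "billing", datetime(2025, 12, 3, 10, 0), 420),  # repeat within 7 days
    Contact("c2", "returns", datetime(2025, 12, 1, 11, 0), 240),
    Contact("c3", "returns", datetime(2025, 12, 2, 15, 0), 360),
]
print(average_handle_time(contacts), first_contact_resolution(contacts))
```

Tracking both together matters: AHT can be pushed down by rushing customers off the line, but that tends to show up as repeat contacts and a falling FCR.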

A practical rollout plan (that doesn’t create chaos)

Most companies get this wrong by trying to launch “AI” as a single big feature. A better plan is to launch capabilities in an order that compounds.

Phase 1: Clean retrieval (2–6 weeks)

- Connect AI search across your existing knowledge sources

- Prioritize “top 20 contact drivers” content

- Add feedback buttons: “helpful / not helpful” + reason
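Feedback buttons only pay off if someone acts on the votes. This sketch turns raw feedback into a review queue; the `Feedback` shape, the five-vote minimum, and the 60% helpfulness cutoff are illustrative assumptions.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Feedback:
    article_id: str
    helpful: bool
    reason: str = ""  # e.g. "outdated", "conflicts with policy", "hard to find"


def review_queue(events: list[Feedback], min_votes: int = 5, max_helpful_rate: float = 0.6):
    """Return (article_id, helpful_rate, top_reason) for articles agents say aren't working."""
    totals: Counter = Counter()
    helpful: Counter = Counter()
    reasons: defaultdict = defaultdict(Counter)
    for e in events:
        totals[e.article_id] += 1
        if e.helpful:
            helpful[e.article_id] += 1
        elif e.reason:
            reasons[e.article_id][e.reason] += 1
    queue = []
    for article_id, total in totals.items():
        rate = helpful[article_id] / total
        if total >= min_votes and rate <= max_helpful_rate:
            top = reasons[article_id].most_common(1)
            queue.append((article_id, round(rate, 2), top[0][0] if top else ""))
    return sorted(queue, key=lambda item: item[1])  # least helpful first


# Hypothetical votes from the agent desktop.
events = (
    [Feedback("KB-12", False, "outdated")] * 3
    + [Feedback("KB-12", False, "conflicts with policy")]
    + [Feedback("KB-12", True)]
    + [Feedback("KB-30", True)] * 5
)
print(review_queue(events))
```

The minimum vote count keeps one frustrated click from sending an article to review; the top reason tells the content owner what kind of fix is needed.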

Phase 2: Guided workflows (4–10 weeks)

- Add next-best-action suggestions

- Introduce RPA for 1–3 high-volume workflows

- Standardize dispositions and outcomes

Phase 3: Real-time assist (6–12 weeks)

- Live call summarization and wrap-up help

- Supervisor sentiment alerts with clear intervention actions

- Compliance guardrails (PII redaction, restricted topics)
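For the guardrails, a regex pass is the simplest possible starting point. This sketch is illustrative only: the patterns cover a few common US-style formats and will miss plenty, and `RESTRICTED_TOPICS` is a hypothetical policy list. Production systems typically combine pattern rules with dedicated PII detection and test against real transcripts.

```python
import re

# Illustrative patterns only: a few US-style formats, far from complete.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits
    "PHONE": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
}

# Hypothetical list of topics the assistant must hand to a human.
RESTRICTED_TOPICS = ("legal advice", "medical diagnosis")


def redact(text: str) -> str:
    """Replace matches with a label before text is logged or sent to a model."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def needs_human_review(text: str) -> bool:
    """Crude substring check for restricted topics."""
    lowered = text.lower()
    return any(topic in lowered for topic in RESTRICTED_TOPICS)


transcript = "Email jane.doe@example.com or call 555-123-4567. Card 4111 1111 1111 1111."
print(redact(transcript))
```

Redacting before anything is stored or sent to a model limits what a leak or a prompt injection can expose in the first place.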

Phase 4: Continuous improvement (ongoing)

- Weekly review of “AI suggested vs. agent sent” deltas

- KB de-duplication and retirement based on AI flags

- Coaching programs tied to pattern insights
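KB de-duplication and retirement flags can start as simple text similarity plus an age check. This sketch compares every pair of articles using Jaccard similarity over word sets and flags articles not updated within a year. The `KbArticle` record, the 0.8 threshold, and the 365-day cutoff are all assumptions. Pairwise comparison is fine for a few thousand articles; larger libraries would need a smarter candidate search.

```python
import re
from dataclasses import dataclass
from datetime import date
from itertools import combinations
from typing import Optional


@dataclass
class KbArticle:
    article_id: str
    text: str
    updated: date


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cleanup_flags(articles: list[KbArticle], threshold: float = 0.8,
                  stale_after_days: int = 365, today: Optional[date] = None) -> list[tuple[str, str, str]]:
    """Flag near-duplicate pairs (suggest retiring the older one) and stale articles."""
    today = today or date.today()
    flags = []
    for a, b in combinations(articles, 2):
        sim = jaccard(words(a.text), words(b.text))
        if sim >= threshold:
            older, newer = (a, b) if a.updated <= b.updated else (b, a)
            flags.append(("possible_duplicate", older.article_id,
                          f"{sim:.2f} similar to newer {newer.article_id}"))
    for a in articles:
        age = (today - a.updated).days
        if age > stale_after_days:
            flags.append(("stale", a.article_id, f"not updated in {age} days"))
    return flags


# Hypothetical articles.
library = [
    KbArticle("A1", "To reset a password verify identity then send a reset link", date(2023, 1, 10)),
    KbArticle("A2", "To reset a password, verify identity, then send the reset link", date(2025, 6, 1)),
    KbArticle("A3", "Holiday shipping cutoff is December 18", date(2025, 11, 20)),
]
for flag in cleanup_flags(library, today=date(2025, 12, 15)):
    print(flag)
```

Flags like these are suggestions for a content owner, not automatic deletions; a human should still confirm which version reflects current policy.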

If you’re trying to drive leads (and not just ship features), the best moment to talk to prospects is usually after Phase 1, when teams can already see reduced friction and faster answers.

The contact center future: fewer bots, stronger agents

AI-powered customer support isn’t a contest between humans and machines. It’s an operations design problem: how do you increase throughput and consistency without increasing risk?

Building super agents is the most direct path. Give agents AI that retrieves the truth, reduces clicks, and warns when an interaction is going sideways. Keep humans in the loop for judgment calls and customer-facing commitments.

If you’re planning your 2026 contact center roadmap now, this is the question worth asking internally: Where are we still forcing humans to do computer work—and where should computers be assisting humans instead?