AI customer support can scale without sacrificing quality—if every interaction feeds a learning loop. See the model U.S. digital services can copy.

AI Customer Support That Improves With Every Interaction

Most companies still treat customer support like a factory line: tickets in, tickets out. That mindset breaks the moment you hit real scale—especially if you’re a digital service provider serving millions of users across multiple channels (chat, email, voice, in-product help) with expectations of instant resolution.

OpenAI’s internal support model (shared publicly in late 2025) shows a more practical direction for U.S. tech companies: treat support as a learning system, not a queue. Every interaction should make the next interaction faster, safer, and more consistent. This isn’t about “deflecting” tickets with a chatbot. It’s about building an AI-powered customer service operating model where knowledge, quality measurement, and automation reinforce each other.

This post is part of our “AI in Customer Service & Contact Centers” series, and it focuses on what digital-first teams can copy: the architecture, the people model, and the measurement approach that turns daily conversations into durable improvements.

Support at scale is an operating model problem, not a staffing problem

The core point: hypergrowth support can’t be solved by hiring alone. If your volumes grow faster than your headcount, the math wins every time. Even well-run contact centers hit diminishing returns when they only optimize for throughput.

A better framing is: support is an engineering and operations design challenge. The goal isn’t “close tickets faster.” The goal is to help users get what they need with the least friction, while continuously reducing repeat issues and inconsistent answers.

Here’s what changes when you adopt that stance:

- You stop measuring success purely by average handle time and backlog.

- You treat “one-off weird tickets” as signals for product and policy gaps.

- You invest in infrastructure that turns frontline work into reusable assets.

If you run a U.S.-based SaaS product, fintech platform, marketplace, healthcare portal, or any subscription digital service, this matters because customer support is increasingly part of the product experience. Users don’t separate “the app” from “the help.” They blame the whole system.

The holiday reality check (December 2025)

Late December is a stress test for support teams: renewals, billing confusion, travel-related usage spikes, end-of-year procurement, and holiday staffing constraints. Teams whose AI customer support systems learn continuously tend to be more resilient during these seasonal spikes than teams that rely on static macros and brittle decision trees.

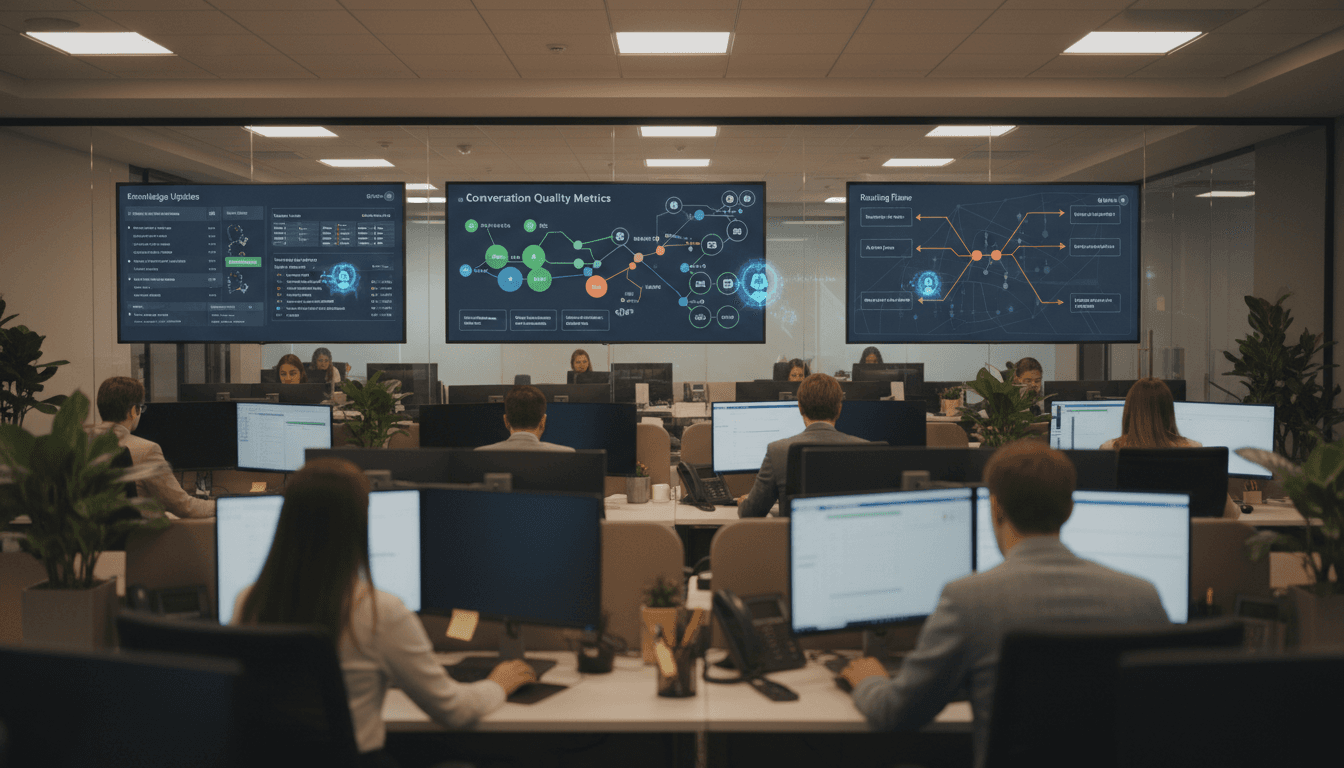

The three-part blueprint: surfaces, knowledge, and evals

OpenAI describes its model as a loop connecting three building blocks. This is the part many companies miss: you don’t get reliable AI support by buying a chatbot. You get it by connecting where customers ask, what the system knows, and how you measure quality.

1) Surfaces: meet customers where they actually get stuck

“Surfaces” are the places users ask for help:

- Web chat and in-app messaging

- Email support

- Phone or voice support

- Help embedded directly inside the product (tooltips, guided flows, contextual help)

The trend across U.S. digital services is clear: in-product support is growing faster than traditional ticketing. When customers can resolve an issue without leaving the workflow (updating a payment method, exporting data, resetting MFA), you reduce frustration and cut cost per resolution.

A practical stance I’ve found works: treat each support surface as a product surface. Give it owners, roadmaps, and quality metrics—because it shapes retention as directly as your onboarding flow.

2) Knowledge: stop treating docs as static content

Most knowledge bases fail for one reason: they’re written as documentation projects, not as living systems. In a learning support model, knowledge is continuously updated from real interactions:

- Repeated confusion becomes a clearer article or in-product explanation

- Edge cases become internal playbooks

- Policy decisions become structured guidance that AI can apply consistently

This is the bridge to strong AI-driven support systems: when your knowledge is grounded in real cases, your automation gets more accurate and your agents stop improvising.

3) Evals and classifiers: define “good support” in measurable terms

This is the most transferable idea for contact centers: quality has to be codified and tested, not just coached.

Evals and classifiers let teams measure things like:

- Correctness (did we provide the right answer?)

- Tone (was the interaction respectful and clear?)

- Policy adherence (did we follow refund rules, safety rules, compliance constraints?)

- Escalation judgment (did the system know when not to answer?)

If you want to use AI in contact centers responsibly, this is non-negotiable. Without evaluation loops, you don’t have an AI support program—you have a roulette wheel.

Snippet-worthy truth: If you can’t write a test for “good support,” you can’t scale “good support.”
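
To make "write a test for good support" concrete, here is a minimal Python sketch of one codified eval case. Everything in it is an assumption for illustration: `EvalCase`, `Reply`, and `run_eval` are hypothetical names, not OpenAI's tooling, and simple keyword matching stands in for the model-graded or classifier-based checks a real program would likely use. Tone is left out because it cannot be checked meaningfully with keywords.

```python
from dataclasses import dataclass, field


@dataclass
class EvalCase:
    """One codified example of 'good support' for a known contact driver."""
    name: str
    customer_message: str
    must_mention: list[str] = field(default_factory=list)      # correctness proxy
    must_not_mention: list[str] = field(default_factory=list)  # policy proxy
    should_escalate: bool = False                               # escalation judgment


@dataclass
class Reply:
    text: str
    escalated: bool


def run_eval(case: EvalCase, reply: Reply) -> dict[str, bool]:
    """Score a reply per dimension; keyword matching stands in for a real grader."""
    text = reply.text.lower()
    return {
        "correctness": all(term.lower() in text for term in case.must_mention),
        "policy": not any(term.lower() in text for term in case.must_not_mention),
        "escalation": reply.escalated == case.should_escalate,
    }


# Hypothetical billing case: the reply must mention the refund and its timeline,
# and must not promise anything as "guaranteed".
case = EvalCase(
    name="duplicate-charge-refund",
    customer_message="I was charged twice for my subscription this month.",
    must_mention=["refund", "5-7 business days"],
    must_not_mention=["guaranteed"],
)
reply = Reply(
    text="We've issued a refund; it should arrive in 5-7 business days.",
    escalated=False,
)
result = run_eval(case, reply)
print(result)
```

The useful property is that each dimension is scored separately, so a reply that is correct but breaks policy shows up as a policy failure rather than as a vague "bad answer."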

Your support team has to become systems thinkers

A surprising shift in OpenAI’s approach is organizational: support reps aren’t treated as “ticket processors.” They’re treated as builders who improve the system.

That’s a strong model for U.S. tech organizations because it solves a common failure mode: AI gets implemented around the support team instead of with them. Then adoption stalls, edge cases pile up, and frontline trust collapses.

What “support reps as builders” looks like in practice

In a learning-loop model, experienced reps:

- Flag conversations that should become test cases

- Propose new categories (classifiers) when patterns emerge

- Prototype lightweight automations to close workflow gaps

- Improve knowledge articles based on what customers actually ask

This changes training, too. It’s no longer just “here are policies and scripts.” It’s also:

- How to evaluate AI outputs

- How to spot structural gaps

- How to feed improvements back into the system

For leaders, the hiring profile shifts. You still need empathy and communication, but you also want curiosity and comfort with tools. The future contact center agent is part support specialist, part QA analyst, part product feedback engine.

Production-grade AI support needs observability, not magic

A lot of AI customer service rollouts fail because teams deploy automation without visibility. When something goes wrong—wrong refund, wrong guidance, wrong tone—nobody can answer “why did it do that?” quickly.

OpenAI’s internal description emphasizes platform primitives that enable production operations:

- Traces and observability so teams can replay runs and inspect tool calls

- Classifiers for tone/correctness/policy checks

- Voice support capability for real-time conversations

- Evaluation dashboards to track quality trends over time

Even if you’re not using OpenAI’s internal tools, the principle is universal:

If your AI can take actions (refunds, account changes, cancellations), you need auditability and step-level logs. For U.S. companies dealing with chargebacks, privacy expectations, and increasingly strict internal risk reviews, observability isn’t an extra feature—it’s what makes the whole idea acceptable.
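
As a rough illustration of what step-level logs could look like, the Python sketch below records each classifier result, tool call, and model output for a single run. The `Trace` and `Step` classes, the step kinds, and the tool names (`lookup_invoice`, `issue_refund`) are all hypothetical; they are not taken from OpenAI's platform or any specific library.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class Step:
    kind: str       # e.g. "classifier", "tool_call", "model_output"
    name: str
    payload: dict
    timestamp: float = field(default_factory=time.time)


@dataclass
class Trace:
    """Step-level record of one AI support run, kept so it can be inspected later."""
    conversation_id: str
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    steps: list[Step] = field(default_factory=list)

    def record(self, kind: str, name: str, **payload) -> None:
        self.steps.append(Step(kind=kind, name=name, payload=payload))

    def tool_calls(self) -> list[Step]:
        return [s for s in self.steps if s.kind == "tool_call"]

    def to_json(self) -> str:
        # asdict() recurses into the nested Step dataclasses.
        return json.dumps(asdict(self), indent=2)


trace = Trace(conversation_id="conv-123")
trace.record("classifier", "intent", label="billing_dispute", score=0.93)
trace.record("tool_call", "lookup_invoice", invoice_id="inv-001")
trace.record("tool_call", "issue_refund", invoice_id="inv-001", amount_cents=1999)
trace.record("model_output", "reply", text="Your refund has been issued.")

# Answering "why did it do that?" starts with listing the actions taken, in order.
for step in trace.tool_calls():
    print(step.name, step.payload)
```

Serializing the whole trace with `to_json()` gives an audit record that can be stored alongside the ticket.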

A practical “from zero to real” rollout path

If you’re building an AI-powered customer support program during 2026 planning cycles, this sequence tends to work:

1. Start with Q&A + citations to internal knowledge (no actions yet)

2. Add classification and routing (what is this issue? how urgent? who owns it?)

3. Add safe automations (status lookups, password reset guidance, invoice retrieval)

4. Add high-risk actions only after evals mature (refunds, account changes)

5. Expand to voice support once your text workflows are stable

Skipping the groundwork in steps 2 and 4, meaning classification and routing first and mature evals before high-risk actions, is where most teams get burned.
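
The staged sequence above can be sketched as a simple gate: each stage is enabled only when its evals pass at a required rate, and a failing stage blocks everything after it. This is an assumption-heavy Python illustration. The `Stage` names mirror the list, but the thresholds in `MIN_PASS_RATE` are invented placeholders, and the article does not prescribe a specific gating mechanism.

```python
from enum import IntEnum


class Stage(IntEnum):
    QA_WITH_CITATIONS = 1
    CLASSIFY_AND_ROUTE = 2
    SAFE_AUTOMATIONS = 3
    HIGH_RISK_ACTIONS = 4
    VOICE = 5


# Hypothetical thresholds: minimum eval pass rate required before a stage is enabled.
MIN_PASS_RATE = {
    Stage.QA_WITH_CITATIONS: 0.0,   # the starting point, always available
    Stage.CLASSIFY_AND_ROUTE: 0.90,
    Stage.SAFE_AUTOMATIONS: 0.95,
    Stage.HIGH_RISK_ACTIONS: 0.99,
    Stage.VOICE: 0.99,
}


def highest_enabled_stage(pass_rates: dict[Stage, float]) -> Stage:
    """Walk the stages in order and stop at the first one whose evals fall short."""
    enabled = Stage.QA_WITH_CITATIONS
    for stage in Stage:
        if pass_rates.get(stage, 0.0) < MIN_PASS_RATE[stage]:
            break  # later stages stay off, so no step can be skipped
        enabled = stage
    return enabled


rates = {
    Stage.CLASSIFY_AND_ROUTE: 0.96,
    Stage.SAFE_AUTOMATIONS: 0.97,
    Stage.HIGH_RISK_ACTIONS: 0.92,  # refund evals are not mature yet
}
print(highest_enabled_stage(rates).name)  # SAFE_AUTOMATIONS
```

Because the loop breaks at the first failing stage, a strong voice pass rate cannot enable voice while high-risk action evals are still failing.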

How “learning that compounds” reduces cost and raises quality

The compounding effect is the point: daily conversations become production tests, and production tests shape future behavior.

Here’s the cause-effect chain that makes this powerful:

- A confusing billing edge case appears 50 times this week

- Reps flag it and turn it into an eval test

- Knowledge is updated (and maybe the product UI is clarified)

- The AI agent is measured continuously against the eval

- Next week, that edge case is resolved correctly in seconds—across chat, email, and voice

That loop improves three outcomes that executives care about:

- Faster resolution (users get answers quickly)

- Lower cost per contact (fewer escalations and repeat contacts)

- Higher consistency (policy adherence and tone don’t vary by agent)

Just as important: it creates a disciplined way to decide when the AI should not answer. That’s how you prevent confident nonsense from becoming a brand problem.
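
One possible shape for that "should the AI answer?" decision is a short rule that checks grounding, intent confidence, and recent eval results before a draft is sent. The Python sketch below is illustrative only: the `DraftAnswer` fields, the `should_answer` function, and the default thresholds are assumptions, not something described in OpenAI's model.

```python
from dataclasses import dataclass


@dataclass
class DraftAnswer:
    intent: str
    intent_confidence: float  # from a hypothetical intent classifier, 0.0 to 1.0
    cited_articles: int       # number of knowledge articles grounding the draft
    eval_pass_rate: float     # recent eval pass rate for this intent


def should_answer(
    draft: DraftAnswer,
    min_confidence: float = 0.8,   # placeholder threshold
    min_pass_rate: float = 0.95,   # placeholder threshold
) -> tuple[bool, str]:
    """Return (answer?, reason). Any failed check hands the case to a human."""
    if draft.cited_articles == 0:
        return False, "no grounding in the knowledge base"
    if draft.intent_confidence < min_confidence:
        return False, "intent unclear"
    if draft.eval_pass_rate < min_pass_rate:
        return False, "evals for this intent are not passing reliably"
    return True, "ok"


draft = DraftAnswer(
    intent="refund_request",
    intent_confidence=0.91,
    cited_articles=2,
    eval_pass_rate=0.88,
)
answer, reason = should_answer(draft)
print(answer, reason)
```

Returning a reason alongside the decision means escalations can be counted by cause, which shows whether the fix belongs in knowledge, in classifiers, or in the evals themselves.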

“People also ask” style questions (answered directly)

Does AI customer support replace human agents? No. The winning model pairs AI with humans: AI handles routine, lookup-heavy work; humans handle ambiguity, exceptions, and relationship-sensitive cases.

What’s the difference between a chatbot and an AI support agent? A chatbot typically follows scripted flows. An AI support agent can reason over context and use tools (like billing systems), and it is governed by evals, classifiers, and observability.

What should we measure in AI-driven customer service? Measure correctness, policy adherence, escalation accuracy, customer satisfaction, repeat contact rate, and automation success rate—not just handle time.
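
As a small sketch of how a few of these metrics might be computed from contact records, the Python below uses one plausible set of definitions. The `Contact` fields and the formulas are assumptions for illustration (for example, a "repeat" is a contact from the same customer about the same issue within seven days); teams will define these differently.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    customer_id: str
    issue: str
    day: int                     # day index, used to detect repeats within a window
    handled_by_ai: bool
    escalated: bool
    should_have_escalated: bool  # label from human review
    correct: bool                # label from an eval or human review


def repeat_contact_rate(contacts: list[Contact], window_days: int = 7) -> float:
    """Share of contacts that follow an earlier one (same customer, same issue) within the window."""
    last_seen: dict[tuple[str, str], int] = {}
    repeats = 0
    for c in sorted(contacts, key=lambda c: c.day):
        key = (c.customer_id, c.issue)
        if key in last_seen and c.day - last_seen[key] <= window_days:
            repeats += 1
        last_seen[key] = c.day
    return repeats / len(contacts) if contacts else 0.0


def escalation_accuracy(contacts: list[Contact]) -> float:
    """Share of contacts where the escalation decision matched the reviewer's label."""
    if not contacts:
        return 0.0
    return sum(c.escalated == c.should_have_escalated for c in contacts) / len(contacts)


def automation_success_rate(contacts: list[Contact]) -> float:
    """Share of AI-handled contacts resolved correctly without escalation."""
    ai = [c for c in contacts if c.handled_by_ai]
    if not ai:
        return 0.0
    return sum(c.correct and not c.escalated for c in ai) / len(ai)


contacts = [
    Contact("a", "billing", 1, True, False, False, True),
    Contact("a", "billing", 3, True, True, True, True),    # repeat contact
    Contact("b", "login", 2, True, False, False, True),
    Contact("c", "export", 5, False, False, True, True),   # missed escalation
]
print(repeat_contact_rate(contacts))      # 0.25
print(escalation_accuracy(contacts))      # 0.75
print(automation_success_rate(contacts))  # 2 of 3 AI-handled contacts
```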

A support model U.S. digital services can actually copy

The most useful takeaway from OpenAI’s approach isn’t any single tool. It’s the operating model:

- Connect every channel to the same learning loop

- Treat knowledge as a product that evolves from real conversations

- Codify quality with evals and classifiers

- Make reps co-builders so improvements ship fast

- Instrument everything so you can debug and audit outcomes

If you’re leading customer support, CX operations, or a contact center transformation, a good next step is an internal workshop with three outputs:

- A list of your top 25 contact drivers (by volume and by pain)

- A first set of 20–30 eval tests defining “good” for those drivers

- A roadmap for which actions AI can take safely in the next 90 days

That’s the difference between “we added a chatbot” and “we built AI in customer service that keeps getting better.”

Support is becoming something users experience inside the product, not a destination. The teams that win in 2026 won’t be the ones that answered the most tickets—they’ll be the ones whose systems learned the fastest.

If you’re building toward an AI-powered contact center, what’s the one support interaction you’d most like to eliminate by fixing the underlying system?