Amazon Connect shows what AI at contact center scale looks like—$1B run rate and 12B minutes optimized. Here’s the playbook to apply in your support org.

Amazon Connect’s AI Playbook for Modern Contact Centers

Amazon Connect hitting a $1 billion annualized revenue run rate isn’t just a fun milestone for AWS—it’s a signal that AI in customer service has crossed from “promising pilot” into operational reality. Even more telling: Amazon reports AI optimized over 12 billion minutes of customer interactions in the last year. That’s not a lab demo. That’s production-scale contact center work.

Most companies still approach AI for contact centers like a feature hunt: buy a chatbot, bolt it onto the IVR, measure containment, call it a day. Amazon Connect’s story suggests a different framing. Treat AI as part of a system: routing, self-service, agent workflows, analytics, and governance all designed to improve customer outcomes and agent experience without turning the contact center into a science project.

This post is part of our “AI in Customer Service & Contact Centers” series, and I’m using Amazon Connect as a case study—not to worship a vendor, but to extract a playbook you can apply whether you’re on AWS or not.

The real disruptor wasn’t AI—it was operations

Answer first: Amazon Connect won early because it removed contact center friction (hardware, long implementations, vendor sprawl), and that operational reset created the runway for AI to actually work.

Amazon’s origin story is a familiar one for enterprise support leaders: too many vendors, too much complexity, too many fees, and a looming infrastructure bill. In 2007, Amazon’s internal customer service team chose to replace three contact center vendors rather than pay a reported $3M up front for a hardware upgrade, on top of ongoing licensing and maintenance. The internal system was piloted in 2007 and fully deployed in 2008.

Here’s the part support and CX leaders should underline: the internal system wasn’t built as “a contact center platform.” It was built to solve business constraints—scale, reliability, cost, and speed—while aligning to a customer-obsessed culture. That’s why it spread internally “like wildfire” across divisions and acquisitions. Reportedly, it generated $60M in estimated annual savings compared to competitive solutions.

What this means for your AI roadmap

If your current environment is a patchwork (telephony here, WFM there, analytics somewhere else, a chatbot living on an island), AI will underperform. Not because the model is weak—because your system can’t feed it the right context, can’t act on its recommendations, and can’t govern it.

Practical move:

- Map your top 10 contact drivers end-to-end (entry channel → authentication → intent → resolution → wrap-up → follow-up).

- Identify where context gets lost (CRM not available, knowledge base out of date, no consistent dispositioning).

- Fix the workflow breaks before expecting AI to “save” the experience.
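To make the mapping exercise concrete, here is a minimal Python sketch. It assumes you can export, for each contact driver, whether each journey stage had the context it needed (a CRM record, a current knowledge article, a consistent disposition). The stage names come from the list above; the `DriverJourney` type, its fields, and the sample data are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Journey stages from the mapping exercise, in order.
STAGES = ["entry_channel", "authentication", "intent", "resolution", "wrap_up", "follow_up"]


@dataclass
class DriverJourney:
    """One contact driver and whether each stage had the context it needed.

    `context` maps a stage name to True if the required context was available
    (hypothetical schema; adapt it to whatever your systems can export).
    """
    driver: str
    monthly_volume: int
    context: dict[str, bool] = field(default_factory=dict)


def context_breaks(journey: DriverJourney) -> list[str]:
    """Return the stages where context was lost, in journey order.

    A stage missing from `context` counts as a break: unknown context is
    usually missing context.
    """
    return [stage for stage in STAGES if not journey.context.get(stage, False)]


def prioritize(journeys: list[DriverJourney]) -> list[tuple[str, int, list[str]]]:
    """Rank drivers by volume, keeping only those with at least one break."""
    ranked = sorted(journeys, key=lambda j: j.monthly_volume, reverse=True)
    result = []
    for j in ranked:
        breaks = context_breaks(j)
        if breaks:
            result.append((j.driver, j.monthly_volume, breaks))
    return result


if __name__ == "__main__":
    all_ok = {s: True for s in STAGES}
    journeys = [
        DriverJourney("order_status", 42_000, dict(all_ok)),
        DriverJourney("billing_dispute", 18_000, {**all_ok, "resolution": False, "wrap_up": False}),
        DriverJourney("password_reset", 25_000, {**all_ok, "authentication": False}),
    ]
    for driver, volume, breaks in prioritize(journeys):
        print(f"{driver} ({volume}/month): fix {', '.join(breaks)}")
```

Sorting by volume is a deliberate simplification; in practice you might weight breaks by cost or customer impact instead.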

From “weekend deployments” to enterprise scale: why speed matters

Answer first: Cloud-native contact centers win because they compress time-to-value, and that speed is what lets you iterate on AI safely and profitably.

When Amazon Connect launched publicly in 2017, the headline wasn’t “AI.” It was the idea that enterprises could replace traditional telephony infrastructure with a cloud-native architecture and get live faster. The AWS article points out that what used to be a year-long buildout could become a weekend project for many organizations.

Speed isn’t a vanity metric in customer service. It directly affects:

- Cost-to-serve: faster iteration means faster removal of repeat contacts.

- Customer satisfaction: you can adjust flows and fix pain points without waiting for quarterly releases.

- Agent productivity: new tooling reaches the floor while it still matters.

And it matters a lot right now. December is peak season for many support orgs—returns, delivery issues, billing changes before year-end, and holiday staffing constraints. A platform that can adapt quickly is not a “nice to have.” It’s risk management.

The COVID test still applies in 2025

Amazon Connect proved itself during the pandemic because remote agent support wasn’t a bolt-on—it was inherent to the architecture. The lesson is durable: resilience comes from designing for volatility.

Ask yourself:

- Can you spin up 50–500 seasonal agents without shipping equipment?

- Can you maintain QA and coaching when supervisors are remote?

- Can you change routing and self-service flows in hours—not weeks?

If any of those are “no,” your AI program will struggle because you’re trying to modernize on top of a brittle operating model.

AI at scale: why “checkbox AI” changed the adoption curve

Answer first: AI adoption in the contact center accelerates when it’s operationally simple—easy to enable, easy to measure, and tied to daily workflows.

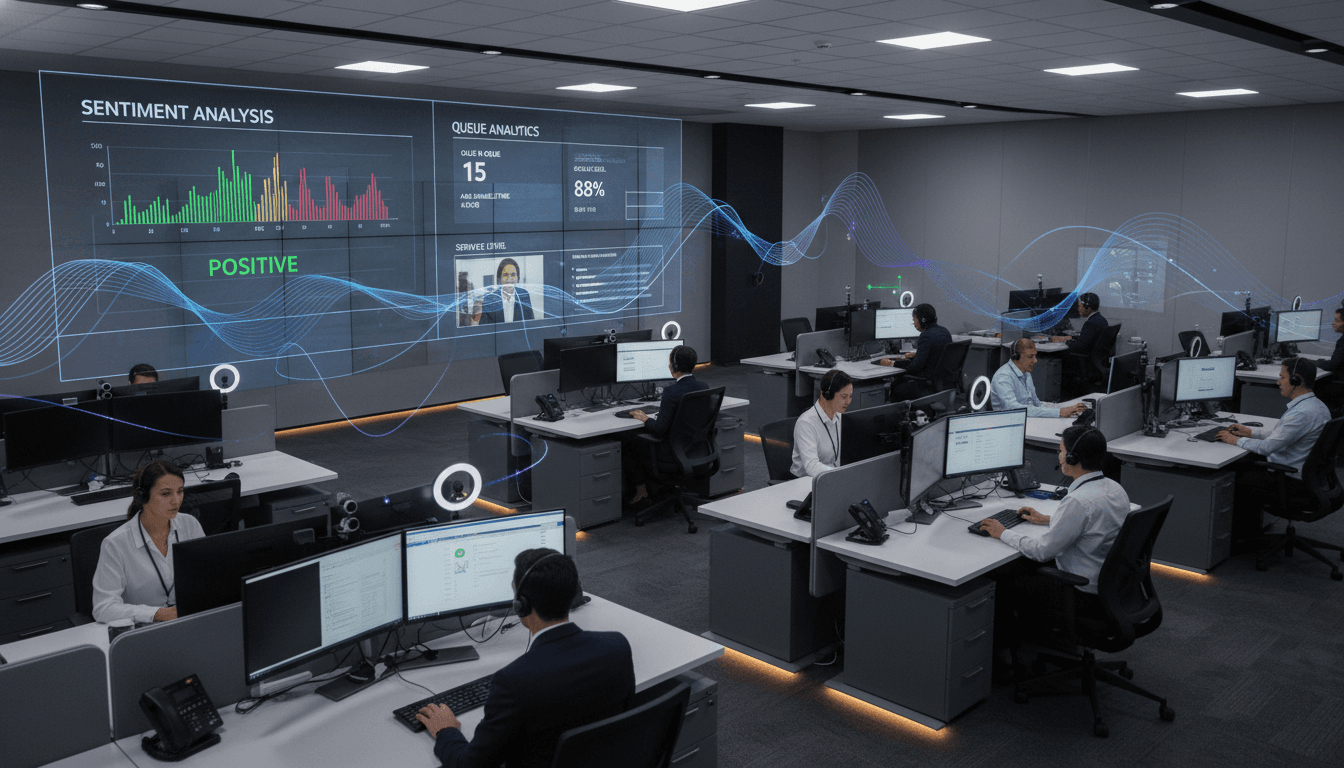

Amazon Connect’s big AI step (starting around 2019) was adding conversational analytics, sentiment analysis, and related capabilities without the usual heavy lift. The AWS post describes enabling these features as closer to selecting a checkbox than to a weeks-long, complex deployment.

That “ease” is more strategic than it sounds. In most contact centers, the constraint isn’t willingness to use AI—it’s bandwidth:

- IT can’t support another six-month integration.

- Ops leaders can’t afford disruption during peak.

- Supervisors won’t adopt tooling that adds admin work.

A practical AI maturity ladder (steal this)

If you want measurable results in AI-powered contact centers, phase your rollout like this:

- Observe (Weeks 1–4):

  - Turn on speech and chat analytics.

  - Build a baseline: top intents, transfer rates, repeat contact, average handle time (AHT), after-call work (ACW), sentiment trends.

- Assist (Weeks 4–10):

  - Deploy agent assist: suggested replies, knowledge recommendations, real-time guidance.

  - Start with 1–2 queues to avoid boiling the ocean.

- Automate (Weeks 8–16):

  - Add self-service for the top 3 intents that are high volume and low emotional complexity (order status, password reset, appointment changes).

  - Focus on resolution quality, not just containment.

- Orchestrate (Quarter 2+):

  - Use AI-driven routing: intent + sentiment + customer value + agent skills.

  - Automate wrap-up and call summaries to cut ACW.

- Proactively engage (Quarter 3+):

  - Use analytics signals to trigger outbound notifications, deflecting avoidable inbound contacts.

This ladder matches what we’re seeing across the market: AI that starts with visibility and assistance builds trust faster than AI that jumps straight to full automation.
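The Orchestrate step’s routing signal (intent + sentiment + customer value + agent skills) can be sketched as a weighted score for each available agent. This is a minimal illustration, not how Amazon Connect routing works internally: the weights, the -1 to 1 sentiment scale, the 0 to 1 value and skill scales, and the `Contact`/`Agent` fields are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Contact:
    intent: str            # e.g. output of an intent classifier
    sentiment: float       # assumed scale: -1.0 (very negative) to 1.0 (very positive)
    customer_value: float  # assumed scale: 0.0 to 1.0, e.g. normalized lifetime value


@dataclass
class Agent:
    name: str
    skills: dict[str, float]  # intent -> proficiency in [0, 1]
    handles_escalations: bool = False


# Illustrative weights; tune them against your own outcomes.
W_SKILL, W_SENTIMENT, W_VALUE = 0.6, 0.25, 0.15


def match_score(contact: Contact, agent: Agent) -> float:
    """Score how well an agent fits a contact; 0.0 means not eligible."""
    skill = agent.skills.get(contact.intent, 0.0)
    if skill == 0.0:
        return 0.0  # never route to an agent with no skill for this intent
    # Distressed customers favor escalation specialists; calm customers
    # favor everyone else, keeping specialists free.
    distress = max(0.0, -contact.sentiment)
    sentiment_fit = distress if agent.handles_escalations else 1.0 - distress
    # High-value customers lean toward the most proficient agents.
    value_fit = contact.customer_value * skill
    return W_SKILL * skill + W_SENTIMENT * sentiment_fit + W_VALUE * value_fit


def route(contact: Contact, agents: list[Agent]) -> Agent | None:
    """Pick the best-scoring eligible agent, or None if nobody qualifies."""
    scored = [(match_score(contact, a), a) for a in agents]
    eligible = [(s, a) for s, a in scored if s > 0.0]
    if not eligible:
        return None
    return max(eligible, key=lambda pair: pair[0])[1]


if __name__ == "__main__":
    angry = Contact(intent="billing_dispute", sentiment=-0.8, customer_value=0.9)
    agents = [
        Agent("ana", {"billing_dispute": 0.7}),
        Agent("ben", {"billing_dispute": 0.6}, handles_escalations=True),
        Agent("cy", {"order_status": 0.9}),
    ]
    print(route(angry, agents).name)  # prints "ben"
```

In the example, the escalation specialist wins over a slightly more skilled generalist because the customer is upset; a linear score like this is easy to explain to supervisors, which matters more early on than squeezing out the last bit of routing accuracy.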

GenAI in the contact center: where value shows up first

Answer first: The fastest ROI from generative AI usually comes from agent workflows—summaries, wrap-up, knowledge drafting—because it reduces time spent on admin without risking customer trust.

The AWS article describes a pivot toward LLM capabilities such as:

- Automated agent wrap-up

- Call summarization

- LLM-based self-service experiences

If you’re looking for a sane place to start with generative AI for customer support, I’ll take a stance: start with post-contact automation before you put LLMs directly in front of customers.

Why?

- Wrap-up is expensive (ACW adds up fast).

- Wrap-up is inconsistent (agents choose different dispositions and notes).

- Wrap-up errors damage reporting, coaching, and root-cause work.

What “good” looks like (specifics to copy)

A solid GenAI wrap-up flow should produce:

- A 3–5 bullet summary of what happened

- A clear outcome (resolved, pending, escalated)

- Customer intent and reason codes aligned to your taxonomy

- Next best action (refund issued, replacement shipped, follow-up scheduled)

- A compliance-safe note style (no sensitive data echoed back)

Then require a human review step until quality is proven in your environment.
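One way to enforce that checklist is to validate every generated wrap-up before it is saved, and send anything that fails (or anything at all, until quality is proven) to human review. This is a hedged sketch: the `WrapUp` fields, the `REASON_CODES` taxonomy, and the sensitive-data regexes are hypothetical, and simple patterns like these will miss things and flag false positives. They are not a substitute for a proper PII-detection service.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    RESOLVED = "resolved"
    PENDING = "pending"
    ESCALATED = "escalated"


# Hypothetical reason-code taxonomy; replace with your own.
REASON_CODES = {"order_status", "refund_request", "password_reset", "billing_dispute"}

# Rough patterns for data that must not be echoed into notes (illustrative only).
SENSITIVE_PATTERNS = {
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}


@dataclass
class WrapUp:
    summary: list[str]      # 3-5 bullets describing what happened
    outcome: Outcome
    reason_codes: list[str]
    next_best_action: str


def validate(wrap: WrapUp) -> list[str]:
    """Return a list of problems; an empty list means the draft passes."""
    problems = []
    if not 3 <= len(wrap.summary) <= 5:
        problems.append(f"summary has {len(wrap.summary)} bullets, expected 3-5")
    unknown = [c for c in wrap.reason_codes if c not in REASON_CODES]
    if not wrap.reason_codes or unknown:
        problems.append(f"reason codes missing or outside taxonomy: {unknown}")
    if not wrap.next_best_action.strip():
        problems.append("next best action is empty")
    text = " ".join(wrap.summary + [wrap.next_best_action])
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(text):
            problems.append(f"possible {label} echoed in note")
    return problems


def needs_human_review(wrap: WrapUp, quality_proven: bool = False) -> bool:
    """Until quality is proven, every draft is reviewed; after that, only failures."""
    return not quality_proven or bool(validate(wrap))
```

Keeping `validate` separate from `needs_human_review` lets you log validation failures as a quality metric even after you stop reviewing every draft.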

Snippet-worthy truth: Generative AI doesn’t replace process discipline—it punishes the lack of it.

If your knowledge base is outdated, if policies aren’t consistent, or if exceptions aren’t documented, the model will amplify confusion at scale.

Safety, ethics, and “agentic AI”: the next hard problem

Answer first: The next competitive edge in AI for contact centers will come from governance and guardrails, not flashier demos.

AWS frames what’s ahead as two inflection points:

- Safe and ethical agentic AI at enterprise scale

- Moving from reactive to proactive customer engagement

Agentic AI (systems that can take actions, not just generate text) raises the stakes. In contact centers, “take action” might mean:

- issuing refunds or credits

- changing account settings

- canceling services

- scheduling technicians

- updating identity or address details

If you’re considering this direction, you need non-negotiables:

- Permissioning: which actions can AI recommend vs execute?

- Audit trails: what data did it use, and what did it change?

- Escalation rules: when does the system hand off to a human?

- Customer transparency: how will customers know what’s automated?
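Three of those non-negotiables (permissioning, audit trails, escalation rules) can be expressed as a small policy gate that sits between the AI and your back-end systems. This is a sketch under assumptions: the action names, the `Mode` levels, the 0.85 confidence floor, and the in-memory `AUDIT_LOG` are hypothetical. A production system would persist the audit trail in an append-only store and enforce permissions server-side. Customer transparency is a UX and policy decision that code like this cannot settle on its own.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Mode(Enum):
    EXECUTE = "execute"      # AI may perform the action itself
    RECOMMEND = "recommend"  # AI may only suggest; a human performs it
    FORBIDDEN = "forbidden"  # AI may not touch it


# Permissioning: which actions the AI may recommend vs execute (hypothetical policy).
POLICY = {
    "schedule_technician": Mode.EXECUTE,
    "issue_credit": Mode.RECOMMEND,
    "cancel_service": Mode.RECOMMEND,
    "update_address": Mode.RECOMMEND,
    "change_identity": Mode.FORBIDDEN,
}
CONFIDENCE_FLOOR = 0.85  # assumed value: below this, always hand off to a human


@dataclass
class Decision:
    action: str
    allowed_to_execute: bool
    handoff_to_human: bool
    reason: str
    inputs_used: list[str]
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


AUDIT_LOG: list[str] = []  # stand-in for an append-only audit store


def gate(action: str, confidence: float, inputs_used: list[str],
         customer_requested_human: bool = False) -> Decision:
    """Decide whether the AI may execute an action, and record why."""
    mode = POLICY.get(action, Mode.FORBIDDEN)  # unknown actions are forbidden by default
    if customer_requested_human:
        d = Decision(action, False, True, "customer asked for a human", inputs_used)
    elif mode is Mode.FORBIDDEN:
        d = Decision(action, False, True, "action not permitted for AI", inputs_used)
    elif confidence < CONFIDENCE_FLOOR:
        d = Decision(action, False, True, f"confidence {confidence:.2f} below floor", inputs_used)
    elif mode is Mode.RECOMMEND:
        d = Decision(action, False, True, "recommend-only: human must approve", inputs_used)
    else:
        d = Decision(action, True, False, "within policy", inputs_used)
    # Audit trail: which data the AI used and what was decided.
    AUDIT_LOG.append(json.dumps(asdict(d)))
    return d
```

The ordering of checks is the design choice worth copying: a customer’s request for a human and a forbidden action both short-circuit before model confidence is even considered.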

A simple governance checklist for 2026 planning

Use this in your Q1 planning cycles:

- Define “high-risk” intents (billing disputes, fraud, account closure, medical/financial topics).

- Require human approval for high-risk actions until error rates are proven low.

- Build red-team scenarios: prompt injection, policy loopholes, and pressure from angry customers.

- Track model quality with operational metrics (recontact rate, escalations, compliance flags), not just accuracy scores.
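Tracking model quality with operational metrics can start as a simple comparison between AI-handled and human-handled contacts. The sketch below assumes a contact log with customer ID, start time, an AI-handled flag, and escalation and compliance flags; the `ContactRecord` type is hypothetical and the seven-day recontact window is an assumption, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ContactRecord:
    customer_id: str
    started_at: datetime
    ai_handled: bool
    escalated: bool
    compliance_flag: bool


RECONTACT_WINDOW = timedelta(days=7)  # assumed window; choose one that fits your business


def recontacted(records: list[ContactRecord]) -> set[int]:
    """Indices of contacts followed by another contact from the same customer
    within the window. The recontact is charged to the earlier contact,
    since that is the one that apparently failed to resolve the issue."""
    order = sorted(range(len(records)),
                   key=lambda i: (records[i].customer_id, records[i].started_at))
    hits = set()
    for prev, nxt in zip(order, order[1:]):
        a, b = records[prev], records[nxt]
        if a.customer_id == b.customer_id and b.started_at - a.started_at <= RECONTACT_WINDOW:
            hits.add(prev)
    return hits


def quality_report(records: list[ContactRecord]) -> dict[str, dict[str, float]]:
    """Recontact, escalation, and compliance-flag rates, split by AI vs human handling."""
    recontact_idx = recontacted(records)
    report = {}
    for label, ai in (("ai", True), ("human", False)):
        idx = [i for i, r in enumerate(records) if r.ai_handled == ai]
        if not idx:
            continue  # no contacts in this cohort yet
        n = len(idx)
        report[label] = {
            "contacts": n,
            "recontact_rate": sum(i in recontact_idx for i in idx) / n,
            "escalation_rate": sum(records[i].escalated for i in idx) / n,
            "compliance_flag_rate": sum(records[i].compliance_flag for i in idx) / n,
        }
    return report
```

Comparing the two cohorts side by side keeps the conversation on outcomes; a model with a high accuracy score but a worse recontact rate than your agents is not ready for more autonomy.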

What to copy from Amazon Connect (even if you never buy it)

Answer first: The Amazon Connect story reinforces five principles that predict success with AI in customer service.

- Start with a unified foundation. AI needs consistent data, routing, and workflows.

- Optimize for speed of change. The contact center is a living system; AI makes that more true, not less.

- Make adoption easy for ops teams. If it takes months to enable, it won’t reach scale.

- Prioritize agent experience. AI that removes ACW and improves guidance earns trust.

- Treat safety as a product requirement. The future is agentic; governance starts now.

If you’re building a 2026 roadmap, you don’t need 30 AI features. You need 3–5 capabilities that connect to outcomes: faster resolution, lower repeat contact, higher customer satisfaction, and a job that agents can actually sustain.

The question I’d leave you with: when your contact center adds more automation next year, will it feel like a smoother experience—or will it feel like a maze that just happens to talk faster?