Terraform support for DataSync Enhanced mode makes S3-to-S3 transfers faster, more repeatable, and ready for AI-driven operations. Standardize data moves at scale.

Terraform + DataSync Enhanced Mode for Faster S3 Moves

Most teams don’t lose time on “big” cloud projects—they lose it on the repeatable stuff that never stays repeatable. Data transfers are a perfect example: copying objects between S3 buckets sounds simple until you’re doing it across accounts, environments, Regions, or thousands of prefixes, under time pressure, with someone asking for progress updates every hour.

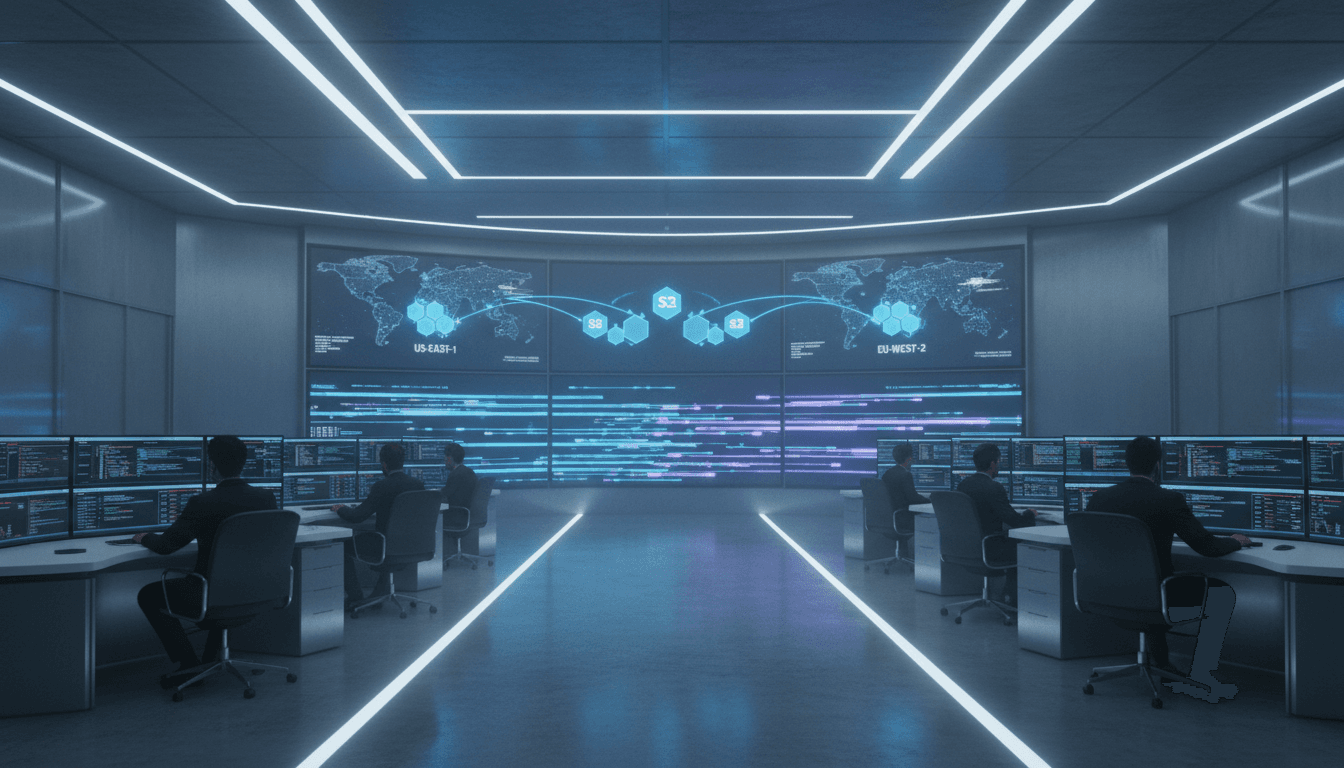

AWS just removed a big chunk of that friction: the AWS DataSync Terraform module now supports Enhanced mode for transfers between S3 locations. Practically, that means you can define high-performance S3-to-S3 transfers as infrastructure-as-code and roll them out consistently—without hand-configuring tasks in consoles.

In our AI in Cloud Computing & Data Centers series, we keep coming back to one theme: AI can’t optimize what your team can’t reliably describe and reproduce. Terraform support for DataSync Enhanced mode is one of those “quiet” releases that makes AI-ready operations more realistic, because it turns data movement into a deployable, versioned artifact.

What Terraform support for DataSync Enhanced mode actually changes

Answer first: It turns high-throughput S3-to-S3 transfers into a repeatable, automated pattern your platform team can standardize and scale.

DataSync has long been a go-to service for secure, accelerated data movement. The new part here is the Terraform module support for Enhanced mode when the source and destination are both S3 locations. Enhanced mode is designed for performance and scale, using parallelism under the hood and adding richer transfer metrics.

Why that matters operationally:

- Standardization: You can templatize transfers (tags, logging, schedules, naming, IAM boundaries) and apply them across dozens of teams.

- Speed of provisioning: New environment? New account? New Region? The transfer stack becomes a Terraform apply, not a checklist.

- Auditability: Reviews become code reviews. Approvals become pull requests. Drift becomes visible.
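To make "the transfer stack becomes a Terraform apply" concrete, here is a minimal Python sketch that renders one `aws_datasync_task` resource per environment as Terraform JSON (a `.tf.json` file, which Terraform loads alongside `.tf` files). It assumes your AWS provider version exposes a `task_mode` argument for selecting Enhanced mode, so check the provider docs for the version you pin. The environment names, account IDs, location ARNs, and tag keys are placeholders, and the sketch writes the raw resource rather than using the DataSync module's own inputs.

```python
import json

# Hypothetical per-environment inputs. Replace the placeholder ARNs with real
# DataSync S3 location ARNs (or Terraform references to them).
ENVIRONMENTS = {
    "dev": {
        "source": "arn:aws:datasync:us-east-1:111111111111:location/loc-src-dev",
        "destination": "arn:aws:datasync:us-east-1:111111111111:location/loc-dst-dev",
    },
    "prod": {
        "source": "arn:aws:datasync:us-east-1:222222222222:location/loc-src-prod",
        "destination": "arn:aws:datasync:us-east-1:222222222222:location/loc-dst-prod",
    },
}

COMMON_TAGS = {"managed-by": "terraform", "owner": "data-platform"}  # assumed tag keys


def render_tasks(envs: dict) -> dict:
    """Build a Terraform JSON document with one aws_datasync_task per environment."""
    tasks = {}
    for env, locs in sorted(envs.items()):
        tasks[f"s3_copy_{env}"] = {
            "name": f"s3-copy-{env}",
            "source_location_arn": locs["source"],
            "destination_location_arn": locs["destination"],
            # Assumes a provider version that supports task_mode for Enhanced mode.
            "task_mode": "ENHANCED",
            "tags": {**COMMON_TAGS, "environment": env},
        }
    return {"resource": {"aws_datasync_task": tasks}}


if __name__ == "__main__":
    # Write the output to e.g. datasync_tasks.tf.json, then run `terraform plan`.
    print(json.dumps(render_tasks(ENVIRONMENTS), indent=2))
```

Generating the file is optional; the same fan-out works with `for_each` in plain HCL. Either way, adding an environment becomes a small data change reviewed in a pull request.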

This is the connective tissue between “we want AI-driven workload management” and “we can’t even keep our migration scripts consistent across teams.” Automated infrastructure is the prerequisite.

Enhanced mode: the practical benefits you’ll notice

Answer first: Enhanced mode focuses on throughput, scale, and observability—three things that tend to break first during large data moves.

From the release details, Enhanced mode provides:

- Parallel processing for higher performance and better scalability

- Removal of file count limitations, which matters when you’re moving massive object sets

- Detailed transfer metrics for monitoring and management

If you’ve ever had a transfer job that looked fine in test but fell apart in production because the object count exploded, you already understand why “file count limitations” is not a minor footnote.

And those detailed metrics are more than dashboards. They’re the raw material for automation: alarms, error budgets, anomaly detection, and eventually AI-assisted decisions (more on that below).
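As one example of turning metrics into automation, here is a hedged Python sketch of an error-budget calculation over task executions. The `RunOutcome` shape is hypothetical: you would populate it from whatever execution status your monitoring pipeline collects. The SLO value is a policy choice, not a DataSync setting.

```python
from dataclasses import dataclass


@dataclass
class RunOutcome:
    """One task execution result (hypothetical shape; map it from your metrics)."""
    task: str
    succeeded: bool


def error_budget_remaining(runs: list[RunOutcome], slo: float) -> float:
    """Fraction of the failure budget left for a success-rate SLO.

    Example: slo=0.95 over 100 runs allows 5 failures; 2 failures leave
    3/5 = 0.6 of the budget. Negative values mean the budget is overspent.
    """
    if not runs:
        return 1.0  # nothing ran, nothing spent
    allowed_failures = (1.0 - slo) * len(runs)
    failures = sum(1 for r in runs if not r.succeeded)
    if allowed_failures <= 0:
        # A 100% SLO has no budget: any failure exhausts it outright.
        return 1.0 if failures == 0 else float("-inf")
    return (allowed_failures - failures) / allowed_failures


if __name__ == "__main__":
    history = [RunOutcome("promote-curated", True)] * 98 + [RunOutcome("promote-curated", False)] * 2
    remaining = error_budget_remaining(history, slo=0.95)
    if remaining < 0.25:  # hypothetical paging threshold
        print("page the owning team")
    else:
        print(f"budget remaining: {remaining:.0%}")
```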

Why data transfer automation is an AI-ready infrastructure pattern

Answer first: AI operations thrive on predictable workflows and measurable outcomes—Terraform + DataSync Enhanced mode provides both.

A lot of “AI in cloud operations” talk gets stuck at the model layer. In practice, the quickest wins show up where the system is already instrumented and codified:

- Codified: Terraform defines what “a transfer” is.

- Instrumented: Enhanced mode produces detailed metrics.

- Repeatable: You can run the same pattern in dev/stage/prod and compare performance.

Once you have that, intelligent resource allocation and workload management stop being vague goals and start becoming automation opportunities.

Bridge point #1: Infrastructure automation enables AI optimization

When transfers are defined in Terraform, you can create guardrails that AI (or simpler rule engines) can work within:

- Enforce naming conventions and tagging for chargeback/showback

- Apply consistent schedules (batch windows, blackout windows)

- Standardize alert thresholds and notification routing

- Encode security posture (KMS expectations, IAM scopes)

AI can help decide when to run transfers and how to prioritize them, but it can’t help much if every team builds a one-off pipeline.
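Schedules are among the easiest guardrails to encode. Here is a minimal sketch, assuming hypothetical UTC blackout windows defined by the platform team: it rejects a proposed start time that falls inside any window, including windows that wrap past midnight. Translating a DataSync `schedule_expression` into concrete start times is out of scope here.

```python
from datetime import datetime, time, timezone

# Hypothetical platform-wide blackout windows in UTC as (start, end) wall-clock times.
# A window whose end is earlier than its start wraps past midnight.
BLACKOUT_WINDOWS_UTC = [
    (time(13, 0), time(17, 0)),   # example: peak business hours
    (time(23, 30), time(0, 30)),  # example: nightly batch close
]


def in_window(t: time, start: time, end: time) -> bool:
    """True if t falls in [start, end), handling windows that wrap midnight."""
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def schedule_allowed(run_at: datetime, windows=BLACKOUT_WINDOWS_UTC) -> bool:
    """Reject a proposed transfer start time that lands in any blackout window."""
    if run_at.tzinfo is None:
        raise ValueError("run_at must be timezone-aware")
    t = run_at.astimezone(timezone.utc).time()
    return not any(in_window(t, start, end) for start, end in windows)
```

A check like this can run in CI against the schedules a team proposes, so a rule engine (or, later, a model) only ever chooses among start times the platform already allows.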

Bridge point #2: Terraform integration supports orchestration

S3-to-S3 transfer jobs don’t live in isolation. They’re usually one step in a workflow:

- Landing raw data → curating → feature store builds → model training

- Migrating app data → validating → swapping endpoints

- Replicating datasets → scanning → indexing for retrieval

Terraform is the lingua franca for orchestrating that surrounding infrastructure: buckets, IAM, KMS, VPC endpoints, event rules, notifications. Adding Enhanced mode support to the DataSync module means the transfer step can join the same lifecycle and release discipline.
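Orchestrators such as Step Functions or Airflow handle this in production, but the underlying idea is a dependency graph where the transfer is one node. Here is a minimal sketch using Python's standard-library `graphlib` (3.9+); the step names are hypothetical and mirror the first workflow above.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
# "datasync_promote" stands in for the Enhanced mode S3-to-S3 transfer.
PIPELINE = {
    "land_raw": set(),
    "curate": {"land_raw"},
    "datasync_promote": {"curate"},
    "build_features": {"datasync_promote"},
    "train_model": {"build_features"},
    "notify_owners": {"datasync_promote"},
}


def execution_order(pipeline: dict[str, set[str]]) -> list[str]:
    """Return one valid run order; raises graphlib.CycleError if steps form a cycle."""
    return list(TopologicalSorter(pipeline).static_order())


if __name__ == "__main__":
    for step in execution_order(PIPELINE):
        print(step)
```

Because the transfer is just another node, downstream steps such as feature builds wait on it explicitly instead of on someone watching a console.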

Bridge point #3: Enhanced mode metrics are fuel for dynamic scaling

Enhanced mode’s detailed metrics are what you need for automated tuning. Even before you introduce AI, you can build strong feedback loops:

- Detect sustained throttling patterns and adjust scheduling

- Trigger retries or remediation workflows when error rates spike

- Identify chronic “hot prefixes” and refactor storage layout

Then, if you do introduce AI-driven operations, the model has something to learn from: consistent telemetry across many runs.
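A simple rule engine is enough to start that feedback loop. This sketch assumes a hypothetical per-run summary (`objects_transferred`, `objects_failed`, `throttle_events`); the actual Enhanced mode metric names and units will differ, so map them in yourself. It recommends remediation when the latest error rate crosses a threshold, and a schedule shift only when throttling persists across consecutive runs.

```python
from dataclasses import dataclass


@dataclass
class RunMetrics:
    """Per-execution summary (hypothetical fields; map them from your telemetry)."""
    objects_transferred: int
    objects_failed: int
    throttle_events: int


def recommend(history: list[RunMetrics], error_rate_limit: float = 0.01,
              throttle_runs: int = 3) -> list[str]:
    """Rule-based actions from recent runs, ordered oldest to newest."""
    if not history:
        return []
    actions = []
    latest = history[-1]
    attempted = latest.objects_transferred + latest.objects_failed
    if attempted and latest.objects_failed / attempted > error_rate_limit:
        actions.append("trigger-remediation")
    recent = history[-throttle_runs:]
    # Only act on sustained throttling: every one of the last N runs throttled.
    if len(recent) == throttle_runs and all(r.throttle_events > 0 for r in recent):
        actions.append("shift-schedule")
    return actions
```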

Where this fits in modern data centers (and why December timing matters)

Answer first: Year-end is when teams feel the pain of bulk data movement—budget resets, migrations, compliance deadlines, and new AI initiatives.

December is peak “everything needs to be moved by Q1” season. I see a recurring pattern:

- New fiscal year planning: data platform modernization gets approved

- AI pilots graduating: teams need larger, cleaner datasets in the right accounts and Regions

- Compliance reviews: retention, classification, and access policies force bucket re-orgs

- Post-incident fixes: people realize their backup/replication story isn’t as clean as they thought

In that context, Terraform support is not just about developer convenience. It’s how you scale operationally without burning out the engineers who “know the console steps.”

Practical use cases that benefit immediately

Answer first: If you move large S3 datasets repeatedly across environments, Enhanced mode + Terraform is a direct productivity and reliability win.

1) AI/ML data pipeline promotion (dev → prod)

Many AI teams start training on a messy dev dataset, then need to promote curated data to prod buckets with clear lineage.

A Terraform-defined DataSync task lets you:

- Version the transfer config alongside your data pipeline code

- Recreate the same transfer pattern per environment

- Add consistent monitoring and tagging for governance

This is especially useful when model training happens in one account while curated datasets must live in another.

2) Cross-team dataset distribution at scale

If your org has a central data platform team, distributing datasets is often a constant request stream.

Instead of bespoke scripts, you can offer an internal module wrapper:

- Input: source bucket/prefix, destination bucket/prefix, schedule, ownership tags

- Output: a standardized DataSync task in Enhanced mode, plus alarms and logs

The result is a self-service pattern that still respects platform guardrails.
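Here is a minimal Python sketch of that wrapper's contract, assuming a hypothetical `TransferRequest` interface and platform-owned defaults. Teams supply only buckets, prefixes, a schedule, and ownership tags; Enhanced mode, alarms, and logging are fixed by the platform. The alarm names and required tag keys are placeholders, and in practice this logic would more likely live in a Terraform wrapper module's variables and `validation` blocks than in Python.

```python
from dataclasses import dataclass, field

# Hypothetical platform-wide requirements and defaults.
REQUIRED_TAGS = {"team", "cost-center", "data-domain"}
STANDARD_ALARMS = ["execution-failed", "duration-over-baseline"]


@dataclass
class TransferRequest:
    """The only inputs a consuming team supplies (hypothetical wrapper interface)."""
    source_bucket: str
    source_prefix: str
    destination_bucket: str
    destination_prefix: str
    schedule_expression: str
    tags: dict = field(default_factory=dict)


def _s3_uri(bucket: str, prefix: str) -> str:
    """Join bucket and prefix into an s3:// URI with exactly one trailing slash."""
    prefix = prefix.strip("/")
    return f"s3://{bucket}/{prefix}/" if prefix else f"s3://{bucket}/"


def build_task_spec(req: TransferRequest) -> dict:
    """Expand a team request into the platform's standardized task spec."""
    missing = REQUIRED_TAGS - set(req.tags)
    if missing:
        raise ValueError(f"missing ownership tags: {sorted(missing)}")
    return {
        "task_mode": "ENHANCED",  # fixed by the platform, not team-configurable
        "source": _s3_uri(req.source_bucket, req.source_prefix),
        "destination": _s3_uri(req.destination_bucket, req.destination_prefix),
        "schedule_expression": req.schedule_expression,
        "tags": {**req.tags, "managed-by": "data-platform"},
        "alarms": list(STANDARD_ALARMS),
        "logging_enabled": True,
    }
```

The design choice is what the interface leaves out: because teams cannot set the task mode, alarms, or logging, every task built through the wrapper looks the same to monitoring.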

3) Migration waves with repeatable runbooks

Migrations rarely happen once. They happen in waves: app A, then app B, then app C.

Terraform gives you:

- A consistent “transfer stack” you can stamp out per wave

- Change history for what was moved, how, and when

- A path to automate validation steps around the transfer

When someone asks “what changed between wave 2 and wave 3?”, you can answer with diffs.
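`git diff` and `terraform plan` already show this for the code itself. For a readable change log between wave definitions, a small structural diff helps. This sketch assumes each wave is described by a nested dictionary with hypothetical keys.

```python
def diff_configs(old: dict, new: dict, path: str = "") -> list[str]:
    """List added (+), removed (-), and changed (~) keys between two nested dicts."""
    changes = []
    for key in sorted(old.keys() | new.keys()):
        where = f"{path}.{key}" if path else key
        if key not in new:
            changes.append(f"- {where}")
        elif key not in old:
            changes.append(f"+ {where} = {new[key]!r}")
        elif isinstance(old[key], dict) and isinstance(new[key], dict):
            # Recurse into nested blocks such as tags.
            changes.extend(diff_configs(old[key], new[key], where))
        elif old[key] != new[key]:
            changes.append(f"~ {where}: {old[key]!r} -> {new[key]!r}")
    return changes


if __name__ == "__main__":
    wave2 = {"task_mode": "ENHANCED", "schedule": "cron(0 2 * * ? *)", "tags": {"wave": "2"}}
    wave3 = {"task_mode": "ENHANCED", "schedule": "cron(0 4 * * ? *)", "tags": {"wave": "3"}}
    print("\n".join(diff_configs(wave2, wave3)))
```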

How to implement it without creating a new mess

Answer first: Treat DataSync tasks like productized infrastructure: opinionated defaults, strong guardrails, and measurable SLOs.

Terraform makes it easy to create 50 transfer tasks. That’s not always a good thing. The goal is fewer patterns, reused more often.

Establish “golden defaults” for transfer tasks

Build an internal standard that includes:

- Ownership tags (team, cost center, data domain)

- Alarm baselines (failure counts, duration thresholds)

- Logging and notification integration

- Naming conventions that encode source/destination intent

If you’re serious about AI-driven resource optimization, these tags and metrics become your training data.
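A naming convention only pays off as training data if it can be parsed back into fields for grouping metrics. Here is a minimal sketch, assuming a hypothetical `<domain>-<source>-to-<destination>-<purpose>` pattern. Components are reduced to lowercase letters and digits so the pattern stays unambiguous (which is why `us-east-1` becomes `useast1`). This is an internal convention, not a DataSync naming rule.

```python
import re

# Hypothetical convention: <domain>-<source>-to-<destination>-<purpose>
NAME_RE = re.compile(
    r"^(?P<domain>[a-z0-9]+)-(?P<src>[a-z0-9]+)-to-(?P<dst>[a-z0-9]+)-(?P<purpose>[a-z0-9]+)$"
)


def _slug(value: str) -> str:
    """Lowercase and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", value.lower())


def task_name(domain: str, src: str, dst: str, purpose: str) -> str:
    """Encode source/destination intent, e.g. 'sales-dev-to-prod-promote'."""
    parts = [_slug(p) for p in (domain, src, dst, purpose)]
    if not all(parts):
        raise ValueError("every name component must contain letters or digits")
    return f"{parts[0]}-{parts[1]}-to-{parts[2]}-{parts[3]}"


def parse_task_name(name: str) -> dict:
    """Recover the intent fields so metrics can be grouped by domain or route."""
    match = NAME_RE.match(name)
    if match is None:
        raise ValueError(f"name does not follow the convention: {name!r}")
    return match.groupdict()
```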

Put policy around what can be provisioned

Use your normal controls (code review, CI checks, policy-as-code) to prevent risky patterns:

- Block transfers into buckets without required encryption

- Require explicit prefixes (avoid accidental whole-bucket syncs)

- Require an explicit schedule, so large runs can’t drift into high-cost windows
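Teams often write rules like these in OPA/Conftest or HashiCorp Sentinel against the JSON from `terraform show -json`. The Python sketch below expresses the same three checks over a simplified, hypothetical task dictionary; `encrypted_buckets` stands in for whatever lookup tells you which buckets meet your encryption requirement.

```python
def policy_violations(task: dict, encrypted_buckets: set[str]) -> list[str]:
    """Return human-readable violations; an empty list means the task passes."""
    violations = []
    # Block transfers into buckets that don't meet the encryption requirement.
    if task.get("destination_bucket") not in encrypted_buckets:
        violations.append("destination bucket lacks required encryption")
    # Require explicit prefixes so nobody syncs a whole bucket by accident.
    for key in ("source_prefix", "destination_prefix"):
        if not (task.get(key) or "").strip("/"):
            violations.append(f"{key} must be an explicit, non-root prefix")
    # Require a declared schedule instead of ad hoc runs.
    if not task.get("schedule_expression"):
        violations.append("schedule_expression is required")
    return violations
```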

Make transfer health measurable

Don’t just ask, “Did it finish?” Ask measurable questions:

- What’s our median transfer duration per TB for this dataset?

- How many retries per week are acceptable?

- Which datasets are consistently slow—and why?

Once you track those, you can automate decisions like prioritization and scheduling.
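The first two questions reduce to simple aggregations over run history. Here is a sketch, assuming hypothetical run records with `dataset`, `bytes`, `duration_min`, `retries`, and `day` fields exported from your monitoring; it uses decimal terabytes (10^12 bytes).

```python
from collections import defaultdict
from datetime import date
from statistics import median


def median_minutes_per_tb(runs: list[dict]) -> dict[str, float]:
    """Median of (duration / TB moved) per dataset, skipping runs that moved nothing."""
    ratios = defaultdict(list)
    for r in runs:
        if r["bytes"] > 0:
            tb = r["bytes"] / 1e12  # decimal terabytes
            ratios[r["dataset"]].append(r["duration_min"] / tb)
    return {dataset: median(values) for dataset, values in ratios.items()}


def retries_per_iso_week(runs: list[dict]) -> dict[tuple[int, int], int]:
    """Total retries grouped by (ISO year, ISO week) of the run date."""
    totals = defaultdict(int)
    for r in runs:
        year, week, _ = r["day"].isocalendar()
        totals[(year, week)] += r["retries"]
    return dict(totals)
```

Comparing each dataset's median against its own history is usually a better "consistently slow" signal than a single global threshold, since object sizes and counts vary widely between datasets.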

Common questions teams ask before adopting Enhanced mode

Answer first: Most concerns are about cost, governance, and operational noise—not about raw transfer speed.

“Will this increase our cloud bill?”

Higher throughput can shift costs indirectly (more data moved during peak windows, more frequent runs because they’re easier to launch). The fix isn’t avoiding the feature; it’s managing it:

- Attach budget alerts and tagging discipline

- Schedule large runs in predictable windows

- Build a simple approval path for unusually large transfers
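An approval path can start as a single rule. This sketch flags a planned run for review when it exceeds a hard cap or a multiple of the dataset’s median historical size. Both thresholds (3x and 50 TB) are illustrative defaults, not recommendations, and the byte counts would come from your own run history.

```python
from statistics import median


def needs_approval(planned_bytes: int, past_bytes: list[int],
                   multiplier: float = 3.0, hard_cap_bytes: int = 50 * 10**12) -> bool:
    """Flag a run for human approval if it is far above its own history or a hard cap."""
    if planned_bytes > hard_cap_bytes:
        return True
    if not past_bytes:
        return True  # no baseline yet: route first runs through approval
    return planned_bytes > multiplier * median(past_bytes)
```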

“How do we keep 10 teams from creating 10 different patterns?”

Create a thin internal wrapper module with your defaults and guardrails, and let teams configure only what they must (source/destination, schedule, owners).

“Does this help with AI ops, or is that marketing?”

It helps because it creates structured, versioned, observable transfer workloads. That’s exactly what AI-assisted operations need to make meaningful recommendations.

If your data movement isn’t defined as code and measured consistently, your AI ops program will end up optimizing spreadsheets.

Next steps: turn data movement into an operational capability

Terraform support for AWS DataSync Enhanced mode is a strong signal of where cloud operations are headed: repeatable building blocks that scale across teams, with enough observability to automate intelligently.

If you’re running AI workloads in the cloud—or planning to in early 2026—treat your data transfers as first-class infrastructure. Version them. Monitor them. Make them self-service with guardrails. That’s how you keep training pipelines, feature stores, and analytics platforms fed without creating a new operational bottleneck.

If you were to standardize just one workflow in your environment before your next AI initiative ramps up, would it be S3 dataset promotion, migration waves, or cross-account distribution?