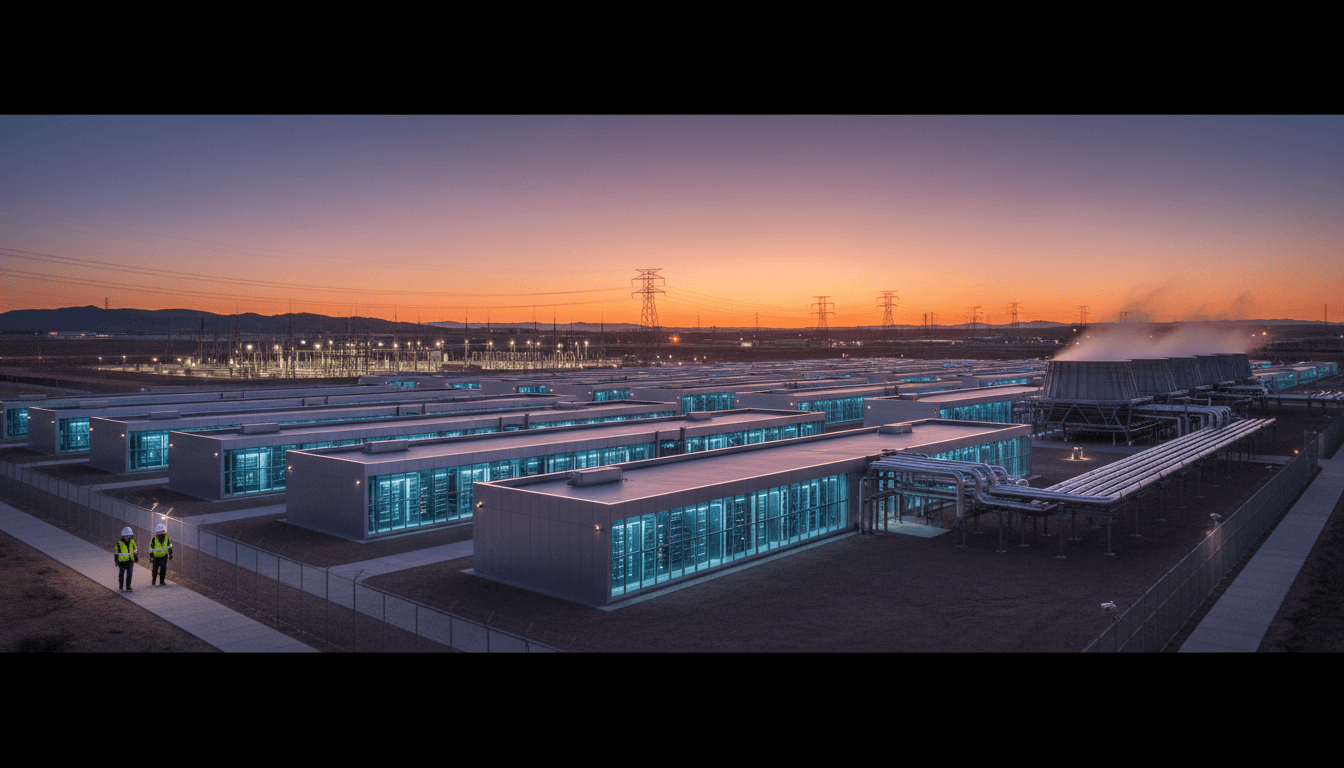

Five new Stargate AI datacenter sites could speed up U.S. digital services. Here’s what it changes for latency, cost, reliability, and scale.

Stargate AI Datacenters: What 5 New Sites Mean for U.S.

Most people hear “five new AI datacenter sites” and think it’s a story for hyperscalers and hardware teams. It’s not. It’s a story about how fast AI features will ship inside U.S. digital services in 2026, and who’s going to be able to afford reliability when demand spikes.

The RSS source points to an OpenAI update about OpenAI, Oracle, and SoftBank expanding Stargate with five new AI datacenter sites, but the page itself wasn’t accessible (403). So rather than pretend we have details we don’t, this post focuses on what an expansion like that predictably changes for cloud computing, AI infrastructure, and the SaaS and digital service teams building on top of it—especially in the U.S.

If you’re responsible for product, platform, or growth, here’s the practical read: more AI datacenter capacity changes latency, availability, unit economics, and compliance options. Those four things decide whether your “AI roadmap” becomes a real feature set—or a backlog of half-finished pilots.

Why five new AI datacenter sites matter (even if you don’t run infrastructure)

Answer first: Adding AI datacenter sites increases available GPU capacity and geographic distribution, which typically improves throughput, reduces latency, and stabilizes pricing for AI workloads.

AI products don’t fail because the model is “not smart enough.” They fail because they’re slow, too expensive at scale, or unreliable during peak hours. More sites generally mean:

- Lower latency for real-time AI (voice agents, live chat copilots, fraud scoring at checkout)

- Better resilience through multi-site failover and capacity shifting

- More predictable capacity during seasonal surges (holiday retail, tax season, travel spikes)

- Stronger data residency and compliance postures when workloads can stay within specific regions

This matters because AI in digital services is becoming less “one big chatbot” and more dozens of embedded micro-features:

- Auto-generated help center answers

- Call summarization and QA scoring

- Document ingestion and extraction

- Personalized recommendations

- Sales outreach drafting and account research

Each feature might be small, but the aggregate demand is huge. Datacenter expansion is the unglamorous prerequisite.

The U.S. angle: infrastructure is becoming a competitive moat

When U.S.-based AI leaders expand domestic AI infrastructure, it affects who can ship dependable AI products in the U.S. market. If capacity is scarce, only the largest players can pay for premium throughput and priority. When capacity grows, more mid-market SaaS companies and startups can ship AI features without “rate limit roulette.”

And yes, it also signals something else: AI isn’t a temporary spike. Companies don’t commission new sites for a fad.

What “Stargate” signals about the next phase of AI infrastructure

Answer first: A multi-party buildout (OpenAI + Oracle + SoftBank) signals a shift from experimentation to industrial-scale AI capacity planning—where compute supply chains, power, and enterprise-grade operations matter as much as model quality.

You don’t need insider details to read the tea leaves. Large AI datacenter programs usually indicate three realities:

- Demand is outpacing supply for accelerated compute (GPUs/AI accelerators).

- Enterprise workloads are becoming steady-state, not occasional spikes.

- Power and cooling constraints are now product constraints.

If your team works in digital services, the impact shows up as a new set of “platform fundamentals” you can’t ignore:

- Inference efficiency becomes a first-class KPI

- Caching and retrieval become critical cost controls

- Multi-region architecture stops being optional for customer-facing AI

AI inference is the new “page load time”

A practical stance: latency is a product feature.

If your AI feature takes 8–12 seconds to respond, customers won’t care that the answer is “better.” They’ll bounce, escalate to a human, or disable the feature. Additional datacenter sites can help reduce round-trip time, but only if you design for it:

- Stream tokens instead of waiting for full completion

- Precompute likely responses for common intents

- Use smaller models for first-pass triage, bigger models only when needed
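To make the third tactic concrete, here is a minimal sketch of small-model-first routing. Everything in it is an assumption for illustration: `ModelReply`, `route`, the `fake_small` and `fake_large` stand-ins, and the 0.8 confidence threshold are hypothetical and not part of any provider's API.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class ModelReply:
    text: str
    confidence: float  # 0.0-1.0, as reported by a hypothetical model wrapper


def route(prompt: str,
          small_model: Callable[[str], ModelReply],
          large_model: Callable[[str], ModelReply],
          min_confidence: float = 0.8) -> Tuple[str, ModelReply]:
    """Try the cheap model first; escalate only when it is unsure."""
    first = small_model(prompt)
    if first.confidence >= min_confidence:
        return "small", first
    return "large", large_model(prompt)


# Stand-in models, for illustration only.
def fake_small(prompt: str) -> ModelReply:
    # Pretend the small model is confident only on short, simple prompts.
    return ModelReply("small answer", 0.9 if len(prompt) < 40 else 0.4)


def fake_large(prompt: str) -> ModelReply:
    return ModelReply("large answer", 0.95)


if __name__ == "__main__":
    tier, reply = route("reset my password", fake_small, fake_large)
    print(tier, reply.text)
```

In a real system the confidence signal would more likely come from a classifier or heuristic than from the model itself. The design point is only that the expensive call sits behind a cheap gate.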

How new AI datacenters change SaaS economics (and why CFOs should care)

Answer first: More AI datacenter capacity tends to reduce price volatility and improve availability, which lowers the risk of rolling out AI features tied to usage-based cost.

The uncomfortable truth: many AI product launches fail in finance review, not engineering review. The model works. The demo is impressive. Then someone asks, “What does this cost at 10 million requests a month?” and the room gets quiet.

New datacenter sites don’t automatically make AI “cheap,” but they often correlate with:

- More stable supply, reducing scarcity-driven pricing pressure

- Better regional routing, improving utilization

- Operational maturity, reducing downtime and incident costs

A simple framework for AI unit economics

If you’re building AI into a digital service, start with three numbers:

- Cost per AI action (per chat, per summary, per document)

- Actions per user per month (realistic usage, not demo usage)

- Gross margin target (especially if you’re bundling AI into subscription tiers)
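As a worked illustration, the three numbers combine into a one-line margin check. The figures below (a $30 plan, $0.02 per action, 200 actions a month, $5 of other cost of goods sold) are placeholder assumptions, not benchmarks, and both function names are hypothetical.

```python
def ai_gross_margin(price_per_user: float,
                    cost_per_action: float,
                    actions_per_user: float,
                    other_cogs_per_user: float = 0.0) -> float:
    """Gross margin (as a 0-1 fraction) for one user-month."""
    ai_cost = cost_per_action * actions_per_user
    return (price_per_user - ai_cost - other_cogs_per_user) / price_per_user


def max_actions_for_margin(price_per_user: float,
                           cost_per_action: float,
                           target_margin: float,
                           other_cogs_per_user: float = 0.0) -> float:
    """How many AI actions a user can consume before margin drops below target."""
    budget = price_per_user * (1 - target_margin) - other_cogs_per_user
    return max(0.0, budget / cost_per_action)


if __name__ == "__main__":
    # Placeholder inputs: $30/month plan, $0.02 per action, 200 actions, $5 other COGS.
    margin = ai_gross_margin(30.0, 0.02, 200, other_cogs_per_user=5.0)
    print(f"gross margin: {margin:.0%}")  # about 70%
    ceiling = max_actions_for_margin(30.0, 0.02, 0.70, other_cogs_per_user=5.0)
    print(f"actions before margin < 70%: {ceiling:.0f}")
```

Running the second function in reverse is often the more useful exercise: it turns a margin target into a usage ceiling you can enforce.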

Then decide how you’ll control costs when usage grows:

- Route low-stakes tasks to cheaper models

- Set guardrails (limits, cooldowns, queuing)

- Use retrieval to shrink prompt size

- Store and reuse outputs when appropriate
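The last control, storing and reusing outputs, can be sketched as a small in-memory cache with a time-to-live. This is an assumption-laden illustration: `OutputCache` is a hypothetical name, the one-hour TTL is arbitrary, and a production version would likely sit on a shared store rather than a Python dict.

```python
import hashlib
import time
from typing import Callable, Dict, Tuple


class OutputCache:
    """Reuse model outputs for identical (feature, prompt) pairs within a TTL."""

    def __init__(self, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(feature: str, prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(f"{feature}\x00{normalized}".encode("utf-8")).hexdigest()

    def get_or_compute(self, feature: str, prompt: str,
                       compute: Callable[[str], str]) -> str:
        key = self._key(feature, prompt)
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        result = compute(prompt)  # the expensive model call
        self._store[key] = (now, result)
        return result
```

The "when appropriate" caveat matters here: this kind of reuse fits shared, non-personalized answers such as help center content, and is a poor fit for outputs that embed one customer's data.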

Snippet-worthy rule: If you can’t explain your AI feature’s unit cost in one sentence, you’re not ready to scale it.

The operational upside: reliability, multi-region design, and incident response

Answer first: More AI datacenter sites enable better redundancy and regional failover—if your application is built to take advantage of it.

AI features are increasingly mission-critical. When an AI agent drafts customer replies, the support queue depends on it. When AI scores leads, revenue ops depends on it. That means an AI outage is no longer the loss of a nice-to-have; it is a production incident.

Here’s what I’ve found works for teams that want reliable AI in production:

Build an “AI reliability” playbook (before you need it)

Treat AI like any other production dependency:

- Define SLOs: e.g., p95 latency, success rate, timeouts

- Implement circuit breakers: degrade to simpler behavior (templates, search results)

- Add observability: log prompts/latency/token counts with careful redaction

- Run chaos drills: simulate provider timeouts and regional failures
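The first item, defining SLOs, is easier to act on once it is expressed as a check you can run against logged requests. The sketch below assumes a nearest-rank p95 and uses illustrative targets (2,000 ms and a 99% success rate); `percentile` and `check_slo` are hypothetical helper names.

```python
import math
from typing import Dict, Sequence, Union


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile; pct is expected in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_slo(latencies_ms: Sequence[float],
              successes: Sequence[bool],
              p95_target_ms: float = 2000.0,
              min_success_rate: float = 0.99) -> Dict[str, Union[float, bool]]:
    """Compare observed p95 latency and success rate against illustrative targets."""
    if not successes:
        raise ValueError("no samples")
    p95 = percentile(latencies_ms, 95)
    success_rate = sum(successes) / len(successes)
    return {
        "p95_ms": p95,
        "success_rate": success_rate,
        "latency_ok": p95 <= p95_target_ms,
        "success_ok": success_rate >= min_success_rate,
    }
```

A check like this can run per feature on a schedule, so a regression in one embedded AI feature is visible before it shows up in support tickets.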

Design for fallback, not perfection

A mature AI experience isn’t “always perfect.” It’s predictably helpful.

Examples of graceful degradation:

- If summarization fails: show top highlights extracted from metadata

- If an agent is slow: offer “send a human follow-up” or “email me the answer”

- If retrieval fails: present search results with confidence labels
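These degradations pair naturally with the circuit breaker from the playbook above. The following is a minimal sketch under simple assumptions: a breaker that opens after a fixed number of consecutive failures and allows a trial call after a cooldown. `CircuitBreaker` and its parameters are hypothetical, and the broad `except Exception` is a simplification.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Open after N consecutive failures; retry the primary after a cooldown."""

    def __init__(self, max_failures: int = 3, cooldown_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max_failures = max_failures
        self._cooldown = cooldown_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def is_open(self) -> bool:
        if self._opened_at is None:
            return False
        # Once the cooldown has elapsed, let one trial call through (half-open).
        return self._clock() - self._opened_at < self._cooldown

    def call(self, primary: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.is_open():
            return fallback()  # skip the failing dependency entirely
        try:
            result = primary()
        except Exception:  # simplification: real code would catch specific errors
            self._failures += 1
            if self._failures >= self._max_failures:
                self._opened_at = self._clock()
            return fallback()
        self._failures = 0
        self._opened_at = None
        return result
```

Here `primary` might be the summarization call and `fallback` the function that returns metadata highlights, so the user always gets something predictable.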

When new AI datacenters come online, your product benefits most if you already support:

- Multi-region routing

- Retries with jitter

- Idempotent job handling

- Async processing for heavy tasks
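Two of these items, retries with jitter and idempotent job handling, are small enough to sketch together. The version below assumes exponential backoff with full jitter and an in-memory result store keyed by a caller-supplied idempotency key. The names and defaults are illustrative, and a real job store would be durable.

```python
import random
import time
from typing import Callable, Dict, TypeVar

T = TypeVar("T")


def retry_with_jitter(fn: Callable[[], T],
                      attempts: int = 4,
                      base_delay: float = 0.5,
                      max_delay: float = 8.0,
                      sleep: Callable[[float], None] = time.sleep,
                      rng: Callable[[], float] = random.random) -> T:
    """Retry fn with exponential backoff and full jitter; re-raise the last error."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:  # simplification: retry only transient errors in real code
            if attempt == attempts - 1:
                raise
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng() * cap)  # random delay in [0, cap) spreads out retry storms
    raise AssertionError("unreachable")


class IdempotentJobs:
    """Run each job at most once per key; repeat submissions return the stored result."""

    def __init__(self) -> None:
        self._results: Dict[str, object] = {}

    def run(self, key: str, job: Callable[[], object]) -> object:
        if key in self._results:
            return self._results[key]
        result = job()
        self._results[key] = result
        return result
```

The two belong together: retries are only safe when a repeated request cannot double-charge, double-send, or double-process, which is what the idempotency key guards against.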

Energy, location, and compliance: the hidden constraints behind AI growth

Answer first: AI datacenters are constrained by power, cooling, and regulatory requirements, so expansions often aim to balance geography, energy availability, and enterprise compliance.

In the “AI in Cloud Computing & Data Centers” series, we keep coming back to a theme: infrastructure decisions quietly shape product capabilities.

AI infrastructure isn’t just racks of servers. It’s:

- Power contracts and grid capacity

- Cooling systems and water usage strategy

- Physical security and supply chain controls

- Data governance and auditability

For U.S. businesses, the compliance angle is getting sharper. Buyers increasingly ask:

- Where is data processed?

- What logs are retained?

- How is customer data separated?

- What’s the incident response process?

More sites can mean more options to align with customer requirements—especially for regulated verticals like healthcare, finance, and public sector.

What to ask your vendors after a datacenter expansion announcement

You don’t need their blueprint. You need clarity on outcomes:

- Which regions will serve my traffic by default?

- Can I pin workloads to a region?

- What redundancy options exist (active-active vs active-passive)?

- What are the published SLOs and support tiers?

- How do you handle data retention for prompts and logs?

If a vendor can’t answer these clearly, the expansion won’t help you when you hit scale.

Practical next steps: how to turn AI capacity growth into product wins

Answer first: Teams that benefit most from new AI datacenter capacity are the ones that already have a clear workload map, cost controls, and reliability design.

If you’re planning 2026 AI work, use this checklist to translate “more AI infrastructure” into shipped value:

- Inventory AI workloads

  - Real-time chat/voice

  - Batch summarization

  - Document processing

  - Search + retrieval

- Match workload to architecture

  - Real-time: multi-region, low-latency routing, streaming

  - Batch: queues, async jobs, aggressive retries

- Control unit cost

  - Model routing by task complexity

  - Prompt size limits and retrieval optimization

  - Caching for repeated queries

- Instrument everything

  - Token usage per feature

  - Cost per customer/account

  - p95 latency and timeout rates

- Plan a “failure mode” UX

  - Users tolerate constraints. They don’t tolerate confusion.

The payoff is straightforward: when infrastructure expands and availability improves, you’re positioned to ship AI features faster—and to sell them with confidence.

Where this is heading for U.S. digital services in 2026

More Stargate-style capacity is one of the clearest signals that AI is becoming a standard layer of U.S. digital services, like search, payments, and analytics before it. The companies that win won’t be the ones with the flashiest demo. They’ll be the ones who can offer fast, reliable, cost-controlled AI inside everyday workflows.

If you’re building in this space, treat datacenter expansion news as a product opportunity: revisit your latency targets, shore up multi-region design, and get serious about unit economics.

What’s the one AI feature your customers would actually use every day—if you could guarantee it stays under two seconds and within budget?