New OpenSearch multi-tier storage adds a writeable warm tier backed by S3. Learn how to cut costs, keep performance, and automate tiering for AI ops.

OpenSearch Multi-Tier Storage: Faster Search, Lower Cost

Data teams rarely lose sleep over search relevance. They lose sleep over runaway index growth, unpredictable query latency, and the monthly bill that climbs every time the business says “keep more history.”

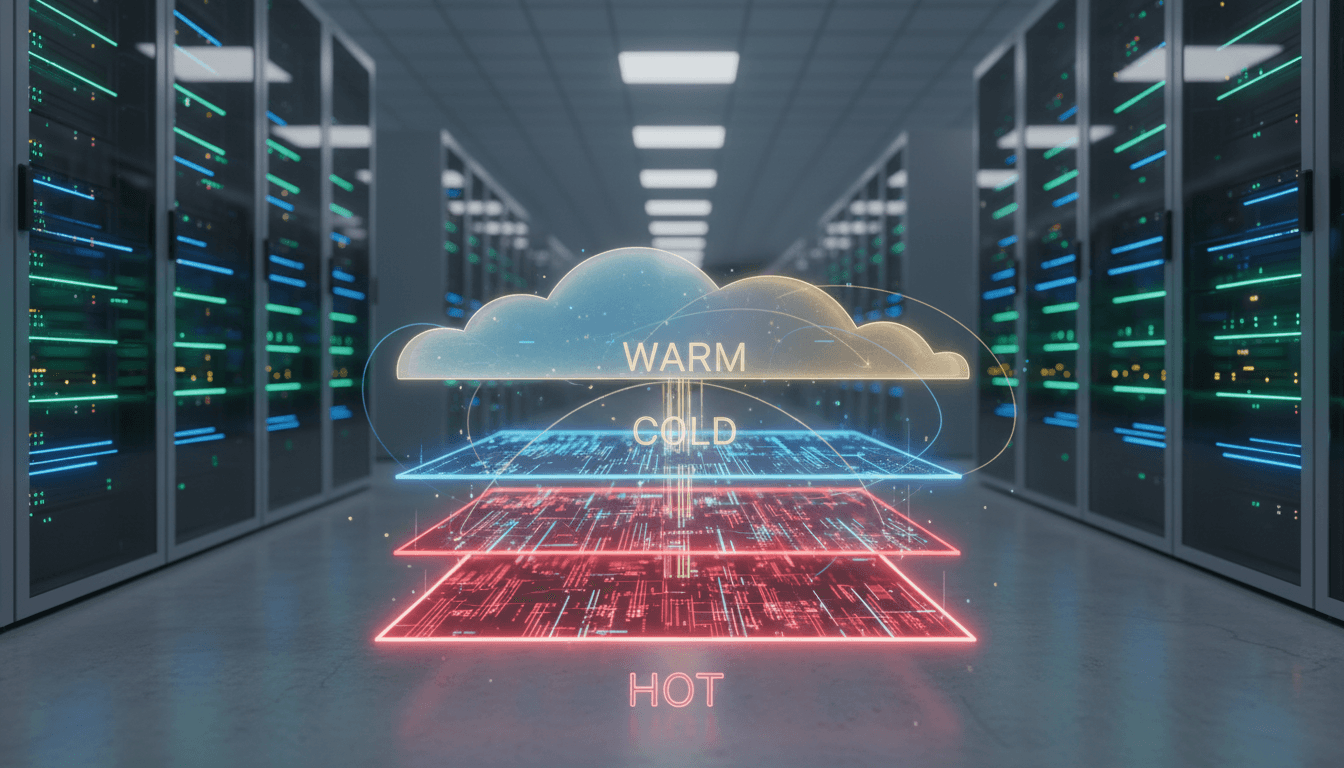

AWS’s new multi-tier storage for Amazon OpenSearch Service (announced Dec 17, 2025) is a strong signal that cloud search is heading in the same direction as modern data lakes: separating compute performance from long-term storage cost. It blends local instance storage for speed with Amazon S3-backed storage for durability and economics, and it does something UltraWarm couldn’t do: the warm tier can accept writes.

For our “AI in Cloud Computing & Data Centers” series, this matters because storage tiering isn’t just a cost trick. It’s a foundation for AI-driven infrastructure optimization—the ability to place data where it delivers the best mix of latency, durability, and cost, then adjust automatically as access patterns change.

What AWS just changed in OpenSearch (and why it’s a big deal)

Answer first: Amazon OpenSearch Service now offers a multi-tier storage architecture with two tiers (hot and warm), powered by OpenSearch Optimized Instances (OI2). It uses local instance storage plus Amazon S3 to improve durability and performance while reducing cost for less frequently accessed data.

Until now, many teams used a simple pattern:

- Keep “active” indices on hot nodes (fast, expensive).

- Move older indices to UltraWarm (cheaper, but read-only).

That read-only limitation is the part that caused real-world pain. Plenty of “older” data still gets late-arriving events: delayed telemetry, billing adjustments, compliance backfills, post-incident enrichment, or reprocessing. If your warm tier can’t accept writes, you’re forced into awkward workflows—reindexing, duplicating pipelines, or keeping more data hot than you want.

With the new warm tier on OI2 instances, you get:

- Hot tier: for frequent queries and recent ingest

- Warm tier (S3-backed, writeable): for data that’s queried less often but still changing

And crucially, you can automate data rotation from hot to warm using Index State Management (ISM).

Multi-tier storage is infrastructure optimization in disguise

Answer first: Multi-tier storage is a practical example of intelligent resource allocation: put expensive low-latency resources only where the workload truly needs them, and let cheaper durable storage carry the rest.

Most companies get this wrong at first. They over-provision hot storage “just in case,” then spend the next year trying to claw back cost without breaking dashboards.

The better approach is to admit a simple truth: data value decays faster than companies delete it. Logs from 3 hours ago are high value; logs from 3 months ago might be “only when something goes wrong.” Yet many clusters treat them the same.

Hot/warm tiering maps cleanly to real access patterns

Here’s what I’ve found works in practice for OpenSearch-heavy environments:

- Hot data is anything tied to alerts, SLO monitoring, incident response, active customer investigations, or current ML feature generation.

- Warm data is primarily for audits, trend analysis, longer-range anomaly investigation, and periodic reporting.

The new multi-tier setup makes that separation less painful because warm no longer means frozen-in-time.

Why AI teams should care (even if they don’t run OpenSearch)

Search clusters increasingly sit in the critical path of AI operations:

- Retrieval for RAG pipelines (logs, tickets, runbooks, product docs)

- Observability signals used to detect model drift and outages

- Security analytics feeding automated triage

All of these benefit when you can keep “just enough” data fast while pushing the rest to S3-backed warm storage—without redesigning ingestion.

What’s new about the warm tier: writeable, S3-backed, and sized for reality

Answer first: The new warm tier uses OpenSearch Optimized (OI2) instances and supports write operations, while storing warm data cost-effectively using Amazon S3 with local caching.

AWS’s announcement includes a few operational details that are worth translating into planning guidance:

Warm tier is “addressable” beyond local cache

For warm tier deployments on OI2 (sizes large to 8xlarge), AWS notes you can have addressable warm storage up to 5× the local cache size.

What that means in plain language:

- You still get local storage used as a performance layer.

- Your warm dataset can be materially larger than what fits locally.

- S3 provides the durable backend capacity.

This is the same architectural bet we see across cloud infrastructure: cache locally, persist cheaply, and let the system manage what stays hot.
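To make the 5× figure concrete, here is a minimal sizing sketch. It assumes the multiplier applies per node and scales linearly with node count, and it ignores replicas and headroom. The function names and the cache sizes in the usage example are hypothetical; check the actual local cache size for your OI2 instance size before relying on the result.

```python
import math

# From the announcement: addressable warm storage is up to 5x the local cache.
ADDRESSABLE_MULTIPLIER = 5


def addressable_warm_gib(local_cache_gib: float, nodes: int = 1) -> float:
    """Upper bound on addressable warm storage for a group of warm nodes.

    Assumes the multiplier applies per node and adds up linearly.
    """
    return local_cache_gib * ADDRESSABLE_MULTIPLIER * nodes


def warm_nodes_needed(warm_dataset_gib: float, local_cache_gib: float) -> int:
    """Smallest node count whose addressable capacity covers the dataset."""
    per_node = addressable_warm_gib(local_cache_gib)
    return math.ceil(warm_dataset_gib / per_node)


if __name__ == "__main__":
    # Hypothetical inputs: 1,000 GiB of local cache per node, 12,000 GiB of warm data.
    print(addressable_warm_gib(1000))
    print(warm_nodes_needed(12000, 1000))
```

Treat the output as a ceiling, not a target: the closer the warm dataset gets to the addressable limit, the smaller the share of it that can sit in local cache.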

You don’t need manual data shuffling if you use ISM

Index State Management can rotate indices from hot to warm as they age. If you already run time-series patterns (daily indices for logs, hourly for high-volume telemetry), this is a clean fit.

A practical rotation policy (example) looks like:

- Keep indices 0–7 days in hot for fast incident response.

- Move indices 8–90 days to warm (still queryable, now writeable for late events).

- After 90–180 days, consider snapshot/archival and deletion depending on compliance.

Those numbers aren’t universal, but the pattern is.
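As a rough sketch, the example rotation above could be expressed as an ISM policy body built in Python. The overall shape (states, transitions, `min_index_age`, `ism_template`) follows the ISM policy format. The `warm_migration` action is the one used for UltraWarm; whether the new OI2 warm tier uses the same action name is an assumption to verify against the AWS documentation. The function name, index pattern, and defaults are illustrative.

```python
import json


def build_rotation_policy(index_pattern: str, hot_days: int = 7, warm_days: int = 90) -> dict:
    """Build an ISM policy body: hot, then warm after hot_days, then delete after warm_days."""
    return {
        "policy": {
            "description": f"Rotate {index_pattern}: hot for {hot_days}d, warm until {warm_days}d",
            "default_state": "hot",
            "states": [
                {
                    "name": "hot",
                    "actions": [],
                    "transitions": [
                        {"state_name": "warm", "conditions": {"min_index_age": f"{hot_days}d"}}
                    ],
                },
                {
                    "name": "warm",
                    # Assumption: same action name as UltraWarm migration; verify for OI2.
                    "actions": [{"warm_migration": {}}],
                    "transitions": [
                        {"state_name": "delete", "conditions": {"min_index_age": f"{warm_days}d"}}
                    ],
                },
                {"name": "delete", "actions": [{"delete": {}}], "transitions": []},
            ],
            # Attach the policy automatically to new indices matching the pattern.
            "ism_template": {"index_patterns": [index_pattern], "priority": 100},
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_rotation_policy("logs-*"), indent=2))
```

The resulting JSON would be sent with `PUT _plugins/_ism/policies/<policy_id>`. Note that this sketch deletes indices at the warm cutoff; if compliance requires archival, take a snapshot before the delete state is reached.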

Compatibility and availability constraints you should plan around

The new multi-tier experience is available on:

- OpenSearch version 3.3+

- OI2 instance family

- 12 regions (including multiple US regions, Canada (Central), key APAC regions, and several in Europe)

If you’re planning a 2026 platform refresh, this impacts architectural decisions: you can time your OpenSearch upgrade and instance-family move together rather than treating them as separate projects.

Where multi-tier helps the most: three concrete scenarios

Answer first: Multi-tier storage shines when you have time-series growth, uneven query patterns, and compliance pressure to retain data longer than you actively use it.

1) Observability at scale without a hot-tier tax

Logs and traces explode in volume, especially as teams add more services and more sampling detail. The business often demands longer retention “for audits” or “because we might need it.”

With writeable warm storage:

- You can keep recent data hot for fast investigations.

- You can retain months of history in warm without turning every late-arriving log into a reindexing project.

- You can reduce the pressure to over-provision SSD-heavy hot nodes.
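The late-arriving log case is easier to see with a small sketch. It assumes daily indices named `<prefix>-YYYY.MM.DD` and a 7-day hot window; both are illustrative conventions, not something the service requires. The point is that an event is routed by when it happened, so a delayed event lands in an older index that may already be in the warm tier.

```python
from datetime import datetime, timezone


def daily_index_for(event_time: datetime, prefix: str = "logs") -> str:
    """Return the daily index an event belongs to, based on its own timestamp (UTC)."""
    if event_time.tzinfo is None:
        # Assumption: naive timestamps are already in UTC.
        event_time = event_time.replace(tzinfo=timezone.utc)
    utc = event_time.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


def tier_for(event_time: datetime, now: datetime, hot_days: int = 7) -> str:
    """Approximate which tier the target index sits in, using event age in whole days."""
    age_days = (now - event_time).days
    return "hot" if age_days <= hot_days else "warm"


if __name__ == "__main__":
    now = datetime(2025, 12, 17, tzinfo=timezone.utc)
    late_event = datetime(2025, 11, 1, 23, 30, tzinfo=timezone.utc)
    print(daily_index_for(late_event), tier_for(late_event, now))
```

With a read-only warm tier, that write would fail or need a reindex workaround; with a writeable warm tier the ingestion path stays the same regardless of the event's age.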

2) Security analytics and retroactive enrichment

Security teams frequently enrich older events after the fact (new indicators of compromise, updated threat intel, reclassification). A read-only warm tier forces you to either:

- Keep more data hot (expensive), or

- Export data to another system for enrichment (complex).

Writeable warm makes retroactive enrichment more straightforward because the storage tier doesn’t block updates.

3) AI operations: long-range drift and “what changed?” investigations

Model issues often surface weeks after a deployment. When you investigate drift, you want:

- Access to historical traffic patterns

- Past feature distributions

- Prior error traces and slow queries

Warm storage gives you cost-effective history, and the writeability supports delayed labeling and correction workflows.

How to decide if you should adopt it (a checklist that saves time)

Answer first: Adopt multi-tier if you can clearly separate “needs fastest performance” from “needs to be retained,” and you’re willing to enforce lifecycle policies.

Use this checklist before you touch production:

Workload fit

- Your indices are time-based (daily/weekly/monthly) or can be made time-based.

- Most of your retained data (roughly 50–80% or more) is queried infrequently.

- You have late-arriving events or periodic backfills (writeable warm helps a lot here).
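One way to check the "queried infrequently" criterion is to compute what share of stored data sits in rarely queried indices. The sketch below assumes you can export per-index size and a 30-day query count from your own monitoring. The `IndexStats` type, the `max_queries` cutoff, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class IndexStats:
    name: str
    size_gib: float
    queries_last_30d: int  # hypothetical metric; source it from your own monitoring


def infrequent_share(indices: list[IndexStats], max_queries: int = 10) -> float:
    """Fraction of total stored data held in indices with at most max_queries queries."""
    total = sum(i.size_gib for i in indices)
    if total == 0:
        return 0.0
    infrequent = sum(i.size_gib for i in indices if i.queries_last_30d <= max_queries)
    return infrequent / total


if __name__ == "__main__":
    sample = [
        IndexStats("logs-2025.12.16", 100, 5000),
        IndexStats("logs-2025.10.01", 300, 2),
        IndexStats("logs-2025.09.01", 100, 0),
    ]
    print(f"{infrequent_share(sample):.0%} of stored data is rarely queried")
```

Weighting by size rather than index count matters here: a handful of large, quiet indices is what drives hot-tier cost.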

Operational readiness

- You can upgrade to OpenSearch 3.3+ on the managed service.

- You’re comfortable defining ISM policies and testing them.

- You have basic query observability (p95 latency, slow logs, cache behavior).

Cost and performance guardrails

- You know your retention targets (30/90/180/365 days).

- You can define an SLA split: e.g., hot queries must be under X ms; warm queries can be slower.

- You can separate dashboards: “last 24 hours” vs “last 90 days.”

If you can’t articulate those points, tiering projects tend to stall because nobody agrees what “acceptable warm performance” means.

The AI angle: tiering policies are becoming self-driving

Answer first: Multi-tier storage sets up the mechanics for AI-driven optimization: systems can learn access patterns and automatically adjust what stays hot, what moves to warm, and when.

Cloud providers are steadily building infrastructure that can be optimized with feedback loops:

- Monitor access frequency, query latency, cache hit rates

- Predict which indices will be accessed soon (based on incidents, seasonality, business cycles)

- Adjust lifecycle transitions and cache sizes accordingly

Even if you don’t have an “AI ops optimizer” running your cluster today, adopting multi-tier architectures makes it easier to add one later. Your data placement becomes a policy decision rather than a manual migration project.
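A toy version of such a feedback loop fits in a few lines. This sketch smooths daily query counts with an exponentially weighted moving average and recommends a tier from the result. It is a simple heuristic chosen for illustration, not how AWS or OpenSearch decides placement; the threshold, the smoothing factor, and the function names are assumptions.

```python
def ewma(values: list[float], alpha: float = 0.3) -> float:
    """Exponentially weighted moving average; recent values count more."""
    if not values:
        return 0.0
    score = float(values[0])
    for value in values[1:]:
        score = alpha * value + (1 - alpha) * score
    return score


def recommend_tier(daily_queries: list[float], hot_threshold: float = 50.0,
                   alpha: float = 0.3) -> str:
    """Recommend 'hot' when smoothed recent query volume meets the threshold."""
    return "hot" if ewma(daily_queries, alpha) >= hot_threshold else "warm"


if __name__ == "__main__":
    # Hypothetical daily query counts, oldest first.
    print(recommend_tier([400, 220, 90, 30, 5, 2, 0]))   # traffic has faded
    print(recommend_tier([10, 20, 150, 300, 280, 310]))  # traffic is rising
```

A production system would add seasonality and incident signals, but even this much turns "which tier?" into a computed policy input instead of a manual judgment call.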

Here’s a stance I’ll defend: the future of cloud cost control isn’t more dashboards—it’s better defaults plus automated, policy-based data placement. Multi-tier OpenSearch is a step in that direction.

Practical next steps if you’re evaluating OpenSearch multi-tier

Answer first: Start with one workload, define hot/warm SLAs, automate rotation, then measure query behavior for 2–4 weeks before expanding.

A sensible rollout plan:

- Pick a single domain (observability logs, app search analytics, security events).

- Define retention by tier (e.g., 7 days hot, 90 days warm).

- Implement ISM to rotate indices and validate that dashboards and alerts behave as expected.

- Measure real outcomes:

  - Hot-node storage growth rate

  - p95 query latency for hot vs warm ranges

  - Incident workflow impact (does investigation slow down?)

- Expand gradually once the numbers look good.
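For the latency measurement in that plan, a minimal sketch of a p95 check per tier might look like this. It uses the nearest-rank percentile method and assumes you have already collected query latencies in milliseconds for hot and warm time ranges. The SLA limits and sample values are placeholders, not recommendations.

```python
def p95(samples_ms: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    # Integer form of ceil(0.95 * n), avoiding float rounding surprises.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def meets_sla(samples_ms: list[float], limit_ms: float) -> bool:
    """True when the p95 latency is at or under the agreed limit."""
    return p95(samples_ms) <= limit_ms


if __name__ == "__main__":
    # Placeholder samples and limits; substitute your own measurements and SLAs.
    hot_samples = [12, 15, 18, 22, 30, 35, 41, 48, 55, 240]
    warm_samples = [80, 120, 150, 300, 450, 600, 720, 900, 1100, 1900]
    print("hot ok:", meets_sla(hot_samples, limit_ms=250))
    print("warm ok:", meets_sla(warm_samples, limit_ms=2000))
```

Running the same check on the same dashboards before and after rotation gives you a like-for-like answer to whether warm performance is acceptable.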

If you’re building an AI platform on top of cloud infrastructure, this is also a good moment to ask: Which data needs to be instantly retrievable for models and agents, and which data just needs to be available? Your answer becomes the blueprint for tiering.

Snippet-worthy take: Hot storage is for velocity. Warm storage is for history. The win is treating that as a policy, not a firefight.

What would change in your environment if keeping 6–12 months of searchable history didn’t force you to pay hot-tier prices—or freeze your data the moment it ages?