Add S3 access to FSx for ONTAP so AI and analytics tools can use file data without copying it. Faster RAG, simpler governance, fewer pipelines.

FSx for ONTAP + S3: Faster AI Access to File Data

Most enterprises don’t have a “data” problem. They have a data access problem.

A lot of the information that would make AI and analytics useful—engineering specs, policy PDFs, customer contracts, research archives, media libraries—still lives in NAS-style file systems for good reasons: permissions models, application compatibility, and decades of operational muscle memory. Meanwhile, the cloud-native AI and analytics ecosystem overwhelmingly expects Amazon S3-style object access.

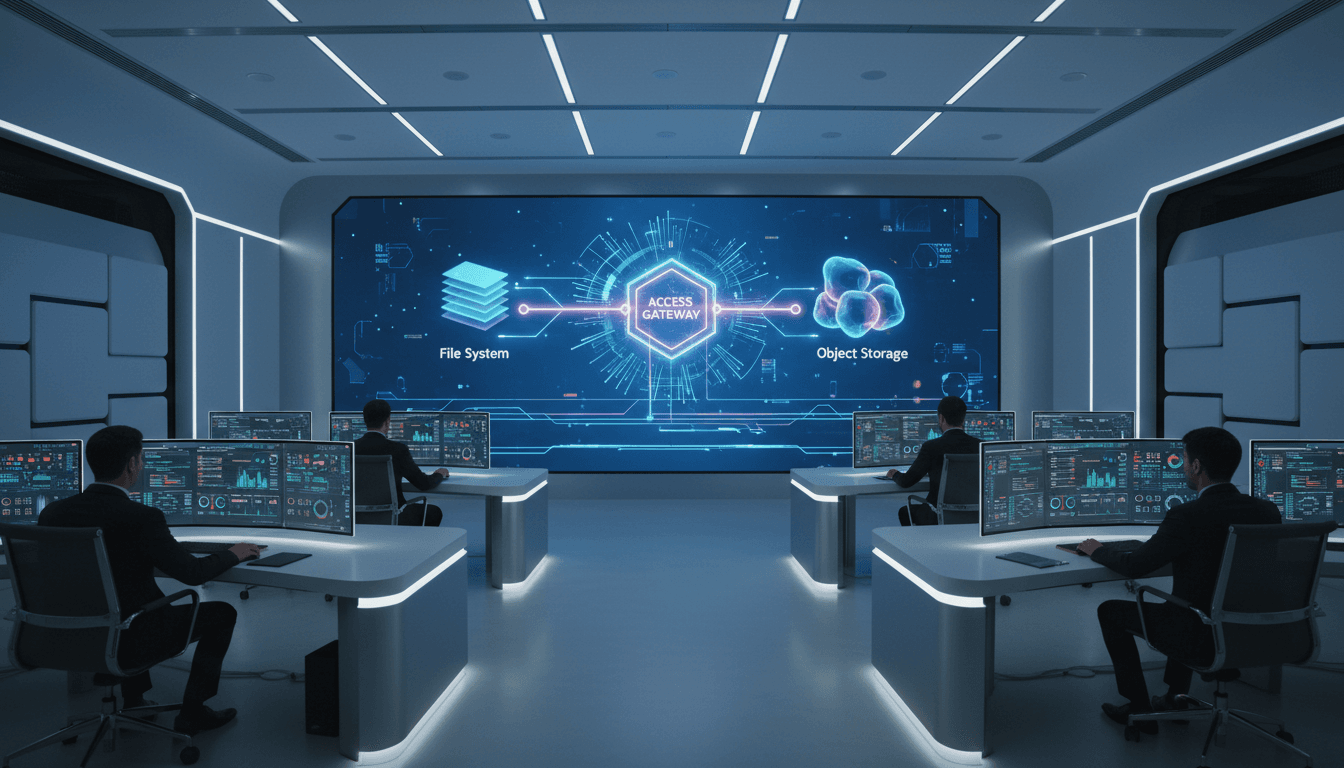

AWS’s new capability—Amazon S3 Access Points for Amazon FSx for NetApp ONTAP—is a practical bridge between those worlds. It lets you perform S3 object operations on data that continues to reside in FSx for ONTAP volumes, so teams can connect “file data” to tools built for S3 without a painful copy-and-sync workflow. In the broader AI in Cloud Computing & Data Centers series, this is a perfect example of a provider reducing data movement so compute can be allocated more intelligently, more efficiently, and with fewer operational surprises.

Why “seamless data access” matters for AI workloads

Answer: AI systems are only as useful as the speed and governance of the data pipelines feeding them.

If you’re building RAG applications, training ML models, or running recurring analytics, the slowest part is often not the GPU. It’s the plumbing:

- Teams duplicate file shares into object storage “just for AI.”

- Permissions drift across copies.

- Data freshness turns into an argument (“Which copy is the source of truth?”).

- Storage costs quietly double because the same dataset exists in two places.

This matters because workload management in modern cloud environments depends on predictable data access. When data access is messy, everything becomes harder: autoscaling, capacity planning, cost controls, and even incident response.

Data movement is the hidden tax on AI in the cloud

Here’s what I see most often: a business wants an internal assistant over enterprise documents. The documents live in file shares. The AI tool expects S3. Someone builds a pipeline to copy files into a bucket nightly.

It works… until:

- Legal asks for retention controls and auditability.

- Security asks for least-privilege access by team and environment.

- The business asks for “near real-time,” not “tomorrow morning.”

Reducing the number of copies reduces the number of governance problems. That’s the core value of S3 access to FSx for ONTAP: treat the same underlying data as both file-accessible and S3-accessible.

What AWS actually added: S3 Access Points attached to FSx for ONTAP

Answer: You can attach an S3 Access Point to an FSx for ONTAP volume, exposing the file data through an S3 endpoint for object operations.

Conceptually, think of it like adding a second “front door” to the same data:

- File protocols still work for existing apps and users (the “NAS door”).

- S3 object access becomes available for cloud-native AI/analytics tools (the “S3 door”).

The important part is operational: you’re not redesigning your storage strategy overnight. You’re extending it.
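
To make the two-door idea concrete, here is a minimal sketch of how a path seen through the NAS door might line up with a key seen through the S3 door. It assumes the access point is attached at the volume root and that object keys are simply file paths relative to that root; verify that mapping against your own volume before relying on it. The function name and the example paths are hypothetical.

```python
from pathlib import PurePosixPath


def file_path_to_object_key(mount_root: str, file_path: str) -> str:
    """Translate a mounted file path into the key an S3 client would request.

    Assumption: the volume is mounted at `mount_root` and keys are paths
    relative to the volume root (not confirmed by the article).
    """
    # relative_to raises ValueError when file_path is outside mount_root
    relative = PurePosixPath(file_path).relative_to(PurePosixPath(mount_root))
    if str(relative) == ".":
        raise ValueError("file_path must point below mount_root")
    return str(relative)


# Hypothetical mount point and file, for illustration only
print(file_path_to_object_key("/mnt/contracts", "/mnt/contracts/2024/acme/msa.pdf"))
# prints: 2024/acme/msa.pdf
```

A helper like this is mostly useful in runbooks and tests: it lets file-side and S3-side teams talk about the same document without guessing at each other's naming.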

Why this fits the data center story (not just an AWS feature drop)

In data centers and large cloud estates, the best infrastructure improvements are the ones that:

- reduce cross-system copying,

- simplify control planes,

- and make performance/cost behavior more predictable.

S3 access to FSx for ONTAP checks those boxes. It’s not flashy. It’s the kind of integration that makes “AI in production” less chaotic.

Practical use cases that map directly to AI, analytics, and workload efficiency

Answer: This capability is most valuable when you want S3-native services to work with enterprise file data without replatforming or duplicating it.

Below are the patterns that tend to produce real outcomes (and fewer late-night pages).

1) Retrieval-Augmented Generation (RAG) over enterprise file shares

RAG lives or dies on document access and refresh. With S3 access, you can connect proprietary file data to services that expect S3 semantics—like knowledge base tooling for RAG—while keeping the underlying dataset in the file system your organization already trusts.

What improves in practice:

- Fresher answers because you can reduce batch copy schedules.

- Cleaner governance because you’re not managing multiple “authoritative” copies.

- Simpler incident handling because access paths are easier to reason about.
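
One way to get fresher answers without a nightly copy is to ask the access point which documents changed since the last indexing run and re-embed only those. The sketch below assumes a boto3-style S3 client is passed in, that `ListObjectsV2` is available through your access point, and that `LastModified` reflects file modification time. The function name is hypothetical, and the indexing step itself is out of scope.

```python
from datetime import datetime, timezone


def keys_changed_since(s3_client, access_point_alias: str, prefix: str, since: datetime):
    """Return keys under `prefix` whose LastModified is newer than `since`."""
    if since.tzinfo is None:
        # Assumption: naive timestamps are meant as UTC
        since = since.replace(tzinfo=timezone.utc)

    changed = []
    kwargs = {"Bucket": access_point_alias, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            if obj["LastModified"] > since:
                changed.append(obj["Key"])
        if not page.get("IsTruncated"):
            return changed
        # Follow pagination until the listing is exhausted
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


# Usage sketch (needs boto3 and AWS credentials; the alias is a placeholder):
# import boto3
# s3 = boto3.client("s3")
# last_run = datetime(2025, 1, 1, tzinfo=timezone.utc)
# for key in keys_changed_since(s3, "<access-point-alias>", "policies/", last_run):
#     print("re-index", key)
```

Listing is itself a billed request type, so on large prefixes it is worth narrowing the prefix per run rather than scanning the whole volume.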

2) ML training and feature generation without “bucket-izing” everything first

Many ML pipelines assume training data sits in object storage. But many enterprise training corpora start as files: images on a share, CSV exports, documents, CAD assets.

S3 access gives teams a shorter path from “data exists” to “training job runs,” and that’s not just convenience. It affects resource efficiency:

- Fewer pre-processing jobs whose only purpose is data relocation.

- Less duplicated storage consumption.

- Shorter time-to-first-experiment, which reduces wasted compute cycles.

3) BI and analytics over file data using S3-native integrations

AWS’s walkthrough example uses AI-powered BI tooling (Amazon QuickSight, now part of Amazon Quick Suite) to query and chat over documents made available through an S3 Access Point.

The real story here isn’t the UI. It’s the architecture: once the data is addressable via S3, a large ecosystem opens up—analytics engines, ETL frameworks, governance tools—without forcing a “move everything to S3” migration first.

4) Serverless and container apps accessing shared enterprise datasets

Serverless functions and microservices tend to integrate more naturally with S3 than with traditional file mounts, especially when you’re optimizing for ephemeral compute.

Common datasets that benefit:

- configuration libraries and reference data

- content repositories (media, templates, product docs)

- model artifacts and evaluation sets

- shared application assets for multi-team platforms

This ties directly to intelligent resource allocation: object-based access patterns are easier to scale horizontally and easier to meter.
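
For ephemeral compute, a small in-memory cache keeps reference data reads from turning into one S3 request per invocation. This is a sketch, not a prescribed pattern: `CachedJsonObject` is a hypothetical helper, the client is any boto3-style object with `get_object`, and the TTL value is an arbitrary placeholder you would tune.

```python
import json
import time


class CachedJsonObject:
    """Load a JSON object through an S3 client and reuse it for `ttl_seconds`."""

    def __init__(self, s3_client, access_point_alias, key, ttl_seconds=300, clock=time.monotonic):
        self._s3 = s3_client
        self._alias = access_point_alias
        self._key = key
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the TTL can be tested
        self._value = None
        self._loaded_at = None       # None means "never loaded"

    def get(self):
        now = self._clock()
        expired = self._loaded_at is None or now - self._loaded_at >= self._ttl
        if expired:
            # The access point alias goes where a bucket name normally would
            response = self._s3.get_object(Bucket=self._alias, Key=self._key)
            self._value = json.loads(response["Body"].read())
            self._loaded_at = now
        return self._value


# Usage sketch inside a function handler (placeholders, needs boto3):
# import boto3
# REFERENCE = CachedJsonObject(boto3.client("s3"), "<access-point-alias>", "config/reference.json")
# def handler(event, context):
#     return {"region_map": REFERENCE.get()}
```

Creating the cache at module scope means warm invocations reuse it, which helps both latency and request counts.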

Getting started: a clean path from ONTAP volume to S3 endpoint

Answer: You create an S3 Access Point and attach it to a specific FSx for ONTAP volume, then use the access point alias wherever an S3 bucket name would normally go.

At a high level, the flow is straightforward:

- Choose your FSx for ONTAP file system and target volume.

- Create an S3 Access Point from the FSx console (or via CLI/SDK).

- Configure identity and network settings.

- Use the access point alias in S3-integrated services.
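
Once the access point exists, a quick smoke test confirms the last step works before you wire up a real consumer. The sketch below assumes a boto3-style client and passes the alias as the `Bucket` argument; the function name, the placeholder alias, and the choice to skip keys ending in `/` (in case directories show up as entries) are my assumptions, not documented behavior.

```python
def smoke_test_access_point(s3_client, access_point_alias, prefix="", max_keys=5):
    """List a few keys through the access point and read the start of one file."""
    listing = s3_client.list_objects_v2(
        Bucket=access_point_alias,  # alias used in place of a bucket name
        Prefix=prefix,
        MaxKeys=max_keys,
    )
    keys = [obj["Key"] for obj in listing.get("Contents", [])]

    # Pick the first entry that looks like a file rather than a directory marker
    first_file = next((k for k in keys if not k.endswith("/")), None)
    first_bytes = None
    if first_file is not None:
        body = s3_client.get_object(Bucket=access_point_alias, Key=first_file)["Body"]
        first_bytes = body.read(64)  # a small read is enough to prove access

    return {"keys": keys, "first_file": first_file, "first_bytes": first_bytes}


# Usage sketch (needs boto3 and AWS credentials):
# import boto3
# print(smoke_test_access_point(boto3.client("s3"), "<access-point-alias>", prefix="docs/"))
```

If the listing succeeds but the read fails, check identity and network settings first; that split usually points to permissions rather than connectivity.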

Implementation choices that matter (more than the console clicks)

If you want this to actually reduce operational load, decide these up front:

- Volume-to-access-point mapping: Keep the scope tight. One access point per domain/dataset is easier to secure and easier to reason about.

- Identity model: Pick an approach that aligns with how you currently enforce access in ONTAP/NAS environments. “Quick and open” becomes tomorrow’s audit finding.

- Network placement: Treat this like any other data-plane decision. Put it where latency, routing, and security boundaries make sense.

Cost model: account for request-based charges

You’re billed for S3 requests and data transfer through the access point, in addition to standard FSx charges. That can be a win or a surprise.

This is where workload management discipline pays off:

- RAG workloads can generate a lot of small reads.

- BI exploration can spike request counts during peak usage.

- ETL jobs can churn metadata operations.

A practical approach is to run a 2–4 week pilot, capture request metrics, and then tune caching, partitioning, and access patterns.
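
The arithmetic behind that pilot is simple enough to script. This sketch projects a monthly figure from pilot counters; every price in it is a caller-supplied placeholder, not real AWS pricing, and the split into read requests, list requests, and transferred gigabytes is my simplification of the request and transfer charges described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PilotUsage:
    read_requests: int      # e.g. GET/HEAD calls observed during the pilot
    list_requests: int      # e.g. LIST calls observed during the pilot
    gb_transferred: float   # data moved through the access point


@dataclass(frozen=True)
class AssumedPrices:
    # Placeholders: fill in from the current pricing page for your region
    per_1k_read_requests: float
    per_1k_list_requests: float
    per_gb_transferred: float


def estimate_monthly_cost(usage: PilotUsage, prices: AssumedPrices, pilot_days: int) -> float:
    """Scale pilot usage to 30 days and price it with the assumed rates."""
    if pilot_days <= 0:
        raise ValueError("pilot_days must be positive")
    pilot_cost = (
        usage.read_requests / 1000 * prices.per_1k_read_requests
        + usage.list_requests / 1000 * prices.per_1k_list_requests
        + usage.gb_transferred * prices.per_gb_transferred
    )
    return pilot_cost * 30 / pilot_days


# Illustrative numbers only
usage = PilotUsage(read_requests=2_000_000, list_requests=100_000, gb_transferred=500.0)
prices = AssumedPrices(per_1k_read_requests=0.5, per_1k_list_requests=5.0, per_gb_transferred=0.01)
print(round(estimate_monthly_cost(usage, prices, pilot_days=15), 2))
```

Running it per workload (RAG indexer, BI tool, ETL job) rather than in aggregate shows which consumer is worth caching or batching first.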

Security and governance: don’t recreate the mess you’re trying to escape

Answer: The safest deployments treat the S3 Access Point as a governed interface, not a shortcut around file permissions.

When teams hear “S3 access,” they sometimes assume “S3-style openness.” Don’t.

Here are the controls worth prioritizing:

- Least-privilege IAM policies for each consuming workload (RAG indexers, ETL jobs, BI tools).

- Segmentation by access point so different data domains don’t share a single blast radius.

- Audit-friendly naming and tagging so you can explain who accessed what and why.

- Change control for knowledge base updates if you’re using AI assistants. Garbage in becomes confident garbage out.

A good rule: if you can’t describe the access boundary in one sentence, it’s probably too broad.
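
Here is what a one-sentence boundary ("this indexer can read objects under one prefix through one access point") might look like as an IAM policy. It is a sketch under assumptions: it uses the standard S3 access point ARN format in the commercial `aws` partition, the function name and example values are hypothetical, and it does not model the file-system identity configured on the access point, which is a separate layer you still need to review.

```python
import json


def read_only_policy(region: str, account_id: str, access_point_name: str, prefix: str) -> dict:
    """Build a read-only IAM policy scoped to one prefix behind one access point."""
    prefix = prefix.strip("/")
    if not prefix:
        # Refuse "everything" so the scope stays tight by construction
        raise ValueError("prefix must not be empty")

    ap_arn = f"arn:aws:s3:{region}:{account_id}:accesspoint/{access_point_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListPrefixOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": ap_arn,
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "ReadObjectsUnderPrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"{ap_arn}/object/{prefix}/*",
            },
        ],
    }


# Example values are placeholders
print(json.dumps(read_only_policy("us-east-1", "111122223333", "contracts-ap", "legal/2024"), indent=2))
```

Generating policies from a function like this, one per consuming workload, also gives you the audit-friendly naming for free: the Sid and resource tell a reviewer exactly what was granted.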

Where this lands in the “AI in Cloud Computing & Data Centers” narrative

Answer: It’s an infrastructure optimization play—reduce data duplication, improve throughput, and make AI workloads easier to schedule and govern.

In late 2025, a lot of organizations are balancing three pressures at once:

- more AI experimentation from business teams,

- tighter cost scrutiny,

- and stricter security/regulatory expectations (especially in multi-region and sovereignty-driven architectures).

The integration between FSx for ONTAP and S3 is a provider-level move that supports all three. It keeps legacy-friendly file workflows intact while making the data usable by AI and analytics systems that expect object access. That’s exactly the kind of hybrid reality most enterprises live in.

If you’re responsible for cloud infrastructure or data platforms, the real question isn’t “Should we use files or objects?” It’s: How quickly can we switch compute and tools without copying the same data ten different ways?

Next steps: a practical pilot plan that produces results

Pick one dataset that meets these criteria:

- widely used across teams,

- currently stored on FSx for ONTAP (or a NAS you’re migrating),

- and repeatedly copied into S3 for analytics/AI.

Then run a pilot with a single S3-native consumer (a RAG knowledge base, an ETL job, or a BI integration). Measure:

- time to first successful query/answer,

- request volume and cost behavior,

- access-control clarity (how many policy exceptions did you need?),

- and operational load (how many “sync jobs” did you delete?).

If you want, I can help you map your current file-share and AI pipeline into a minimal architecture that keeps governance clean while improving performance and cost predictability. What’s the one dataset your teams keep copying into S3 “because they have to”?