AMD and OpenAI’s 6GW GPU partnership signals a new era for cloud AI capacity. See what it means for performance, cost, and AI services.

AMD–OpenAI 6GW GPU Deal: What It Means for Cloud AI

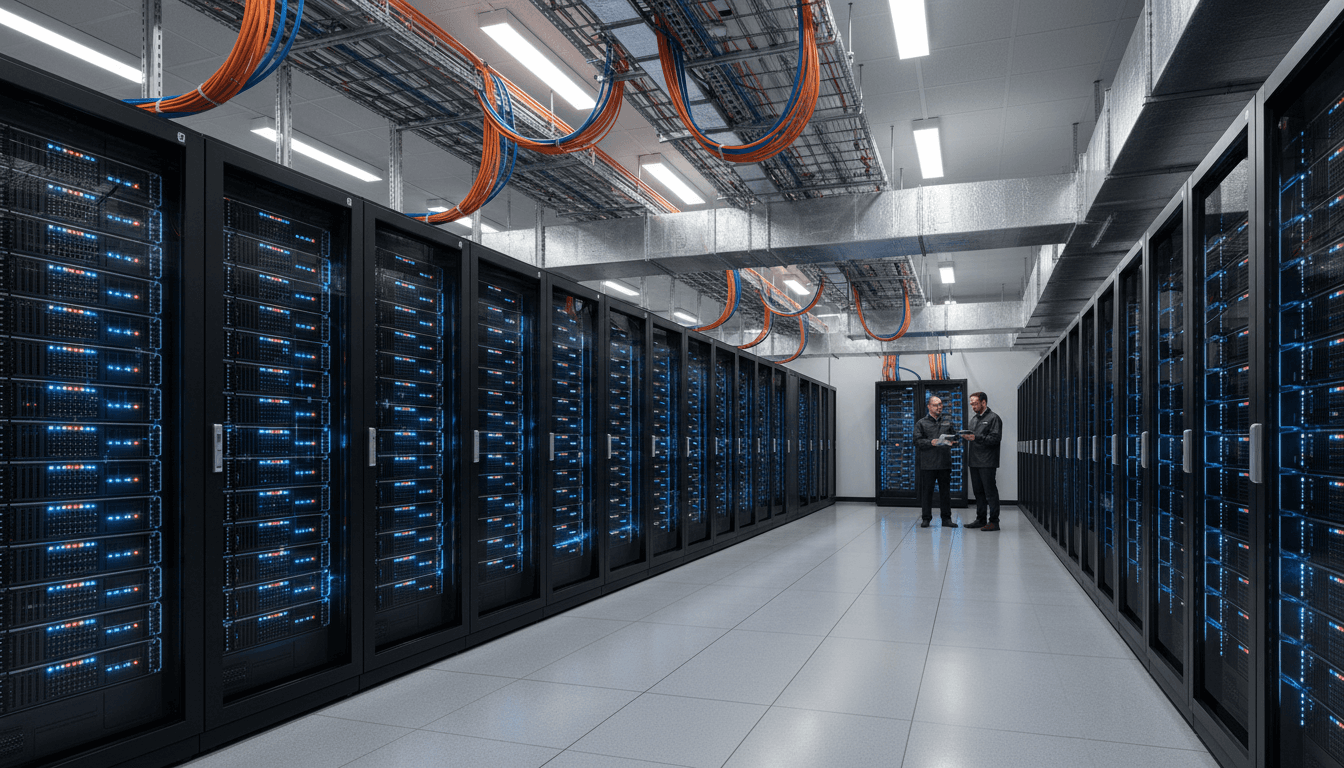

A 6-gigawatt (GW) GPU deployment agreement isn’t a “bigger data center” headline. It’s a signal that AI infrastructure in the United States is entering an era where power, scheduling, and supply chains matter as much as model quality.

OpenAI and AMD announced a multi-year partnership to deploy 6 GW of AMD Instinct GPUs, with an initial 1 GW deployment of AMD Instinct MI450 Series GPUs expected to start in the second half of 2026. That timeline lands right when many U.S. enterprises are finalizing 2026 budgets and trying to answer a question that’s gotten uncomfortable: Can we actually get enough compute to keep our AI products reliable and affordable?

This post is part of our “AI in Cloud Computing & Data Centers” series, where we focus on the unglamorous layer that decides whether AI products feel magical or frustrating: cloud AI infrastructure—capacity, energy efficiency, and intelligent resource allocation.

The real story: AI infrastructure is a power problem now

AI at scale is constrained by three things: chips, electricity, and time. The OpenAI–AMD announcement puts the “electricity” part front and center with a rare, plain-English figure—gigawatts.

A useful way to interpret this: from 2023 through most of 2025, conversations about generative AI focused on models and features. By late 2025, capacity planning is shaping the market at least as much.

Why “6 gigawatts of GPUs” matters (even if you don’t run a data center)

If you’re building digital services—customer support automation, copilots for internal tools, AI search, personalization, fraud detection—the compute supply behind your AI stack affects:

- Latency and throughput: how fast responses come back and how well systems handle spikes.

- Cost stability: whether your per-request cost is predictable enough to productize.

- Reliability: whether you can meet SLAs during peak demand.

- Roadmap risk: whether you can confidently plan new AI features without fearing capacity crunches.

My take: this partnership is best understood as a capacity hedge and an ecosystem bet. OpenAI is securing multi-generation compute. AMD is securing a flagship customer that pushes performance, software maturity, and deployment know-how.

What OpenAI and AMD actually announced (and why it’s structured this way)

OpenAI and AMD disclosed several concrete points that are easy to miss if you only skim the headline:

- Multi-year, multi-generation agreement to deploy 6 GW of AMD GPUs for OpenAI’s next-generation AI infrastructure.

- Initial 1 GW deployment based on AMD Instinct MI450 Series starting 2H 2026.

- The partnership builds on earlier collaboration across MI300X and MI350X series.

- AMD issued OpenAI a warrant for up to 160 million shares. It vests in tranches as OpenAI's purchases scale toward 6 GW, and vesting is also tied to milestone and share-price targets.

The warrant structure isn’t trivia—it’s an execution incentive

This deal includes a performance-aligned financial instrument (the warrant) tied to deployment milestones. That’s telling. At this scale, “partnership” doesn’t mean co-marketing; it means:

- joint work on hardware-software co-optimization,

- shared responsibility for rack-scale design choices,

- and constant pressure on yield, packaging, networking, and driver/compiler stability.

The warrant is essentially a mechanism that says: both sides will be judged by deployment reality, not press releases.

Why this changes the conversation for U.S. cloud AI services

For companies delivering AI-driven digital services in the U.S., the biggest practical shift is that inference capacity is becoming a first-class product constraint.

Training gets headlines; inference pays the bills

Most business value comes from inference—serving requests at scale inside apps, contact centers, analytics products, and developer platforms. Inference success depends on:

- Resource allocation: fitting mixed workloads (chat, embeddings, vision, agents) onto the right GPU pools.

- Workload management: routing requests based on model, priority tier, and latency targets.

- Energy efficiency: keeping cost-per-token down as electricity prices and demand charges bite.

A 6 GW infrastructure plan suggests OpenAI expects demand growth that isn’t incremental. It implies a long runway of higher usage across consumer and enterprise products.

What “rack-scale AI solutions” signals

The announcement references “rack-scale AI solutions.” That’s important because AI performance bottlenecks are increasingly system-level:

- GPU-to-GPU interconnect and network topology

- memory bandwidth and capacity per accelerator

- failure domains (one bad node can disrupt a job)

- observability for saturation, throttling, and thermal headroom

In other words, this is about data center AI design, not just purchasing chips.

How this partnership fits the “AI in Cloud Computing & Data Centers” trend

The cloud world is shifting from “elastic CPU” thinking to “scarce accelerator” thinking. If you operate a SaaS platform, a marketplace, a fintech app, or even a media workflow, you’re bumping into the new reality: GPU capacity behaves more like airline seats (a fixed supply that gets reserved ahead of time and fills up at peak) than like compute cycles you can summon on demand.

Trend 1: Intelligent resource allocation becomes a competitive advantage

When GPUs are scarce and expensive, the winners are the teams that treat scheduling as a product feature. Practical examples we’re seeing across cloud AI infrastructure:

- Multi-tenant isolation so one customer can’t starve others

- Priority queues for premium tiers and critical workflows

- Model routing (small model first, escalate only when needed)

- Batching and caching to reduce duplicate compute

This is where AI infrastructure optimization shows up in user experience: fewer “busy” errors, more predictable response times, and pricing that doesn’t jump around.

Trend 2: Energy efficiency is now directly tied to product margins

6 GW is also a reminder that AI is negotiating with the physics of power delivery. For operators, energy efficiency isn’t a sustainability footnote—it’s unit economics.

If you want AI features that can ship broadly (not just to a few high-value accounts), you need:

- models and serving stacks that are efficient per request,

- data centers that can support high-density racks,

- and operational tooling to manage thermal and power constraints without performance cliffs.

Trend 3: Multi-vendor compute reduces systemic risk

A strategic compute partnership with AMD helps diversify supply and execution paths. For the broader U.S. digital economy, multi-vendor ecosystems can reduce:

- single-vendor pricing pressure,

- deployment delays from constrained supply,

- and platform risk if one stack hits a performance or availability wall.

I’m opinionated here: having more viable GPU platforms is good for everyone building AI products. Competition forces better software support, better pricing discipline, and faster iteration.

Practical implications for teams building AI-driven digital services

If you’re not buying GPUs by the gigawatt (most of us aren’t), the move still changes your planning. Here’s how to translate it into decisions you can make in Q1–Q2 2026.

1) Plan for “capacity-aware” product design

Assume GPU capacity will remain a managed resource. Design workflows that degrade gracefully:

- Use model cascades: cheap/fast model first, then escalate.

- Prefer retrieval + smaller models for many knowledge tasks.

- Add timeouts, partial answers, and async completion for heavy jobs.

A blunt rule that works: If your product breaks when a single model endpoint slows down, it’s not ready for scale.
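
A model cascade with graceful degradation can be sketched in a few lines. Everything here is an assumption made for illustration: the `Answer` type, the idea that each model wrapper reports a confidence score between 0 and 1, and the 0.7 threshold. Real systems often use a separate verifier or heuristic instead of a self-reported score.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    text: str
    confidence: float  # assumed to be in [0, 1], supplied by the model wrapper
    model: str


ModelFn = Callable[[str], Answer]


def cascade(prompt: str, small: ModelFn, large: ModelFn,
            threshold: float = 0.7) -> Answer:
    """Try the cheap model first and escalate only when it is unsure."""
    first = small(prompt)
    if first.confidence >= threshold:
        return first
    try:
        return large(prompt)
    except (TimeoutError, ConnectionError):
        # Degrade gracefully: a lower-confidence answer beats an error page.
        return first
```

The `except` branch is the part that matters for the rule above: when the large endpoint is slow or unavailable, the user still gets the small model's answer instead of a failure.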

2) Make inference cost visible to engineering and product

The teams that win in 2026 treat cost like latency: observable and measurable. Put these into your dashboards:

- cost per 1,000 requests (by endpoint)

- tokens per task (median and p95)

- cache hit rate

- batch size efficiency

- GPU utilization (when you control serving)

This is classic workload management applied to AI.
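
As a rough sketch, several of these dashboard numbers can be computed from a plain request log. The `RequestRecord` fields below are hypothetical; substitute whatever your gateway or serving layer actually records. The p95 uses the nearest-rank method, which is one of several common percentile definitions.

```python
import math
from dataclasses import dataclass
from statistics import median


@dataclass
class RequestRecord:
    endpoint: str
    tokens: int
    cost_usd: float
    cache_hit: bool


def percentile(values: list[int], pct: float) -> int:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records: list[RequestRecord]) -> dict[str, dict[str, float]]:
    """Per-endpoint cost per 1,000 requests, token stats, and cache hit rate."""
    by_endpoint: dict[str, list[RequestRecord]] = {}
    for record in records:
        by_endpoint.setdefault(record.endpoint, []).append(record)

    summary = {}
    for endpoint, rows in by_endpoint.items():
        tokens = [r.tokens for r in rows]
        summary[endpoint] = {
            "cost_per_1k_requests": 1000 * sum(r.cost_usd for r in rows) / len(rows),
            "tokens_median": median(tokens),
            "tokens_p95": percentile(tokens, 95),
            "cache_hit_rate": sum(r.cache_hit for r in rows) / len(rows),
        }
    return summary
```

Tracking the median and p95 together is deliberate: a healthy median with a runaway p95 usually points to a handful of oversized prompts rather than a general cost problem.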

3) Revisit your data strategy before you chase bigger models

If you can cut prompt length by 30% through better retrieval or cleaner knowledge bases, you often get a cost and latency win that no GPU upgrade can match.

Practical steps:

- clean and deduplicate docs feeding RAG

- segment knowledge bases by domain to reduce retrieval noise

- measure answer quality against prompt size explicitly
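
The first step, deduplication, is the easiest to automate. The sketch below only catches documents that are identical after whitespace and case normalization; near-duplicates (reworded or partially overlapping pages) need fuzzier techniques such as shingling or embedding similarity, which are not shown. The function names are illustrative.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so trivial variants hash the same."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, preserving input order."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique
```

Every duplicate removed here is a chunk that can no longer crowd a more useful passage out of the retrieval results or pad the prompt with repeated text.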

4) Treat vendor roadmaps as part of your risk register

The OpenAI–AMD partnership is multi-generation. That’s a hint to do the same with your own dependencies:

- What happens if your preferred model family is constrained for 90 days?

- Can you shift to a smaller model or alternate provider without rewriting your app?

- Do you have a fallback mode that preserves core workflows?

This is not paranoia; it’s basic platform engineering in the AI era.
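
One way to keep the second question answerable is to put every provider behind the same call signature and try them in order. This is a minimal sketch under that assumption; the provider names and the `complete_with_fallback` helper are hypothetical, and a real version would add timeouts, retry limits, and narrower exception handling.

```python
from typing import Callable, Sequence

Provider = tuple[str, Callable[[str], str]]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the chain has failed."""


def complete_with_fallback(prompt: str,
                           providers: Sequence[Provider]) -> tuple[str, str]:
    """Return (provider_name, completion) from the first provider that works."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose for the sketch
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))
```

Returning the provider name alongside the completion lets you log how often the fallback path is actually used, which is the number that tells you whether the risk is theoretical or already happening.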

People also ask: what should businesses expect from bigger GPU deployments?

Will this make AI cheaper for customers?

Over time, more capacity and better efficiency tend to push costs down, but not in a straight line. Demand often rises just as fast. The more reliable expectation is better availability and more predictable performance—which is what makes AI features safe to bake into core business processes.

Does this mean AMD GPUs will dominate AI data centers?

No single deal decides that. What it does indicate is that AMD is positioned as a serious long-term platform for large-scale AI infrastructure, including the hard part: software and systems co-optimization.

When will this affect real-world products?

The first 1 GW MI450 deployment is slated for 2H 2026, so you’d expect downstream effects—capacity expansion, new serving configurations, and potentially better price-performance profiles—to show up progressively after that.

What to do next if you’re building on cloud AI in 2026

This AMD–OpenAI 6GW GPU deal is a reminder that AI is no longer “just software.” It’s an industrial-scale infrastructure race—and U.S.-based partnerships like this are one reason the American digital economy is moving so quickly on AI-powered services.

If you’re responsible for an AI roadmap, I’d focus on two parallel tracks:

- Engineering for efficiency: routing, batching, caching, retrieval quality, and observability.

- Engineering for resilience: multi-model fallbacks, capacity-aware UX, and vendor flexibility.

The forward-looking question worth asking your team right now: If your AI usage doubles next quarter, what breaks first—latency, cost, or reliability?