OpenAI–Foxconn highlights a new reality: AI is a physical supply chain problem. Learn what it means for U.S. cloud and data center capacity planning.

AI Supply Chains: What OpenAI–Foxconn Signals

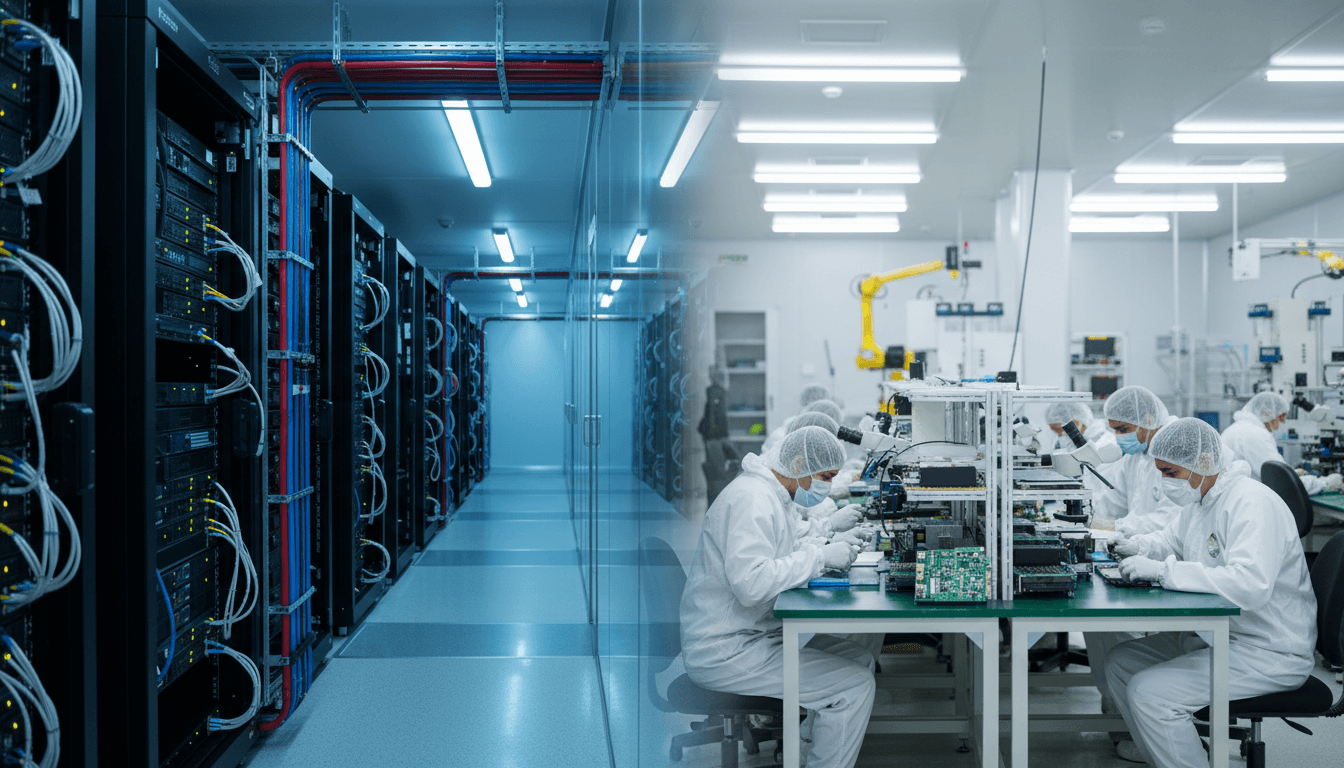

A modern AI product doesn’t “ship” the way software used to. It lands as a stack: data centers full of GPUs, firmware, networking, security controls, and a continuous stream of model updates. When a major AI lab and a global manufacturer collaborate to strengthen U.S. manufacturing across the AI supply chain, that’s not a press-release footnote—it’s a signal.

Here’s the signal I take from the OpenAI–Foxconn collaboration: AI is now a physical supply chain problem as much as a software problem. If you’re building digital services—especially in cloud computing and data centers—your reliability, cost, and speed increasingly depend on how well the “real world” part of AI (hardware, power, assembly, testing, logistics) is designed and operated.

This post expands the story into something practical: what an AI supply chain actually includes, why U.S.-based manufacturing matters in 2025, and how cloud and data center teams can translate partnerships like this into better uptime, lower costs, and faster delivery.

Why this partnership matters for cloud and data centers

Answer first: This collaboration matters because AI capacity is constrained by physical infrastructure, and strengthening U.S. manufacturing improves lead times, resiliency, and control over critical components that power AI workloads.

For the last decade, many teams treated “infrastructure” as someone else’s problem. If you needed more compute, you bought more cloud. But the AI boom turned compute into scarce inventory. Training and serving large models requires specialized hardware (accelerators, high-bandwidth memory, advanced networking) and data center-ready systems. If any step in that chain bottlenecks—manufacturing, QA, shipping, power delivery, or rack integration—your “software roadmap” slips.

In the AI in Cloud Computing & Data Centers series, we’ve focused on how AI optimizes infrastructure (workload scheduling, energy efficiency, predictive maintenance). This story adds a new layer: how infrastructure gets built in the first place—and how AI-driven partnerships can accelerate and stabilize that build-out.

The new reality: AI demand is elastic, infrastructure isn’t

Cloud services can scale in minutes; factories can’t. Hardware production ramps take quarters, not sprints. Strengthening U.S. manufacturing capacity across the AI supply chain reduces the friction between:

- Exploding AI service demand (new copilots, agents, analytics, search)

- Long procurement cycles (GPUs, NICs, optical modules, power gear)

- Deployment complexity (liquid cooling, high-density racks, compliance)

If you sell AI features in the U.S. market, you’re effectively selling a promise about compute availability. That promise is only as strong as your supply chain.

What “AI supply chain” actually means (beyond chips)

Answer first: The AI supply chain is the end-to-end pipeline that turns silicon, power, and systems engineering into usable AI capacity—from component sourcing to data center deployment and lifecycle management.

Most people hear “AI supply chain” and think “chips.” Chips matter, but they’re one dependency in a chain that looks more like this:

- Compute components: accelerators, CPUs, HBM, storage, power supplies

- Networking: high-speed switches, NICs, optical transceivers, cabling

- System integration: board assembly, burn-in, thermal design, firmware

- Data center readiness: rack build, liquid/air cooling compatibility, PDUs

- Deployment operations: imaging, secure provisioning, attestation, patching

- Lifecycle: monitoring, predictive maintenance, spare parts, refurbishment

That’s why a partnership between an AI company and a manufacturing leader is so relevant to digital services. Every step above affects your ability to deliver AI experiences at scale.

The under-discussed constraint: power and thermals

If you run data centers, you already know the quiet constraint in 2025: power availability and heat removal. High-density AI clusters push:

- Higher rack power (often multiples of traditional CPU racks)

- More complex cooling (rear-door heat exchangers, direct-to-chip, liquid loops)

- Tighter integration requirements (facility + rack + workload planning)

Manufacturing capacity that aligns to these constraints—systems designed for dense AI, tested for reliability, and shipped with consistent build quality—reduces time-to-serve. For cloud providers and enterprise data centers, that translates into faster capacity turn-up and fewer deployment surprises.

How AI improves manufacturing—and why U.S. scaling is different

Answer first: AI strengthens manufacturing by reducing defects, improving throughput, and enabling better forecasting—but scaling in the U.S. adds requirements around security, workforce development, and compliance that change how systems are built.

When we talk about AI in manufacturing, we’re not talking about vague “automation.” The highest ROI uses are specific and measurable:

- Computer vision quality inspection: catching assembly defects earlier

- Predictive maintenance: reducing unplanned downtime on critical equipment

- Demand forecasting + inventory optimization: fewer stockouts and less waste

- Process optimization: tuning parameters to improve yield and consistency

Now layer in why U.S. manufacturing expansion is strategically important:

- Lead times and risk: shorter, more controllable logistics paths

- Resilience: less exposure to single-region disruptions

- Security and trust: stronger control over chain-of-custody for sensitive gear

- Compliance: easier alignment with U.S. regulatory and customer requirements

Here’s my stance: for AI infrastructure, resilience beats marginal cost savings. If your business depends on inference capacity for customer-facing experiences, a single supply disruption can cost more than you ever saved by sourcing purely for lowest unit cost.

What this means for digital services teams

You don’t need to run a factory to benefit from manufacturing improvements. You need to:

- Specify systems that are deployment-ready (power, cooling, networking)

- Require repeatable builds (configuration drift kills reliability)

- Get serious about secure provisioning (firmware, keys, attestation)

Partnerships that bring manufacturers closer to AI labs and cloud operators reduce the gap between how hardware is produced and how it’s actually used at scale.

The cloud-to-factory feedback loop: a practical model

Answer first: The winning model is a feedback loop where cloud telemetry informs manufacturing, and manufacturing constraints inform cloud scheduling and capacity planning.

This is where the story fits perfectly into the AI in Cloud Computing & Data Centers theme. AI doesn’t just run on infrastructure; it helps design, deploy, and operate it.

1) Telemetry-informed reliability (from data center to production line)

Data centers generate massive operational data: thermal profiles, fan curves, failure rates by component batch, error logs, performance counters. When that data flows back to manufacturing:

- Component selection improves

- Burn-in tests become more predictive

- Firmware defaults get tuned for real workloads

- Early-life failure rates drop

That’s not theoretical. Most large-scale operators already do some version of this internally. The next step is making it cross-organizational, which is what collaborations like OpenAI–Foxconn suggest.

2) Manufacturing-informed capacity planning (from factory to cloud)

Manufacturing has real constraints: supplier lead times, yield variability, shipping windows. If cloud capacity planning ignores those realities, you get:

- Overpromised launches

- Emergency procurement at premium prices

- Fragmented fleets that are harder to operate

Better alignment means cloud teams can plan workload placement, model rollouts, and customer commitments around what will actually be delivered.

Snippet-worthy truth: If your AI roadmap isn’t synced to your hardware roadmap, you don’t have a roadmap—you have a wish list.

A mini playbook: how to build a resilient AI infrastructure supply chain

Answer first: Focus on standardization, security, observability, and deployment speed—the four levers that most directly translate supply chain improvements into better service delivery.

If you’re a SaaS leader, platform engineer, or IT buyer trying to make sense of “AI supply chain” headlines, here’s what to do next.

Standardize the fleet (or you’ll pay forever)

Mixed hardware fleets drive:

- More QA matrices

- More performance variability

- More incident surface area

Action steps:

- Define 2–3 reference architectures for training and inference

- Enforce configuration standards for NICs, optics, firmware, and BIOS settings

- Treat “one-off” configurations as exceptions that require executive approval

Build security into provisioning, not as a retrofit

AI infrastructure is high-value and frequently targeted. Secure-by-default provisioning should include:

- Hardware identity and attestation where applicable

- Signed firmware and controlled update pipelines

- Strict chain-of-custody for sensitive components

- Role-based access and logging for rack deployment activities

Make deployment speed a first-class KPI

Capacity that sits in boxes is worthless. Track:

- Dock-to-rack time

- Rack-to-serve time (when workloads can run)

- Failure rate during burn-in and initial production

Then invest in automation: golden images, infrastructure-as-code for switch configs, repeatable rack integration procedures.

Use AI for operations where it’s proven

Skip the hype. Prioritize AI where I’ve seen consistent wins:

- Anomaly detection for thermal and power patterns

- Predictive maintenance on cooling and power systems

- Workload scheduling that respects power/cooling constraints

Done well, these reduce incidents and let you run infrastructure closer to optimal utilization.

People also ask: what does this mean for U.S. businesses buying AI?

Answer first: It means more predictable timelines, better supportability, and stronger compliance options—if buyers ask for them.

Does U.S. manufacturing automatically reduce costs? Not automatically. Labor and facility costs can be higher, but buyers often gain value through faster delivery, fewer disruptions, and better serviceability.

Will this improve AI availability for enterprises? It can, especially when it reduces integration bottlenecks and accelerates data center deployments. Availability is as much about time-to-deploy as it is about chip supply.

How should startups think about this? Startups can’t shape factories, but they can avoid vendor lock-in traps and make smarter architecture choices. Keep portability in mind (model serving stacks, inference quantization options, multi-region capacity planning).

Where this goes next for AI in cloud computing & data centers

The most practical interpretation of the OpenAI–Foxconn collaboration is this: AI scale requires co-design across software, hardware, and manufacturing. The U.S. digital economy benefits when that co-design happens closer to where services are delivered—because it shortens feedback loops and improves resilience.

If you lead infrastructure, platform, or digital services, treat AI supply chain strength as part of your product quality. Your customers don’t care why capacity is tight. They care whether features ship on time, latency stays low, and outages don’t happen.

The next step is a candid internal question: Which part of your AI service is most constrained by physical infrastructure—compute procurement, power, cooling, or deployment operations—and what would you change if you planned for that constraint upfront?