AI infrastructure is becoming a DOE-level priority in the U.S. Here’s what that means for cloud computing, data centers, and scaling AI services reliably.

AI Infrastructure in the U.S.: DOE Signals the Next Wave

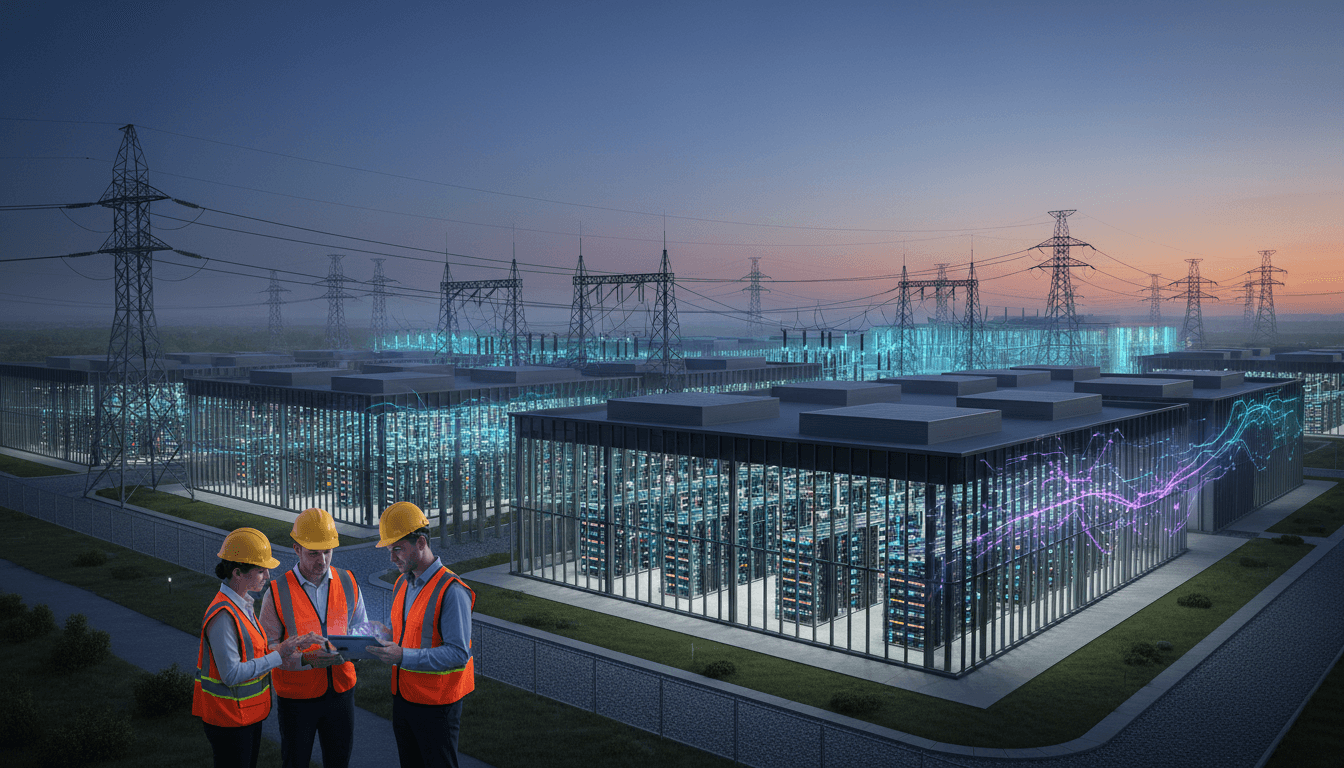

Most companies talk about AI like it’s only a software problem. It isn’t. The next wave of AI-powered digital services in the United States will be decided by infrastructure: power, data centers, chips, grid interconnection, cooling, and the operational discipline to run all of it reliably.

That’s why OpenAI’s engagement with the U.S. Department of Energy (DOE) on AI infrastructure still matters, even though the public page currently returns a 403 (access restricted) error. When major AI builders and the federal agencies responsible for energy research, grid reliability, and large-scale compute are in the same conversation, it’s a signal: AI is being treated as national-scale infrastructure, not a side project.

This post is part of our “AI in Cloud Computing & Data Centers” series, where we focus on what actually makes AI work in production. Here’s the practical angle: what DOE-style infrastructure thinking means for cloud computing, data center strategy, energy efficiency, and the AI services your customers will expect in 2026.

Why AI infrastructure is now a DOE-level concern

Answer first: DOE interest means AI buildout is colliding with energy reality—power availability, grid constraints, and the need for efficient, reliable data centers.

Training and serving modern AI models is compute-heavy, and compute is power-hungry. Over the past two years, the U.S. has seen a surge of announcements around hyperscale expansions, dedicated “AI data centers,” and accelerated chip deployments. That activity quickly runs into hard constraints:

- Interconnection queues and transmission limits slow down new large loads.

- Local substation capacity can be the bottleneck, not the land or the building.

- Cooling and water decisions increasingly look like public policy decisions.

- Reliability and resilience requirements rise when AI services become embedded in healthcare, finance, logistics, and government.

DOE sits at the center of many of these issues—through national labs, energy efficiency research, grid modernization programs, and coordination across regions. When an AI company responds to DOE on infrastructure, it’s part of a broader pattern: public-private alignment on what “responsible scaling” looks like.

The myth: “AI runs in the cloud, so it’s someone else’s problem”

The reality? If you ship AI features, you’re already in the infrastructure business—just indirectly.

Even if you don’t own a single server, your product roadmap depends on:

- GPU capacity planning (availability swings can change unit economics overnight)

- Latency budgets (where inference runs matters)

- Data gravity (moving data is expensive, slow, and risky)

- Energy cost volatility (which often shows up as cloud pricing pressure)

DOE-level attention accelerates standard-setting around these constraints. And standards—formal or informal—shape how cloud providers design offerings, how colocation markets price power, and how enterprises negotiate capacity.

What “AI infrastructure” really includes (beyond GPUs)

Answer first: AI infrastructure is a full stack: power + facilities + compute + networking + operations + security—and the weakest layer sets your ceiling.

When people say “AI infrastructure,” they often mean “more GPUs.” That’s the loudest part, but not the most limiting. For teams planning AI in cloud computing and data centers, the stack is broader:

Power and grid interconnection

For AI-heavy footprints, power delivery is the product requirement. A site can have the perfect fiber routes and real estate and still fail on timeline because the grid can’t deliver enough MW soon enough.

Practical implications for U.S. digital services:

- If you need predictable AI latency at scale, you’ll care about where power is available and how stable it is.

- You’ll see more regional clustering of AI capacity in power-advantaged zones.

- “Time to power” becomes a key KPI next to “time to deploy.”

Data center design for sustained high utilization

AI workloads drive higher, steadier utilization than many traditional enterprise workloads. That changes facility design priorities:

- Higher rack densities and more aggressive thermal design

- Faster refresh cycles (depreciation schedules start to look different)

- Greater need for instrumentation to manage hotspots and failure domains

Networking and data movement

Training clusters are sensitive to network topology and congestion; inference fleets are sensitive to end-user latency. Expect more hybrid patterns:

- Centralized training hubs

- Distributed inference near metros and edge aggregation points

Operations: reliability, observability, and safety

The operational side is where “AI-powered digital services” succeed or fail. Mature AI infrastructure requires:

- Load forecasting and automated capacity allocation

- Multi-region failover planning for inference

- Policy controls for sensitive workloads

A DOE conversation implicitly elevates these concerns because energy infrastructure thinking is about reliability under load.

How public-private collaboration shapes U.S. AI leadership

Answer first: When industry and DOE align, the U.S. sets de facto infrastructure norms that ripple through cloud offerings, procurement, and enterprise architecture.

A lot of AI policy talk gets stuck on models. Infrastructure is where policy becomes operational reality.

Here’s what tends to happen when public agencies and AI builders coordinate on infrastructure topics:

- Common measurement frameworks emerge (think: energy efficiency metrics for AI workloads, reporting expectations, lifecycle planning).

- Procurement patterns shift (government and regulated industries adopt standards faster than you’d expect).

- Supply chain priorities become clearer (chips, transformers, switchgear, cooling equipment).

- R&D translation speeds up (national lab work informs commercial practice—especially in energy efficiency and grid optimization).

This matters for U.S. tech leadership because infrastructure standards create flywheels:

- Cloud providers align product roadmaps around the same constraints.

- Data center operators modernize faster to stay competitive.

- Software companies get more reliable primitives to build on.

“The country that scales AI reliably and efficiently sets the rules for everyone else.”

December 2025 reality check: capacity is a strategy, not a purchase

Heading into 2026, AI demand isn’t limited to research labs anymore. Customer support, coding assistants, fraud detection, personalization, and document automation are now baseline expectations across U.S. digital services.

That means infrastructure planning has moved from “nice to have” to “board-level risk.” If you’re selling AI-enabled services, capacity shortfalls translate to:

- degraded user experience (latency spikes)

- higher cost per request

- throttled feature rollouts

- reliability incidents that erode trust

DOE engagement is a signal that the ecosystem is trying to avoid “build fast, regret later.”

What this means for cloud computing & data center teams

Answer first: Expect more pressure on energy efficiency, workload placement, and provable reliability—because AI growth will be judged by operational impact, not demos.

If you run infrastructure, platforms, or a cloud center of excellence, you can act now without waiting for policy documents.

1) Treat energy as a first-class SLO

Most orgs track cost and uptime. For AI, add energy visibility so you can make decisions that hold up under scrutiny.

Start with:

- per-workload energy estimates (even if approximate)

- peak vs. average power draw by cluster

- efficiency improvements tied to model choices (for example, serving a larger share of requests with smaller models)

A practical stance: If you can’t describe the energy profile of your AI workloads, you don’t really control your AI costs.
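A rough first approximation of that energy profile is to integrate sampled power draw over a window and divide by the requests served in it. The sketch below assumes you already collect per-cluster power samples in watts (for example from PDU or GPU telemetry); the `PowerSample` type, the function names, and the field names in the result are hypothetical, not part of any particular monitoring tool.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PowerSample:
    t_seconds: float  # seconds since the start of the measurement window
    watts: float      # measured power draw at that moment


def energy_wh(samples: List[PowerSample]) -> float:
    """Trapezoidal integration of power over time, returned in watt-hours."""
    ordered = sorted(samples, key=lambda s: s.t_seconds)
    joules = 0.0
    for a, b in zip(ordered, ordered[1:]):
        joules += (a.watts + b.watts) / 2.0 * (b.t_seconds - a.t_seconds)
    return joules / 3600.0


def power_profile(samples: List[PowerSample],
                  requests_served: int) -> Dict[str, Optional[float]]:
    """Summarize peak vs. average draw and approximate energy per request."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to integrate")
    times = [s.t_seconds for s in samples]
    duration = max(times) - min(times)
    if duration <= 0:
        raise ValueError("samples must span a positive time window")

    total_wh = energy_wh(samples)
    avg_watts = total_wh * 3600.0 / duration
    peak_watts = max(s.watts for s in samples)
    return {
        "energy_wh": total_wh,
        "avg_watts": avg_watts,
        "peak_watts": peak_watts,
        # A high ratio means the cluster is provisioned for bursts it rarely hits.
        "peak_to_avg": peak_watts / avg_watts if avg_watts > 0 else None,
        "wh_per_request": total_wh / requests_served if requests_served else None,
    }
```

With made-up samples of 1,000 W, 3,000 W, and 1,000 W taken at 0, 30, and 60 minutes and 4,000 requests served, this yields 2,000 Wh in total, a 1.5 peak-to-average ratio, and 0.5 Wh per request. The estimate is only as good as the sampling interval and ignores cooling overhead, so treat it as a starting point for comparison between workloads rather than a billing-grade number.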

2) Optimize inference like a product, not a sidecar

Inference is where most businesses feel AI daily. The big wins come from engineering discipline:

- Use routing: send simple requests to smaller/faster models

- Cache common outputs (with guardrails)

- Batch where latency allows

- Place inference close to users when it cuts real latency (not just on paper)

In cloud computing terms, this is intelligent resource allocation—matching model, hardware, and geography to the request.
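As a minimal sketch of the routing and caching ideas above: the router below sends short prompts to a small model, longer ones to a large model, and caches answers only when the caller marks them as safe to reuse. Everything here is an assumption for illustration: `TieredRouter`, the word-count heuristic, and the `small`/`large` callables stand in for whatever model clients and complexity signals you actually use. Batching and geographic placement are left out to keep the example short.

```python
from typing import Callable, Dict


class TieredRouter:
    """Route prompts to a small or large model, with an opt-in answer cache."""

    def __init__(self,
                 small: Callable[[str], str],
                 large: Callable[[str], str],
                 max_simple_words: int = 30) -> None:
        self.small = small
        self.large = large
        self.max_simple_words = max_simple_words
        self.cache: Dict[str, str] = {}
        self.calls = {"small": 0, "large": 0, "cache": 0}

    def _is_simple(self, prompt: str) -> bool:
        # Hypothetical heuristic: short prompts count as simple.
        # A real system might use a classifier or per-endpoint rules instead.
        return len(prompt.split()) <= self.max_simple_words

    def handle(self, prompt: str, cacheable: bool = True) -> str:
        # Normalize case and whitespace so trivially different prompts share a key.
        key = " ".join(prompt.lower().split())

        # Guardrail: only reuse answers the caller has marked as cacheable,
        # so user-specific or sensitive responses are never served from cache.
        if cacheable and key in self.cache:
            self.calls["cache"] += 1
            return self.cache[key]

        tier = "small" if self._is_simple(prompt) else "large"
        model = self.small if tier == "small" else self.large
        answer = model(prompt)
        self.calls[tier] += 1

        if cacheable:
            self.cache[key] = answer
        return answer
```

The `calls` counter is there so you can measure how traffic actually splits between tiers; that split is what drives the cost and energy numbers, so it is worth tracking before tuning the heuristic.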

3) Design for “capacity shocks”

GPU availability and pricing can change quickly. Build resilience into your architecture:

- multi-provider strategy where feasible

- abstraction layers so models can move

- graceful degradation modes (fallback models, queued responses)

This is the infrastructure version of incident planning: you don’t rise to the occasion; you fall to your preparation.
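One way to picture graceful degradation is an ordered chain of backends: try the preferred model, fall back to alternatives when capacity is unavailable, and queue the request if nothing can serve it. The sketch below assumes each backend is a plain callable that raises a `CapacityError` when it is throttled or out of quota; that exception, the function name, and the result shape are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple


class CapacityError(Exception):
    """Raised by a backend that is throttled, out of quota, or unavailable."""


def answer_with_fallback(prompt: str,
                         backends: List[Tuple[str, Callable[[str], str]]],
                         queue: List[str]) -> Dict[str, Optional[str]]:
    """Try backends in priority order; queue the prompt if all lack capacity."""
    for name, call in backends:
        try:
            return {"status": "ok", "backend": name, "answer": call(prompt)}
        except CapacityError:
            # Only capacity problems trigger a fallback; other errors
            # propagate so real bugs are not silently masked.
            continue

    # Last resort: accept the request now and answer it later.
    queue.append(prompt)
    return {"status": "queued", "backend": None, "answer": None}
```

Because the backends are just named callables, the same chain can span providers or model sizes, which is the point of keeping an abstraction layer between product code and any single model endpoint.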

4) Use AI for data center optimization (yes, it’s recursive)

The best “AI in data centers” programs don’t stop at running AI workloads—they use AI to operate the facility and platform better:

- predictive maintenance on cooling and power systems

- anomaly detection for thermal hotspots

- workload scheduling based on energy price signals and carbon intensity

These are the kinds of operational improvements that align with DOE’s mandate: efficiency and reliability at scale.
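To make the scheduling item concrete, here is a small sketch that picks the start hour for a deferrable batch job (say, a fine-tuning run) by scoring each hour on forecast energy price and grid carbon intensity. It assumes you have hourly forecasts for both signals from some external source; the function name, the min-max normalization, and the `carbon_weight` parameter are illustrative choices, not a standard method.

```python
from typing import List


def best_start_hour(price_per_mwh: List[float],
                    carbon_g_per_kwh: List[float],
                    duration_hours: int,
                    carbon_weight: float = 0.5) -> int:
    """Return the start index of the cheapest/cleanest contiguous window."""
    if len(price_per_mwh) != len(carbon_g_per_kwh):
        raise ValueError("price and carbon forecasts must cover the same hours")
    n = len(price_per_mwh)
    if not 1 <= duration_hours <= n:
        raise ValueError("duration must fit inside the forecast horizon")
    if not 0.0 <= carbon_weight <= 1.0:
        raise ValueError("carbon_weight must be between 0 and 1")

    def normalize(values: List[float]) -> List[float]:
        # Min-max scale to [0, 1] so the two signals are comparable.
        lo, hi = min(values), max(values)
        if hi == lo:
            return [0.0] * len(values)
        return [(v - lo) / (hi - lo) for v in values]

    price = normalize(price_per_mwh)
    carbon = normalize(carbon_g_per_kwh)
    hourly_score = [(1.0 - carbon_weight) * p + carbon_weight * c
                    for p, c in zip(price, carbon)]

    # Score every contiguous window of the job's length; the lowest sum wins.
    window_scores = [sum(hourly_score[start:start + duration_hours])
                     for start in range(n - duration_hours + 1)]
    return min(range(len(window_scores)), key=window_scores.__getitem__)
```

Setting `carbon_weight` to 0 optimizes purely for price and 1 purely for carbon; anything in between is a policy decision rather than a technical one. Latency-sensitive inference usually cannot be shifted this way, so this kind of scheduling applies mainly to training, batch scoring, and other deferrable work.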

People also ask: practical questions about AI infrastructure

Is AI infrastructure only for hyperscalers?

No. Even mid-market SaaS companies feel it through cloud pricing, service quotas, latency requirements, and compliance. You may not buy electrical transformers or switchgear, but you’ll make product decisions based on capacity and cost curves.

What’s the fastest way to reduce AI inference cost?

Start with model routing and right-sizing. Many teams overuse the largest model for every request. A tiered approach (small model first, escalate when needed) often cuts cost without hurting user outcomes.
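The arithmetic behind the tiered approach is simple enough to check before building anything. In the sketch below, every request hits the small model first and a fraction of them escalates to the large one, so the blended cost per request is the small-model cost plus the escalation rate times the large-model cost. The function names and any prices you plug in are hypothetical; use your own per-request costs.

```python
def blended_cost(small_cost: float, large_cost: float,
                 escalation_rate: float) -> float:
    """Average cost per request when every request tries the small model first."""
    if not 0.0 <= escalation_rate <= 1.0:
        raise ValueError("escalation_rate must be between 0 and 1")
    # Escalated requests pay for both models; the rest pay only for the small one.
    return small_cost + escalation_rate * large_cost


def savings_vs_large_only(small_cost: float, large_cost: float,
                          escalation_rate: float) -> float:
    """Fractional saving compared with sending everything to the large model."""
    return 1.0 - blended_cost(small_cost, large_cost, escalation_rate) / large_cost


def break_even_escalation_rate(small_cost: float, large_cost: float) -> float:
    """Escalation rate above which tiering costs more than large-only."""
    return 1.0 - small_cost / large_cost
```

For example, with an assumed small-model cost of 0.001 and large-model cost of 0.01 per request, a 20% escalation rate gives a blended cost of about 0.003, roughly 70% less than large-only, and tiering stops paying off once about 90% of requests escalate. The model ignores the added latency of escalated requests, which is the other half of the trade-off.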

Will energy constraints slow AI feature rollouts in the U.S.?

They can, especially in regions with limited grid expansion capacity. The teams that win will be the ones that plan workload placement, efficiency, and capacity contracts early—before a feature depends on a resource that’s suddenly scarce.

Where this goes next for U.S. digital services

AI infrastructure is becoming the quiet foundation under U.S. technology leadership. DOE attention is one piece of evidence that the country is shifting from experimentation to industrialization—setting expectations for reliability, efficiency, and scale.

If you build AI-powered digital services, the smartest move in 2026 won’t be to “add more features.” It’ll be to build the infrastructure posture that lets you ship those features predictably: energy-aware architecture, capacity-resilient platforms, and data center strategies built for sustained high utilization.

The question worth asking as you plan next quarter: If demand doubles overnight, does your AI stack bend—or does it break?