Google Cloud’s latest updates show AI moving into databases, security, and capacity planning—shaping smarter cloud ops. See what to adopt next.

AI-Driven Cloud Ops: Google Cloud Updates That Matter

Most infrastructure teams aren’t short on features—they’re short on signal. That’s why Google Cloud’s release notes from the last 60 days are worth reading as a story about where cloud operations is headed: AI-first orchestration, tighter security controls, and smarter resource planning that’s starting to look a lot like “autopilot for data centers.”

If you work in cloud infrastructure, platform engineering, or data center operations, this matters for a practical reason: the cost and complexity of running AI workloads (and the apps around them) is climbing, while expectations for uptime and security are not going down. The latest Google Cloud changes show a clear pattern: providers are embedding AI directly into the control plane, not just offering AI services on top.

Below is what I’d pay attention to, what it enables, and how to turn it into concrete operational wins.

AI is moving into databases (and it changes workflows)

The biggest shift in these notes isn’t a single model release—it’s the way generative AI is being brought into the places ops teams already live: databases.

Data agents inside managed databases

Google Cloud introduced data agents (Preview) that let users interact with data conversationally in multiple managed database offerings:

- AlloyDB for PostgreSQL

- Cloud SQL for MySQL

- Cloud SQL for PostgreSQL

- Spanner

This isn’t a “chatbot for SQL” gimmick. The operational implication is that data access patterns can become more automated and more standardized—if you govern them properly.

Here’s a realistic use case: a platform team builds a controlled data agent that can answer business questions (“What’s the weekly churn by segment?”) and also perform safe operational tasks (“Show the top 10 slow queries and suggest indexes”). Now the same agent can be used by analytics teams, application engineers, and SREs—without everyone reinventing query snippets.

What I’ve found works best in practice:

- Treat data agents like production interfaces, not experiments.

- Put them behind strong auth, logging, and least-privilege access.

- Define an explicit “allowed operations” policy (read-only vs. read/write).
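
A minimal sketch of an “allowed operations” policy as a pre-check in front of an agent, assuming the agent emits plain SQL strings. `AgentMode`, `is_allowed`, and the keyword allowlist are hypothetical names, not part of any Google Cloud API. The check is deliberately coarse and conservative. Real enforcement should still come from a database role that only has read grants.

```python
import re
from enum import Enum


class AgentMode(Enum):
    READ_ONLY = "read_only"
    READ_WRITE = "read_write"


# Hypothetical allowlist of leading keywords treated as read-only.
# Anything not on it is refused in READ_ONLY mode. WITH and EXPLAIN are
# left out on purpose: PostgreSQL allows data-modifying CTEs, and
# EXPLAIN ANALYZE actually executes the statement.
READ_ONLY_KEYWORDS = {"SELECT", "SHOW"}


def first_keyword(sql: str) -> str:
    """Return the first SQL keyword, skipping leading whitespace and -- comments."""
    stripped = re.sub(r"^\s*(--[^\n]*\n\s*)*", "", sql)
    match = re.match(r"[A-Za-z]+", stripped)
    return match.group(0).upper() if match else ""


def is_allowed(sql: str, mode: AgentMode) -> bool:
    """Decide whether the agent may run this statement under its policy."""
    if mode is AgentMode.READ_WRITE:
        return True
    # Refuse anything that looks like multiple statements. This also rejects
    # semicolons inside string literals, which is the conservative choice.
    if ";" in sql.strip().rstrip(";"):
        return False
    return first_keyword(sql) in READ_ONLY_KEYWORDS
```

Allowlisting known-safe statements, rather than blocklisting writes, means anything the check doesn't recognize is refused by default. Even `SELECT` can have side effects through user-defined functions, so the read-only database role remains the real backstop.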

Gemini models in the data plane

Google Cloud also expanded Gemini model availability across products:

- Gemini 3 Flash (Preview) is now available in Vertex AI and Gemini Enterprise, and can be used in AlloyDB generative AI functions.

- AlloyDB supports Gemini 3.0 models in its generative AI functions.

The real operational story is: AI-assisted query generation and evaluation are becoming native in managed databases. That’s a big deal for performance management and developer productivity, because it closes the loop between “generate,” “test,” and “fix” right where queries run.

Vector + semantic capabilities are becoming default

Across the notes you’ll see repeated investment in vector search and embeddings:

- BigQuery autonomous embedding generation (Preview)

- BigQuery semantic search via AI.SEARCH (Preview)

- Cloud SQL for PostgreSQL “Vector assist” (Preview)

- AlloyDB AI vector search acceleration (GA)

This trend matters for cloud architecture: the “RAG stack” is consolidating. Instead of running separate vector databases, teams can increasingly keep vector workloads inside the data platforms they already manage—reducing operational sprawl.
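
To make “vector search” concrete, here is the core computation reduced to a brute-force cosine-similarity ranking in plain Python. This is illustrative only. Managed services such as AlloyDB AI and BigQuery use their own indexes and approximate search, and none of the names below (`top_k`, the toy 2-D embeddings) come from their APIs.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], corpus: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Exhaustively score every document and return the k most similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 2-D "embeddings"; real embeddings have hundreds of dimensions.
corpus = {"runbook": [1.0, 0.0], "postmortem": [0.7, 0.7], "invoice": [0.0, 1.0]}
print([doc for doc, _ in top_k([1.0, 0.1], corpus, k=2)])
```

An exhaustive scan like this is exactly what index-backed vector search exists to avoid at scale. That work is what moves into the database when the RAG stack consolidates.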

Infrastructure capacity planning is becoming more “AI-like”

AI workloads force you to think differently about capacity. GPUs, TPUs, and high-memory nodes behave like scarce inventory, not elastic commodity compute.

Compute reservations designed for AI workloads

Compute Engine now supports future reservation requests in calendar mode (GA) for GPU/TPU/H4D resources for up to 90 days.

This is one of those “boring” changes that can save a project. If you’ve ever had a training run blocked by unavailable GPUs, you know why.

How to use this in a way that actually helps:

- Reserve for known milestones: model pre-training, fine-tuning sprints, quarterly benchmarking.

- Tie reservations to cost reporting so teams can see the real cost of guaranteed capacity.

- Build a reservation playbook: who requests, who approves, how you release unused capacity.
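
The playbook can start as a small script over your own records. The sketch below assumes a hypothetical `ReservationRequest` record populated from your ticketing and usage data. It checks the 90-day calendar-mode limit from the release note and flags reservations whose utilization so far falls below a threshold you choose (0.5 is an arbitrary example). It does not call any Compute Engine API.

```python
from dataclasses import dataclass
from datetime import date

# The release note states calendar-mode future reservations cover up to 90 days.
# Assumption: the window is measured as (end - start) in whole days.
MAX_CALENDAR_DAYS = 90


@dataclass
class ReservationRequest:
    """Hypothetical record kept by your platform team, not a Compute Engine type."""
    team: str
    accelerator: str        # free-form label, e.g. "GPU" or "TPU"
    start: date
    end: date
    reserved_units: int     # number of accelerators reserved
    used_unit_hours: float  # accelerator-hours actually consumed, from your usage data


def window_days(req: ReservationRequest) -> int:
    return (req.end - req.start).days


def validate(req: ReservationRequest) -> list[str]:
    """Return a list of problems; an empty list means the request passes."""
    problems = []
    if req.end <= req.start:
        problems.append("end must be after start")
    elif window_days(req) > MAX_CALENDAR_DAYS:
        problems.append(f"window of {window_days(req)} days exceeds {MAX_CALENDAR_DAYS}")
    if req.reserved_units <= 0:
        problems.append("reserved_units must be positive")
    return problems


def utilization(req: ReservationRequest, as_of: date) -> float:
    """Fraction of reserved accelerator-hours used between start and as_of."""
    elapsed_days = (min(as_of, req.end) - req.start).days
    capacity_hours = req.reserved_units * elapsed_days * 24
    if capacity_hours <= 0:
        return 0.0
    return req.used_unit_hours / capacity_hours


def release_candidates(reqs: list[ReservationRequest], as_of: date,
                       threshold: float = 0.5) -> list[str]:
    """Teams whose already-started reservations are under-used and worth reviewing."""
    return [r.team for r in reqs if r.start < as_of and utilization(r, as_of) < threshold]
```

Running `validate` at request time and `release_candidates` on a schedule maps directly onto the “who requests” and “how you release unused capacity” steps of the playbook.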

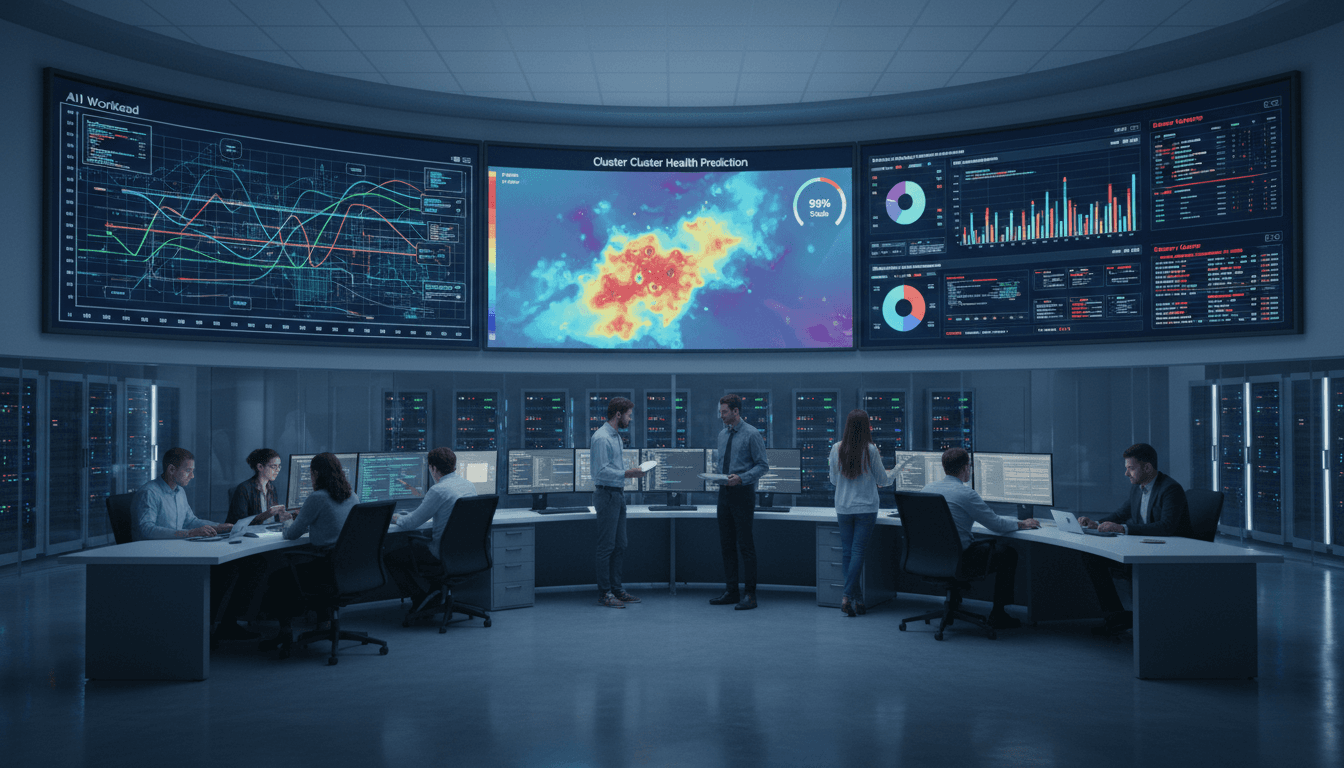

AI Hypercomputer: predicting node health

AI Hypercomputer adds node health prediction (GA) for AI-optimized GKE clusters to avoid scheduling on nodes likely to degrade in the next five hours.

That’s a direct example of cloud providers using predictive signals to improve reliability at the cluster level. In data center terms, it’s like proactive hardware triage—but exposed to you as a scheduling feature.

If you run large-scale training jobs, this is the kind of feature that reduces “mystery failures” that burn expensive GPU time.
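
GKE applies node health prediction on its own, so you don't need to write this. The scheduling idea is still easy to illustrate: exclude nodes whose predicted degradation falls inside the horizon, then place work on what remains. The `Node` fields and `pick_nodes` helper are hypothetical stand-ins, not the GKE or AI Hypercomputer interface.

```python
from dataclasses import dataclass
from typing import Optional

# The release note describes avoiding nodes likely to degrade in the next five hours.
HORIZON_HOURS = 5.0


@dataclass
class Node:
    """Hypothetical node view; real clusters expose this differently."""
    name: str
    free_accelerators: int
    # Hours until predicted degradation; None means nothing is predicted.
    predicted_degradation_in_hours: Optional[float] = None


def is_healthy(node: Node, horizon: float = HORIZON_HOURS) -> bool:
    eta = node.predicted_degradation_in_hours
    return eta is None or eta > horizon


def pick_nodes(nodes: list[Node], accelerators_needed: int,
               horizon: float = HORIZON_HOURS) -> Optional[list[str]]:
    """Greedily choose healthy nodes with the most free accelerators.

    Returns None when healthy capacity is insufficient, so the job waits
    instead of landing on a node expected to fail mid-run.
    """
    healthy = [n for n in nodes if is_healthy(n, horizon)]
    healthy.sort(key=lambda n: n.free_accelerators, reverse=True)
    chosen, remaining = [], accelerators_needed
    for node in healthy:
        if remaining <= 0:
            break
        chosen.append(node.name)
        remaining -= node.free_accelerators
    return chosen if remaining <= 0 else None
```

Returning `None` rather than falling back to an at-risk node reflects the trade-off this feature makes: a short scheduling delay is usually cheaper than a multi-hour training run failing partway through.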

MIG flexibility to maximize obtainability

Compute Engine also expanded managed instance group flexibility (including the ANY distribution shape) to improve resource obtainability.

The stance I’d take: any tool that increases obtainability without adding operational chaos is worth adopting, especially for batch and AI-adjacent workloads.

Security is getting more centralized—and more AI-aware

The security updates in these notes are not cosmetic. They point to two parallel moves:

- stronger centralized governance across distributed systems, and

- controls specifically designed for agentic AI.

API security across multi-gateway environments

Apigee Advanced API Security can now govern security posture across multiple projects, environments, and gateways using API hub.

That’s important because modern organizations rarely run APIs in one place. They run them across multiple gateways, regions, and sometimes multiple clouds.

Centralizing risk scores and security profiles is how you avoid this common failure mode:

“We secured the important APIs… we just can’t list them all.”
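
The first step toward centralized posture is simply being able to list every API across gateways and see where a security profile is missing. A minimal sketch, assuming you can export each gateway's inventory as a list of dicts with hypothetical `api` and `security_profile` keys. This is not the API hub data model.

```python
def find_gaps(inventories: dict[str, list[dict]]) -> tuple[list[str], list[tuple[str, str]]]:
    """Merge per-gateway inventories into one API list plus (api, gateway) gaps.

    `inventories` maps a gateway name to entries shaped like
    {"api": "orders", "security_profile": "default"}; the profile key may be absent.
    """
    all_apis = set()
    gaps = []
    for gateway, entries in inventories.items():
        for entry in entries:
            all_apis.add(entry["api"])
            # An API counts as covered only where this gateway applies a profile.
            if not entry.get("security_profile"):
                gaps.append((entry["api"], gateway))
    return sorted(all_apis), sorted(gaps)


inventories = {
    "apigee-prod": [
        {"api": "orders", "security_profile": "default"},
        {"api": "payments", "security_profile": "pci"},
    ],
    "edge-gw": [{"api": "orders"}, {"api": "legacy-export"}],
}
apis, gaps = find_gaps(inventories)
```

Reporting gaps per gateway rather than per API matters here: `orders` looks secured on the main gateway but is exposed without a profile on `edge-gw`, which is the failure mode described above.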

Model Context Protocol (MCP) becomes a first-class citizen

API hub now supports Model Context Protocol (MCP) as an API style, and BigQuery introduced a remote MCP server (Preview).

MCP matters because it’s the emerging pattern for how AI agents discover and use tools. The operational impact is governance:

- If tools are APIs, MCP is how agents catalog and call them.

- That means you need registry, approval, versioning, and monitoring for “agent tools,” not just human-facing APIs.

Cloud API Registry (Preview) adds to this story by focusing specifically on MCP servers and tools.
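
Treating agent tools like APIs implies a registry where a tool version must be approved and explicitly granted to an agent before it can be called. The in-memory `ToolRegistry` below is a hypothetical sketch of that gate, not the interface of Cloud API Registry or MCP. A real deployment would back it with persistent storage and audit logs.

```python
from dataclasses import dataclass, field


@dataclass
class ToolRegistry:
    """Hypothetical in-memory registry for agent tools (not a Google Cloud API)."""
    # tool name -> {version: approved?}
    versions: dict[str, dict[str, bool]] = field(default_factory=dict)
    # agent id -> tool names it may call
    grants: dict[str, set[str]] = field(default_factory=dict)

    def register(self, tool: str, version: str) -> None:
        # New versions start unapproved, so publishing alone never grants access.
        self.versions.setdefault(tool, {})[version] = False

    def approve(self, tool: str, version: str) -> None:
        if version not in self.versions.get(tool, {}):
            raise KeyError(f"{tool}@{version} is not registered")
        self.versions[tool][version] = True

    def grant(self, agent: str, tool: str) -> None:
        self.grants.setdefault(agent, set()).add(tool)

    def can_call(self, agent: str, tool: str, version: str) -> bool:
        """Allow only approved versions of tools explicitly granted to this agent."""
        approved = self.versions.get(tool, {}).get(version, False)
        return approved and tool in self.grants.get(agent, set())
```

Keeping approval per version means a tool update has to go through review again before agents can use it, which is the versioning discipline human-facing APIs already get.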

Model Armor and AI protection

Security Command Center updates highlight AI Protection and Model Armor integrations (some GA, some Preview depending on feature/tier).

This is one of the clearest signs that cloud security is adapting to a new risk category: prompt injection, data exfiltration via tools, and unsafe model interactions.

If you’re building agents, the practical checklist to start with is:

- Log prompts/responses (and decide retention)

- Sanitize user prompts and model outputs

- Control tool access with least privilege

- Monitor for abnormal tool-call patterns
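
For the last item, a simple starting point is comparing each tool's call count in the current window against that tool's own history. A minimal sketch, assuming you already aggregate per-window call counts from your logs. The threshold `k=3.0`, the minimum history length, and the rule that a never-before-seen tool gets flagged are illustrative choices, not a standard.

```python
import statistics


def is_anomalous(history: list[int], current: int, k: float = 3.0, min_history: int = 5) -> bool:
    """True if `current` sits more than k standard deviations above the history mean."""
    if len(history) < min_history:
        return False  # not enough baseline to judge
    mean = statistics.fmean(history)
    # Floor the spread at one call so a perfectly flat baseline doesn't flag every +1.
    spread = max(statistics.pstdev(history), 1.0)
    return (current - mean) / spread > k


def flag_tools(history_by_tool: dict[str, list[int]], current_by_tool: dict[str, int],
               k: float = 3.0) -> list[str]:
    """Return tools whose call count in the current window looks abnormal."""
    flagged = []
    for tool, count in sorted(current_by_tool.items()):
        history = history_by_tool.get(tool, [])
        # A tool this agent has never called before is worth a look on its own.
        if not history or is_anomalous(history, count, k):
            flagged.append(tool)
    return flagged
```

A sudden burst of reads, or a first-ever call to a permissions tool, is often the visible trace of prompt injection or tool-based exfiltration, which is why volume and novelty are reasonable first signals.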

The release notes also point to a bigger truth: agent security is becoming a platform feature, not an app-only problem.

Reliability upgrades that look small—until they save you

A few updates are easy to skim past, but they can create real operational improvements.

Cloud Load Balancing rejecting non-compliant HTTP methods

Starting December 17, 2025, non-RFC-compliant request methods are rejected by a first-layer Google Front End before traffic reaches your load balancer/backends.

That typically means:

- slightly fewer weird edge-case errors in your app

- cleaner error-rate signals

It’s also a reminder that providers are increasingly enforcing standards at the edge, which reduces noise for operations teams.
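
The release note doesn't spell out the exact rule, but RFC 9110 defines a request method as a `token`, built from a fixed set of characters (`tchar`). Assuming the front end enforces that grammar, a check like the one below can scan your own client code or access logs for methods that would now be rejected. `non_compliant` is a hypothetical helper name.

```python
import string

# RFC 9110: method = token, token = 1*tchar, where tchar is these
# punctuation characters plus ASCII letters and digits.
TCHAR = frozenset("!#$%&'*+-.^_`|~" + string.ascii_letters + string.digits)


def is_valid_method(method: str) -> bool:
    """True if `method` is a syntactically valid HTTP method token."""
    return len(method) > 0 and all(ch in TCHAR for ch in method)


def non_compliant(methods: list[str]) -> list[str]:
    """Distinct method strings (e.g. pulled from access logs) that fail the grammar."""
    return sorted({m for m in methods if not is_valid_method(m)})
```

Custom methods such as `PROPFIND` are still valid tokens. Only malformed ones, like those containing spaces, parentheses, or non-ASCII characters, fail this check.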

Single-tenant Cloud HSM (GA)

Single-tenant Cloud HSM is now generally available in several regions.

If you’re in regulated industries or handling high-assurance key material, dedicated HSM instances with quorum approval can simplify compliance conversations. It’s not cheap, but it’s often cheaper than the operational overhead of bespoke on-prem HSM fleets.

Cloud SQL enhanced backups (GA)

Cloud SQL enhanced backups now integrate with Backup and DR, and include features like:

- centralized backup management projects

- enforced retention

- granular scheduling

- PITR even after instance deletion

That’s the direction ops teams want: fewer ad-hoc backup scripts, more policy-driven protection.
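
Policy-driven protection is easier to keep honest with a periodic audit. The sketch below assumes you've exported each instance's backup settings into a hypothetical `BackupConfig` record. The 30-day minimum is an example organizational policy, not a Cloud SQL default, and the fields are not the Backup and DR API.

```python
from dataclasses import dataclass

# Example organizational policy, not a Cloud SQL or Backup and DR default.
ORG_MIN_RETENTION_DAYS = 30


@dataclass
class BackupConfig:
    """Hypothetical export of one instance's backup settings."""
    instance: str
    retention_days: int
    pitr_enabled: bool
    in_central_project: bool  # backups managed from the central backup project


def audit(configs: list[BackupConfig],
          min_retention: int = ORG_MIN_RETENTION_DAYS) -> dict[str, list[str]]:
    """Map each non-compliant instance to the reasons it fails the policy."""
    findings: dict[str, list[str]] = {}
    for c in configs:
        issues = []
        if c.retention_days < min_retention:
            issues.append(f"retention {c.retention_days}d is below {min_retention}d")
        if not c.pitr_enabled:
            issues.append("PITR disabled")
        if not c.in_central_project:
            issues.append("not managed from the central backup project")
        if issues:
            findings[c.instance] = issues
    return findings
```

An empty result is the goal. Anything that shows up is a candidate for moving off ad-hoc scripts and onto the centrally managed, retention-enforced setup.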

What to do next (so these updates become real outcomes)

It’s tempting to treat release notes as “FYI.” If you want this wave of AI-driven cloud operations to actually reduce toil and cost, you need to make it part of your platform roadmap.

A practical 30-day plan

- Pick one AI-embedded workflow to pilot
  - Example: a data agent for read-only operational analytics (slow queries, top tables, workload spikes).

- Formalize your AI tool governance
  - Decide how you’ll register and approve agent tools (MCP is a strong hint of where the industry is going).

- Lock in capacity strategy for Q1 2026
  - If you run GPU workloads, start using future reservations for known training/fine-tuning windows.

- Upgrade your “AI security baseline”
  - Treat prompt/output sanitization and tool access controls as mandatory, not optional.

The broader theme in this “AI in Cloud Computing & Data Centers” series is simple: cloud operations is becoming model-assisted operations. Providers are baking intelligence into scheduling, database interaction, security posture management, and even edge request handling.

If you’re building a platform strategy for 2026, the real question isn’t whether you’ll use AI in the cloud. It’s whether you’ll use it in a way that measurably improves performance, reliability, and energy-efficient resource allocation—or whether it becomes just another surface area to maintain.