Stargate’s AI datacenter expansion signals more capacity, lower latency, and steadier costs for U.S. digital services. Plan for scale now.

AI Datacenter Expansion: What Stargate Signals Next

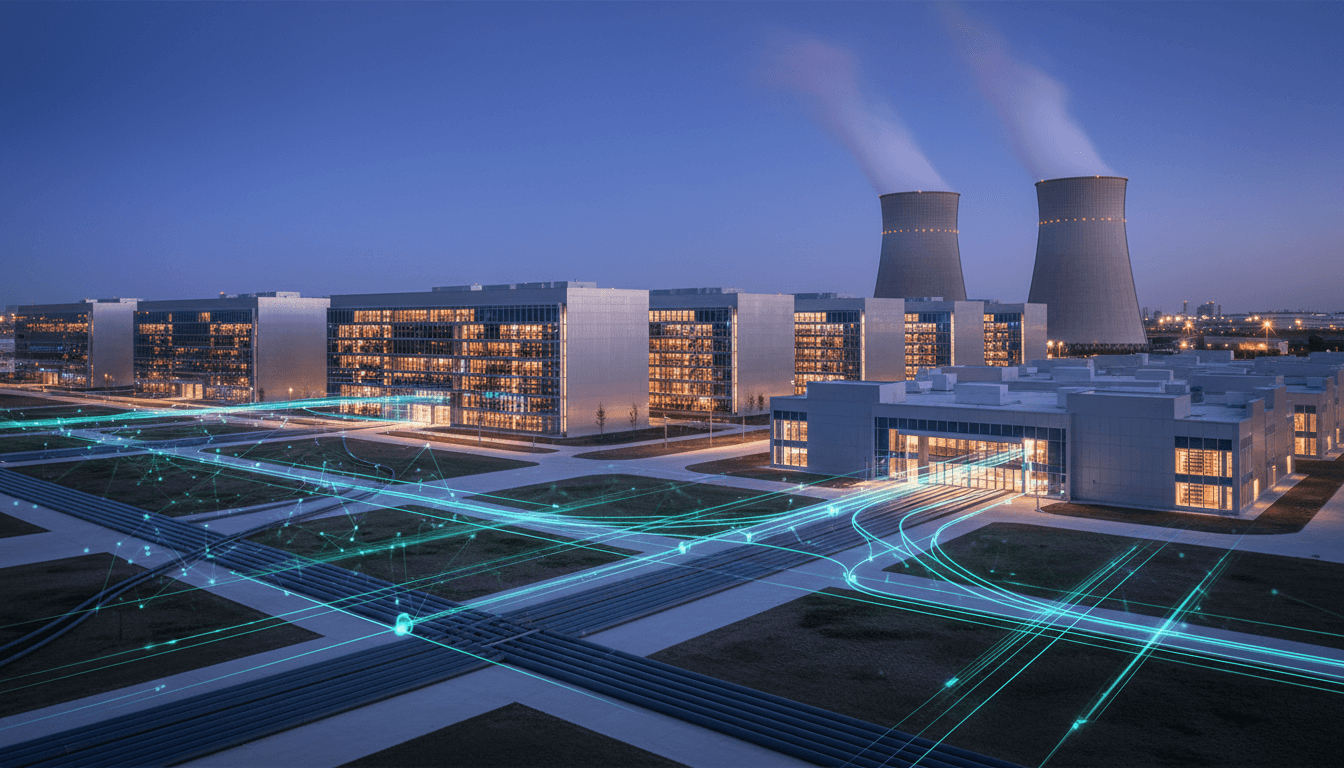

AI infrastructure is becoming a competitive advantage, not a back-office detail. When OpenAI, Oracle, and SoftBank expand an AI datacenter initiative like Stargate with additional sites, they’re not just adding capacity—they’re laying track for the next wave of AI-powered digital services in the United States.

What’s tricky (and easy to miss) is that datacenters aren’t just “bigger clouds.” AI workloads behave differently: they’re spiky, GPU-hungry, network-sensitive, and extremely expensive when they’re idle. If you run a SaaS product, a digital agency, a managed service provider, or a fast-growing startup, infrastructure decisions upstream will shape what you can ship downstream—features, latency, reliability, and ultimately pricing.

This post breaks down what the Stargate expansion likely means for AI in cloud computing and data centers, why the OpenAI–Oracle partnership matters for U.S. digital services, and how operators and builders can plan for the realities of AI infrastructure in 2026.

Stargate’s expansion matters because AI capacity is the bottleneck

The direct takeaway: AI datacenter expansion is about removing the constraint that most limits AI product growth—compute. Models are getting larger, inference demand is exploding, and customers now expect AI features inside “normal” software. That combination turns compute into the new scarcity.

In practical terms, new datacenter sites reduce risk across three dimensions:

- Capacity risk: shortages of GPU/accelerator availability that delay product launches

- Performance risk: latency and throughput issues when workloads are forced into distant regions

- Cost risk: price volatility when demand spikes and supply can’t catch up

For U.S.-based digital services, this is a big deal because a lot of the AI experience customers notice—fast responses, stable performance during peak usage, fewer timeouts—depends on whether inference capacity is actually there.

AI workloads push data centers differently than traditional cloud

A typical web app scales with more CPU, more memory, and more instances. AI systems scale with different physics:

- Accelerators are the unit of scale. GPUs/TPUs/other accelerators are the choke point, not vCPUs.

- Network fabric matters. Training and high-throughput inference care deeply about interconnect bandwidth and topology.

- Storage is no longer “just storage.” Feeding accelerators requires high IOPS and strong throughput; slow data pipelines waste expensive compute.

- Power and cooling become product constraints. AI racks drive power density higher, and that changes which sites can be brought online quickly.

Here’s the sentence that tends to be true in the field: If your accelerators are waiting on data or network, you’re paying premium prices for idle silicon.
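To put a rough number on that, here is a minimal arithmetic sketch. The hourly rate and utilization figures are made-up placeholders, not quoted prices; the point is only that the effective price of useful work climbs as utilization drops.

```python
def idle_cost_per_hour(hourly_rate: float, utilization: float) -> float:
    """Dollars per accelerator-hour paid for time spent waiting, not computing."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return hourly_rate * (1.0 - utilization)


def effective_rate(hourly_rate: float, utilization: float) -> float:
    """Price actually paid per hour of useful accelerator work."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be greater than 0 and at most 1")
    return hourly_rate / utilization


# Hypothetical numbers: a $4.00/hour accelerator that is busy half the time.
print(idle_cost_per_hour(4.0, 0.5))  # 2.0 dollars per hour spent idle
print(effective_rate(4.0, 0.5))      # 8.0 dollars per useful hour
```

At 50% utilization the same hardware effectively costs twice its list price per unit of work, which is why data pipelines and network fabric show up on the bill.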

Why OpenAI + Oracle is a signal for “AI as a utility layer”

The key point: partnerships between model providers and enterprise cloud operators are turning AI into a utility layer—like storage and compute—but with stricter guarantees.

Oracle’s enterprise footprint, compliance posture, and experience running large-scale infrastructure make it a natural match for organizations that want AI power without building their own GPU farms. Pair that with OpenAI’s model ecosystem and you get a blueprint many U.S. digital service providers will follow: buy reliable AI capacity the same way you buy database capacity.

This matters because the AI market is shifting away from “cool demos” toward operational AI:

- AI features embedded in SaaS workflows (support, sales enablement, document processing)

- AI copilots that must respond consistently under load

- Industry-specific agents that require data residency and auditability

When infrastructure expands, it reduces friction for these use cases to move from pilot to production.

What the SoftBank angle suggests about scale and timelines

SoftBank’s involvement usually points to a specific thesis: go big early, because infrastructure lead times are long and demand compounds. Building AI data center sites isn’t like renting more servers. It requires power contracts, cooling design, specialized hardware procurement, and long commissioning cycles.

So when you see a multi-party initiative expanding sites, it’s reasonable to interpret it as planning for:

- higher baseline inference demand (not just occasional bursts)

- multi-region footprints for reliability and latency

- long-term capacity commitments that make unit economics workable

For buyers of AI services—SaaS leaders, CTOs, platform owners—this is good news. It increases the odds that “we can’t get enough GPUs” stops being a recurring planning crisis.

Five practical impacts for SaaS and digital service providers in the U.S.

Here’s the part that should change how you plan. More AI datacenter sites generally mean more predictable AI product delivery—if you design for it.

1) Lower latency becomes realistic for mainstream AI features

If capacity is closer to users, you can keep more requests in-region. For customer-facing AI (chat, search, content generation, real-time recommendations), shaving even 200–400 ms off a response is something users notice.

Actionable move: instrument latency by geography and tie it to conversion, retention, or resolution time. If AI latency doesn’t show up on a business dashboard, it won’t get budget.
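One way to start is a per-region p95 rollup that you can later join against conversion or resolution-time data. This is a minimal sketch using only the Python standard library; the `(region, latency_ms)` sample shape and the region names are assumptions for illustration, not a specific monitoring product's format.

```python
from collections import defaultdict
from statistics import quantiles


def p95_by_region(samples):
    """Return {region: p95 latency in ms} from (region, latency_ms) pairs."""
    by_region = defaultdict(list)
    for region, latency_ms in samples:
        by_region[region].append(latency_ms)

    result = {}
    for region, values in by_region.items():
        if len(values) < 2:
            # Too few points for a percentile; report the single value.
            result[region] = float(values[0])
        else:
            # n=100 yields 99 cut points; index 94 is the 95th percentile.
            result[region] = quantiles(values, n=100, method="inclusive")[94]
    return result


# Hypothetical samples: (region, latency in milliseconds)
samples = [("us-east", 310), ("us-east", 290), ("us-east", 900), ("us-west", 450)]
print(p95_by_region(samples))
```

Tail latency (p95 or p99) is usually a better dashboard number than the mean, because slow outliers are what users remember.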

2) Reliability improves, but only if you build multi-region behavior

More sites don’t automatically make your product resilient. You still need architecture that can tolerate region-level degradation.

Actionable move:

- design failover for inference endpoints (active-active or active-passive)

- build graceful degradation (smaller model, cached responses, or “AI temporarily unavailable” flows)

- keep idempotency and retry behavior sane to avoid request storms

A hard truth: AI outages are often not model failures at all. They tend to trace back to capacity, networking, or dependency failures.
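The three moves above can be sketched in a few lines. This is an illustration under assumptions, not a production client: `InferenceUnavailable` and the backend callables are hypothetical stand-ins for whatever your inference SDK raises and exposes.

```python
import random
import time


class InferenceUnavailable(Exception):
    """Hypothetical error a backend raises when it cannot serve right now."""


FALLBACK_MESSAGE = "AI is temporarily unavailable. Please try again shortly."


def call_with_fallback(prompt, backends, max_attempts=2, base_delay=0.2,
                       sleep=time.sleep):
    """Try each backend in order with bounded, jittered retries.

    `backends` is an ordered list of callables, e.g. primary region,
    secondary region, then a smaller model. If every backend fails,
    return a graceful "unavailable" message instead of raising.
    """
    for backend in backends:
        for attempt in range(max_attempts):
            try:
                return backend(prompt)
            except InferenceUnavailable:
                if attempt + 1 < max_attempts:
                    # Full jitter keeps many clients from retrying in lockstep,
                    # which is what turns a blip into a request storm.
                    sleep(random.uniform(0, base_delay * 2 ** attempt))
    return FALLBACK_MESSAGE
```

Capping attempts per backend matters as much as the backoff: unbounded retries against a degraded region add load exactly when it can least absorb it.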

3) Cost predictability gets better, but unit economics still need discipline

Even with more supply, inference can dominate COGS if you treat models like infinite resources. The winners in 2026 will be teams that do cost engineering early.

Actionable cost controls that actually work:

- route by complexity: small/cheap model for easy tasks, larger model only when needed

- cache aggressively: repeated questions, repeated summarizations, repeated retrieval results

- batch where possible: async processing for non-interactive tasks (reports, enrichment)

- measure cost per successful outcome, not cost per request
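Routing and caching can start very simply. The sketch below assumes a two-tier setup with tiers named `"small"` and `"large"`, a keyword-and-length heuristic for "complexity," and a `call_model(tier, task)` function you supply; all of these are illustrative placeholders, and a real router would more likely use a classifier or past evaluation results.

```python
from functools import lru_cache

# Hypothetical markers of a task that deserves the larger model.
HARD_MARKERS = ("analyze", "compare", "multi-step")


def pick_model(task: str, max_small_words: int = 200) -> str:
    """Route short, simple tasks to the small tier; everything else to large."""
    is_long = len(task.split()) > max_small_words
    is_hard = any(marker in task.lower() for marker in HARD_MARKERS)
    return "large" if (is_long or is_hard) else "small"


def make_cached_router(call_model, cache_size: int = 1024):
    """Wrap call_model(tier, task) with routing plus an in-memory LRU cache."""

    @lru_cache(maxsize=cache_size)
    def answer(task: str) -> str:
        return call_model(pick_model(task), task)

    return answer
```

Exact-match caching only helps with literally repeated inputs, so normalizing whitespace and casing before the lookup is a cheap next step.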

4) Data residency and enterprise procurement get simpler

More U.S. sites can reduce cross-border processing concerns and support stricter governance needs.

Actionable move: map your AI data flows now:

- what data leaves your system?

- where is it processed?

- what gets stored, for how long?

- what can you redact or tokenize before inference?

Teams that answer these cleanly win deals faster.
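For the last question, a minimal redaction pass might look like the sketch below. The two patterns (email addresses and U.S. Social Security numbers) are deliberately simple examples and will miss plenty of real-world PII; treat this as a starting shape, not a compliance control.

```python
import re

# Illustrative patterns only; real PII detection needs broader coverage.
PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
]


def redact(text: str):
    """Replace sensitive values with placeholder tokens before inference.

    Returns (redacted_text, mapping), where mapping lets you restore the
    original values in the model's response on your side of the boundary.
    """
    mapping = {}   # token -> original value
    reverse = {}   # original value -> token, so repeats reuse one token

    def substitute(kind):
        def _sub(match):
            value = match.group(0)
            if value not in reverse:
                token = f"<{kind}_{len(mapping) + 1}>"
                mapping[token] = value
                reverse[value] = token
            return reverse[value]
        return _sub

    for kind, pattern in PATTERNS:
        text = pattern.sub(substitute(kind), text)
    return text, mapping
```

Keeping the mapping inside your own system means the model provider only ever sees the placeholder tokens.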

5) New infrastructure pushes competition toward “who builds the better product,” not “who got GPUs”

When compute is scarce, well-funded companies win by outbidding everyone else. As capacity expands, differentiation moves back to what customers feel: workflow design, accuracy on real tasks, UX, integrations, and trust.

Actionable move: stop treating AI as a feature checklist. Treat it as a product surface:

- define your AI acceptance tests (what “good” looks like)

- create human-in-the-loop pathways for high-risk outputs

- invest in evaluation harnesses tied to real user tasks
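An evaluation harness does not have to be elaborate to be useful. Here is a minimal sketch, assuming a "must include these terms" rubric and a `model` callable that maps a prompt to text; both are simplifications for illustration, and most teams will want richer scoring for their own tasks.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple


@dataclass(frozen=True)
class Example:
    prompt: str
    must_include: Tuple[str, ...]  # terms a "good" answer has to mention


def passes(output: str, example: Example) -> bool:
    """Acceptance test: every required term appears (case-insensitive)."""
    lowered = output.lower()
    return all(term.lower() in lowered for term in example.must_include)


def pass_rate(model: Callable[[str], str], examples: Iterable[Example]) -> float:
    """Fraction of examples whose output passes the acceptance test."""
    examples = list(examples)
    if not examples:
        raise ValueError("evaluation set is empty")
    passed = sum(passes(model(ex.prompt), ex) for ex in examples)
    return passed / len(examples)
```

Running the same set before and after every prompt or model change turns "it feels better" into a number you can track.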

How this fits into AI in cloud computing and data centers

The larger theme of this series is simple: AI is forcing cloud infrastructure to evolve—operationally and economically. The Stargate expansion is one visible marker of that evolution.

Data center design is becoming AI-aware

AI-ready sites prioritize:

- higher power density per rack

- advanced cooling approaches and airflow management

- fast storage tiers and high-throughput data pipelines

- low-latency, high-bandwidth network fabrics

If you run infrastructure (or buy a lot of it), these constraints will show up as real product questions: “Can we deploy in-region?” “Can we hit our SLOs?” “Can we afford this at scale?”

Cloud workload management will increasingly be model-aware

Traditional autoscaling reacts to CPU or request counts. AI-era autoscaling must consider:

- token throughput

- queue depth

- accelerator utilization

- model routing decisions (small vs large)

If you’re building SaaS, this is a strategic advantage: teams that make inference observability first-class will ship faster and waste less.
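As a rough illustration of what "model-aware" can mean, the sketch below sizes a replica pool from queued tokens and per-replica token throughput rather than CPU. The parameter names, the 50% utilization threshold, and the drain-time target are all assumptions made for the example, not values from any particular autoscaler.

```python
import math


def desired_replicas(queued_tokens: int,
                     tokens_per_sec_per_replica: float,
                     target_drain_seconds: float,
                     accelerator_utilization: float,
                     current_replicas: int,
                     min_replicas: int = 1,
                     max_replicas: int = 64) -> int:
    """Replicas needed to drain the token queue within the target time.

    Scale-down is held back while accelerators are still busy, so a
    briefly empty queue does not trigger a premature shrink.
    """
    if tokens_per_sec_per_replica <= 0 or target_drain_seconds <= 0:
        raise ValueError("throughput and drain target must be positive")

    capacity_per_replica = tokens_per_sec_per_replica * target_drain_seconds
    needed = math.ceil(queued_tokens / capacity_per_replica)

    if needed < current_replicas and accelerator_utilization >= 0.5:
        needed = current_replicas  # still busy: hold steady

    return max(min_replicas, min(max_replicas, needed))


# Hypothetical load: 120k queued tokens, 2k tokens/sec per replica, 10 s target.
print(desired_replicas(120_000, 2_000, 10, 0.9, current_replicas=4))  # 6
```

Request counts alone would miss this case entirely, since ten long-context requests can carry more work than a thousand short ones.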

What should teams do now? A 30-day checklist

If you want to benefit from AI infrastructure expansion instead of just reading about it, do these within a month:

- Baseline your AI COGS: cost per 1,000 actions (tickets summarized, calls transcribed, pages extracted).

- Add model routing: implement at least a two-tier model strategy (fast/cheap vs heavy/accurate).

- Create an evaluation set: 100–300 real examples from production and a scoring rubric.

- Harden reliability: retries, timeouts, backpressure, and fallback responses.

- Document data handling: what gets sent, stored, logged, and redacted.

This is the work most teams postpone—and it’s exactly what separates profitable AI features from expensive experiments.
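The first checklist item is mostly arithmetic. A minimal sketch, with made-up spend and volume figures, shows why counting only successful actions changes the number you report:

```python
def cost_per_thousand(total_cost_usd: float, actions: int) -> float:
    """Cost in dollars per 1,000 actions (e.g. tickets summarized)."""
    if actions <= 0:
        raise ValueError("actions must be positive")
    return total_cost_usd * 1000 / actions


# Hypothetical month: $180 of inference spend, 50,000 requests,
# of which 45,000 produced a summary the user accepted.
per_request = cost_per_thousand(180.0, 50_000)
per_success = cost_per_thousand(180.0, 45_000)
print(per_request)  # 3.6 dollars per 1,000 requests
print(per_success)  # 4.0 dollars per 1,000 successful outcomes
```

The gap between the two figures is the cost of failed or discarded outputs, which is the part that routing, caching, and evaluation work is meant to shrink.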

The U.S. digital economy runs on infrastructure—AI makes it obvious

More AI datacenter sites for Stargate don’t just help big players. They raise the ceiling for everyone building digital services: faster inference, more predictable capacity, and better regional performance. The companies that benefit most won’t be the ones who “use AI.” They’ll be the ones who engineer for AI reality: latency, cost, reliability, and governance.

If you’re planning your 2026 roadmap, treat AI infrastructure like you treat payments or identity: it’s foundational, it affects every workflow, and customers notice when it breaks. Where do you want your product to sit on that curve—scrambling for capacity, or designing for scale while the infrastructure catches up?