Micro1’s leap to $100M ARR spotlights booming demand for AI data training. Here’s what it means for media AI, cloud costs, and vendor selection.

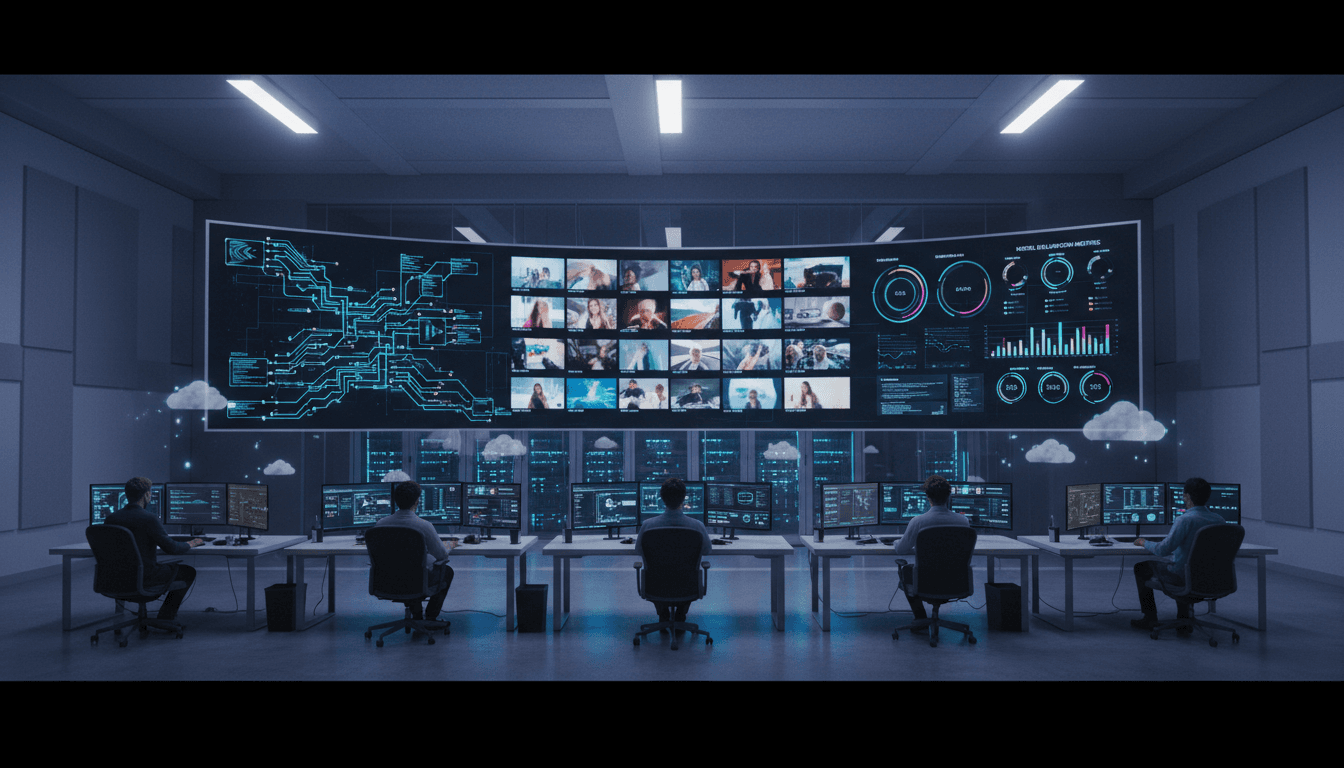

Micro1’s $100M ARR Surge: What It Signals for Media AI

Micro1 says it crossed $100 million in annual recurring revenue (ARR) after starting this year at roughly $7 million ARR. That kind of jump isn’t a “nice quarter.” It’s a market signal.

If you work in media and entertainment—streaming, gaming, sports, news, studios—this matters for a simple reason: data training is becoming a bigger line item than many teams planned for, and the vendors that make training data faster, safer, and more scalable are getting paid.

This post is part of our AI in Cloud Computing & Data Centers series, so I’m going to frame Micro1’s momentum through the infrastructure lens: what this growth says about demand for AI data training platforms, how it changes cloud workload planning, and what media leaders should do next.

Micro1’s ARR jump is a demand signal for training data

Micro1’s claimed move from ~$7M ARR to $100M+ ARR in a single year points to one thing: enterprises are spending aggressively on the “unsexy” layer of AI—data labeling, data training operations, and human-in-the-loop QA.

AI teams can’t ship reliable models on prompts and vibes. They need:

- Large volumes of curated training examples

- Tight feedback loops (label → train → evaluate → relabel)

- Governance and auditability (who labeled what, when, and why)

- Consistent quality across languages, regions, and content types

This is where Micro1 and other Scale AI competitors tend to sit: managed training data pipelines that turn raw content into model-ready datasets.

Why media & entertainment feels this shift earlier than other industries

Media companies run into messy edge cases faster than teams building, say, internal document search.

A recommendation model for a streaming service has to understand (and continuously re-learn):

- New releases and fast-changing catalogs

- Local regulations and cultural context

- Parental controls and sensitive content flags

- Viewer intent that changes by device, household, and time of day

That’s why training data work doesn’t plateau. It compounds. Every new format (short clips, live sports micro-highlights, interactive ads) creates new labeling and evaluation needs.

Snippet-worthy take: In media AI, the model isn’t the hard part. Keeping the training data pipeline current is.

The hidden engine behind recommendations, search, and content ops

The fastest way to understand Micro1’s growth is to look at where training data spend is rising inside media orgs: personalization and automation at scale.

Recommendation engines: the “data flywheel” is real (and expensive)

Modern recommendation systems increasingly blend classical ranking with generative and multimodal signals:

- Text embeddings from titles, synopses, subtitles

- Vision signals from thumbnails and frames

- Audio signals from dialogue and music cues

- Behavioral sequences (session-level intent)

Each signal needs ground truth to stay stable—especially after model updates.

Teams end up funding ongoing work like:

- Preference tagging for cold-start titles

- “Why was this recommended?” explanation labels

- Counterfactual evaluations (did this change harm niche audiences?)

If you’re rolling out a new recommender model in Q1 2026, expect the data program to become its own product.
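One way to make the "counterfactual evaluation" bullet concrete is inverse propensity scoring (IPS), a standard off-policy estimator: reweight logged impressions by the inverse of the probability the production recommender gave them, then estimate how a candidate model would have fared in each audience segment. This is an illustrative sketch only. It assumes the logs record that propensity, the candidate is a deterministic function of a simplified context string, and reward is a single engagement number; `LoggedImpression`, the segment names, and the 5% tolerance are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class LoggedImpression:
    segment: str       # audience segment, e.g. "anime_fans" (hypothetical)
    context: str       # what the policy conditions on, simplified to a string
    action: str        # title the production recommender actually showed
    propensity: float  # probability the production policy gave that title
    reward: float      # observed outcome, e.g. 1.0 if the viewer started the title

def logged_reward_by_segment(logs: List[LoggedImpression]) -> Dict[str, float]:
    """Average observed reward of the current (logging) policy, per segment."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for imp in logs:
        totals[imp.segment] += imp.reward
        counts[imp.segment] += 1
    return {seg: totals[seg] / counts[seg] for seg in counts}

def ips_by_segment(logs: List[LoggedImpression],
                   new_policy: Callable[[str], str]) -> Dict[str, float]:
    """IPS estimate of a deterministic candidate policy's reward, per segment."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for imp in logs:
        counts[imp.segment] += 1
        # Keep only impressions where the candidate would have shown the same title,
        # reweighted by 1/propensity to undo the logging policy's selection bias.
        if new_policy(imp.context) == imp.action:
            totals[imp.segment] += imp.reward / imp.propensity
    return {seg: totals[seg] / counts[seg] for seg in counts}

def harmed_segments(logs: List[LoggedImpression], new_policy: Callable[[str], str],
                    max_relative_drop: float = 0.05) -> List[str]:
    """Segments whose estimated reward falls more than max_relative_drop below today's."""
    baseline = logged_reward_by_segment(logs)
    estimate = ips_by_segment(logs, new_policy)
    return sorted(
        seg for seg, base in baseline.items()
        if base > 0 and (base - estimate[seg]) / base > max_relative_drop
    )
```

IPS is unbiased under these assumptions but can be very noisy for small segments, which are exactly the niche audiences in question, so a flag is better treated as a prompt for an online test than as a verdict.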

Content moderation and brand safety: accuracy beats volume

Media businesses don’t get to treat safety as an afterthought. Advertisers and regulators won’t allow it, and audiences won’t forgive it.

Training data vendors that can support:

- Policy-aligned labels (your rules, not generic internet rules)

- Multi-lingual nuance

- High-precision adjudication workflows

…are positioned to win large contracts.
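A high-precision adjudication workflow typically collects several independent labels per item and auto-accepts only consensus. A minimal sketch, assuming a majority-vote rule with a hypothetical 75% agreement threshold and string policy labels; anything that misses the bar goes to an expert review queue instead of into the training set.

```python
from collections import Counter
from typing import Dict, List, Tuple

def adjudicate(labels_by_item: Dict[str, List[str]],
               min_agreement: float = 0.75) -> Tuple[Dict[str, str], List[str]]:
    """Split items into auto-accepted consensus labels and an escalation queue."""
    accepted: Dict[str, str] = {}
    escalate: List[str] = []
    for item_id, labels in labels_by_item.items():
        if not labels:
            escalate.append(item_id)  # no votes at all: send to a reviewer
            continue
        top_label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            accepted[item_id] = top_label  # strong consensus: safe to train on
        else:
            escalate.append(item_id)       # contested: needs expert adjudication
    return accepted, escalate
```

Raising `min_agreement` trades throughput for precision: more items land in the escalation queue, but fewer contested labels reach the model.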

Operational automation: where ROI shows up first

I’ve found media teams often get the quickest wins by training models around workflows, not “creative magic.” Examples:

- Automated metadata enrichment (cast, themes, moods)

- Ad break detection and scene segmentation

- Sports highlight clipping and summarization

- Archive search across footage libraries

All of these require high-quality supervised datasets and evaluation sets. That’s a direct on-ramp to vendors building scalable data training solutions.

What this means for cloud computing and data center strategy

Micro1’s ARR story isn’t just a venture headline. It’s also a cloud capacity planning headline.

When data training accelerates, three infrastructure patterns show up immediately:

1) Training data pipelines become always-on workloads

Many orgs still budget AI as periodic spikes: “We’ll train a model this quarter.”

The reality in 2025–2026 is closer to continuous training and evaluation:

- Daily dataset refreshes

- Frequent regression testing

- Ongoing annotation for new content types

- Parallel experiments across regions

This changes how you use cloud infrastructure:

- You need predictable baseline compute for ETL and feature generation

- You need burst capacity for retraining and backfills

- You need storage tiering because raw media is huge and expensive

2) GPU is only part of the bill—data movement hurts more

Media datasets are large, and moving them is costly. The bills that surprise teams:

- Egress fees when data crosses clouds/regions

- Repeated reads/writes during preprocessing

- Duplicate dataset versions because governance requires retention

A practical shift I’m seeing: teams treat data locality as a first-class AI design constraint.

If your labeling vendor and your training environment live far apart (network-wise), you’ll feel it in both time-to-train and spend.
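A back-of-the-envelope model shows why locality matters. This sketch compares reading a dataset across a region or cloud boundary on every pass with copying it across once a month and storing a second copy next to the compute. The per-GB prices are placeholders, not any provider's actual rates, and the model deliberately ignores request fees and incremental refreshes.

```python
def per_pass_egress_usd(dataset_gb: float, passes_per_month: int,
                        egress_usd_per_gb: float) -> float:
    """Monthly cost if every preprocessing/training pass reads the data remotely."""
    return dataset_gb * passes_per_month * egress_usd_per_gb

def replicate_once_usd(dataset_gb: float, egress_usd_per_gb: float,
                       storage_usd_per_gb_month: float) -> float:
    """Monthly cost of copying the data across once, then storing a local copy."""
    return dataset_gb * (egress_usd_per_gb + storage_usd_per_gb_month)

def breakeven_passes(egress_usd_per_gb: float, storage_usd_per_gb_month: float) -> float:
    """Passes per month above which keeping a co-located copy is cheaper."""
    return (egress_usd_per_gb + storage_usd_per_gb_month) / egress_usd_per_gb
```

For example, with placeholder rates of $0.02/GB egress and $0.02/GB-month storage, reading 50 TB remotely four times a month costs about $4,000, while co-locating a copy costs about $2,000; any schedule above two passes a month favors locality.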

3) Governance pressure pushes hybrid and private workflows

Media companies deal with:

- Licensing constraints (who can view the footage)

- Talent/union considerations in some markets

- Contractual restrictions on training use

- Customer privacy obligations for personalization data

This is pushing more hybrid cloud architectures:

- Sensitive raw assets stay in a private environment

- Derived features and embeddings move to cloud

- Annotators work through controlled portals with audit logs

The vendors that grow fastest will support these patterns without turning setup into a six-month integration project.
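The hybrid split described above can be written down as a default-deny placement rule. This is a hypothetical sketch: the asset kinds, the `sensitive` flag, and the two zones are assumptions standing in for whatever your rights and privacy review actually decides.

```python
from dataclasses import dataclass
from enum import Enum

class Zone(Enum):
    PRIVATE = "private"  # on-prem or private cloud with licensing controls
    CLOUD = "cloud"      # public cloud for scalable preprocessing and training

@dataclass(frozen=True)
class Asset:
    asset_id: str
    kind: str        # e.g. "raw_video", "embedding" (hypothetical taxonomy)
    sensitive: bool  # set by rights/privacy review, e.g. unreleased footage or viewer data

RAW_KINDS = {"raw_video", "raw_audio", "viewer_events"}
DERIVED_KINDS = {"embedding", "features", "metadata"}

def place(asset: Asset) -> Zone:
    """Decide where an asset may live under a hypothetical hybrid policy."""
    if asset.kind in DERIVED_KINDS:
        return Zone.CLOUD   # derived features and embeddings may move to cloud
    if asset.kind in RAW_KINDS and not asset.sensitive:
        return Zone.CLOUD   # non-sensitive raw assets (e.g. public trailers) may leave
    return Zone.PRIVATE     # sensitive raw assets and unknown kinds stay private
```

Unknown asset kinds fall through to the private zone, so a new data type has to be classified explicitly before it can leave.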

If you’re evaluating AI data training vendors, focus on these six questions

Micro1’s positioning as a Scale AI competitor highlights how crowded (and confusing) this category is getting. The trap is choosing based on pitch decks instead of operational fit.

Here are six questions that separate “works in a demo” from “works in production”—especially for media and entertainment.

1) Can they prove label quality with measurable SLAs?

Ask for quality reporting that includes:

- Inter-annotator agreement (by task and language)

- Adjudication rates and turnaround times

- Drift monitoring (quality over time)

If the vendor can’t quantify quality, you’ll end up paying twice: once for labels, then again for relabeling.
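Inter-annotator agreement between two annotators is commonly reported as Cohen's kappa: observed agreement corrected for the agreement expected by chance from each annotator's label frequencies, `kappa = (p_o - p_e) / (1 - p_e)`. This sketch computes it per group, where the (task, language) grouping key and the labels are hypothetical. With more than two annotators per item, a multi-rater statistic such as Fleiss' kappa or Krippendorff's alpha is the usual choice instead.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, Iterable, List, Sequence, Tuple

def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both annotators independently pick the same label.
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label; kappa is undefined, treated as perfect here
    return (observed - expected) / (1.0 - expected)

def kappa_by_group(rows: Iterable[Tuple[Hashable, str, str]]) -> Dict[Hashable, float]:
    """rows: (group, label_a, label_b), where group might be (task, language)."""
    grouped: Dict[Hashable, Tuple[List[str], List[str]]] = defaultdict(lambda: ([], []))
    for group, a, b in rows:
        grouped[group][0].append(a)
        grouped[group][1].append(b)
    return {g: cohens_kappa(a, b) for g, (a, b) in grouped.items()}
```

Tracking these per-group values release over release is one straightforward way to get the drift monitoring listed above.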

2) Do they support multimodal workflows (video, audio, text) end-to-end?

Media AI rarely stays single-modality. Look for:

- Frame-level and segment-level video annotation

- Audio event labeling (music, profanity, crowd noise)

- Subtitle alignment and entity labeling

3) How do they handle rights, privacy, and auditability?

You want:

- Role-based access controls

- Immutable audit logs (who accessed what)

- Dataset versioning with lineage

- Clear data retention and deletion policies
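Dataset versioning with lineage can start as content-addressed manifests that point to their parent. A minimal sketch, assuming each version is a mapping from item ID to a hash of that item's label record; `DatasetVersion`, `make_version`, and the in-memory registry are hypothetical stand-ins for a real data catalog or versioning tool.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass(frozen=True)
class DatasetVersion:
    version_id: str
    parent_id: Optional[str]
    manifest: Dict[str, str]  # item id -> hash of that item's label record
    note: str = ""            # free text; deliberately not part of the ID

def make_version(manifest: Dict[str, str], parent: Optional[DatasetVersion] = None,
                 note: str = "") -> DatasetVersion:
    """Create a version whose ID is derived from its content and its parent."""
    parent_id = parent.version_id if parent is not None else None
    payload = json.dumps({"parent": parent_id, "manifest": manifest}, sort_keys=True)
    version_id = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]
    return DatasetVersion(version_id, parent_id, dict(manifest), note)

def lineage(version_id: str, registry: Dict[str, DatasetVersion]) -> List[str]:
    """Walk parent links from a version back to the root."""
    chain: List[str] = []
    current = registry.get(version_id)
    while current is not None:
        chain.append(current.version_id)
        current = registry.get(current.parent_id) if current.parent_id else None
    return chain

def changed_items(old: DatasetVersion, new: DatasetVersion) -> List[str]:
    """Items added, removed, or relabeled between two versions."""
    keys = set(old.manifest) | set(new.manifest)
    return sorted(k for k in keys if old.manifest.get(k) != new.manifest.get(k))
```

Because the ID is derived from the manifest and the parent ID, the same content and history always produce the same ID, and `changed_items` answers "what was relabeled between these two releases?" during an audit.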

4) Can they run inside your cloud boundary or VPC?

For high-sensitivity content, the best answer is often:

- Vendor tooling deployed into your environment, or

- A secure enclave model with strict controls

This matters for both compliance and cloud cost control.

5) What’s their approach to model evaluation, not just labeling?

A mature partner helps you build:

- Gold sets and challenge sets

- Regression suites for recommender changes

- Bias and safety evaluations tied to your policies

Labeling without evaluation is how teams ship regressions.
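A regression suite can double as a release gate: score the current and candidate models on the same gold and challenge sets, and block the release if any suite drops by more than a tolerance. Sketched here with hypothetical suite names, plain accuracy as the metric, and a one-point tolerance; a production gate would use task-appropriate metrics and confidence intervals.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

Example = Tuple[Any, str]  # (model input, expected label)

def accuracy(predict: Callable[[Any], str], examples: Sequence[Example]) -> float:
    if not examples:
        raise ValueError("empty evaluation suite")
    return sum(predict(x) == y for x, y in examples) / len(examples)

def release_gate(current: Callable[[Any], str], candidate: Callable[[Any], str],
                 suites: Dict[str, Sequence[Example]],
                 max_drop: float = 0.01) -> Tuple[bool, List[str]]:
    """Pass only if no suite's accuracy falls more than max_drop below the current model."""
    failures = [
        name for name, examples in suites.items()
        if accuracy(candidate, examples) < accuracy(current, examples) - max_drop
    ]
    return (not failures, sorted(failures))
```

Keeping challenge sets separate from the main gold set matters: an overall score can hold steady while a small slice, such as kids' content, regresses.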

6) Can they scale during seasonal peaks?

December is a perfect example. Media workloads spike around holidays: new releases, higher viewing, more customer support, more moderation.

Ask vendors how they handle:

- Ramping up staff without a collapse in quality

- 24/7 ops for global launches

- Peak annotation throughput guarantees
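Peak-throughput answers reduce to arithmetic you can check yourself. This sketch estimates the headcount needed to keep pace with a surge and flags when filling the gap would push new, less experienced annotators past a cap. All inputs, including the 75% utilization and 30% new-hire cap, are hypothetical planning assumptions.

```python
import math

def annotators_needed(items_per_hour: float, labels_per_item: int,
                      labels_per_annotator_hour: float, utilization: float = 0.75) -> int:
    """Concurrent annotators needed to keep pace with a peak without a backlog."""
    required_rate = items_per_hour * labels_per_item
    effective_rate = labels_per_annotator_hour * utilization  # breaks, QA, tooling overhead
    return math.ceil(required_rate / effective_rate)

def peak_plan(items_per_hour: float, labels_per_item: int, labels_per_annotator_hour: float,
              experienced: int, max_new_percent: int = 30, utilization: float = 0.75) -> dict:
    needed = annotators_needed(items_per_hour, labels_per_item,
                               labels_per_annotator_hour, utilization)
    new_required = max(0, needed - experienced)
    # new / (experienced + new) <= p/100  <=>  new <= p * experienced / (100 - p)
    new_allowed = max_new_percent * experienced // (100 - max_new_percent)
    return {"needed": needed, "new_required": new_required,
            "new_allowed": new_allowed, "quality_risk": new_required > new_allowed}
```

For example, 1,200 clips an hour with three labels per clip at 60 labels per annotator-hour needs 80 annotators. With 55 experienced staff, the 25 new hires exceed the 23 the cap allows, which is the kind of quality risk worth probing with a vendor.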

A practical playbook for media teams planning 2026 AI budgets

Micro1’s growth is a reminder that AI spend is shifting from “models” to “operations.” If you want to build durable advantage, budget for the unglamorous parts.

Here’s what I recommend for Q1–Q2 2026 planning:

- Create a training data roadmap next to your model roadmap. Treat datasets like products with owners, SLAs, and refresh schedules.

- Measure unit economics: cost per labeled minute of video, cost per policy decision, cost per evaluation run. If you can’t measure it, you can’t optimize it.

- Design for data locality early to reduce cloud egress and pipeline latency.

- Split workloads intentionally: keep sensitive assets private/hybrid; push scalable preprocessing and training bursts to cloud.

- Standardize evaluation gates before model releases—especially for recommendations and moderation.
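The unit-economics recommendation is easy to operationalize once each metric has its own cost pool and volume. A minimal sketch with hypothetical metric names; allocating spend (annotation, compute, storage) to each pool is the hard part and is assumed to happen upstream.

```python
from typing import Dict

def unit_costs(costs_usd: Dict[str, float], volumes: Dict[str, float]) -> Dict[str, float]:
    """Cost per unit for each metric; both dicts must use the same metric names."""
    if set(costs_usd) != set(volumes):
        raise ValueError("every cost pool needs a matching volume")
    result: Dict[str, float] = {}
    for name, cost in costs_usd.items():
        if volumes[name] <= 0:
            raise ValueError(f"no volume recorded for {name}")
        result[name] = cost / volumes[name]
    return result

def pct_change(before: Dict[str, float], after: Dict[str, float]) -> Dict[str, float]:
    """Period-over-period change in each unit cost, in percent."""
    return {k: 100.0 * (after[k] - before[k]) / before[k] for k in before if k in after}
```

For instance, $45,000 spent on 30,000 labeled minutes is $1.50 per minute; comparing months with `pct_change` shows whether pipeline changes actually move that number.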

One-liner you can use internally: If the dataset isn’t maintained, the model is a liability.

Where this is heading: data training becomes a core infrastructure layer

Micro1 claiming $100M+ ARR after starting the year around $7M suggests the market is treating data training platforms as critical infrastructure, not auxiliary services. For media and entertainment, that’s logical: personalization, discovery, and safety depend on it.

As this space heats up, expect three near-term outcomes:

- More vendor specialization (sports, kids content, live streaming, gaming)

- Stronger integration with cloud infrastructure (workload management, storage tiering, governance)

- More scrutiny on provenance (what content trained what model, under what rights)

If you’re building or buying AI for recommendations, moderation, or content operations in 2026, the real question isn’t “Which model should we pick?” It’s “Can our data training pipeline keep up with our catalog, our audience, and our policies?”