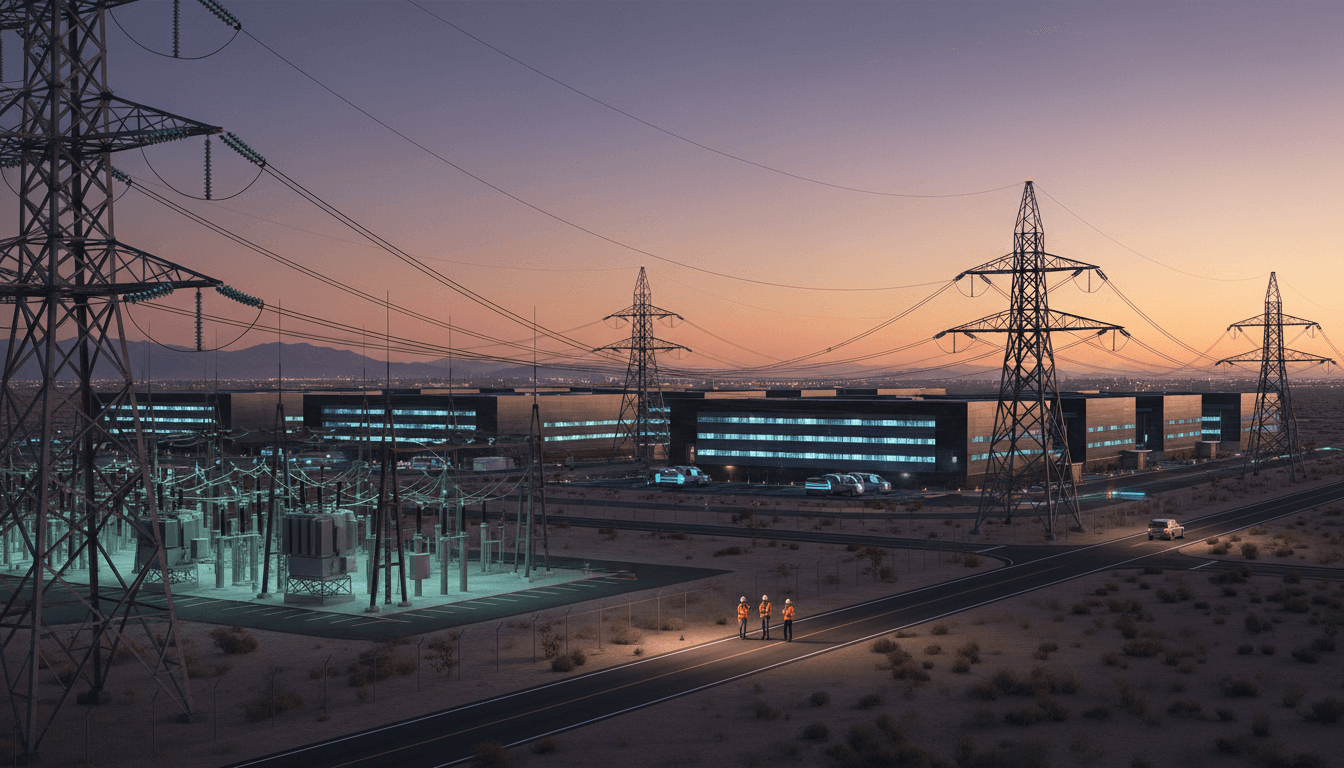

OpenAI’s DOE response shows why AI data center infrastructure—power, permits, and policy—now determines how fast U.S. digital services can scale.

AI Data Center Infrastructure: What OpenAI Told DOE

A new AI model can ship in weeks. A new AI data center can take years.

That’s the real tension behind OpenAI’s May 2025 response to the U.S. Department of Energy (DOE): software progress is fast, but AI infrastructure—power, land, permits, grid interconnection, and high-density compute—moves at the pace of physical construction and regulation. If the U.S. wants AI-driven digital services to scale reliably (and competitively), the bottleneck isn’t just talent or algorithms. It’s electricity and time.

This post is part of our “AI in Cloud Computing & Data Centers” series, where we track how the next era of cloud growth is being shaped by energy constraints, data center design, and policy choices. OpenAI’s DOE proposals are a useful case study because they connect three threads that are usually discussed separately: national competitiveness, cloud-scale compute, and grid modernization.

AI infrastructure is destiny (and that’s not a slogan)

AI infrastructure is destiny because compute access determines who can build, deploy, and afford advanced AI. If you can’t stand up capacity fast enough—or can’t power it cheaply and reliably—your AI roadmap turns into a waiting list.

OpenAI’s framing is blunt: AI is a foundational technology on the level of electricity, and democratic countries should not outsource its trajectory to authoritarian regimes. Behind the geopolitics is a very practical point for U.S. technology and digital services: cloud AI is becoming a core utility for product development, customer support, analytics, cybersecurity, and automation. When compute becomes scarce, prices rise, queues grow, and experimentation slows.

For leaders building digital services—SaaS platforms, fintech apps, healthcare portals, logistics networks—this matters because AI features aren’t “extras” anymore. They’re increasingly table stakes:

- Real-time copilots for support agents

- Automated document intake and compliance checks

- Personalization and recommendation systems

- Fraud detection and anomaly monitoring

- Internal developer productivity tools

Those workloads don’t just require GPUs. They require data center capacity near robust transmission, predictable power contracts, and operational resilience.

The hidden constraint: power density and grid readiness

Modern AI clusters concentrate enormous demand in small footprints. The U.S. isn’t short on generation in aggregate, but it is short on:

- Fast interconnection for large loads

- Transmission capacity to move power where new campuses are built

- Permitting speed for generation and grid upgrades

- Predictable pricing for long-term compute economics

This is why DOE involvement is logical. DOE can influence how federal land assets, energy programs, and national labs intersect with commercial buildouts.
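To make the power-density point concrete, here is a rough back-of-the-envelope sketch. It multiplies rack count by per-rack draw and by PUE (power usage effectiveness, the ratio of total facility power to IT power). Every number in it is an illustrative assumption, not a figure from OpenAI's submission or from any specific facility.

```python
def facility_load_mw(racks: int, kw_per_rack: float, pue: float) -> float:
    """Estimate total facility demand in megawatts.

    racks        -- number of racks on the campus (hypothetical)
    kw_per_rack  -- average IT power per rack in kW (hypothetical)
    pue          -- power usage effectiveness, total power / IT power
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_load_kw = racks * kw_per_rack
    return it_load_kw * pue / 1000.0


if __name__ == "__main__":
    # Same rack count, very different grid request.
    # Both rack densities and PUE values are assumed for illustration only.
    conventional = facility_load_mw(racks=1000, kw_per_rack=10.0, pue=1.5)
    dense_ai = facility_load_mw(racks=1000, kw_per_rack=100.0, pue=1.2)
    print(f"conventional hall: {conventional:.0f} MW")
    print(f"dense AI hall:     {dense_ai:.0f} MW")
```

The takeaway is the ratio rather than the absolute values: holding floor space roughly constant, a much higher per-rack draw turns a routine utility request into one that may need new substation or transmission capacity.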

Why DOE and federal land matter for cloud-scale AI

Federal land matters because it can compress timelines—if the process is designed to move at the speed of private capital. OpenAI’s proposal pushes for a public solicitation where private companies can propose building, operating, maintaining, and eventually decommissioning AI infrastructure on federal land.

That idea isn’t just about “more land.” It’s about reducing friction in four areas that routinely delay AI data center projects:

- Site selection constraints (zoning, community opposition, environmental reviews)

- Power availability (substation buildouts, transmission upgrades)

- Permitting complexity (multiple agencies, sequential reviews)

- Financing risk (uncertainty inflates the cost of capital)

From the perspective of the U.S. digital economy, the payoff is straightforward: more compute supply lowers the “AI tax” on every company trying to add AI features.

National labs as an “infrastructure multiplier”

OpenAI highlights mission-driven collaborations with U.S. national labs, including a multi-year partnership with Los Alamos National Laboratory launched in January 2025. The bigger takeaway isn’t a single partnership—it’s the model:

Pair public-sector scientific talent and specialized facilities with private-sector AI systems and operations.

When that works, it produces benefits that typical commercial cloud rollouts don’t prioritize:

- Accelerated research in materials, energy, and national security domains

- Shared learnings about reliability, safety testing, and evaluation

- A deeper domestic bench of practitioners who know how to run large AI systems

In practice, it can also influence the broader cloud market. Techniques proven in mission environments—fault tolerance, secure enclaves, auditability—often become patterns that later show up in enterprise cloud products.

The three policy moves that change AI capacity the fastest

The fastest way to expand AI compute in the U.S. is to speed up permitting, standardize deployment patterns, and reduce investor uncertainty. OpenAI’s proposals map cleanly to those levers.

1) Public solicitation for private-sector AI hubs

OpenAI argues DOE should solicit private proposals for AI infrastructure on federal land. Done well, this creates a pipeline of projects with clearer rules, clearer timelines, and repeatable structures.

Here’s what “done well” looks like from a cloud and data center operator’s standpoint:

- Standard templates for leases and land use

- Pre-vetted environmental and cultural review pathways

- Defined requirements for water usage, noise, and decommissioning

- Grid interconnection coordination baked into the program

The point is repeatability. Repeatable deployments are how cloud providers scale. If every project is bespoke, you don’t get a true national buildout—you get a handful of one-off campuses.

2) Streamlined permitting (the unglamorous bottleneck)

OpenAI calls out streamlined permitting as essential, including programmatic reviews for repeatable deployments, categorical exclusions where appropriate, “shot clocks” for assessments, and surge staffing. This is the most pragmatic part of the submission.

I’m opinionated here: permitting speed is now a competitiveness metric. If it takes materially longer to build power and interconnect large loads in the U.S. than elsewhere, AI investment will route around the U.S. even if we have excellent talent.

OpenAI also mentions using “AI-powered permitting tools” across workflows. That’s not a throwaway line. Agencies process massive volumes of documents: environmental impact statements, interconnection studies, public comments. If government workflows don’t adopt document AI, they’ll be outpaced by the scale of new infrastructure demand.

3) Financing and contracts that reduce risk

OpenAI advocates for predictable lease agreements and federal mechanisms to reduce private investment risk—examples mentioned include competitive electricity tariffs and targeted tax incentives.

Why it matters: AI data centers are capital-intensive and schedule-sensitive. Delays don’t just push back revenue; they can strand hardware plans, miss model training windows, and disrupt customer commitments.

A simple way to think about it:

- Uncertainty increases the cost of capital

- Higher capital costs increase compute costs

- Higher compute costs slow AI adoption across the economy

When policymakers talk about “keeping AI affordable,” this is one of the few levers that actually changes unit economics at scale.
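The chain above (uncertainty, cost of capital, compute cost) can be sketched with a standard capital recovery factor, which converts an upfront investment into an equivalent annual payment at a given cost of capital. This is a simplified model under stated assumptions: the hardware price, lifetime, utilization, and operating cost below are hypothetical placeholders, not figures from OpenAI's submission.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Fraction of upfront capital that must be repaid each year."""
    if rate == 0:
        return 1.0 / years
    growth = (1.0 + rate) ** years
    return rate * growth / (growth - 1.0)


def cost_per_accelerator_hour(
    capex_per_accelerator: float,  # hypothetical all-in capital per accelerator, USD
    cost_of_capital: float,        # annual rate, e.g. 0.10 for 10%
    lifetime_years: int,           # assumed useful life of the hardware
    utilization: float,            # fraction of hours doing billable work
    opex_per_hour: float,          # assumed power and operations cost per hour, USD
) -> float:
    """Annualized capital spread over productive hours, plus operating cost."""
    annual_capital = capex_per_accelerator * capital_recovery_factor(
        cost_of_capital, lifetime_years
    )
    productive_hours = 8760 * utilization  # 8760 hours in a non-leap year
    return annual_capital / productive_hours + opex_per_hour


if __name__ == "__main__":
    # Only the cost of capital changes between rows; everything else is fixed.
    for rate in (0.06, 0.10, 0.14):
        hourly = cost_per_accelerator_hour(40_000.0, rate, 5, 0.7, 0.50)
        print(f"cost of capital {rate:.0%}: ${hourly:.2f} per accelerator-hour")
```

Holding every other input fixed, a higher cost of capital raises the hourly price of compute, which is why predictable leases and faster permitting show up in unit economics and not only in project schedules.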

What Stargate signals for the U.S. cloud and data center market

Stargate signals a shift from incremental capacity to campus-scale AI buildouts designed for national distribution. OpenAI describes Stargate as an unprecedented investment, with the first supercomputing campus underway in Abilene, Texas, and additional sites being identified in Texas and other states.

Whether you’re a cloud buyer or a digital services leader, the practical implication is geographic: the U.S. is moving toward multiple AI supercomputer hubs rather than a single concentrated region. That’s good for:

- Latency-sensitive inference (customer-facing AI experiences)

- Resilience and regional failover

- Local economic development (construction, operations, skilled trades)

- More balanced grid planning compared to extreme clustering

A realistic view: “AI available to all” is not automatic

OpenAI references lessons from rural electrification—specifically that top-down approaches can miss community realities and leave gaps that last decades.

The modern parallel is easy to spot:

- Big metro regions get AI-ready connectivity, jobs, and training

- Rural and smaller communities get slower access, fewer pathways in

If we want “AI available to all” to mean something in 2026 and beyond, it can’t just be “an enterprise cloud exists.” It has to include:

- Community-based AI literacy programs

- Workforce pipelines for data center operations and skilled trades

- Small-business access to AI tools that don’t require specialized staff

OpenAI points to national calls for AI education. That’s the right direction, but the execution has to be local. National funding without local delivery becomes another well-intended program that never reaches the last mile.

What this means for companies scaling AI-powered digital services

If you’re building AI features, you should treat infrastructure constraints as a product risk, not just a vendor issue. Most teams think only about model quality and integration. They should also think about capacity planning, cost volatility, and reliability.

Practical steps to reduce your exposure to compute scarcity

- Design for cost variability: Build routing that can shift between models or tiers based on latency/cost budgets.

- Separate training, fine-tuning, and inference assumptions: Your peak inference needs may not match your experimentation cycles. Plan them independently.

- Measure “AI efficiency” like you measure cloud efficiency: Track tokens per task, retries, prompt bloat, caching hit rates, and model utilization.

- Plan for regional resilience: Even if your provider abstracts it away, push for multi-region options for mission-critical AI workflows.

- Watch policy signals like you watch product roadmaps: Permitting reform, grid buildouts, and incentive programs change the medium-term price and availability of AI compute.
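As one possible shape for the first step, routing by latency and cost budgets, here is a minimal sketch. The tier names, prices, latencies, and quality ranks are all hypothetical placeholders, and `choose_tier` is an illustrative helper, not part of any provider's SDK. It picks the highest-quality tier that is available and fits both budgets, and returns `None` when nothing fits so the caller can queue, degrade, or fail explicitly.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelTier:
    name: str                  # hypothetical tier label, not a real product
    cost_per_1k_tokens: float  # assumed blended price in USD
    p95_latency_ms: float      # assumed observed 95th-percentile latency
    quality_rank: int          # higher is better; an internal ranking you define
    available: bool = True     # flip to False when capacity is constrained


def choose_tier(
    tiers: Sequence[ModelTier],
    est_tokens: int,
    max_cost: float,
    max_latency_ms: float,
) -> Optional[ModelTier]:
    """Return the best available tier within both budgets, or None."""
    candidates = [
        t
        for t in tiers
        if t.available
        and t.p95_latency_ms <= max_latency_ms
        and t.cost_per_1k_tokens * est_tokens / 1000.0 <= max_cost
    ]
    if not candidates:
        return None
    # Prefer higher quality; break ties by lower price.
    return max(candidates, key=lambda t: (t.quality_rank, -t.cost_per_1k_tokens))


if __name__ == "__main__":
    tiers = [
        ModelTier("large", 0.03, 2500.0, 3),
        ModelTier("medium", 0.005, 900.0, 2),
        ModelTier("small", 0.0005, 300.0, 1),
    ]
    picked = choose_tier(tiers, est_tokens=2000, max_cost=0.02, max_latency_ms=3000.0)
    print(picked.name if picked else "no tier fits the budget")
```

Keeping availability as a field on each tier means a capacity shortage becomes a routing decision instead of an outage: mark the constrained tier unavailable and requests fall through to the next one that still meets the budget.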

A quick “People also ask” set that comes up in boardrooms

Is AI infrastructure the same as cloud infrastructure? Not anymore. AI infrastructure is cloud infrastructure plus high-density power delivery, specialized cooling, accelerated networking, and a supply chain built around GPUs and advanced semiconductors.

Why is DOE involved in AI data centers? Because energy availability, grid interconnection, and land assets are limiting factors for AI compute expansion.

Will more AI data centers lower costs for businesses? Generally, yes: added capacity tends to reduce scarcity pricing. But costs also depend on power contracts, hardware supply, and utilization efficiency.

The stance I’d take: policy is now part of the architecture

For years, cloud architecture meant regions, VPCs, Kubernetes, observability, and identity. That’s still true. But for AI-heavy services, policy and infrastructure are now part of the architecture too—permitting timelines, interconnection queues, and energy strategy shape what’s feasible.

OpenAI’s response to DOE is really a request to treat AI compute the way America treated prior foundational buildouts: as something that benefits from coordination, standards, and speed. If the U.S. gets this right, it won’t just help a few large AI labs. It will lower the cost and friction of building AI features across healthcare, finance, manufacturing, education, and government services.

If you’re planning your 2026 AI roadmap, here’s the question worth sitting with: are you treating AI capacity as guaranteed, or are you building your product and operations to thrive even when compute is tight?