AI Data Center Growth: Resilience and Security in the US

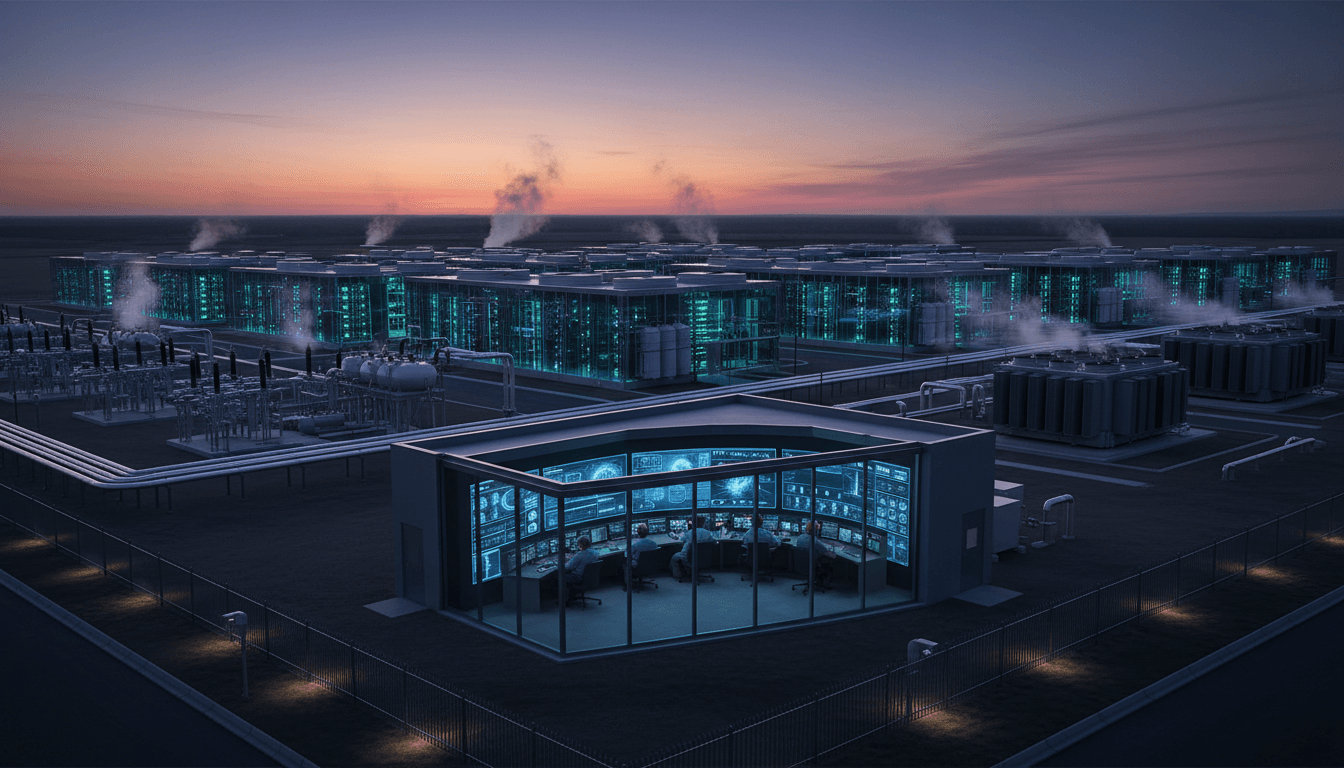

The AI boom has a very physical footprint: data centers, power contracts, fiber routes, cooling systems, and security controls. If you’re building AI-driven digital services—customer support automation, personalized experiences, fraud detection, developer tools, analytics products—your “AI strategy” is only as strong as the infrastructure it runs on.

That’s why OpenAI’s public engagement with the U.S. government on data center growth, resilience, and security matters beyond policy circles. When leading AI companies weigh in with agencies like the NTIA (National Telecommunications and Information Administration), they’re not just talking about their own capacity. They’re shaping the conditions that will determine whether U.S. cloud computing and data centers can scale AI workloads reliably, affordably, and securely.

This post is part of our “AI in Cloud Computing & Data Centers” series. The theme is simple: infrastructure is now a product feature. Uptime, latency, compliance, and security aren’t background concerns—they’re the difference between an AI service users trust and one they abandon.

Why data center growth is now an AI competitiveness issue

AI growth is constrained less by model ideas and more by compute, power, and deployment reliability. The fastest way to stall a roadmap is to underestimate the infrastructure realities behind training and serving models at scale.

Data centers are under new stressors:

- Power availability is becoming the primary gating factor for new capacity in many U.S. regions.

- Network capacity and routing diversity matter more as inference becomes real-time and user-facing.

- Cooling and water constraints are increasingly tied to permitting, local politics, and operating cost.

- Hardware supply chain volatility (especially accelerators) can turn expansion plans into paper plans.

For SaaS and digital service teams, this shows up as very practical questions:

- Can we guarantee low-latency inference for users nationwide?

- What happens when an entire region has a grid event?

- Are we ready for stricter reporting expectations around incidents and cyber posture?

The policy signal—“we’re serious about scaling data centers responsibly”—affects permitting, interconnection queues, incentives, and the pace at which capacity comes online. That’s why the conversation matters even if you’ll never file a comment letter yourself.

Myth: “Cloud abstracts all of this away”

Cloud does abstract a lot, but not the risks you’re judged on.

If you sell an AI-powered service, customers still hold you accountable for:

- availability (SLOs, SLAs)

- data security (breach impact, key management)

- compliance posture (SOC 2, ISO 27001, HIPAA, etc.)

- incident response speed (detection, containment, communications)

Cloud is your platform, not your shield. The policy decisions that shape data center capacity end up shaping your product's reliability too.

Resilience: what “reliable AI” actually requires

Resilience is the ability to keep delivering acceptable service when parts of the system fail. For AI systems, that’s more nuanced than “multi-region = done.” AI introduces new failure modes: model endpoints saturate, GPU clusters fragment, vector databases fall behind, and prompt-injection attacks can force emergency mitigations.

From a practical standpoint, resilience for AI in cloud computing and data centers comes down to three layers.

1) Grid and facility resilience (the unsexy, non-negotiable layer)

If the facility can’t sustain power and cooling, everything above it is theater.

What strong looks like:

- N+1 (or better) redundancy on critical systems (power distribution, cooling loops)

- Backup power that’s tested under load (not just compliance checkbox testing)

- Diverse power feeds where feasible, plus clear failover procedures

- Environmental monitoring that triggers automated workload shedding (graceful degradation)

If you’re buying cloud, you don’t control the building—but you can control how you deploy across it.

2) Regional architecture resilience (how you survive the bad day)

Multi-region architecture is table stakes for customer-facing AI. But it only works if failover is fast and data dependencies are designed for it.

Patterns that hold up under pressure:

- Active-active inference across two regions for latency + redundancy

- Warm standby for less latency-sensitive features (batch scoring, embeddings refresh)

- Queue-based buffering to handle transient endpoint throttling

- Circuit breakers that automatically shift to smaller models or cached responses
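
The circuit-breaker pattern from the list above can be sketched in a few lines. This is a minimal illustration, not a production implementation: `CircuitBreaker`, `answer`, the `primary` and `fallback` callables, and the thresholds are all hypothetical names and values chosen for the example.

```python
import time
from typing import Callable, Dict, Optional


class CircuitBreaker:
    """Opens after `max_failures` consecutive errors; allows retries after `reset_after` seconds."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        """True if the primary path may be tried right now."""
        if self.opened_at is None:
            return True
        # After the cool-down, requests may probe the primary again.
        return self.clock() - self.opened_at >= self.reset_after

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.max_failures:
            # (Re)start the cool-down window.
            self.opened_at = self.clock()


def answer(prompt: str,
           primary: Callable[[str], str],
           fallback: Callable[[str], str],
           cache: Dict[str, str],
           breaker: CircuitBreaker) -> str:
    """Try the large model; on failure or open circuit use the small model, then the cache."""
    if breaker.allow():
        try:
            result = primary(prompt)
            breaker.record_success()
            cache[prompt] = result  # remember good answers for later fallback
            return result
        except Exception:
            breaker.record_failure()
    try:
        return fallback(prompt)
    except Exception:
        if prompt in cache:
            return cache[prompt]
        raise
```

The point of the design is that no human is in the loop: once the primary endpoint fails repeatedly, traffic shifts on its own and returns on its own after the cool-down.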

Opinion: If your “failover plan” requires humans to wake up, join a bridge, and run a runbook before customers notice, you don’t have failover—you have a hope strategy.

3) Model and application resilience (the AI-specific layer)

AI services can degrade gracefully if you design them to.

Examples of graceful degradation that users accept:

- Switch from a large model to a smaller one when capacity is tight

- Reduce context window size temporarily

- Serve cached “good enough” answers for high-frequency queries

- Turn off expensive features (tool calls, image generation) during incidents

This is where infrastructure meets product design. Teams that plan degradation paths early ship more reliable AI features.
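
One way to plan those degradation paths is an explicit "ladder" that maps load to a serving profile. The sketch below is illustrative only: the `ServingProfile` fields, the model tier names, the token limits, and the utilization thresholds are assumptions, and the order of the rungs is one possible choice among many.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServingProfile:
    model: str               # hypothetical model tier name
    max_context_tokens: int
    tools_enabled: bool
    serve_from_cache: bool


# Ordered from full service to most degraded. All values are illustrative.
LADDER = [
    ServingProfile("large", 32_000, True, False),
    ServingProfile("small", 32_000, True, False),
    ServingProfile("small", 8_000, False, False),
    ServingProfile("small", 8_000, False, True),
]


def pick_profile(utilization: float) -> ServingProfile:
    """Map accelerator utilization (0.0 to 1.0) to a rung on the ladder."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    thresholds = [0.70, 0.85, 0.95]  # assumed cut-offs; tune from load tests
    rung = sum(utilization >= t for t in thresholds)
    return LADDER[rung]
```

For example, `pick_profile(0.9)` selects the third rung: the smaller model, a reduced context window, and tool calls switched off.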

Security: the fastest way to lose trust in AI-driven services

Data center security isn’t only about fences and badges. For AI services, security is also about model access, data flows, secrets, and operational controls that prevent both external attacks and internal mistakes.

A useful way to think about it: AI expands your attack surface in three directions at once.

The three security surfaces AI adds

- Data security surface: training data, embeddings, logs, and user prompts can contain sensitive information.

- Model security surface: model endpoints can be abused (scraping, extraction attempts, jailbreaks, prompt injection).

- Infrastructure security surface: GPUs, clusters, CI/CD pipelines, identity systems, and control planes become higher-value targets.

Security and resilience are linked. A ransomware incident, a credential leak, or a supply chain compromise becomes an availability event fast.

Practical controls that matter (even if you’re “just using APIs”)

You don’t need a giant security team to improve posture. You need discipline.

- Identity and access management: enforce least privilege for engineers and service accounts

- Key management: rotate keys, isolate secrets, and avoid shipping credentials in client apps

- Network segmentation: separate inference services, data stores, and admin planes

- Data minimization: don’t retain prompts longer than necessary; redact sensitive fields

- Monitoring and detection: track unusual usage patterns, spikes in tool calls, and exfil indicators
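
As a small illustration of the data minimization item, prompts can be redacted before they are logged or stored. The patterns below are deliberately simple assumptions made for the example; real PII detection needs much broader coverage than three regular expressions.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Calling `redact` at the boundary where prompts enter logs or analytics stores keeps the raw text out of systems that do not need it.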

Snippet-worthy truth: If you can’t explain where your prompts go, how long they persist, and who can access them, you don’t have an AI security posture—you have a liability.


People also ask: “Do we need our own data center for secure AI?”

For most companies, no. You need strong architecture, strong controls, and clear vendor guarantees. Dedicated infrastructure can help for certain regulated workloads, but the biggest wins usually come from:

- tightening IAM,

- reducing data retention,

- improving incident response,

- and designing for multi-region continuity.

What OpenAI’s NTIA engagement signals for U.S. tech leaders

When major AI providers engage on infrastructure policy, it’s a signal that capacity planning and national resilience are becoming part of the AI product conversation. That matters for any company that depends on AI services—especially those selling into enterprise or regulated markets.

Here’s what I’d take from this moment (December 2025) if I were running a product or platform team:

1) Expect more scrutiny on critical infrastructure dependencies

Enterprise buyers already ask about SOC 2 and incident history. The next wave is broader:

- regional dependency mapping

- business continuity plans for AI features

- third-party risk management for model providers

- reporting expectations when AI components fail

If your AI feature is in the critical path (support, billing, identity verification), customers will treat it like production infrastructure.

2) “Resilient-by-design” will be a sales advantage

Teams that can say, plainly, “We can lose a region and resume serving responses, at degraded quality, within 60 seconds” have a concrete advantage in enterprise deals.

That statement implies you’ve done the work:

- multi-region routing

- replicated data stores (or acceptable fallbacks)

- tested runbooks and chaos drills

- cost controls that cap spend during traffic spikes
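
The multi-region routing item can be reduced to a small decision function: send traffic to the first region, in preference order, whose health probe is still fresh. This is a sketch under stated assumptions. `RegionHealth`, `choose_region`, the region names in the usage note, and the 15-second staleness window are hypothetical; a real router would also need the probes themselves and a way to publish the decision.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class RegionHealth:
    last_ok: float  # timestamp (seconds) of the last successful health probe


def choose_region(preferred: List[str],
                  health: Dict[str, RegionHealth],
                  now: float,
                  stale_after: float = 15.0) -> Optional[str]:
    """Return the first region in preference order whose probe is fresh, else None."""
    for region in preferred:
        status = health.get(region)
        if status is not None and now - status.last_ok <= stale_after:
            return region
    return None
```

With a preference list such as `["us-east", "us-west"]`, traffic moves to the second region once the first has gone `stale_after` seconds without a successful probe. To back a 60-second claim, the probe interval plus the staleness window plus the time to propagate the routing change has to fit inside that budget.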

3) Security posture will be measured at the system level

AI security isn’t a single control, and it isn’t solved by a policy document.

Buyers will look for evidence:

- threat modeling that includes prompt injection and tool abuse

- audit logs and anomaly detection

- secure software supply chain practices

- data governance that covers embeddings and derived data

A practical checklist for teams scaling AI in cloud computing

If you’re building or buying AI capabilities, your next quarter should include infrastructure decisions, not just model decisions. Here’s a straightforward checklist I’ve seen work.

Architecture and reliability

- Set explicit SLOs for AI endpoints (p95 latency, error rate, availability)

- Design a degradation plan (smaller model fallback, caching, feature flags)

- Implement multi-region strategy aligned to user geography and compliance

- Load test inference paths including vector search, tool calls, and rate limits

- Practice failover at least quarterly (not just document it)
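
To make the first item concrete, here is a minimal sketch of checking a window of requests against explicit targets. It uses the nearest-rank definition of the 95th percentile; the function names, the `(latency_ms, ok)` request shape, and the report keys are assumptions made for the example.

```python
from typing import Dict, List, Tuple


def p95(values: List[float]) -> float:
    """Nearest-rank 95th percentile."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def check_slo(requests: List[Tuple[float, bool]],
              p95_target_ms: float,
              max_error_rate: float) -> Dict[str, object]:
    """Each request is (latency_ms, ok). Returns observed values and pass/fail flags."""
    latencies = [latency for latency, _ in requests]
    failures = sum(1 for _, ok in requests if not ok)
    observed_p95 = p95(latencies)
    error_rate = failures / len(requests)
    return {
        "p95_ms": observed_p95,
        "error_rate": error_rate,
        "latency_ok": observed_p95 <= p95_target_ms,
        "errors_ok": error_rate <= max_error_rate,
    }
```

Note that this version measures latency over all requests, including failed ones. Some teams measure successful requests only, so state which definition your SLO uses.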

Security and governance

- Classify data in prompts (PII, PHI, financial data) and redact by default

- Define retention rules for prompts, outputs, and logs

- Lock down secrets with managed vaults and rotation

- Monitor abuse patterns (scraping, injection attempts, unusual tool usage)

- Document incident response specific to AI failures (bad outputs, outages, data exposure)
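
Retention rules are easier to enforce when they exist as data rather than as prose. The sketch below assumes a hypothetical record shape (`id`, `kind`, `created_at`) and made-up retention windows; the actual windows should come from your own policy and contracts.

```python
from datetime import datetime, timedelta
from typing import Dict, List

# Assumed retention windows, for illustration only.
RETENTION: Dict[str, timedelta] = {
    "prompt": timedelta(days=30),
    "output": timedelta(days=30),
    "log": timedelta(days=90),
}


def expired_ids(records: List[dict], now: datetime) -> List[str]:
    """Return ids of records older than the retention window for their kind."""
    shortest = min(RETENTION.values())
    doomed = []
    for record in records:
        # Unknown kinds fall back to the shortest window, so nothing lingers by accident.
        window = RETENTION.get(record["kind"], shortest)
        if now - record["created_at"] > window:
            doomed.append(record["id"])
    return doomed
```

Defaulting unknown kinds to the shortest window is a deliberate choice here: derived data such as embeddings is covered even before someone adds an explicit rule for it.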

Cost and capacity

- Measure cost per 1,000 requests (or per successful task completion)

- Cap worst-case spend with budgets and rate limiting

- Right-size models (don’t run the biggest model for every task)

- Use batching where latency allows

- Track GPU utilization (idle time is silent waste)

Opinion: If you don’t know your cost per successful AI task, you’re not scaling—you’re experimenting.
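
Both the metric and the spending cap fit in a few lines. This is a sketch with hypothetical names (`cost_per_successful_task`, `SpendGuard`); it tracks the budget in integer cents to avoid floating-point drift and leaves out persistence and budget resets.

```python
def cost_per_successful_task(total_cost_usd: float, successes: int) -> float:
    """Total spend divided by tasks that actually succeeded, not by raw requests."""
    if successes <= 0:
        raise ValueError("no successful tasks to divide by")
    return total_cost_usd / successes


class SpendGuard:
    """Refuses work once a budget (in integer cents) would be exceeded."""

    def __init__(self, budget_cents: int) -> None:
        self.budget_cents = budget_cents
        self.spent_cents = 0

    def allow(self, estimated_cents: int) -> bool:
        """Reserve the estimated cost if it fits in the remaining budget."""
        if self.spent_cents + estimated_cents > self.budget_cents:
            return False
        self.spent_cents += estimated_cents
        return True
```

For example, $50 of spend across 200 successful tasks is $0.25 per task, even if 1,000 requests were needed to get there. Dividing by successes rather than requests is what makes retries and failed calls visible in the number.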

Where this fits in the “AI in Cloud Computing & Data Centers” series

This series is about the operational side of AI that most teams learn the hard way: capacity, reliability engineering, and infrastructure economics. The OpenAI–NTIA conversation is a reminder that the U.S. AI market isn’t only competing on models; it’s competing on whether we can build and secure the compute fabric that digital services depend on.

If you’re leading a SaaS platform, a fintech, a healthcare app, or a developer tool, your next steps are clear:

- treat AI infrastructure resilience as a roadmap item,

- treat AI security as a system property,

- and build a strategy that survives real-world failures.

What would change in your product if you had to run AI features through a regional outage—and still meet your customer SLAs?