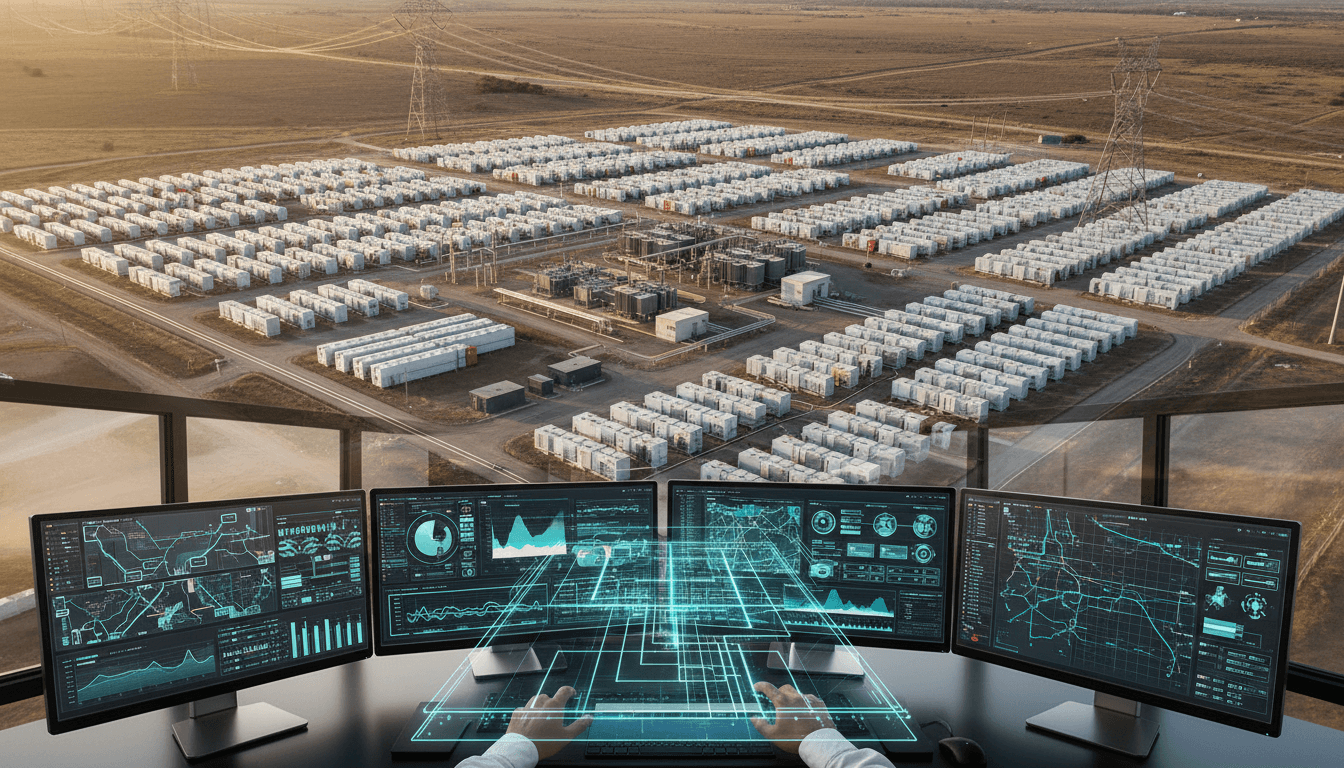

Texas is scaling BESS fast. Here’s how AI-driven grid optimization turns new storage into reliable, profitable infrastructure.

Texas Storage Boom: The AI Playbook for Grid Reliability

Texas isn’t “adding some batteries.” It’s building a parallel reliability layer for the grid.

State officials have said Texas added about 6.4 GW of energy storage capacity in 2024 and now has more than 12 GW deployed on the ERCOT system. They’ve also floated a headline-worthy projection: storage could reach ~30% of Texas grid capacity by 2030. Those are big numbers, but the more interesting story is what they enable, especially as renewable integration and AI-driven load growth (hello, data centers) put new stress on grid operations.

This week’s project announcements make the shift tangible: Peregrine’s 250 MW / 500 MWh Mallard Energy Storage near the Dallas–Fort Worth area, and GridStor’s 150 MW / 300 MWh Gunnar Reliability Project in Hidalgo County. Both are backed by tolling agreements with undisclosed Fortune 500 counterparties, a sign the market is getting serious about firming up supply.

Here’s my take, as part of our “AI for Energy & Utilities: Grid Modernization” series: building batteries is the easy part. The hard part—and the part where utilities and energy developers will separate from the pack—is operating storage with AI so it actually behaves like a reliability asset, not just a price-arbitrage machine.

What these Texas BESS projects signal (beyond megawatts)

These announcements signal one clear point: ERCOT is treating battery energy storage systems (BESS) as core grid infrastructure, not an experiment. Mallard and Gunnar aren’t boutique pilots. They’re utility-scale, contracted, and aimed at reliability.

The specifics matter:

- Mallard Energy Storage: 250 MW / 500 MWh located ~30 miles northeast of Dallas, developed by Peregrine Energy Solutions with technology from Wärtsilä (Quantum2) and EPC support from WHC Energy Services.

- Gunnar Reliability Project: 150 MW / 300 MWh in Hidalgo County, developed/operated by GridStor with construction underway and commercial operation expected by end of 2026.

The “Fortune 500 tolling agreement” detail is a bigger deal than it sounds

A tolling agreement effectively pays the storage owner for making capacity available under defined operating rules. Translation: someone large and sophisticated is buying certainty—not just hoping market prices work out.

That’s a strong market signal in late 2025 because ERCOT volatility cuts both ways:

- When scarcity pricing hits, batteries can mint money.

- When spreads compress, undisciplined dispatch can turn a battery into a disappointing balance sheet line.

Contracted structures (tolling, swaps, hybrids) are increasingly how serious buyers and developers de-risk the revenue stack.
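A toy comparison shows why a fixed toll de-risks the revenue stack. The toll rate, the three merchant-revenue scenarios, and the function name below are hypothetical placeholders. They are not the (undisclosed) Mallard or Gunnar contract terms or ERCOT data, and real tolls also carry performance and availability terms that this sketch ignores.

```python
from statistics import mean, pstdev

def annual_toll_revenue(capacity_mw: float, toll_usd_per_kw_month: float) -> float:
    """Hypothetical fixed toll: capacity in kW x monthly rate x 12 months."""
    return capacity_mw * 1_000 * toll_usd_per_kw_month * 12

# Hypothetical merchant outcomes for a 250 MW battery in three market years (USD).
# Illustrative numbers only, not ERCOT results.
merchant_scenarios = {
    "scarcity year": 40_000_000,
    "normal year": 22_000_000,
    "compressed spreads": 9_000_000,
}

toll = annual_toll_revenue(capacity_mw=250, toll_usd_per_kw_month=7.0)  # hypothetical rate
merchant = list(merchant_scenarios.values())

print(f"Toll revenue (same every year): ${toll:,.0f}")
print(f"Merchant mean: ${mean(merchant):,.0f}, std dev: ${pstdev(merchant):,.0f}")
```

In this made-up example, merchant revenue averages a bit more than the toll but swings by more than a factor of four between years; the toll gives up some upside in exchange for certainty.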

Why Texas is moving fast: renewables, load growth, and ERCOT reality

Texas is moving fast because it has to. Renewable buildout plus load growth increases ramping needs and congestion risk, and ERCOT’s market design rewards flexible assets that can respond in minutes.

The operational problem batteries are being hired to solve

When the grid is stressed, operators don’t need “energy sometime today.” They need:

- Fast frequency response and regulation (seconds)

- Ramp support as solar drops and evening demand rises (minutes to hours)

- Local reliability and congestion relief (often nodal and location-specific)

A 500 MWh battery isn’t there to replace a week of wind lull. It’s there to handle the sharp edges—those moments when the system is most expensive, most fragile, and most political.

Why 2-hour batteries are still showing up

Both Mallard (250 MW / 500 MWh) and Gunnar (150 MW / 300 MWh) are 2-hour systems: each can run at full rated power for about two hours from a full charge. Some observers treat that as “not long enough.” I disagree.

On ERCOT, 2-hour storage is often the sweet spot for:

- capturing peak price windows,

- providing ancillary services,

- supporting ramps,

- and keeping capex and interconnection requirements manageable.

Long-duration storage will matter, but the current wave is about deploying lots of flexible capacity quickly.
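The “2-hour” label is simple arithmetic: energy capacity (MWh) divided by power rating (MW). Here is a minimal sketch using the announced project sizes. It ignores round-trip losses and any SOC limits an operator holds back, so usable duration in practice may be shorter. The last line shows why a 500 MWh battery is not a wind-lull solution: spread over a 168-hour week, it averages only about 3 MW.

```python
def duration_hours(energy_mwh: float, power_mw: float) -> float:
    """Hours of discharge at full rated power from a full charge (no losses, no SOC limits)."""
    return energy_mwh / power_mw

projects = {"Mallard": (500, 250), "Gunnar": (300, 150)}  # (MWh, MW) as announced

for name, (mwh, mw) in projects.items():
    print(f"{name}: {duration_hours(mwh, mw):.1f} h at {mw} MW")

HOURS_PER_WEEK = 24 * 7
print(f"500 MWh spread over a week: {500 / HOURS_PER_WEEK:.1f} MW average")
```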

The next frontier: AI-enabled battery operations (where ROI actually gets won)

AI in energy storage isn’t about fancy dashboards. It’s about turning a battery from “hardware” into a continuously optimized control system.

Here are the highest-value AI use cases I see utilities and storage operators implementing right now.

1) AI demand forecasting + net load forecasting (the dispatch foundation)

The most profitable and reliable battery strategies start with accurate forecasts:

- feeder/substation load forecasts (distribution)

- nodal price sensitivity (transmission/market)

- renewable output (wind/solar)

- net load ramps (system level)
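Net load is demand minus wind and solar output, and its ramps are what batteries are often hired to cover. Below is a minimal sketch with made-up hourly values (not ERCOT data) for a late-afternoon window. Total demand rises only modestly, but net load climbs steeply as solar drops off.

```python
def net_load(load_mw, wind_mw, solar_mw):
    """Net load per interval: demand minus variable renewable output."""
    return [l - w - s for l, w, s in zip(load_mw, wind_mw, solar_mw)]

def max_upward_ramp(series_mw):
    """Largest increase between consecutive intervals (MW per interval)."""
    return max(b - a for a, b in zip(series_mw, series_mw[1:]))

# Hypothetical hourly values (MW), 15:00 through 20:00.
load  = [70_000, 72_000, 74_000, 75_000, 74_000, 72_000]
wind  = [10_000, 10_500, 11_000, 11_000, 12_000, 12_500]
solar = [20_000, 17_000, 12_000,  6_000,  1_000,      0]

nl = net_load(load, wind, solar)
print(nl)
print(max_upward_ramp(nl))
```

Demand peaks only 5,000 MW above its 15:00 value, while net load rises 21,000 MW over the same window, with a steepest single-hour ramp of 7,000 MW.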

AI models (especially hybrid physics + machine learning approaches) tend to outperform simpler statistical methods when:

- weather is volatile,

- demand is being reshaped by electrification,

- and new large loads (industrial sites, data centers) shift local patterns.

Practical point: if your forecast error is large, your dispatch will be reactive. Reactive batteries chase yesterday’s signal—and degrade faster doing it.
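One common way to build a hybrid physics + ML forecast is residual correction: a physics baseline (here, PV output scaling linearly with irradiance) plus a learned model of what the physics misses. This sketch fits a single-feature linear regression of the residual on temperature. The plant size, the data, and the temperature effect are synthetic assumptions, and a production model would use many more features and a proper validation scheme.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x (one feature)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def physics_baseline(capacity_mw, ghi_w_m2):
    """Idealized PV output: linear in irradiance, rated at 1000 W/m^2."""
    return capacity_mw * ghi_w_m2 / 1000.0

def mae(pred, actual):
    """Mean absolute error."""
    return sum(abs(p - a) for p, a in zip(pred, actual)) / len(actual)

CAP = 100.0  # MW, hypothetical plant
train_ghi = [200, 400, 600, 800, 1000]        # W/m^2
train_temp = [20, 25, 30, 35, 40]             # deg C
train_actual = [20.0, 38.5, 57.0, 75.5, 94.0]  # MW, synthetic: output sags in heat

# Learn the residual (actual minus physics) as a function of temperature.
baseline = [physics_baseline(CAP, g) for g in train_ghi]
residual = [a - b for a, b in zip(train_actual, baseline)]
intercept, slope = fit_line(train_temp, residual)

def hybrid_forecast(ghi, temp_c):
    """Physics baseline plus the learned temperature correction."""
    return physics_baseline(CAP, ghi) + intercept + slope * temp_c

# Evaluate on a held-out hour that was not used for fitting.
test_ghi, test_temp, test_actual = 900, 38, 84.6
print("physics-only MAE:", mae([physics_baseline(CAP, test_ghi)], [test_actual]))
print("hybrid MAE:", mae([hybrid_forecast(test_ghi, test_temp)], [test_actual]))
```

On the held-out hour the physics-only forecast misses by about 5.4 MW, while the corrected forecast matches the synthetic actual. That match is only this clean because the synthetic data was built with a linear temperature effect; real residuals are messier.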

2) AI dispatch optimization under real constraints (not idealized math)

Most optimization conversations ignore the stuff that breaks projects in the real world:

- state-of-charge targets for reliability windows

- cycle limits tied to warranty terms

- thermal constraints (especially in Texas heat)

- interconnection/export limits

- congestion and curtailment risk

AI-driven optimization can weigh these constraints in near-real time and decide whether the battery should be:

- charging for a forecasted scarcity window,

- holding SOC for contingency reserve,

- providing regulation,

- or backing off to protect asset health.

A snippet-worthy truth: the best battery strategy is rarely “always discharge at the highest price.” It’s “discharge where you’re paid most after accounting for degradation and risk.”
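Dispatch under constraints can be framed as a small optimization problem. The sketch below uses backward dynamic programming over a discretized state of charge. This is a standard technique, but not necessarily what any commercial optimizer uses. It models only the power rating, energy capacity, an hourly SOC reserve floor, charging losses, and a per-MWh degradation charge; cycle-count limits, thermal derates, and ancillary-service bids are left out. The function name, parameters, and prices are hypothetical.

```python
def optimize_dispatch(prices, capacity_mwh, power_mw, step_mwh, start_soc_mwh,
                      min_soc_by_hour, degradation_usd_per_mwh, charge_efficiency=0.9):
    """Backward dynamic programming over discretized state of charge (SOC).

    Each hour the battery moves from SOC s to SOC s2 (both multiples of step_mwh).
    Charging buys (s2 - s) / charge_efficiency MWh; discharging sells (s - s2) MWh
    and pays degradation_usd_per_mwh on that throughput. min_soc_by_hour[t] is a
    reliability floor on SOC at the end of hour t. Returns (value_usd, soc_path).
    """
    levels = [i * step_mwh for i in range(int(capacity_mwh // step_mwh) + 1)]
    horizon = len(prices)
    neg_inf = float("-inf")
    # value[t][s] = best value from hour t to the end, starting hour t at SOC s
    value = [{s: 0.0 for s in levels} for _ in range(horizon + 1)]
    choice = [{} for _ in range(horizon)]
    for t in range(horizon - 1, -1, -1):
        for s in levels:
            best, best_s2 = neg_inf, None
            for s2 in levels:
                delta = s2 - s
                if abs(delta) > power_mw + 1e-9 or s2 < min_soc_by_hour[t] - 1e-9:
                    continue  # exceeds the power rating or breaks the reliability floor
                if delta >= 0:
                    cash = -prices[t] * delta / charge_efficiency  # buy energy, incl. losses
                else:
                    cash = (prices[t] - degradation_usd_per_mwh) * -delta  # sell, net of wear
                total = cash + value[t + 1][s2]
                if total > best:
                    best, best_s2 = total, s2
            value[t][s], choice[t][s] = best, best_s2
    if value[0][start_soc_mwh] == neg_inf:
        raise ValueError("no dispatch path satisfies the SOC constraints")
    soc, path = start_soc_mwh, []
    for t in range(horizon):
        soc = choice[t][soc]
        path.append(soc)
    return value[0][start_soc_mwh], path


prices = [20, 30, 120, 60]  # hypothetical $/MWh for four hours
free = optimize_dispatch(prices, 100, 50, 50, 0, [0, 0, 0, 0], 10)
held = optimize_dispatch(prices, 100, 50, 50, 0, [0, 0, 0, 50], 10)
print(free)
print(held)
```

With these made-up prices, the unconstrained plan charges in the two cheap hours and discharges in the two expensive ones. Adding a 50 MWh reserve floor at the end of the last hour forgoes the final discharge. The resulting value gap ($2,500 here) puts a direct price on that reliability commitment.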

3) Degradation modeling (because throughput is not free)

Battery degradation is the hidden tax on storage economics.

AI helps by building degradation models that reflect actual operating conditions:

- depth-of-discharge patterns

- C-rate behavior

- temperature exposure

- calendar aging vs cycling
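A minimal semi-empirical degradation sketch, assuming a common modeling shape rather than any vendor’s model: cycle aging grows faster than linearly with depth of discharge, scales with C-rate, and roughly doubles for every 10 °C of extra heat (a rule of thumb), while calendar aging follows a square-root-of-time curve. Every coefficient is a hypothetical placeholder. A real model is fitted to cell test data for the specific chemistry and warranty.

```python
import math

# Hypothetical coefficients for illustration only; not calibrated to any chemistry.
K_CYCLE = 0.004      # % capacity lost per full-depth cycle at 25 C and 0.5C
DOD_EXPONENT = 1.5   # deeper cycles cost more than proportionally
C_RATE_REF = 0.5     # reference C-rate (a 2-hour battery at full power)
K_CALENDAR = 0.5     # % lost per sqrt(month) of calendar time at 25 C
TEMP_REF_C = 25.0

def temperature_factor(temp_c: float) -> float:
    """Rule-of-thumb acceleration: aging rate doubles per 10 C above the reference."""
    return 2 ** ((temp_c - TEMP_REF_C) / 10.0)

def cycle_fade_pct(dod: float, c_rate: float, temp_c: float) -> float:
    """Capacity fade (%) from one cycle at depth of discharge `dod` (0..1)."""
    return (K_CYCLE * dod ** DOD_EXPONENT * (c_rate / C_RATE_REF)
            * temperature_factor(temp_c))

def calendar_fade_pct(months: float, temp_c: float) -> float:
    """Capacity fade (%) from elapsed time alone, square-root-of-time shape."""
    return K_CALENDAR * math.sqrt(months) * temperature_factor(temp_c)

print(f"one full cycle at 35 C: {cycle_fade_pct(1.0, 0.5, 35):.4f} %")
print(f"two half cycles at 25 C: {2 * cycle_fade_pct(0.5, 0.5, 25):.4f} %")
print(f"12 months idle at 25 C: {calendar_fade_pct(12, 25):.2f} %")
```

Under these assumptions, one full-depth cycle in 35 °C heat consumes nearly three times the capacity of two half-depth cycles at 25 °C, even though both move the same energy.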

With that, you can run a value-per-cycle approach:

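- If a dispatch plan earns $X but consumes $Y of battery life, its net value is $X − $Y, and that net value (not $X alone) is what you compare against the next-best alternative, including simply holding the battery.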

This is where many projects quietly lose money: they optimize revenue and ignore the “cost of using the battery.”
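The value-per-cycle comparison can be sketched in a few lines: price the capacity fade a plan would cause as a share of a life budget, subtract that cost from revenue, and pick the best net value. The life budget, restoration cost, plan names, and all numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class DispatchPlan:
    name: str
    revenue_usd: float  # $X
    fade_pct: float     # capacity fade the plan is expected to cause

# Hypothetical economics: the asset's usable life is a 20% fade budget, and
# restoring that capacity would cost $30M. Placeholders, not real project figures.
LIFE_BUDGET_FADE_PCT = 20.0
LIFE_COST_USD = 30_000_000

def life_cost_usd(fade_pct: float) -> float:
    """$Y: the share of the life budget consumed, priced at the restoration cost."""
    return fade_pct / LIFE_BUDGET_FADE_PCT * LIFE_COST_USD

def best_plan(plans):
    """Choose the plan with the highest net value ($X - $Y), not the highest revenue."""
    return max(plans, key=lambda p: p.revenue_usd - life_cost_usd(p.fade_pct))

plans = [
    DispatchPlan("hold (no cycling)", 0.0, 0.0),
    DispatchPlan("one deep cycle", 9_000.0, 0.008),
    DispatchPlan("two shallow cycles", 7_000.0, 0.0028),
]
for p in plans:
    print(f"{p.name}: net ${p.revenue_usd - life_cost_usd(p.fade_pct):,.0f}")
print("chosen:", best_plan(plans).name)
```

Here the deep cycle earns the most revenue but loses money once its life cost is counted, so the shallower plan wins.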

4) Predictive maintenance for BESS components (availability is the KPI)

In reliability projects, availability often matters more than peak revenue. AI-based predictive maintenance can reduce forced outages across:

- inverters/power conversion systems

- HVAC/thermal management

- cell monitoring and anomaly detection

- communications and controls

The goal isn’t “zero alarms.” It’s finding the right early warnings that prevent derates during the exact hours the grid needs the asset.
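Early warnings can start with something as simple as a rolling z-score that flags a reading sitting far above its recent history. This is a statistical baseline, not a trained ML model, and the window, threshold, and temperature readings below are hypothetical. It does show the core pattern: alert on deviation from expected behavior rather than on a fixed alarm limit.

```python
from statistics import mean, pstdev

def rolling_zscore_alerts(readings, window=6, threshold=3.0):
    """Return indices whose reading is more than `threshold` standard deviations
    above the mean of the preceding `window` readings."""
    alerts = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        if sigma > 0 and (readings[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts

# Hypothetical inverter cabinet temperatures (deg C), one reading per minute.
temps = [41.0, 41.2, 40.9, 41.1, 41.0, 41.2, 41.1, 41.0, 44.5, 41.1]
print(rolling_zscore_alerts(temps))
```

The spike at index 8 is flagged. The spike then inflates the next window’s standard deviation, which is one reason production detectors often use robust statistics or learned models of normal behavior.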

How utilities should evaluate new BESS projects in 2026 planning cycles

If you’re a utility planner, an IPP, or a large C&I buyer considering contracted storage, here’s what I’d pressure-test before signing anything.

A practical BESS diligence checklist (AI-aware)

- Forecasting maturity

  - Who owns the forecast models: the operator, an optimizer vendor, or the offtaker?

  - Can the models be audited and improved, or are they a black box?

- Dispatch governance

  - What’s the hierarchy: reliability obligations first, then economics?

  - How are constraint overrides handled during grid emergencies?

- Degradation economics

  - Is there a formal value-per-cycle policy?

  - Are warranty constraints translated into operating rules automatically?

- Telemetry and data quality

  - Are data latencies measured and enforced?

  - Is there a single source of truth for SCADA + BMS + market signals?

- Cybersecurity and access controls

  - Who can change control policies?

  - Are model updates tracked like software releases?
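Several checklist items (a reliability-first hierarchy, emergency overrides, and enforced data latency) can be expressed as an explicit, testable policy layer between the optimizer and the asset. Here is a minimal sketch. The field names, the 4-second latency budget, the 15-minute interval, and the priority order are assumptions for illustration, not an ERCOT requirement.

```python
from dataclasses import dataclass

@dataclass
class AssetState:
    soc_mwh: float
    reserve_mwh: float            # SOC that reliability obligations require holding
    telemetry_age_s: float        # age of the newest SCADA/BMS sample
    grid_emergency: bool
    emergency_setpoint_mw: float = 0.0  # operator instruction during an emergency

MAX_TELEMETRY_AGE_S = 4.0  # hypothetical latency budget

def govern(proposed_mw: float, state: AssetState, interval_h: float = 0.25):
    """Apply a priority hierarchy to an optimizer's setpoint (+ = discharge, - = charge).

    Returns (setpoint_mw, reason). Charging limits are not checked in this sketch.
    """
    # 1. Grid emergency: operator instructions override economics and the reserve.
    if state.grid_emergency:
        return state.emergency_setpoint_mw, "emergency override"
    # 2. Stale data: do not act on telemetry older than the latency budget.
    if state.telemetry_age_s > MAX_TELEMETRY_AGE_S:
        return 0.0, "telemetry stale, holding"
    # 3. Reliability reserve: never discharge below the reserved SOC this interval.
    headroom_mwh = max(state.soc_mwh - state.reserve_mwh, 0.0)
    max_discharge_mw = headroom_mwh / interval_h
    if proposed_mw > max_discharge_mw:
        return max_discharge_mw, "clipped to protect reserve"
    # 4. Otherwise the economic setpoint stands.
    return proposed_mw, "economic"

state = AssetState(soc_mwh=300, reserve_mwh=250, telemetry_age_s=1.0, grid_emergency=False)
print(govern(250, state))
print(govern(100, state))
```

Because the rules live in code, changes to them can be reviewed, tested, and versioned like any other software release.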

My opinion: if a developer can’t answer these cleanly, the project might still get built—but you should assume performance risk.

What Mallard and Gunnar tell us about the “smart grid” phase Texas is entering

Texas has already proven it can deploy capacity quickly. The next phase is proving it can operate complex, distributed flexibility without turning system operations into chaos.

Mallard’s choice of established partners (Wärtsilä for technology and WHC for EPC) signals a preference for bankable execution. Gunnar’s tolling agreement and expected commercial operation date (COD) by end of 2026 underscore that contracted storage is becoming part of how large buyers manage reliability and costs.

Zooming out, this is the arc of grid modernization:

- Phase 1: Build renewables fast.

- Phase 2: Add batteries to smooth volatility.

- Phase 3 (now): Use AI to coordinate assets so the grid behaves predictably under stress.

If you’re working in grid modernization, the question isn’t whether to use AI. It’s where to place it so it improves outcomes you can measure: forecast error, availability, congestion exposure, and cost per delivered MWh of flexibility.
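Two of those outcomes need precise definitions before they can be tracked. The sketch below uses one reasonable definition for each: capacity-weighted availability and all-in cost per delivered MWh. The definitions and numbers are assumptions, and contracts often define availability differently.

```python
def capacity_weighted_availability(available_mw_by_hour, rated_mw):
    """Average available MW as a share of rated MW (a partial derate counts partially)."""
    return sum(available_mw_by_hour) / (len(available_mw_by_hour) * rated_mw)

def cost_per_delivered_mwh(annual_cost_usd, delivered_mwh):
    """All-in annual cost (capital recovery, O&M, charging energy) per MWh discharged."""
    return annual_cost_usd / delivered_mwh

# Hypothetical: a 250 MW asset derated to half power for one of four hours.
print(capacity_weighted_availability([250, 250, 125, 250], 250))
# Hypothetical annual cost and delivered energy.
print(cost_per_delivered_mwh(18_000_000, 120_000))
```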

Next steps: turning storage projects into AI-ready grid assets

If you’re developing, owning, or procuring battery energy storage in Texas (or any fast-moving market), treat “AI enablement” as part of the project’s core design—not a future add-on.

Start with three concrete moves:

- Define the operating objective function (reliability vs revenue vs blended) and translate it into dispatch policies.

- Instrument the asset for learning (clean telemetry, labeled events, high-quality weather and market inputs).

- Model degradation like a real cost, because it is.
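Defining the objective function explicitly turns the reliability-versus-revenue trade-off into a tunable policy instead of an implicit habit. Here is a minimal sketch of a blended objective. The weight, the dollar value assigned to reliability, and both options are hypothetical policy inputs.

```python
from dataclasses import dataclass

@dataclass
class Option:
    name: str
    revenue_usd: float
    reliability_score: float  # 0..1, e.g. share of a reliability window covered
    degradation_usd: float

def blended_objective(opt: Option, reliability_weight: float,
                      reliability_value_usd: float) -> float:
    """Score an option: 0 = pure revenue (net of degradation), 1 = pure reliability.

    reliability_value_usd converts the reliability score into dollars (a policy choice).
    """
    economic = opt.revenue_usd - opt.degradation_usd
    reliability = opt.reliability_score * reliability_value_usd
    return (1 - reliability_weight) * economic + reliability_weight * reliability

options = [
    Option("hold reserve for evening peak", 2_000, 1.0, 500),
    Option("arbitrage now", 12_000, 0.3, 3_000),
]

picks = {
    w: max(options, key=lambda o: blended_objective(o, w, 20_000)).name
    for w in (0.0, 0.5, 1.0)
}
for w, name in picks.items():
    print(w, name)
```

A pure-revenue objective picks arbitrage. With these numbers, the choice flips to holding reserve once the reliability weight reaches about 0.35. Where that flip happens is exactly the governance decision the objective function is meant to make visible.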

The storage boom in Texas is real. The bigger opportunity is making these assets behave like a coordinated fleet—predictable, auditable, and optimized. That’s what AI in energy and utilities is actually for.

If batteries are the new grid hardware, AI is the operating system. What will your organization do first: buy more hardware, or upgrade the OS that runs it?