Light-controlled hydrogel lenses could give soft robots autofocus without motors. See where soft optics improves AI perception in automation.

Soft Robot Vision: Light-Controlled Lenses Without Motors

Most robotics teams obsess over models, training data, and GPUs. Meanwhile, their cameras are still built like tiny industrial machines: rigid stacks of glass, motors, and wiring that don’t belong on a squishy gripper, a steerable endoscope, or a wearable medical device.

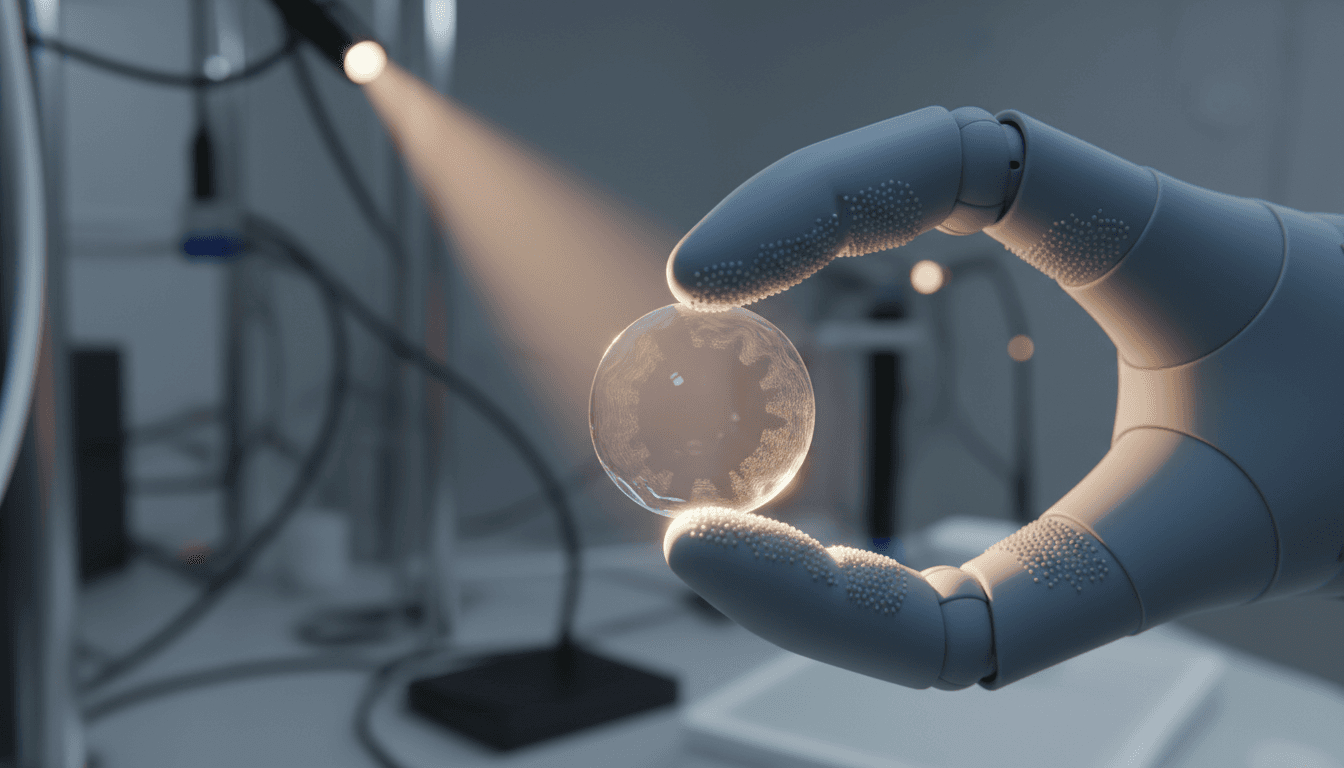

A new adaptive lens from researchers at Georgia Tech takes a different route: a flexible lens whose focus is controlled by light-activated “artificial muscles” made from hydrogel. No gears. No motors. Potentially even no on-lens electronics. If you care about AI in robotics and automation, this matters for a simple reason: AI perception is only as reliable as the sensor input you can keep stable, clean, and safe in the real world.

This post breaks down what this lens is, why it’s a big deal specifically for soft robotics vision, and how to think about deploying “soft optics” in manufacturing, healthcare, and logistics—where durability, compliance, and low power often beat pristine lab performance.

The real bottleneck in AI robot vision: hardware that can’t bend

AI perception fails in the field less because the model is dumb and more because the sensing stack is fragile, misaligned, or unsafe for the environment. Traditional variable-focus cameras typically rely on:

- Rigid lens elements that must stay precisely aligned

- Mechanical actuation (motors/voice coils) to shift lenses

- Electrical power and control signals at the sensor head

- Hard housings that don’t like impact, torsion, or body contact

That’s fine for a fixed industrial arm behind guarding. It’s a headache for:

- Soft grippers handling produce, pastries, or fragile electronics

- Continuum robots (snake-like) navigating tight spaces

- Wearables and skin-adjacent devices where stiffness creates irritation and motion artifacts

- Medical tools where anything rigid increases injury risk

A squishy robot with rigid eyes is like putting a glass chandelier on an off-road truck. The robot can be compliant, but the perception system becomes the weak link.

What the photo-responsive hydrogel soft lens (PHySL) actually is

PHySL is a soft, variable-focus lens whose shape is changed by a surrounding hydrogel “muscle” that contracts when illuminated. If you want the one-sentence explanation:

The lens changes focus because light makes a hydrogel ring shrink, which reshapes the soft lens—similar to how ciliary muscles reshape the human eye’s lens.

The key mechanism: light-driven actuation

The muscle material is a hydrogel—a water-rich polymer network. In this design, the hydrogel contracts when exposed to light. That contraction reshapes the lens and changes its focal length.
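For intuition only: textbook thin-lens optics (not a model taken from the Georgia Tech work) ties focal length to surface curvature through the lensmaker's equation. A soft lens under large deformation won't follow it exactly, and the form below assumes the lens sits in air, but it shows why reshaping the lens is enough to refocus:

```latex
\frac{1}{f} = (n - 1)\left(\frac{1}{R_1} - \frac{1}{R_2}\right)
```

Here $f$ is the focal length, $n$ is the refractive index of the lens material, and $R_1$ and $R_2$ are the radii of curvature of the two lens surfaces. When the hydrogel ring contracts and deforms the lens, $R_1$ and $R_2$ change, so $f$ changes without any element having to slide along the optical axis.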

What’s clever here isn’t the soft lens by itself (people have made soft lenses before). It’s the control method:

- You can actuate it remotely by projecting light.

- You can actuate it locally by shining light on different regions to fine-tune deformation.

- You can avoid wiring at the lens, which is a big deal for compact, untethered, or body-safe systems.

Why “no electronics at the lens” is a practical advantage

Electronics aren’t bad. But they come with packaging and reliability work you can’t ignore:

- sealing and sterilization constraints (healthcare)

- flex-cable fatigue (continuum/soft robots)

- ingress protection for washdown environments (food/warehouse)

- EMI/ESD management near motors and high-current drives (factory floors)

A light-controlled lens doesn’t magically eliminate system electronics, but it can move complexity away from the tip—which is often the part you most want to keep soft, safe, and disposable.

Why soft machines need adaptive optics (and AI benefits directly)

Adaptive optics isn’t a “nice to have” for soft robots; it’s how you keep perception usable while the robot deforms. If your camera bends, compresses, twists, or gets bumped, several issues show up immediately:

- focus drift (distance-to-object changes constantly)

- motion blur from compliant structures vibrating

- changing illumination from occlusion or self-shadowing

- calibration drift (intrinsics change if the optical stack shifts)
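To put rough numbers on focus drift, here's a minimal sketch based on the ideal thin-lens equation, 1/f = 1/d_o + 1/d_i. The 10 mm lens-to-sensor spacing and the working distances are made-up values for illustration, not PHySL specifications, and `required_focal_length_mm` is a hypothetical helper name.

```python
def required_focal_length_mm(object_distance_mm: float,
                             sensor_distance_mm: float) -> float:
    """Focal length an ideal thin lens needs to focus an object at
    object_distance_mm onto a sensor fixed sensor_distance_mm behind it.

    Rearranged from 1/f = 1/d_o + 1/d_i.
    """
    if object_distance_mm <= 0 or sensor_distance_mm <= 0:
        raise ValueError("distances must be positive")
    return (object_distance_mm * sensor_distance_mm) / (
        object_distance_mm + sensor_distance_mm
    )


if __name__ == "__main__":
    sensor_distance_mm = 10.0  # assumed, illustrative spacing
    # Assumed working distances as a gripper approaches an object.
    for object_distance_mm in (200.0, 50.0, 20.0):
        f = required_focal_length_mm(object_distance_mm, sensor_distance_mm)
        print(f"object at {object_distance_mm:6.1f} mm -> f = {f:.2f} mm")
```

The point is the trend, not the numbers: with the sensor fixed, every change in working distance asks for a different focal length, and a fixed-focus lens can only satisfy one of them.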

A variable-focus soft lens helps on multiple fronts.

Better input in dynamic environments

Modern AI vision pipelines, especially those doing grasp detection, defect inspection, or pose estimation, depend on crisp features. If the image is even slightly out of focus, the model’s accuracy degrades in ways that tend to surface as “mystery errors”:

- increased false rejects in inspection

- unstable grasp points on shiny or low-texture objects

- misreads on barcodes/labels at odd angles

A soft, tunable lens gives the system a way to regain focus on the fly without heavy mechanics.
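Regaining focus on the fly presupposes a way to score focus. One common choice is the variance of the image Laplacian: sharp edges produce large second-derivative responses, blur flattens them. The sketch below is dependency-free for illustration (in practice you'd likely use an image library), and the function names and the synthetic edge image are my own assumptions, not part of the lens work.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over the image interior.

    gray: list of equal-length rows of numeric pixel values.
    Higher values generally indicate a sharper image.
    """
    h = len(gray)
    w = len(gray[0]) if h else 0
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return float(pvariance(responses))


def blur_rows(gray):
    """Crude defocus stand-in: 3-tap horizontal box blur, edges clamped."""
    blurred = []
    for row in gray:
        last = len(row) - 1
        blurred.append([
            (row[max(x - 1, 0)] + row[x] + row[min(x + 1, last)]) / 3
            for x in range(len(row))
        ])
    return blurred


if __name__ == "__main__":
    # Synthetic test image: a hard vertical edge (dark left, bright right).
    sharp = [[0] * 8 + [255] * 8 for _ in range(8)]
    print("sharp  :", laplacian_variance(sharp))
    print("blurred:", laplacian_variance(blur_rows(sharp)))
```

A score like this is relative, not absolute: it only makes sense to compare values for the same scene under the same lighting, which is exactly the situation an autofocus loop is in.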

Safer physical interaction without sacrificing perception

This lens is compliant. That matters in places where the camera may contact people, tissue, or delicate objects. A rigid camera module tends to force design tradeoffs:

- Keep the camera far away (worse view)

- Add hard protective windows (fogging, glare, cleaning burden)

- Overbuild housings (weight, stiffness, cost)

Soft optics supports a different design philosophy: put perception closer to the interaction, because a compliant sensing head does less harm when it inevitably bumps something.

Where this fits in robotics and automation (three concrete scenarios)

The immediate value of light-controlled soft lenses is enabling vision where rigid optics are a liability. Here are three places I’d bet on first.

1) Soft grippers in food and consumer goods

A soft gripper that picks strawberries, baked goods, or blister-packed products benefits from “eyes near the fingertips.” But fingertip cameras get squeezed, twisted, and washed.

A compliant, tunable lens can support:

- short working distances that change every pick

- rapid refocusing as the gripper conforms

- safer contact when the gripper bumps the product

Pair that with AI inference (grasp point selection, ripeness/blemish checks, orientation verification), and you get a perception stack that’s designed for physical messiness, not lab conditions.

2) Medical imaging tools that move with the body

Endoscopes and catheter-like devices operate in environments where rigid components can be risky. A soft, adaptive lens can:

- reduce pressure points on tissue

- tolerate bending without optical failure

- simplify tip packaging by moving control off-tip

If you’re building AI-assisted navigation or lesion detection, robust imagery is the whole product. A lens that can adapt focus without motors is attractive for miniaturization and reliability, two constraints that dominate medical device engineering.

3) Warehouse and field robotics where shocks happen

In logistics automation, cameras are constantly exposed to:

- impacts (totes, pallets, conveyors)

- vibration (mobile bases)

- dust and cleaning routines

Soft optics won’t replace rugged housings overnight, but it can reduce the chance that a single bump knocks focus or alignment out of spec. For AI systems doing barcode reading, dimensioning, or bin picking, that translates directly into fewer manual interventions.

How to think about “light-powered vision” in an AI system

Light-controlled optics shifts part of perception control from electromechanical actuation to optical/illumination control. That creates new design options—and some new engineering questions.

Control architecture: from motors to patterns of light

In a conventional autofocus module, you control a motor position. In a photo-responsive lens, you control:

- illumination intensity

- illumination pattern (where you shine it)

- exposure timing (how long you shine it)

That’s not a drawback. It’s an opportunity for tighter coupling with AI:

- AI estimates depth/blur → chooses illumination pattern → lens refocuses

- AI tracks deformation state of the soft robot → compensates with lens control

Put simply: perception becomes an active loop, not a passive camera feed.
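Here's a minimal sketch of what that active loop could look like, assuming the only control knob is a normalized illumination level between 0 and 1 and the only feedback is a sharpness score. It uses a plain hill climb, which is my choice for illustration and not the researchers' control method. `measure_sharpness` is a hypothetical callback that would set the illumination, wait for the hydrogel to settle, grab a frame, and return a score; `simulated_sharpness` stands in for it here with an arbitrary peak at 0.63.

```python
from typing import Callable


def hill_climb_focus(measure_sharpness: Callable[[float], float],
                     start: float = 0.5,
                     step: float = 0.2,
                     min_step: float = 0.01,
                     max_iters: int = 50) -> float:
    """Search illumination levels in [0, 1] for the sharpest image.

    Tries one step up, then one step down; halves the step when
    neither direction improves the score.
    """
    def clamp(v: float) -> float:
        return min(max(v, 0.0), 1.0)

    level = clamp(start)
    best = measure_sharpness(level)
    for _ in range(max_iters):
        if step < min_step:
            break
        improved = False
        for candidate in (clamp(level + step), clamp(level - step)):
            score = measure_sharpness(candidate)
            if score > best:
                level, best, improved = candidate, score, True
                break
        if not improved:
            step /= 2  # overshooting the peak: refine the search
    return level


def simulated_sharpness(level: float) -> float:
    """Stand-in for a real lens and camera: one smooth peak at 0.63."""
    return 1.0 / (1.0 + ((level - 0.63) / 0.1) ** 2)


if __name__ == "__main__":
    print("chosen illumination level:", hill_climb_focus(simulated_sharpness))
```

A real photo-responsive lens would add complications this sketch ignores: settling time after each illumination change, hysteresis between contracting and relaxing, and a sharpness curve that shifts as the robot deforms. Those are the places where a learned model could plausibly replace the blind search.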

Practical questions teams should ask early

If you’re evaluating soft, tunable optics for a product, here are the questions that decide whether it’s a science demo or deployable hardware:

- Response time: How fast can the lens change focus, and can it keep up with your robot cycle time?

- Repeatability: Does the focal length return to the same state over thousands of cycles?

- Drift and hysteresis: Do you need calibration maps per unit, per temperature, or per humidity?

- Environmental robustness: Hydrogels are water-rich—how do they behave with drying, fogging, cleaning agents, or sterilization?

- Optical quality: Is resolution sufficient for your task (inspection vs navigation vs coarse detection)?

A good rule of thumb: the best lens isn’t the sharpest lens; it’s the lens that stays predictable after month three on the factory floor.
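Several of these questions reduce to simple numbers you can compute from bench data. The sketch below shows one way to do that; the metric definitions (population standard deviation for repeatability, worst-case gap between up and down sweeps for hysteresis, a fixed fraction of cycle time for refocusing) are my assumptions, and every value in the demo is a placeholder, not a measurement of any real lens.

```python
from statistics import pstdev


def repeatability_mm(focal_lengths_mm):
    """Spread of focal length over repeated visits to one setpoint."""
    return pstdev(focal_lengths_mm)


def hysteresis_mm(up_sweep_mm, down_sweep_mm):
    """Worst-case focal-length gap between increasing and decreasing
    illumination sweeps, compared setpoint by setpoint."""
    if len(up_sweep_mm) != len(down_sweep_mm):
        raise ValueError("sweeps must cover the same setpoints")
    return max(abs(u - d) for u, d in zip(up_sweep_mm, down_sweep_mm))


def fits_cycle_budget(response_time_s, cycle_time_s, focus_fraction=0.2):
    """True if refocusing fits in the share of the robot cycle you are
    willing to spend on it (focus_fraction is an assumed budget)."""
    return response_time_s <= cycle_time_s * focus_fraction


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    same_setpoint = [9.02, 8.97, 9.05, 8.99]
    up = [10.0, 9.0, 8.0]
    down = [10.4, 9.5, 8.1]
    print("repeatability (mm):", repeatability_mm(same_setpoint))
    print("hysteresis (mm):", hysteresis_mm(up, down))
    print("fits 2 s cycle:", fits_cycle_budget(0.5, 2.0))
```

Tracking these per unit, and again after temperature or humidity changes, is a cheap way to find out whether you need per-unit calibration maps before you commit to a design.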

The next step: integrating soft optics with soft robotics platforms

The Georgia Tech team demonstrated a proof-of-concept electronics-free camera using the lens plus a light-activated microfluidic chip. That’s not just a fun prototype—it points to a broader direction:

- Soft sensing stacks (lens + light control + compliant packaging)

- Low-power perception modules for untethered soft robots

- Distributed vision (multiple small, safe “eyes” instead of one protected camera)

What I expect to happen in 2026–2027

Soft robotics is moving from “cool demos” to targeted deployments—especially in handling, healthcare tooling, and inspection in constrained spaces. For vision, I expect two parallel trends:

- More on-sensor intelligence (tiny models for autofocus/quality gating at the edge)

- More adaptive hardware (soft optics, tunable illumination, event-based sensors)

Those aren’t competing. They complement each other.

AI gets you robust perception in spite of noise. Adaptive hardware reduces noise at the source. The teams that win do both.

People also ask: what does this change for AI-powered automation?

Will soft lenses replace traditional industrial cameras?

Not broadly. Rigid optics will stay dominant for high-precision metrology and long standoff distances. Soft lenses will win where compliance, safety, and packaging constraints matter more than absolute resolution.

Does “electronics-free” mean the system doesn’t need power?

No. It means the lens actuation can be driven optically rather than by an electrical actuator at the lens. You still need power for illumination and the image sensor. The value is less wiring and less complexity at the tip.

Why does focus control matter if AI models can handle blur?

Models can tolerate some blur, but blur still costs you:

- higher error rates at the decision boundary

- more training data needed to cover edge cases

- less reliable performance under domain shift

Keeping the image in focus is one of the cheapest ways to raise system-level reliability.

Where to go from here if you’re building AI vision for soft robots

If you’re serious about AI in robotics and automation, treat perception hardware as a first-class design problem, especially for soft machines. Light-controlled hydrogel lenses are an early sign that the camera itself is getting more “robotic”: adaptive, compliant, and designed for contact.

If you’re exploring soft robotics vision for manufacturing, healthcare, or logistics, a practical next step is to map your use case against four constraints—contact risk, deformation, power budget, and maintenance/cleaning. If two or more are severe, you should at least prototype a soft or tunable optics approach.
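If it helps to make that triage explicit, for example in a design-review checklist, the rule is small enough to write down. The class and field names below are hypothetical, and deciding what counts as "severe" is still a judgment call for your team.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class UseCase:
    """One flag per constraint; True means the constraint is severe."""
    contact_risk: bool
    deformation: bool
    power_budget: bool
    maintenance_cleaning: bool


def should_prototype_soft_optics(use_case: UseCase, threshold: int = 2) -> bool:
    """Apply the rule of thumb: prototype if at least `threshold`
    of the four constraints are severe."""
    severe_count = sum(bool(getattr(use_case, f.name)) for f in fields(use_case))
    return severe_count >= threshold


if __name__ == "__main__":
    # Illustrative example: a washdown soft gripper for food handling.
    gripper = UseCase(contact_risk=True, deformation=True,
                      power_budget=False, maintenance_cleaning=True)
    print("prototype soft optics:", should_prototype_soft_optics(gripper))
```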

The interesting question for the next wave of automation isn’t “Can AI see?” It’s “Can AI keep seeing after the robot bends, bumps, washes down, and repeats the task 50,000 times?”