Deep-sea ROVs reveal what AI robotics needs to work in harsh environments. Learn practical lessons for autonomy, perception, and reliable automation.

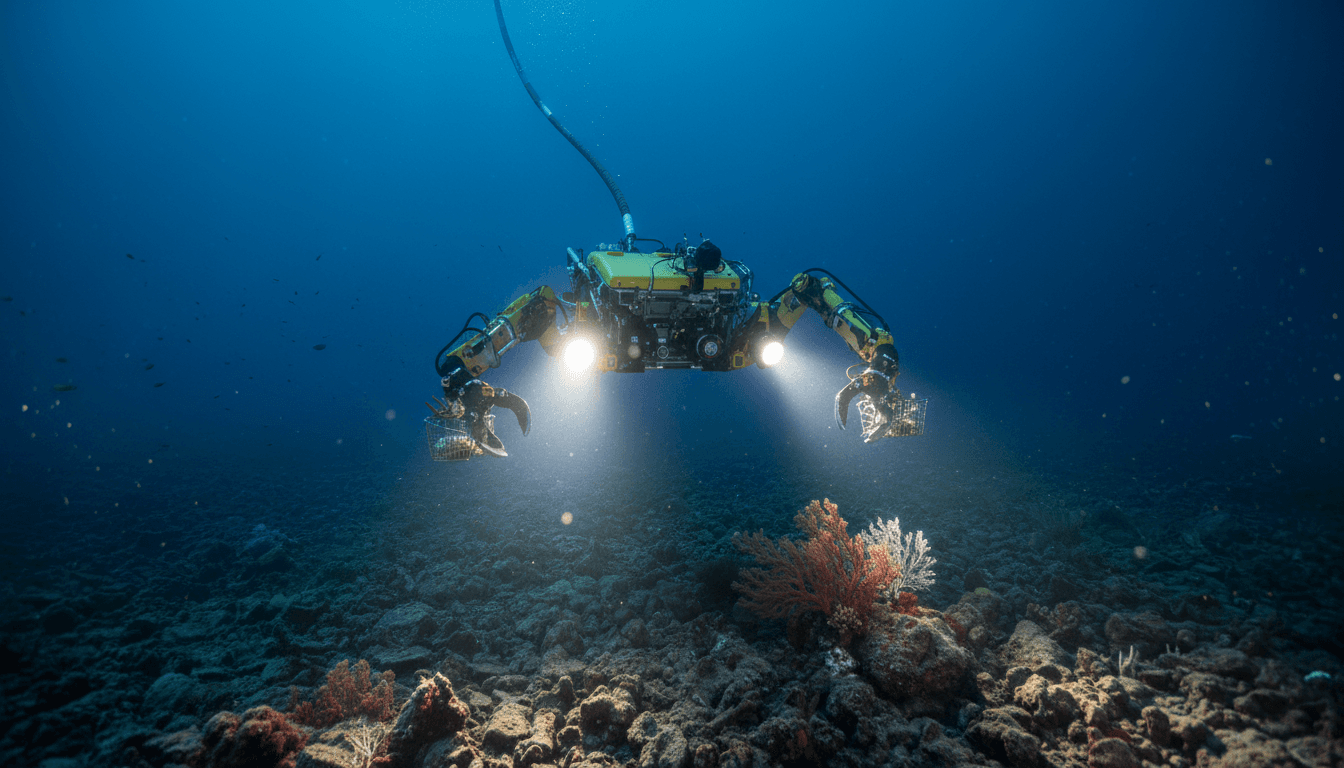

AI Underwater Robotics: Lessons from Deep-Sea ROVs

At 4,000 meters below the surface, you don’t get a second chance. Pressure is crushing, visibility is limited, communications are thin, and a small mistake can cost a mission day—or an entire expedition.

That’s why underwater robotics is a great stress test for the broader “AI in robotics & automation” story. If your autonomy stack, sensing pipeline, and operations tooling can survive the deep ocean, it’s far more likely to survive messy, real-world deployments in factories, ports, and field service.

IEEE Spectrum’s profile of ROV engineer Levi Unema (who’s built, maintained, and piloted NOAA’s deep-ocean robots) is a reminder that the hard part of robotics isn’t just building a robot. It’s building a system—hardware, software, data, people, and procedures—that keeps working when the environment refuses to cooperate.

Underwater robotics is automation with the training wheels off

Deep-sea ROVs are often described as “remote-controlled,” but that undersells what’s happening operationally. In practice, these vehicles are distributed robotic systems that must:

- Sense in poor lighting, turbidity, and marine snow

- Act with precise motion near fragile seabed ecosystems

- Stream video and instrument data through kilometers of tether

- Keep electronics stable despite water, corrosion, and pressure

- Support a live “human-in-the-loop” workflow with scientists making decisions in real time

The takeaway for industrial automation leaders is simple: the ocean forces the same discipline your factory will eventually demand—robust perception, fault tolerance, diagnostics, maintainability, and decision support.

That discipline is exactly where AI fits.

Where AI actually matters underwater (and why it translates to industry)

In underwater robotics, AI earns its keep in four places:

- Perception under constraints: computer vision must operate with low contrast and limited range; sonar interpretation requires pattern recognition over noisy signals.

- Assisted autonomy: station keeping, smooth manipulator control, and semi-autonomous behaviors reduce pilot workload and prevent accidents.

- Data triage: expeditions generate enormous video and sensor streams; AI helps flag “moments that matter” for scientists.

- Predictive maintenance and troubleshooting: when you’re “in the middle of the Pacific,” you need early warning and rapid root-cause isolation.

Those map cleanly to manufacturing, logistics, and service robotics: perception in messy environments, shared autonomy for safety, automated quality/exception detection, and predictive maintenance.
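To make “early warning” less abstract, here is a minimal sketch of one simple technique: a sliding-window z-score check that flags a sensor reading (thruster current, vibration amplitude, housing temperature) when it drifts far from its recent history. The class name, window size, and threshold are hypothetical and untuned; production predictive-maintenance pipelines usually rely on richer features such as spectra or multi-sensor models.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Hypothetical helper: flags a reading that sits more than `threshold`
    standard deviations from the mean of the last `window` readings."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.history = deque(maxlen=window)  # oldest readings fall off automatically
        self.threshold = threshold
        self.min_samples = min_samples       # don't judge until we have some history

    def update(self, value: float) -> bool:
        """Score `value` against recent history, then add it to the history."""
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mean = sum(self.history) / len(self.history)
            var = sum((x - mean) ** 2 for x in self.history) / len(self.history)
            std = math.sqrt(var)
            if std > 0 and abs(value - mean) / std > self.threshold:
                is_anomaly = True
        self.history.append(value)
        return is_anomaly
```

The same pattern works on a factory motor or a conveyor bearing; what changes is which signal you feed it and who gets paged when it returns `True`.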

The engineering reality: pressure, packaging, and painful trade-offs

One detail from Unema’s work that should stick with every robotics builder: the economics of packaging drives engineering decisions.

Deep-sea electronics often live in sealed housings (commonly titanium cylinders). Smaller housings reduce cost and weight. But smaller housings also mean:

- tighter thermal margins

- harder maintenance and upgrades

- more complex EMI/grounding decisions

- fewer options for instrumentation expansion

Unema describes the constant trade-off between weight, size, and cost, and the need to isolate electronics to avoid grounding faults.

Here’s the broader automation lesson: AI features aren’t “just software.” They have hardware implications—compute, power, thermal design, connectors, ingress protection, serviceability, and the instrumentation you need for debugging.

A practical rule I’ve found useful

If you can’t answer these three questions, your AI robotics roadmap is probably fragile:

- What data will we rely on when sensors degrade? (fog, glare, biofouling, dust, vibration)

- What’s the fallback behavior when models are uncertain? (stop, slow, hand off, re-plan)

- How will we diagnose failures in the field without a lab? (logs, traces, health dashboards, replay)

Deep-sea operations force these answers early, because the environment punishes optimism.
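The second question is the easiest to write down explicitly. A minimal sketch, assuming the perception stack reports a calibrated confidence in [0, 1] plus a few health flags (all names and thresholds here are hypothetical), maps the current state to one of the four fallbacks listed above:

```python
from dataclasses import dataclass
from enum import Enum


class Fallback(Enum):
    CONTINUE = "continue"
    SLOW = "slow"
    REPLAN = "re-plan"
    HAND_OFF = "hand off to operator"
    STOP = "stop"


@dataclass
class PerceptionState:
    confidence: float         # model confidence in [0, 1], assumed calibrated
    sensors_healthy: bool     # e.g. camera not fouled, sonar returning data
    path_blocked: bool        # planner reports the current path is obstructed
    operator_available: bool  # a human can take over right now


def choose_fallback(s: PerceptionState,
                    slow_below: float = 0.8,
                    handoff_below: float = 0.5) -> Fallback:
    """Pick a conservative behavior. Thresholds are illustrative, not tuned."""
    if not s.sensors_healthy:
        # Degraded sensing: never keep moving on data we can't trust.
        return Fallback.HAND_OFF if s.operator_available else Fallback.STOP
    if s.path_blocked:
        return Fallback.REPLAN
    if s.confidence < handoff_below:
        return Fallback.HAND_OFF if s.operator_available else Fallback.STOP
    if s.confidence < slow_below:
        return Fallback.SLOW
    return Fallback.CONTINUE
```

The value is less in the code than in forcing the team to agree, before deployment, on the order of these checks and on what happens when no human is available.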

Tethers, bandwidth, and why autonomy becomes non-negotiable

Unema’s NOAA ROVs use several kilometers of cable containing just three single-mode optical fibers—and all comms and data must funnel through that constraint while instruments demand more throughput every year.

That detail matters because it’s the same story as edge robotics everywhere:

- Factories have network congestion.

- Warehouses have Wi‑Fi dead zones.

- Mining and offshore sites have limited uplink.

- Field service robots can’t depend on cloud latency.

When bandwidth is constrained or intermittent, autonomy stops being a nice-to-have. You need local decision-making, local safety behavior, and local perception.
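Part of that local decision-making is deciding what goes over the link at all. A minimal sketch, assuming a fixed per-tick byte budget and hypothetical priorities and topic names (real tethers and Wi-Fi links have their own framing and QoS mechanisms), sends safety-critical telemetry first and defers bulky streams:

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Message:
    priority: int                        # lower number = more important
    seq: int                             # tie-breaker: preserves arrival order
    size_bytes: int = field(compare=False)
    topic: str = field(compare=False)


def schedule(messages: list[Message], budget_bytes: int) -> tuple[list[Message], list[Message]]:
    """In priority order, send every message that still fits in this tick's budget.

    Messages that don't fit are skipped (smaller, lower-priority ones may still
    go out) and returned as `deferred` for retry, downsampling, or local storage.
    """
    heap = list(messages)  # copy so the caller's list is untouched
    heapq.heapify(heap)
    sent, deferred = [], []
    remaining = budget_bytes
    while heap:
        msg = heapq.heappop(heap)
        if msg.size_bytes <= remaining:
            sent.append(msg)
            remaining -= msg.size_bytes
        else:
            deferred.append(msg)
    return sent, deferred
```

With a 5,000-byte budget, a 200-byte vehicle-health packet, a 500-byte navigation update, and a 2,000-byte science record all go out, while a 50,000-byte video frame is deferred.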

What “good autonomy” looks like in practice

For most operators, autonomy shouldn’t mean “no humans.” It should mean:

- Humans choose goals; robots handle the stable, repeatable sub-tasks

- Robots explain what they’re doing and why (confidence, detected obstacles, recommended actions)

- Humans can take over instantly when the environment surprises the system

That’s as true for an ROV sampling coral as it is for an AMR navigating a busy picking aisle.
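Those three properties can be encoded in a very small arbitration step. A minimal sketch, assuming hypothetical `Command` and `AutonomyStatus` types and a simple “operator is actively commanding” flag, gives the human unconditional priority and makes autonomy explain itself every cycle:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    surge: float      # forward speed, m/s
    yaw_rate: float   # turn rate, rad/s
    source: str       # "operator" or "autonomy"


@dataclass
class AutonomyStatus:
    task: str             # current sub-task, e.g. "station keeping"
    confidence: float     # perception confidence in [0, 1]
    obstacles: list[str]  # detected obstacles, human-readable
    recommendation: str   # suggested next action for the operator


def arbitrate(auto_cmd: Command,
              status: AutonomyStatus,
              operator_cmd: Optional[Command],
              operator_active: bool) -> tuple[Command, str]:
    """Return the command to execute plus a one-line explanation for the UI."""
    if operator_active and operator_cmd is not None:
        # Takeover is unconditional: no confirmation step, no blending.
        return operator_cmd, f"MANUAL: operator in control (autonomy was: {status.task})"
    # Autonomy runs the sub-task but always reports what it is doing and why.
    obstacles = ", ".join(status.obstacles) or "none"
    explanation = (f"AUTO: {status.task}, confidence {status.confidence:.2f}, "
                   f"obstacles: {obstacles}, suggest: {status.recommendation}")
    return auto_cmd, explanation
```

Real systems often blend operator and autonomy commands instead of switching hard; the hard switch is shown here because it is the easiest behavior to reason about during an incident.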

Human-in-the-loop ops: the underrated AI product

The Spectrum profile describes a real operational workflow: scientists watch camera feeds and advise where to direct the vehicle, the pilot executes, and sometimes manipulator arms collect samples.

This is a template worth copying.

Most robotics teams over-invest in robot behaviors and under-invest in the operating experience:

- mission UI/UX

- annotation and event bookmarking

- shared situational awareness

- playback and incident review

- shift handover tooling

AI can improve all of these without pretending the robot is fully autonomous.

High-value AI features that don’t require “full autonomy”

If you’re trying to drive near-term ROI (and avoid science-fair demos), start here:

- Automatic event detection: “possible defect,” “unusual object,” “leak suspected,” “anomaly in vibration signature”

- Operator copilots: suggested next actions, checklists, safe speed limits, best camera angle

- Semantic search over video: “show me all frames with cracks on weld seams” or “all sightings of valve number 12”

- Confidence-aware controls: the robot slows down or increases standoff distance when perception confidence drops

Underwater exploration needs these because humans can’t watch everything all the time. So does every modern operations team.
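Confidence-aware control is one of the simplest of these to prototype. A minimal sketch, assuming a perception confidence in [0, 1] and hypothetical speed and standoff limits, scales the speed limit up and the standoff distance down linearly as confidence rises, and crawls with maximum standoff below a floor:

```python
def confidence_aware_limits(confidence: float,
                            max_speed: float = 0.5,      # m/s, hypothetical
                            min_speed: float = 0.05,     # m/s, hypothetical
                            base_standoff: float = 1.0,  # m, hypothetical
                            max_standoff: float = 3.0,   # m, hypothetical
                            floor: float = 0.3) -> tuple[float, float]:
    """Return (speed_limit, standoff_distance) for a given perception confidence."""
    c = min(max(confidence, 0.0), 1.0)  # clamp bad inputs into [0, 1]
    if c <= floor:
        # Barely trusting perception: creep and keep well clear.
        return min_speed, max_standoff
    t = (c - floor) / (1.0 - floor)     # 0 at the floor, 1 at full confidence
    speed = min_speed + t * (max_speed - min_speed)
    standoff = max_standoff - t * (max_standoff - base_standoff)
    return speed, standoff
```

A linear ramp is only one choice; the important property is that the limits move monotonically in the safe direction as confidence drops.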

Maintenance at sea is the blueprint for reliability engineering

Unema describes the reality: things break, and you fix them with what you have.

That’s not romantic. It’s a reliability requirement.

In robotics deployments, downtime cost isn’t theoretical:

- production lines lose output

- warehouses miss shipping cutoffs

- service fleets violate SLAs

Deep-sea ROV teams cope by designing for field repair and by building strong maintenance routines—often done in winter “refit” seasons, followed by summer operations.

What industrial robotics teams should steal from expedition ops

- Pre-mission check discipline: not just “does it power on,” but sensor validation, actuator range tests, and comms stress checks.

- Spare strategy based on failure modes: carry what fails most and what’s hardest to replace on-site.

- Configuration control: know exactly which firmware/model/config is running on which unit.

- Failure rehearsal: practice what happens when perception fails, when comms drop, when a joint overheats.

AI adds a fifth requirement: model lifecycle management (training data lineage, evaluation sets, drift monitoring, and rollback plans).
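Configuration control and model rollback can start as a simple per-unit record. A minimal sketch, assuming a hypothetical `UnitManifest` type and configs stored as plain dicts, fingerprints the config so two units can be compared at a glance and keeps a model history so a bad deployment can be reverted:

```python
import hashlib
import json
from dataclasses import dataclass, field


def config_fingerprint(config: dict) -> str:
    """Stable short hash of a config dict (keys sorted, so order doesn't matter)."""
    blob = json.dumps(config, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()[:12]


@dataclass
class UnitManifest:
    unit_id: str
    firmware: str
    model_version: str
    config_hash: str
    model_history: list[str] = field(default_factory=list)

    def deploy_model(self, new_version: str) -> None:
        """Record the outgoing model so it can be restored later."""
        self.model_history.append(self.model_version)
        self.model_version = new_version

    def rollback_model(self) -> str:
        """Restore the previously deployed model; raise if there is none."""
        if not self.model_history:
            raise RuntimeError(f"{self.unit_id}: no earlier model to roll back to")
        self.model_version = self.model_history.pop()
        return self.model_version
```

Training data lineage, evaluation sets, and drift monitoring need more than this, but a manifest like this answers the first field question: what exactly is running on this unit?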

From ocean exploration to commercial automation: where the market is heading

Ocean exploration is mission-driven, but the same technologies are spilling into commercial use cases:

- Offshore energy: inspection of subsea infrastructure, corrosion detection, leak monitoring

- Ports and maritime logistics: hull inspection, propeller checks, underwater construction support

- Environmental monitoring: habitat mapping, carbon cycle observation, biodiversity surveys

- Defense and security: mine countermeasures, surveillance, search and recovery

The trend line is clear: more sensing, more data, and more automation per operator. AI is the only practical way to scale that.

And here’s the contrarian point: the biggest value may come from AI that helps humans make decisions faster, not from chasing full autonomy too early.

The winning robotics teams build systems that keep working when the environment is rude.

People also ask: “What skills matter most in AI robotics roles?”

Unema’s career path (manufacturing automation → industrial robotics → underwater ROV engineering) reflects what I see across the industry: the strongest robotics engineers are cross-disciplinary.

If you’re hiring—or upskilling—prioritize these skill clusters:

- Systems thinking: interfaces, failure modes, integration risk

- Field diagnostics: logging, instrumentation, root-cause analysis

- Controls + perception basics: even if specialists exist, teams move faster when everyone speaks the language

- Data discipline: dataset versioning, labeling strategy, evaluation design

- Operational empathy: understanding the operator workflow and designing for it

AI talent matters, but AI without operations is a prototype.

What to do next if you’re evaluating AI for robotics programs

If you’re leading an automation roadmap for 2026 budgets (and you probably are, given it’s late December and planning season is real), use a “deep-sea mindset” for your next project review:

- Pick one high-friction workflow and instrument it: for example, inspection triage, exception handling, or rework detection.

- Add AI where it reduces operator load, not where it makes slides look better. Event detection, assistive autonomy, or semantic search usually beats “fully autonomous navigation” as a first win.

- Demand measurable reliability targets: uptime, mean time to recovery, false alarm rate, and intervention rate.

- Treat deployment as the product: plan monitoring, rollback, retraining cadence, and support runbooks before the pilot starts.
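Those reliability targets are only useful if everyone computes them the same way. A minimal sketch, assuming a hypothetical per-period log and one particular set of definitions (uptime over scheduled hours, false alarm rate as the share of alerts reviewers marked false, interventions per completed task), might look like this:

```python
from dataclasses import dataclass


@dataclass
class PeriodLog:
    scheduled_hours: float     # hours the robot was supposed to be in service
    repair_hours: list[float]  # one entry per failure: time to restore service
    alerts_raised: int         # automated alerts shown to operators
    alerts_false: int          # alerts that reviewers marked as false
    tasks_completed: int       # missions, picks, inspections, etc.
    interventions: int         # times a human had to step in


def reliability_metrics(log: PeriodLog) -> dict[str, float]:
    """Compute headline reliability numbers; zero denominators yield 0.0."""
    downtime = sum(log.repair_hours)
    failures = len(log.repair_hours)
    return {
        "uptime": (log.scheduled_hours - downtime) / log.scheduled_hours,
        "mttr_hours": downtime / failures if failures else 0.0,
        "false_alarm_rate": log.alerts_false / log.alerts_raised if log.alerts_raised else 0.0,
        "intervention_rate": log.interventions / log.tasks_completed if log.tasks_completed else 0.0,
    }
```

For example, 720 scheduled hours with two failures taking 2 and 4 hours to fix, 10 false alerts out of 50, and 12 interventions across 400 tasks gives roughly 99.2% uptime, a 3-hour MTTR, a false alarm rate of 0.2, and 0.03 interventions per task.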

If you want leads from this (and not just applause), offer a concrete next step: a readiness assessment, an on-site workflow audit, or a short proof-of-value engagement tied to one KPI.

The ocean doesn’t care about your roadmap. Your factory floor won’t either.