Learn how the gateway + child bot pattern improves AI call steering, routing accuracy, and self-service IVR performance at enterprise scale.

AI Call Steering at Scale: The Gateway + Child Bot Pattern

NatWest had a problem that sounds boring until you’ve lived it: 1,600+ distinct customer intents coming in through voice, every day, across a major bank’s contact center. That’s not “press 1 for balance” territory. That’s people saying things like “I need to stop a payment,” “my card’s been swallowed,” “can you explain this charge,” or “I’m traveling and my card’s declining”—all in their own words, in a hurry, often stressed.

Most companies get this wrong by trying to build one giant NLU model that recognizes everything. It becomes hard to tune, easy to break, and almost impossible to improve quickly without risking collateral damage.

NatWest’s approach—using Amazon Connect with a federated Amazon Lex architecture—shows a cleaner pattern that applies far beyond banking: a gateway bot to identify the domain, then specialist child bots to do the precise work. If you’re building AI self-service IVR or modernizing a contact center, this is one of the most practical designs you can copy.

Why “one bot for everything” fails in real contact centers

If you’re aiming for accurate intent classification and fast routing, a single monolithic bot is usually the wrong bet.

Amazon Lex guidance (and plenty of real-world scars) points to a simple truth: NLU quality drops when you cram too many intents into one model. Overlapping utterances become more likely (“payment,” “transfer,” “balance,” and “transaction” can collide), and tuning one area can degrade another.

For NatWest, the stakes weren’t academic:

- Misclassified intent means the caller lands in the wrong flow.

- That leads to transfers, repeated authentication, repeated explanations, and longer average handle time (AHT).

- More transfers and longer calls hit staffing, queues, and abandonment—especially painful during seasonal surges (think end-of-year spending disputes, travel-related card issues, and post-holiday fraud spikes).

Here’s the blunt version: the cost of a wrong route is often higher than the cost of an extra clarifying question. Contact centers that optimize only for “shortest IVR” routinely create longer end-to-end journeys.

The hidden operational cost: testing becomes the bottleneck

Monolithic intent models create a delivery trap. Every change requires broad regression testing because everything is interconnected.

NatWest reported 100+ changes delivered through rapid tuning cycles because they could test and improve child bots in isolation. That’s the kind of agility most contact center teams want, and almost none get.

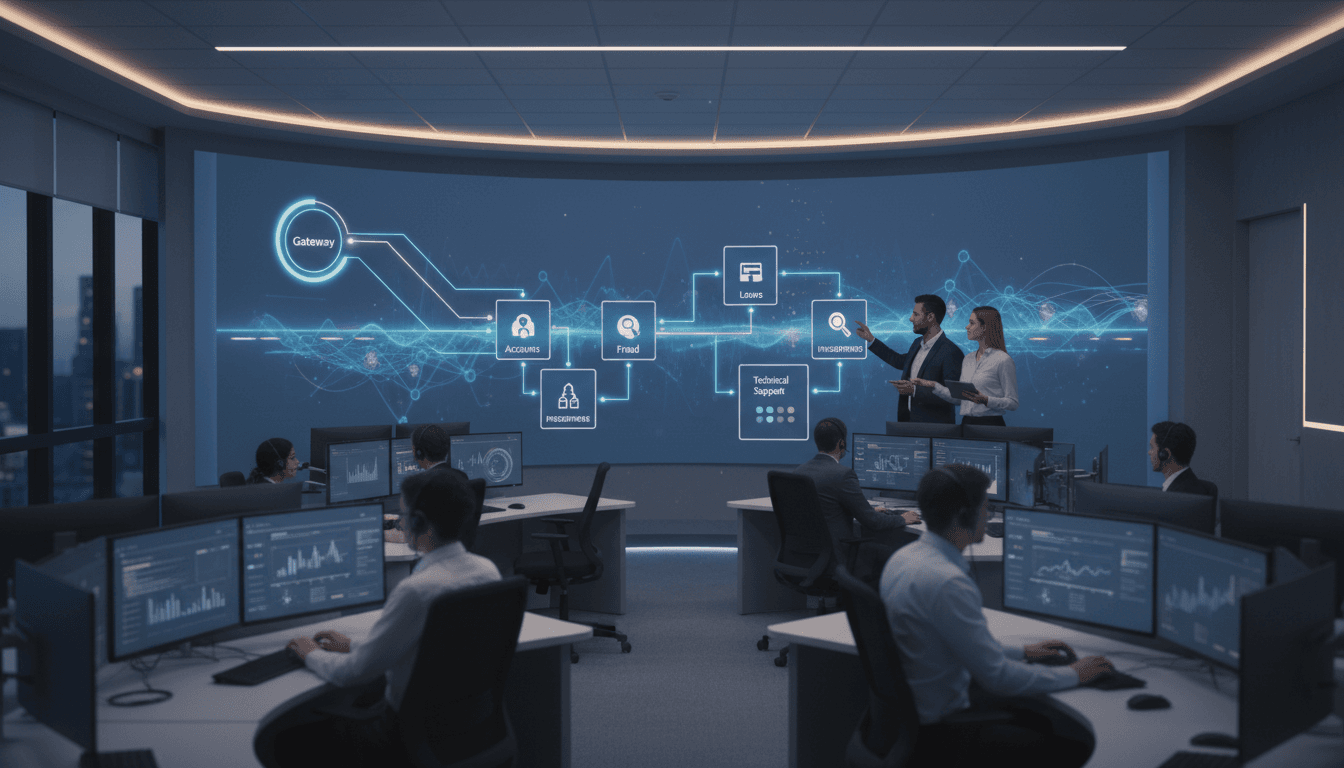

The federated Lex architecture: gateway bot + specialist child bots

The core design is simple:

Use one “Gateway” bot to catch the broad category, then hand off to a domain-specific “Child” bot to identify the exact intent and finish the job.

NatWest implemented this with Amazon Connect contact flows orchestrating multiple Lex bots, with AWS Lambda acting as the glue.

How the call flow works (in plain English)

- A customer calls in, and Amazon Connect starts the contact flow.

- Connect invokes a Gateway Lex bot to capture the high-level intent category (like “balance” or “payment”).

- The Gateway triggers a Lambda code hook.

- Lambda looks up which child bot should handle that category.

- Lambda returns the child bot identifier (ARN) plus the customer transcript.

- A second Lambda invokes the child bot using the Lex RecognizeText API, passing the transcript so the customer doesn’t repeat themselves.

- The Child bot asks a small number of targeted questions to pinpoint the granular intent.

This isn’t just “routing.” It’s structured intent discovery.
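The handoff in the last two steps can be sketched in Python. This is a minimal illustration, not NatWest’s code. It assumes the stored identifier is a Lex V2 bot-alias ARN of the form arn:aws:lex:<region>:<account>:bot-alias/<botId>/<botAliasId>, because RecognizeText takes a bot ID and an alias ID rather than an ARN. The runtime client is passed in (in Lambda it would typically be boto3.client("lexv2-runtime", region_name=...)); the en_GB locale and the function names are assumptions.

```python
import re
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Tuple

# Assumed Lex V2 alias ARN shape: arn:aws:lex:<region>:<account>:bot-alias/<botId>/<botAliasId>
_ALIAS_ARN = re.compile(
    r"^arn:aws:lex:(?P<region>[a-z0-9-]+):(?P<account>\d{12}):"
    r"bot-alias/(?P<bot_id>[A-Za-z0-9]+)/(?P<alias_id>[A-Za-z0-9]+)$"
)


@dataclass(frozen=True)
class ChildBotTarget:
    region: str
    bot_id: str
    alias_id: str


def parse_alias_arn(arn: str) -> ChildBotTarget:
    """Split a child bot's alias ARN into the IDs RecognizeText expects."""
    match = _ALIAS_ARN.match(arn)
    if match is None:
        raise ValueError(f"not a Lex V2 bot-alias ARN: {arn!r}")
    return ChildBotTarget(match["region"], match["bot_id"], match["alias_id"])


def hand_off(
    runtime_client: Any,
    child_alias_arn: str,
    session_id: str,
    transcript: str,
    locale_id: str = "en_GB",  # assumption: UK English bots
) -> Tuple[Optional[str], List[Dict[str, Any]]]:
    """Replay the caller's words to the child bot so they don't have to repeat them."""
    target = parse_alias_arn(child_alias_arn)
    response = runtime_client.recognize_text(
        botId=target.bot_id,
        botAliasId=target.alias_id,
        localeId=locale_id,
        sessionId=session_id,
        text=transcript,
    )
    intent = response.get("sessionState", {}).get("intent", {})
    return intent.get("name"), response.get("messages", [])
```

Reusing the Amazon Connect contact ID as the Lex sessionId is one way to keep gateway and child turns joinable for reporting later.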

Why the gateway pattern improves intent classification

The gateway bot only needs to distinguish between a relatively small number of domains (often 10–30). That’s a much easier classification problem than 1,600 intents.

Once the domain is known, the child bot deals with a narrower slice of language, so:

- utterance overlap drops

- training sets are easier to manage

- each intent needs fewer training examples to reach good precision

- changes stay local to the domain

If you’re chasing higher containment in AI self-service IVR, this is one of the most reliable ways to get there.

Design details that matter (and prevent ugly customer experiences)

A federated bot setup only works if the handoff is invisible to the customer. NatWest’s implementation included several tactics worth stealing.

Use a fast handoff to avoid dead air

Lex bots have default timeouts while waiting for user input. But in this architecture, the child bot is invoked internally based on what the customer already said.

NatWest reduced that “waiting” pause by setting the session attribute x-amz-lex:audio:start-timeout-ms to 1 millisecond during the internal transition.

Then they reset it once the child bot is active. The effect is subtle but important: no awkward silence that makes callers think the system froze.
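A minimal sketch of that set-then-reset step, written as helpers a Lambda code hook could apply to its session attributes. The attribute key is taken from the text above; the Lex V2 documentation also describes intent- and slot-scoped forms of this key, so check which form your bot expects. Treating “reset” as removing the override is an assumption: the idea is that the bot then falls back to its configured timeout.

```python
from typing import Any, Dict

START_TIMEOUT_ATTR = "x-amz-lex:audio:start-timeout-ms"  # key as named in the text


def with_fast_handoff(session_attributes: Dict[str, str]) -> Dict[str, str]:
    """Copy of the attributes with a 1 ms start timeout for the internal transition."""
    updated = dict(session_attributes)
    updated[START_TIMEOUT_ATTR] = "1"  # Lex session attribute values are strings
    return updated


def after_handoff(session_attributes: Dict[str, str]) -> Dict[str, str]:
    """Copy of the attributes with the override removed (assumed to restore the bot's default)."""
    updated = dict(session_attributes)
    updated.pop(START_TIMEOUT_ATTR, None)
    return updated


def delegate(event: Dict[str, Any], session_attributes: Dict[str, str]) -> Dict[str, Any]:
    """Lex V2 code-hook response that keeps the current intent and lets Lex pick the next step."""
    state = dict(event["sessionState"])
    state["sessionAttributes"] = session_attributes
    state["dialogAction"] = {"type": "Delegate"}
    return {"sessionState": state}
```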

Don’t fetch bot metadata at runtime the hard way

A common engineering mistake is calling build-time APIs (or anything throttle-prone) during live traffic.

NatWest stored child bot ARNs in AWS Systems Manager Parameter Store (or DynamoDB) so the Gateway’s Lambda could look them up quickly and reliably.

Practical guidance you can apply:

- treat routing metadata like configuration

- keep it in a fast, scalable store

- version it and change-control it like you would IVR prompts
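Here is one way to apply that guidance in the Gateway’s Lambda: a small read-through cache in front of Parameter Store, so warm invocations skip the network call entirely. The parameter path prefix /ivr/routing/<domain>, the five-minute TTL, and the class name are hypothetical; ssm.get_parameter is the standard boto3 call.

```python
import time
from typing import Callable, Dict, Tuple


class RouteTable:
    """Caches gateway-domain -> child-bot ARN lookups so live traffic rarely hits the store."""

    def __init__(
        self,
        fetch: Callable[[str], str],
        ttl_seconds: float = 300.0,  # hypothetical TTL
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[str, float]] = {}

    def child_bot_for(self, domain: str) -> str:
        now = self._clock()
        hit = self._cache.get(domain)
        if hit is not None and now - hit[1] < self._ttl:
            return hit[0]  # fresh cached value: no call to the store
        value = self._fetch(domain)
        self._cache[domain] = (value, now)
        return value


def ssm_fetcher(prefix: str = "/ivr/routing") -> Callable[[str], str]:
    """Builds a fetch function backed by Parameter Store (hypothetical path prefix)."""
    import boto3  # imported lazily so the cache itself can be tested without AWS

    ssm = boto3.client("ssm")

    def fetch(domain: str) -> str:
        return ssm.get_parameter(Name=f"{prefix}/{domain}")["Parameter"]["Value"]

    return fetch
```

Creating the RouteTable at module level lets it survive across warm Lambda invocations; the TTL bounds how long a routing change takes to reach live traffic.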

Retry logic belongs at the gateway

If the gateway mishears or the customer gives an ambiguous opening (“It’s about my account”), passing that confusion to a child bot just wastes time.

NatWest’s strategy: retry and clarify at the gateway until a valid high-level domain is captured.

A simple, effective gateway reprompt pattern:

- Ask for the reason for the call

- If confidence is low, ask a constrained clarifier (e.g., “Is this about a card, a payment, or online banking?”)

- Only then route to a child bot

This is how you reduce transfers without turning your IVR into an interrogation.
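The reprompt pattern above reduces to a small decision function. This sketch is illustrative: the 0.7 confidence threshold, the three-attempt limit, and the fallback to an agent after repeated misses are assumptions, not NatWest’s published values. In Lex V2 the confidence would typically come from the nluConfidence score on the top interpretation.

```python
from dataclasses import dataclass
from typing import Collection, Optional

# Constrained clarifier, using the example wording from the list above.
CLARIFIER = "Is this about a card, a payment, or online banking?"


@dataclass(frozen=True)
class GatewayDecision:
    action: str  # "route", "clarify", or "agent"
    domain: Optional[str] = None
    prompt: Optional[str] = None


def decide(
    domain: Optional[str],
    confidence: float,
    attempt: int,
    known_domains: Collection[str],
    threshold: float = 0.7,  # hypothetical; tune from real traffic
    max_attempts: int = 3,  # hypothetical escape hatch
) -> GatewayDecision:
    """Route only once a known domain has been captured with enough confidence."""
    if domain in known_domains and confidence >= threshold:
        return GatewayDecision("route", domain=domain)
    if attempt < max_attempts:
        # Stay in the gateway: don't pass ambiguity down to a child bot.
        return GatewayDecision("clarify", prompt=CLARIFIER)
    # Assumption: after repeated misses, hand to an agent rather than loop.
    return GatewayDecision("agent")
```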

Confirm intent in the child bot, not the gateway

A lot of teams add confirmation prompts too early (“Did you mean balance?”), which creates friction and still doesn’t solve granularity.

NatWest avoided confirmation prompts in the gateway and instead used confirmation slots in the child bot.

That’s a smart trade:

- gateway focuses on domain selection

- child bot confirms the specific job-to-be-done

Result: fewer confirmations overall, and the confirmations that do occur are better informed.
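On the child-bot side, a confirmation slot is just another slot the code hook elicits before fulfilment. The sketch below builds a Lex V2 ElicitSlot response and reads the answer back. The intent and slot names (StopPayment, ConfirmStop) are hypothetical, and it assumes a custom yes/no slot type that resolves caller phrasings to "yes" or "no".

```python
from typing import Any, Dict, Optional


def elicit_confirmation(
    intent: Dict[str, Any],
    slot_name: str,
    question: str,
    session_attributes: Optional[Dict[str, str]] = None,
) -> Dict[str, Any]:
    """Lex V2 code-hook response asking the caller to fill a confirmation slot."""
    return {
        "sessionState": {
            "sessionAttributes": dict(session_attributes or {}),
            "dialogAction": {"type": "ElicitSlot", "slotToElicit": slot_name},
            "intent": {**intent, "state": "InProgress"},
        },
        "messages": [{"contentType": "PlainText", "content": question}],
    }


def confirmed(intent: Dict[str, Any], slot_name: str) -> Optional[bool]:
    """True for "yes", False for "no", None if the slot is empty or unrecognized."""
    slot = (intent.get("slots") or {}).get(slot_name)
    if not slot or not slot.get("value"):
        return None
    answer = (slot["value"].get("interpretedValue") or "").lower()
    if answer == "yes":
        return True
    if answer == "no":
        return False
    return None
```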

Analytics: federated bots don’t excuse fragmented reporting

Federation can create a measurement mess if you’re not careful. Two bots means two sets of logs and metrics to reconcile, and confusion during QA.

NatWest’s answer was straightforward: correlate gateway and child interactions using session IDs and shared attributes.

If you want this to drive real contact center optimization, build your reporting around questions leaders actually ask:

- Which gateway domains have the lowest containment?

- Which child bots generate the most fallbacks?

- Where do customers abandon—before or after handoff?

- Which intents create the most agent escalations?

- What’s the AHT difference between “routed correctly” vs “routed incorrectly then transferred”?
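A minimal sketch of the correlation step: group gateway and child events by a shared session ID, then label each journey so the abandonment question above can be answered per gateway domain. The BotEvent record shape and the outcome labels are hypothetical stand-ins for whatever your conversation logs actually contain, and events are assumed to arrive in chronological order within each session.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class BotEvent:
    session_id: str  # shared across gateway and child (e.g. the Connect contact ID)
    bot: str  # "gateway" or "child"
    domain: Optional[str]  # gateway domain, copied into child events as a shared attribute
    outcome: str  # hypothetical labels: "handoff", "fallback", "abandoned", "contained", "escalated"


def journey_outcomes(events: Iterable[BotEvent]) -> Dict[str, Counter]:
    """Group events by session, then tally each journey's end state per gateway domain."""
    sessions: Dict[str, List[BotEvent]] = defaultdict(list)
    for event in events:
        sessions[event.session_id].append(event)

    per_domain: Dict[str, Counter] = defaultdict(Counter)
    for journey in sessions.values():
        domain = next((e.domain for e in journey if e.domain), "unknown")
        reached_child = any(e.bot == "child" for e in journey)
        last = journey[-1].outcome  # relies on chronological order
        if last == "abandoned":
            label = "abandoned_after_handoff" if reached_child else "abandoned_before_handoff"
        else:
            label = last
        per_domain[domain][label] += 1
    return per_domain
```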

Here’s the stance I take: If you can’t measure the journey end-to-end, you didn’t finish the architecture.

A practical playbook for implementing the gateway + child bot pattern

You don’t need 1,600 intents to benefit from this. If your organization has:

- multiple product lines

- multiple authentication paths

- multiple back-end systems

- frequent policy changes

…federation buys you speed and safety.

Step 1: Design your domains like a contact center operator would

Your gateway domains should map to how customers talk, not how your org chart looks.

Good domains:

- “Card problems”

- “Payments and transfers”

- “Fraud and disputes”

- “Online and mobile banking access”

Risky domains:

- “Retail banking operations”

- “Deposits servicing”

- “Consumer product support”

If a caller wouldn’t say it, don’t make it a gateway domain.

Step 2: Keep each child bot small on purpose

A practical target is 20–50 intents per child bot. You’re aiming for clarity and separation, not maximum density.

When a child bot grows too large, split it by sub-domain (for example, “payments” into “make a payment,” “stop a payment,” “payment not received,” “refunds”).

Step 3: Create a repeatable onboarding process

NatWest used a naming convention like:

nlcs-<gateway-intent>-child-bot

And stored routing metadata using a consistent parameter naming pattern.

The lesson isn’t the exact names—it’s that you need a factory, not a one-off project. If onboarding a new intent domain takes a month, you’ll stop improving.
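A factory can start as a few lines of code that derive names and enforce the size guideline from Step 2. This sketch applies the nlcs-<gateway-intent>-child-bot convention from the text; the Parameter Store path is hypothetical because the source doesn’t publish NatWest’s parameter pattern, and the 50-intent ceiling is the upper end of the target above.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple

MAX_CHILD_INTENTS = 50  # upper end of the 20–50 target in Step 2
_NON_ALNUM = re.compile(r"[^a-z0-9]+")


def slugify(gateway_intent: str) -> str:
    """Lowercase and collapse anything non-alphanumeric into single hyphens."""
    return _NON_ALNUM.sub("-", gateway_intent.lower()).strip("-")


@dataclass(frozen=True)
class ChildBotSpec:
    gateway_intent: str
    intents: Tuple[str, ...]

    @property
    def bot_name(self) -> str:
        # Follows the nlcs-<gateway-intent>-child-bot convention.
        return f"nlcs-{slugify(self.gateway_intent)}-child-bot"

    @property
    def routing_parameter(self) -> str:
        # Hypothetical Parameter Store path, not NatWest's actual pattern.
        return f"/nlcs/routing/{slugify(self.gateway_intent)}/child-bot-alias-arn"


def validate(spec: ChildBotSpec) -> List[str]:
    """Return onboarding problems; an empty list means the spec can proceed."""
    problems: List[str] = []
    if not spec.intents:
        problems.append("child bot has no intents")
    if len(spec.intents) > MAX_CHILD_INTENTS:
        problems.append(f"{len(spec.intents)} intents; consider splitting by sub-domain")
    if len(set(spec.intents)) != len(spec.intents):
        problems.append("duplicate intent names")
    return problems
```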

Step 4: Tune like a product team, not a project team

Federation enables rapid iteration, but only if you operationalize it:

- weekly review of top failure utterances

- targeted training set expansion per child bot

- A/B testing prompt wording inside a domain

- fast rollback path per bot alias

That last point matters in regulated environments: being able to roll back a single domain bot is far safer than rolling back your entire self-service brain.
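Rolling back one domain can be as small as repointing that child bot’s alias at a known-good version. This sketch uses the boto3 lexv2-models calls describe_bot_alias and update_bot_alias through an injected client. Carrying the existing alias settings forward, so that only botVersion changes, is a precaution based on the assumption that the update call sets the alias’s full configuration; verify that against the Lex V2 API reference before relying on it.

```python
from typing import Any

# Alias settings copied forward unchanged (assumption: update replaces the full config).
_CARRY_FORWARD = (
    "description",
    "botAliasLocaleSettings",
    "conversationLogSettings",
    "sentimentAnalysisSettings",
)


def roll_back_alias(models_client: Any, bot_id: str, bot_alias_id: str, known_good_version: str) -> bool:
    """Point one child bot's alias at a known-good version. Returns False if already there."""
    current = models_client.describe_bot_alias(botId=bot_id, botAliasId=bot_alias_id)
    if current.get("botVersion") == known_good_version:
        return False

    kwargs = {
        "botId": bot_id,
        "botAliasId": bot_alias_id,
        "botAliasName": current["botAliasName"],
        "botVersion": known_good_version,
    }
    for key in _CARRY_FORWARD:
        if current.get(key) is not None:
            kwargs[key] = current[key]

    models_client.update_bot_alias(**kwargs)
    return True
```

Because the rollback touches a single alias, the gateway and every other child bot keep serving traffic unchanged.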

What this case study means for AI in customer service in 2026

Voice self-service is back in the spotlight because companies are finally pairing it with NLU that actually works. The organizations that win won’t be the ones with the flashiest demos. They’ll be the ones with:

- higher routing accuracy

- fewer transfers

- shorter time-to-resolution

- measurable, continuous improvement

NatWest’s federated approach with Amazon Connect and Amazon Lex is a strong pattern for any enterprise contact center that’s outgrown its legacy IVR. It treats intent classification as an engineering system: modular, testable, observable, and designed for change.

If you’re planning your 2026 roadmap for AI in customer service, here’s the question to pressure-test your strategy: Are you building one bot to rule them all, or a system that can actually evolve every week without breaking?