Learn how NatWest handled 1,600+ banking intents with AI call steering using Amazon Connect and Lex—improving routing accuracy and self-service scale.

AI Call Steering for 1,600 Banking Intents (No Mess)

NatWest had a problem most contact center teams don’t talk about until it’s already hurting customers: 1,600+ distinct reasons people call—and a legacy call steering engine that needed modernization without breaking the customer journey.

Here’s the uncomfortable truth: when intent detection fails, everything downstream gets more expensive. Calls get misrouted, customers repeat themselves, agents inherit confused context, and handle times creep up. And in banking—especially in peak seasons like December, when customers check balances, chase card deliveries, dispute transactions, and manage travel notifications—those small failures stack up fast.

NatWest’s approach is a strong, practical blueprint for any financial services org (or any high-intent enterprise contact center) trying to scale AI-powered self-service without turning their bot into a giant, fragile monolith. They used Amazon Connect with Amazon Lex—but the real lesson isn’t “use these tools.” It’s how they designed for accuracy, change velocity, and analytics when the intent catalog is enormous.

Why “one giant bot” usually fails in banking self-service

Answer first: A single, monolithic NLU bot breaks down at scale because intent overlap increases, accuracy drops, and every change becomes risky.

Amazon Lex (and most NLU systems) performs best when it can cleanly separate intents. When you cram hundreds or thousands of intents into one model, you create two common failure modes:

- Intent collision: “balance inquiry,” “available balance,” “pending card transaction,” and “recent transactions” start to blur—especially over voice, where callers are informal and often stressed.

- Operational drag: A change to one domain (say, disputes) forces you to re-test a huge surface area. Teams slow down because they’re afraid of breaking something.

NatWest faced this at an extreme level: over 1,600 intents. Even if a platform technically supports that many intents in one bot, practical accuracy and maintainability become the bottlenecks.

If you’re running a contact center, this matters because misclassification is a cost multiplier:

- More transfers (and more abandoned calls)

- Longer average handle time (AHT)

- Lower containment in self-service

- Lower CSAT, because customers feel “the system isn’t listening”

The fix isn’t adding more training phrases forever. The fix is architecture.

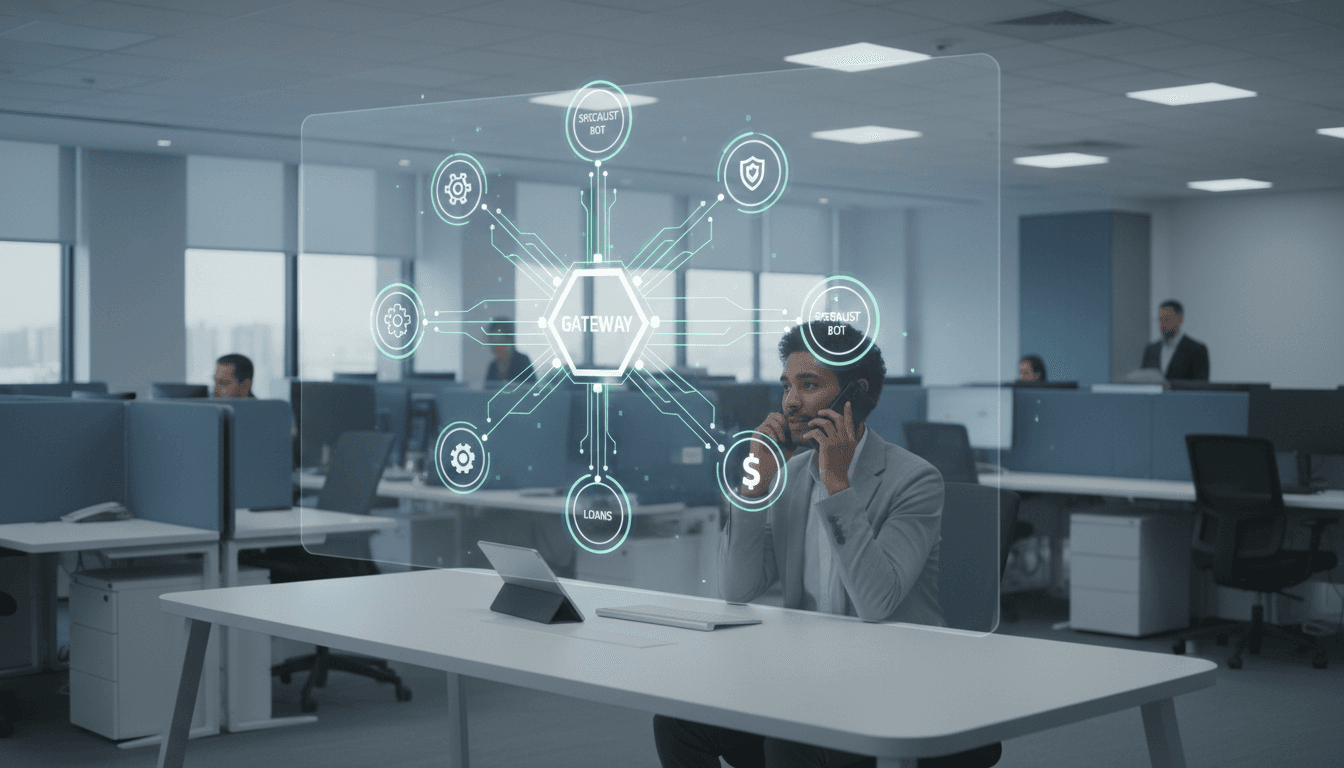

The federated bot pattern: one “Gateway” + many specialist bots

Answer first: NatWest used a federated architecture where a small “Gateway” bot handles high-level routing, then hands off to a domain-specific “child bot” for precise intent resolution.

Think of it like a well-run front desk:

- The Gateway bot quickly identifies the general category (“balance,” “payment,” “card,” “fraud,” “dispute,” etc.).

- A child bot takes over for that category and asks the right follow-ups to land on the exact intent.

This solves the core scaling problem by keeping each bot in a manageable “intent neighborhood.” It also makes the experience feel more natural for callers because the second-stage bot can ask domain-appropriate clarifying questions.

What actually happens in the call flow (in plain English)

NatWest’s flow (implemented with Amazon Connect + Amazon Lex + AWS Lambda) works like this:

- A caller enters an Amazon Connect contact flow.

- Connect invokes the Gateway Lex bot to capture a high-level intent.

- A Lex code hook (Lambda) looks up which child bot should handle that category.

- The system stores the chosen child bot reference and the customer transcript in attributes.

- A second Lambda invokes the child bot using the Lex RecognizeText API, passing the transcript so the customer doesn’t have to repeat themselves.

- The child bot narrows down to the specific intent and continues the conversation.
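Here is a minimal sketch of the middle steps of that flow, not NatWest's actual code. It assumes the Lex V2 Lambda event shape and the RecognizeText call from the Lex V2 runtime. The bot IDs, the attribute names (childBotId, callerTranscript and so on) and the in-memory CHILD_BOTS map are all hypothetical. RecognizeText takes a bot ID and alias ID rather than a full ARN, so the sketch stores those pieces.

```python
from typing import Any, Dict

# Hypothetical category -> child bot mapping. In practice this would come from
# a config store; the IDs below are placeholders, not real bots.
CHILD_BOTS: Dict[str, Dict[str, str]] = {
    "Payments": {"botId": "PAYBOT0001", "botAliasId": "ALIAS00001", "localeId": "en_GB"},
    "Cards": {"botId": "CARDBOT001", "botAliasId": "ALIAS00002", "localeId": "en_GB"},
}


def gateway_code_hook(event: Dict[str, Any]) -> Dict[str, Any]:
    """Gateway code hook: pick the child bot and keep the caller's transcript."""
    session_state = event["sessionState"]
    intent = session_state["intent"]
    attrs = dict(session_state.get("sessionAttributes") or {})

    # Assumption: the Gateway's high-level intent name doubles as the category.
    child = CHILD_BOTS[intent["name"]]
    attrs["childBotId"] = child["botId"]
    attrs["childBotAliasId"] = child["botAliasId"]
    attrs["childLocaleId"] = child["localeId"]
    attrs["callerTranscript"] = event.get("inputTranscript", "")

    # Close the Gateway dialog so the contact flow can move on to the handoff.
    return {
        "sessionState": {
            "sessionAttributes": attrs,
            "dialogAction": {"type": "Close"},
            "intent": {**intent, "state": "Fulfilled"},
        }
    }


def invoke_child_bot(lex_runtime: Any, attrs: Dict[str, str], session_id: str) -> Dict[str, Any]:
    """Second Lambda: replay the caller's words to the child bot.

    `lex_runtime` is expected to behave like boto3.client("lexv2-runtime").
    """
    return lex_runtime.recognize_text(
        botId=attrs["childBotId"],
        botAliasId=attrs["childBotAliasId"],
        localeId=attrs["childLocaleId"],
        sessionId=session_id,
        text=attrs["callerTranscript"],
    )
```

Passing the client in as an argument keeps the handoff logic testable without AWS credentials.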

A key design choice: NatWest stored child bot ARNs in a reliable config store (SSM Parameter Store or DynamoDB) instead of calling build-time APIs at runtime. That’s the kind of detail that prevents throttling and weird outages later.
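As a rough illustration of that config lookup, here is a sketch that reads a child bot reference from SSM Parameter Store and caches it in memory, so a warm Lambda does not hit the store on every call. The parameter path prefix and the function name are my assumptions. The only AWS call used is get_parameter.

```python
from typing import Any, Dict

# Hypothetical parameter path prefix; the real naming scheme is not given in the source.
PARAM_PREFIX = "/call-steering/child-bots/"

# Module-level cache survives across invocations of a warm Lambda container.
_cache: Dict[str, str] = {}


def child_bot_arn(ssm: Any, category: str) -> str:
    """Return the child bot reference for a category, reading SSM at most once per category.

    `ssm` is expected to behave like boto3.client("ssm").
    """
    if category not in _cache:
        response = ssm.get_parameter(Name=PARAM_PREFIX + category.lower())
        _cache[category] = response["Parameter"]["Value"]
    return _cache[category]
```

A cache with no expiry is the simplest option. If bot aliases change often, a time-based refresh would be the obvious next step.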

Snippet-worthy takeaway: “Use the Gateway bot to pick a domain; use the child bot to pick the exact intent.”

Design details that protect customer experience (and your KPIs)

Answer first: The “small” implementation choices—timeouts, retries, and where you confirm intent—decide whether callers trust self-service.

A federated design can still feel clunky if you don’t handle transitions cleanly. NatWest’s approach includes three tactics that are worth copying.

1) Remove dead air during internal handoffs

When a child bot is invoked internally, the platform may still behave like it’s waiting for user input, creating a noticeable pause. NatWest avoided that by setting a session attribute (x-amz-lex:audio:start-timeout-ms) to an extremely low value during the internal handoff, then resetting it for normal conversation.

This matters because silence reads as failure on voice calls. Even a short unexplained pause can prompt callers to hang up.
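A small sketch of that toggle, using the attribute name exactly as given above. Both timeout values are my assumptions, and in Lex V2 the key is usually suffixed with an intent and slot name (or wildcards), so check the Lex documentation for the exact form your bot needs.

```python
from typing import Dict

# Attribute name as written in the text; the exact key format for your bot is an assumption.
START_TIMEOUT_KEY = "x-amz-lex:audio:start-timeout-ms"

HANDOFF_TIMEOUT_MS = 1           # assumed "extremely low" value for the internal hop
CONVERSATION_TIMEOUT_MS = 4000   # assumed value for normal caller turns


def attrs_for_handoff(attrs: Dict[str, str]) -> Dict[str, str]:
    """Copy of the session attributes with the start timeout dropped for the handoff."""
    return {**attrs, START_TIMEOUT_KEY: str(HANDOFF_TIMEOUT_MS)}


def attrs_for_conversation(attrs: Dict[str, str]) -> Dict[str, str]:
    """Copy of the session attributes with the start timeout restored for live turns."""
    return {**attrs, START_TIMEOUT_KEY: str(CONVERSATION_TIMEOUT_MS)}
```

Session attribute values are strings, which is why the numbers are converted before being stored.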

2) Retry at the top, not in the weeds

If the Gateway bot can’t confidently pick a category, handing off to a child bot will fail too—just with extra steps.

NatWest built retry/clarification logic into the Gateway: re-prompt, ask a targeted clarification, then proceed only when the high-level category is valid.

Practical prompt examples that work well in a Gateway stage:

- “Is this about your card, a payment, or your account balance?”

- “Are you trying to make a payment or check a payment?”

Short. Specific. Low cognitive load.
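The retry ladder can be sketched as a small decision function. The category list, the confidence threshold and the attempt budget below are illustrative assumptions, not NatWest's settings.

```python
from typing import Optional

VALID_CATEGORIES = {"Cards", "Payments", "Accounts"}  # illustrative subset
MAX_ATTEMPTS = 2        # assumed retry budget before escalating
MIN_CONFIDENCE = 0.6    # assumed threshold; tune against real traffic


def gateway_next_step(category: Optional[str], confidence: float, attempts: int) -> str:
    """Decide what the Gateway does next.

    `attempts` is the number of failed tries so far in this call.
    """
    if category in VALID_CATEGORIES and confidence >= MIN_CONFIDENCE:
        return "handoff"    # category is valid, so the child bot can take over
    if attempts == 0:
        return "reprompt"   # first miss: ask the open question again
    if attempts < MAX_ATTEMPTS:
        return "clarify"    # next miss: offer a short targeted choice
    return "agent"          # budget spent: route to a person


# Usage: a confident match hands off; repeated misses escalate.
print(gateway_next_step("Payments", 0.82, attempts=0))
print(gateway_next_step(None, 0.0, attempts=2))
```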

3) Confirm intent only after you have the right context

NatWest avoided confirmation prompts in the Gateway stage and instead used confirmation within the child bot, where the domain context is richer.

That’s a smart move. Confirming too early creates awkward loops:

- Caller: “I need help with a payment.”

- Bot: “Do you mean payment?”

That kind of interaction feels robotic—and it doesn’t reduce errors.

A better pattern:

- Gateway: routes to Payments domain.

- Payments child bot: “Are you trying to make a payment, cancel one, or check a payment status?”

- Then confirm the specific action.
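In Lex V2 terms, the final confirmation step could look something like this in the child bot's code hook. The response shape follows the Lex V2 Lambda response format. The function name, intent name and prompt are hypothetical.

```python
from typing import Any, Dict, Optional


def confirm_in_child_bot(
    intent_name: str,
    slots: Dict[str, Any],
    prompt: str,
    session_attributes: Optional[Dict[str, str]] = None,
) -> Dict[str, Any]:
    """Build a Lex V2 code hook response that asks the caller to confirm a specific action."""
    return {
        "sessionState": {
            "sessionAttributes": dict(session_attributes or {}),
            "dialogAction": {"type": "ConfirmIntent"},
            "intent": {"name": intent_name, "slots": slots, "state": "InProgress"},
        },
        "messages": [{"contentType": "PlainText", "content": prompt}],
    }


# Usage: the Payments child bot confirms only once the action is specific.
response = confirm_in_child_bot(
    "CancelPayment", {}, "You want to cancel a scheduled payment, is that right?"
)
```

The Gateway never builds a response like this; it only routes.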

Intent management at scale: modular ownership beats central chaos

Answer first: Breaking intents into domain bots enables faster iteration, safer releases, and clearer team ownership.

NatWest reported 100+ changes delivered via rapid tuning cycles without “big-bang testing” overhead. That’s the real business win: shipping improvements weekly (or daily) without destabilizing the whole system.

If you’re leading contact center transformation, this is the difference between:

- A bot that stagnates because every update is risky

- A bot ecosystem that improves continuously through focused testing

A simple operating model that works

Here’s what I’ve found works best when you adopt a federated Lex-style pattern:

- Assign a domain owner per child bot (Payments, Cards, Accounts, Fraud, etc.).

- Define an “intent contract” per domain: what the bot must return to routing/agents (intent name, confidence, required slots, compliance flags).

- Test locally, certify globally: each child bot has its own regression tests; the Gateway has routing tests to ensure mapping still works.
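One way to make the "intent contract" concrete is a small typed record plus a validator that each domain's regression tests can call. The field names and the REQUIRED_SLOTS table are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class IntentResult:
    """What a child bot promises to hand back to routing and agents."""
    domain: str
    intent_name: str
    confidence: float
    slots: Dict[str, str] = field(default_factory=dict)
    compliance_flags: List[str] = field(default_factory=list)


# Hypothetical per-intent slot requirements, owned by the domain team.
REQUIRED_SLOTS: Dict[str, List[str]] = {
    "CancelPayment": ["payment_date"],
    "CheckBalance": [],
}


def contract_violations(result: IntentResult) -> List[str]:
    """Return a list of contract problems; an empty list means the result is valid."""
    problems: List[str] = []
    if not 0.0 <= result.confidence <= 1.0:
        problems.append("confidence out of range")
    if result.intent_name not in REQUIRED_SLOTS:
        problems.append(f"unknown intent: {result.intent_name}")
        return problems
    for slot in REQUIRED_SLOTS[result.intent_name]:
        if not result.slots.get(slot):
            problems.append(f"missing slot: {slot}")
    return problems
```

Keeping the contract in code means a domain team can change utterances freely, as long as the record it returns still validates.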

Onboarding new domains without drama

NatWest used a consistent naming and mapping approach. That’s not just tidiness—it’s how you prevent a federated setup from becoming unmanageable.

A clean onboarding checklist looks like:

- Add a new high-level intent in the Gateway.

- Create the child bot using a consistent naming convention.

- Map key utterances to the Gateway intent.

- Store the child bot reference in a configuration store.

The hidden benefit: you can expand self-service coverage without re-architecting.
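A checklist like this is easy to enforce with a small pre-release check. The sketch below assumes a hypothetical naming convention (steering-<domain>-bot) and a registry that maps each Gateway intent to its child bot name. Neither comes from NatWest.

```python
import re
from typing import Dict, List, Set

# Hypothetical convention: steering-<domain>-bot, lowercase letters and hyphens only.
NAME_PATTERN = re.compile(r"^steering-[a-z]+(?:-[a-z]+)*-bot$")


def onboarding_gaps(gateway_intents: Set[str], registry: Dict[str, str]) -> List[str]:
    """Compare Gateway intents with the child bot registry and report mismatches."""
    gaps: List[str] = []
    for intent in sorted(gateway_intents):
        bot_name = registry.get(intent)
        if bot_name is None:
            gaps.append(f"{intent}: no child bot registered")
        elif not NAME_PATTERN.match(bot_name):
            gaps.append(f"{intent}: '{bot_name}' breaks the naming convention")
    # Registry entries with no Gateway intent can never be reached by a caller.
    for intent in sorted(set(registry) - gateway_intents):
        gaps.append(f"{intent}: registered but missing from the Gateway")
    return gaps
```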

Reporting and analytics: don’t lose the story between bots

Answer first: Federated bots create split data trails, so you must correlate sessions across Gateway and child bots to understand containment, misroutes, and drop-offs.

If you run analytics, a federated design introduces a predictable challenge: the Gateway and child bot are separate entities, and many dashboards default to treating them as separate conversations.

NatWest addressed this by correlating interactions using:

- Session IDs

- Shared attributes passed across the handoff
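As a sketch of that correlation step, the function below stitches Gateway and child bot log records into one journey per call. The record fields (correlation_id, timestamp, bot) are hypothetical. The correlation ID stands in for whatever shared attribute you pass across the handoff.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def stitch_sessions(records: Iterable[Dict[str, str]]) -> Dict[str, List[Dict[str, str]]]:
    """Group log records from all bots by shared correlation ID, ordered by time.

    Assumes timestamps are ISO 8601 strings in the same timezone and format,
    so plain string ordering matches chronological ordering.
    """
    journeys: Dict[str, List[Dict[str, str]]] = defaultdict(list)
    for record in records:
        journeys[record["correlation_id"]].append(record)
    for steps in journeys.values():
        steps.sort(key=lambda r: r["timestamp"])
    return dict(journeys)


# Usage: two bots, one call, one stitched journey.
logs = [
    {"correlation_id": "c-1", "timestamp": "2025-12-01T10:00:07Z", "bot": "payments"},
    {"correlation_id": "c-1", "timestamp": "2025-12-01T10:00:02Z", "bot": "gateway"},
]
journey = stitch_sessions(logs)["c-1"]
```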

If you want the architecture to pay off, measure the right things end-to-end:

- Containment rate by domain (Payments vs Cards vs Accounts)

- Top misroutes (Gateway chose the wrong domain)

- Clarification rate (how often the Gateway had to re-ask)

- Time-to-intent (seconds from greeting to final classified intent)

- Fallback paths (where callers exit to agents)
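Once journeys are stitched end-to-end, most of these measures are simple ratios. The sketch below assumes a hypothetical Journey record per call, and the field names are mine.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Journey:
    gateway_domain: str                  # domain the Gateway picked
    final_domain: Optional[str]          # domain where the intent was resolved, if any
    clarifications: int                  # times the Gateway had to re-ask
    seconds_to_intent: Optional[float]   # greeting to final intent, if classified
    escalated: bool                      # True if the caller ended up with an agent


def steering_metrics(journeys: List[Journey]) -> Dict[str, float]:
    """Overall misroute rate, clarification rate and average time-to-intent."""
    total = len(journeys)
    if total == 0:
        return {}
    timed = [j.seconds_to_intent for j in journeys if j.seconds_to_intent is not None]
    misroutes = sum(
        1 for j in journeys
        if j.final_domain is not None and j.final_domain != j.gateway_domain
    )
    return {
        "misroute_rate": misroutes / total,
        "clarification_rate": sum(1 for j in journeys if j.clarifications > 0) / total,
        "avg_time_to_intent_s": sum(timed) / len(timed) if timed else 0.0,
    }


def containment_by_domain(journeys: List[Journey]) -> Dict[str, float]:
    """Share of calls per Gateway domain that finished without an agent."""
    totals: Dict[str, int] = defaultdict(int)
    contained: Dict[str, int] = defaultdict(int)
    for j in journeys:
        totals[j.gateway_domain] += 1
        contained[j.gateway_domain] += int(not j.escalated)
    return {domain: contained[domain] / totals[domain] for domain in totals}
```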

One-liner for stakeholders: “A federated bot improves accuracy, but only correlated analytics prove it.”

What contact center leaders should copy from NatWest

Answer first: Treat AI call steering as a product with architecture, release velocity, and measurement—not a one-off bot build.

If you’re modernizing a contact center during 2026 planning cycles, these are the most transferable lessons.

Use NLU where it’s strongest

NLU is great at interpreting messy human language, not at being a universal router for everything. The Gateway stage should stay small and stable, focusing on broad categories.

Keep intents “close” to the resolution journey

A child bot should own:

- The clarifying questions

- The slot/parameter capture

- The confirmation step

- The integration points (where appropriate)

That keeps conversations coherent and reduces “why are you asking me that?” moments.

Build for winter peaks and high-load events

December banking contacts spike for predictable reasons: travel, card issues, holiday fraud patterns, payment timing questions, and end-of-year account checks. A federated model helps because you can tune the highest-volume domains quickly without re-testing everything.

Plan your agent handoff early

Self-service isn’t “automation or agents.” It’s both.

The best implementations treat the bot as a context builder. When escalation is needed, the agent should receive:

- The final intent

- Collected slots (last 4 digits, account type, payment date, etc.)

- What the customer already tried

That’s how you reduce AHT and raise first contact resolution.
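A minimal sketch of that handoff payload, assuming the context travels with the call as flat string key-value attributes. The attribute names (finalIntent, stepsTried, slot_ prefix) are hypothetical.

```python
from typing import Any, Dict, List


def build_agent_context(
    final_intent: str,
    slots: Dict[str, Any],
    steps_tried: List[str],
) -> Dict[str, str]:
    """Flatten bot context into string attributes an agent desktop can display."""
    attributes: Dict[str, str] = {
        "finalIntent": final_intent,
        "stepsTried": "; ".join(steps_tried),
    }
    for name, value in slots.items():
        # Prefix slot names so they cannot collide with the fixed keys above.
        attributes[f"slot_{name}"] = str(value)
    return attributes


# Usage: what the agent sees when a payments call escalates.
context = build_agent_context(
    "CancelPayment",
    {"last4": 1234, "payment_date": "2025-12-19"},
    ["checked payment status", "tried to cancel in app"],
)
```

Only pass what the agent needs, and keep full card or account numbers out of these attributes.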

Next steps: turn your intent catalog into a scalable self-service roadmap

NatWest’s Amazon Connect + Amazon Lex federation approach shows a practical way to run AI in customer service when the intent catalog is massive and accuracy is non-negotiable. The architecture is modular, the customer experience avoids repetition, and the operating model supports continuous improvement.

If you’re sitting on hundreds (or thousands) of intents today, the question isn’t whether you can build an NLU bot. You can. The question is whether you can keep it accurate while shipping changes every month.

Want a useful place to start? Audit your top 50 call drivers and map them into 6–12 high-level domains. If you can’t cleanly assign each driver to a domain, your current intent taxonomy is the first thing to fix—before you tune another utterance.
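That audit can start as a spreadsheet, but a short script makes the gaps obvious. The sketch assumes you have listed, for each call driver, the domains your team thinks it belongs to. The driver and domain names are made up.

```python
from typing import Any, Dict, List


def audit_taxonomy(driver_domains: Dict[str, List[str]]) -> Dict[str, Any]:
    """Flag call drivers with no domain or more than one, and check the domain count."""
    unassigned = sorted(d for d, doms in driver_domains.items() if not doms)
    ambiguous = sorted(d for d, doms in driver_domains.items() if len(doms) > 1)
    domains = {dom for doms in driver_domains.values() for dom in doms}
    return {
        "unassigned": unassigned,
        "ambiguous": ambiguous,
        "domain_count": len(domains),
        "domain_count_in_range": 6 <= len(domains) <= 12,  # the 6-12 target from the text
    }


# Usage with a tiny, made-up sample of call drivers.
report = audit_taxonomy({
    "card not arrived": ["Cards"],
    "pending transaction query": ["Cards", "Accounts"],
    "travel notification": [],
})
```

Anything in the ambiguous or unassigned lists is a taxonomy question to settle before tuning utterances.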

What would happen to your containment rate if your bot stopped trying to be a “do everything” system and started behaving like a smart router with specialist help behind it?