RL environments train AI agents to act, recover, and improve—not just generate text. See how simulation-based learning powers logistics, healthcare, and smart cities.

RL Environments: The Next Leap for AI Agents

A funny thing happened as AI models got bigger: the easiest benchmarks started to matter less.

Yes, scale worked. In roughly five years, large language models went from “occasionally coherent autocomplete” to systems that can write working code, reason through complex prompts, and hold multi-turn conversations that actually stay on track. But if you’ve tried to deploy these models into real operations—logistics planning, clinical workflows, city infrastructure—you’ve seen the ceiling: smart text isn’t the same as competent action.

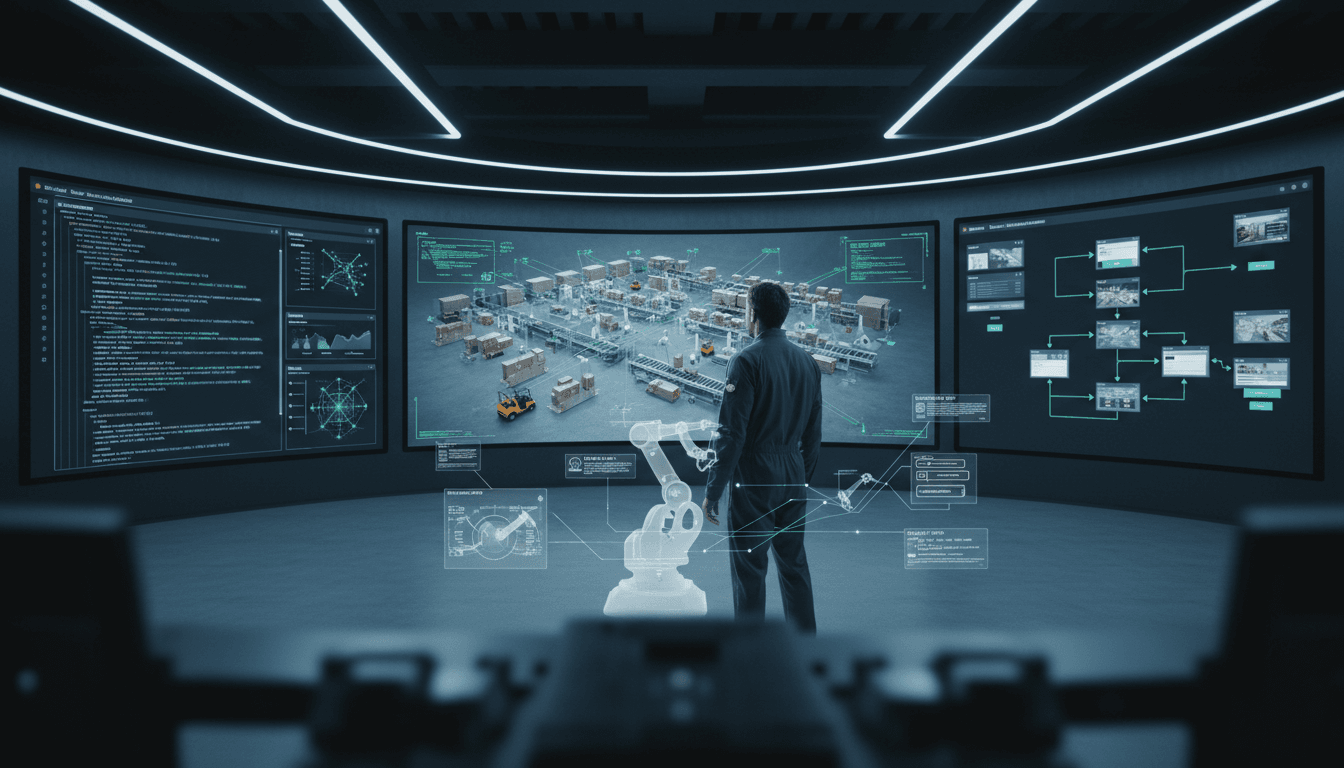

That’s why reinforcement learning (RL) environments are becoming the new battleground. The next leap in agentic AI won’t come from stuffing more tokens into training. It’ll come from giving models places to practice—digital “classrooms” where they can try, fail, recover, and improve. In our “Artificial Intelligence & Robotics: Transforming Industries Worldwide” series, this is the moment where AI shifts from predicting to doing.

Bigger models aren’t the bottleneck anymore—practice is

The core bottleneck is moving from data scarcity to experience scarcity. We’ve spent a decade optimizing “learn from static text” pipelines: pretraining on internet-scale corpora, then polishing with human feedback to make outputs more helpful and safer.

That progression worked because language is compressible: patterns repeat, and next-token prediction captures a lot of human knowledge. But operational work isn’t like that. Real deployments require:

- Handling missing context and messy inputs

- Executing multi-step tasks across tools and systems

- Recovering when something breaks (timeouts, permissions, UI changes)

- Adapting policies to constraints (cost, safety, compliance)

A model can describe how to do these things without reliably doing them. That gap—between knowing and performing—is exactly what RL environments are designed to close.

Here’s the stance I’ll take: the organizations that build training environments for their workflows will outpace the ones that only fine-tune prompts and add more data.

What an RL environment actually teaches (and why it’s different)

An RL environment turns training into an interactive loop, not a static prediction task. Instead of only predicting the next word, an agent:

- Observes the current state (what’s on screen, what the system returned, what the queue looks like)

- Takes an action (click, query, write code, route a shipment, schedule a nurse)

- Receives a reward signal (did it succeed? was it fast? did it violate a constraint?)

- Repeats—thousands to millions of times
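The loop above can be sketched in a few lines of Python. This is a minimal illustration rather than a production setup: `QueueEnv`, its reward values, and the two policies are hypothetical names invented for this sketch.

```python
import random
from dataclasses import dataclass


@dataclass
class QueueEnv:
    """Hypothetical toy environment: clear a queue of tickets within a step budget."""
    queue_size: int = 5
    max_steps: int = 20

    def reset(self) -> int:
        self.remaining = self.queue_size
        self.steps = 0
        return self.remaining  # observation: tickets left in the queue

    def step(self, action: str) -> tuple[int, float, bool]:
        self.steps += 1
        reward = -0.1  # small cost per step, so faster is better
        if action == "resolve" and self.remaining > 0:
            self.remaining -= 1
            reward += 1.0  # credit for each ticket actually resolved
        done = self.remaining == 0 or self.steps >= self.max_steps
        return self.remaining, reward, done


def run_episode(env: QueueEnv, policy, rng: random.Random) -> float:
    """Observe, act, receive a reward, and repeat until the episode ends."""
    obs = env.reset()
    total, done = 0.0, False
    while not done:
        action = policy(obs, rng)
        obs, reward, done = env.step(action)
        total += reward
    return total


def random_policy(obs: int, rng: random.Random) -> str:
    return rng.choice(["resolve", "wait"])


def always_resolve(obs: int, rng: random.Random) -> str:
    return "resolve"
```

Calling `run_episode(QueueEnv(), always_resolve, random.Random(0))` plays one episode; a training algorithm would run many such episodes and adjust the policy toward higher totals.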

Data teaches knowledge; environments teach behavior

Think of training data as “what good looks like.” It provides examples, language, and domain concepts. RL environments teach the habits that turn concepts into outcomes: persistence, verification, backtracking, and tool use.

A concrete example: a chat model can generate code snippets. Put the same model in a live coding sandbox with tests, a compiler, dependency conflicts, and ambiguous error messages. Suddenly it has to do what real developers do:

- Run code

- Read failures

- Make targeted edits

- Retest

- Stop when it passes
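As a rough sketch of that run, read, edit, retest cycle, the example below replays a fixed list of candidate edits against a tiny test suite. Everything here is an assumption made for illustration: `run_tests`, `repair_loop`, and `CANDIDATES` are hypothetical, and a real agent would generate each edit from the previous failure messages instead of reading from a list.

```python
def run_tests(source: str) -> list[str]:
    """Execute candidate source and return failure messages (empty list = pass)."""
    namespace: dict = {}
    try:
        # Toy sandbox only: never exec untrusted code like this in practice.
        exec(source, namespace)
        add = namespace["add"]
        cases = [((1, 2), 3), ((-1, 1), 0)]
        return [
            f"add{args} returned {add(*args)}, expected {want}"
            for args, want in cases
            if add(*args) != want
        ]
    except Exception as exc:
        return [f"{type(exc).__name__}: {exc}"]


def repair_loop(candidates: list[str], max_attempts: int = 5):
    """Run, read failures, try the next edit, retest, and stop on the first pass."""
    history = []
    for attempt, source in enumerate(candidates[:max_attempts], start=1):
        failures = run_tests(source)
        history.append(failures)
        if not failures:
            return attempt, history
    return None, history


# Stand-in for an agent's successive edits (hypothetical).
CANDIDATES = [
    "def add(a, b) return a + b",        # syntax error
    "def add(a, b):\n    return a - b",  # wrong logic
    "def add(a, b):\n    return a + b",  # passes
]

attempt, history = repair_loop(CANDIDATES)
```

The failure messages collected in `history` are the kind of feedback an environment would hand back to the agent as its next observation.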

That’s not just “more data.” It’s a different kind of learning—learning by acting.

The “messy middle” is where agents either grow up or fail

Most real-world work lives in the messy middle:

- A purchase order doesn’t match a contract

- A patient record is missing a key lab value

- A road closure invalidates a delivery route

- A webpage changes layout and the automation script breaks

Humans handle this by experimenting and adapting. Agents need the same opportunity, and RL environments provide it safely—without breaking production systems.

Why industries should care: RL environments turn AI into operations

RL environments are the bridge from impressive demos to dependable automation. That’s especially true in industries where robotics and AI systems must operate under constraints: safety, latency, compliance, cost, and uptime.

Below are three practical ways this shows up across the “AI & robotics transforming industries” theme.

Logistics: training dispatchers that don’t panic when reality changes

Logistics is a constant fight against the unexpected: weather disruptions, late trailers, inventory mismatches, driver hours, dock congestion. A static model can propose a plan. A trained agent must continually re-plan as conditions change.

A high-value RL environment for logistics looks like a simulation of:

- Warehouses, docks, and carrier schedules

- Inventory availability and replenishment timing

- Road networks with stochastic delays

- Policy constraints (service-level agreements, driver hours, cost ceilings)

Reward functions can encode business outcomes:

- On-time delivery rate

- Total transportation cost

- Number of re-routes

- Customer churn proxies (late deliveries, partial fills)
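One way to combine those outcomes into a single number is a weighted sum. The sketch below is illustrative only: the `DispatchOutcome` fields and every weight are assumptions, and real weights would have to come from the business.

```python
from dataclasses import dataclass


@dataclass
class DispatchOutcome:
    deliveries: int
    on_time: int
    transport_cost: float  # total cost for the episode
    cost_ceiling: float    # budget taken from the policy constraints
    reroutes: int
    partial_fills: int     # stands in for a customer churn proxy


def dispatch_reward(
    o: DispatchOutcome,
    w_on_time: float = 1.0,
    w_cost: float = 0.5,
    w_reroute: float = 0.02,
    w_partial: float = 0.1,
) -> float:
    """Reward on-time delivery; penalize cost, re-routes, and partial fills."""
    on_time_rate = o.on_time / o.deliveries if o.deliveries else 0.0
    cost_ratio = o.transport_cost / o.cost_ceiling
    return (
        w_on_time * on_time_rate
        - w_cost * cost_ratio
        - w_reroute * o.reroutes
        - w_partial * o.partial_fills
    )


steady = DispatchOutcome(100, 95, 8_000.0, 10_000.0, reroutes=3, partial_fills=1)
rushed = DispatchOutcome(100, 98, 14_000.0, 10_000.0, reroutes=12, partial_fills=0)
```

With these assumed weights, the `steady` plan scores higher than the `rushed` one even though it delivers slightly fewer orders on time, because the rushed plan blows through the cost ceiling and re-routes far more often.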

A well-trained agent doesn’t just pick “the best route.” It learns what experienced planners know: keep options open, hedge against variability, and validate assumptions early.
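To make "hedge against variability" concrete, here is a small Monte Carlo sketch comparing two hypothetical routes against a 60-minute delivery window. The route parameters and the normal-distribution travel-time model are assumptions chosen for illustration, not measurements.

```python
import random


def on_time_rate(mean_min: float, sd_min: float, deadline_min: float,
                 trials: int = 10_000, seed: int = 0) -> float:
    """Estimate how often a route with noisy travel time meets its deadline."""
    rng = random.Random(seed)
    on_time = sum(
        max(0.0, rng.gauss(mean_min, sd_min)) <= deadline_min
        for _ in range(trials)
    )
    return on_time / trials


# Faster on average, but with large swings (hypothetical numbers).
fast_but_variable = on_time_rate(mean_min=50, sd_min=20, deadline_min=60)
# Slower on average, but predictable (hypothetical numbers).
slow_but_steady = on_time_rate(mean_min=55, sd_min=3, deadline_min=60)
```

Under these assumptions the slower, steadier route meets the window more often, which is the kind of trade-off an agent can only discover when the simulated delays are stochastic.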

Healthcare: safer automation through secure, high-fidelity simulations

Healthcare workflows are full of sensitive data and high-stakes decisions. You can’t “A/B test” an unproven agent on real patients.

That’s why RL environments matter: they let you train clinical and administrative agents in secure simulations that mirror reality but avoid real-world harm.

Examples of where simulated training pays off:

- Prior authorization workflows: navigating payer rules, documentation, and exceptions

- Hospital bed management: balancing admissions, staffing, and acuity constraints

- Clinical documentation assistance: verifying codes, catching missing info, suggesting follow-ups

The key point: the reward isn’t “sounds correct.” It’s whether the agent reduces denials, reduces delays, avoids unsafe recommendations, and escalates when uncertain.

A well-designed environment can also train “stop conditions,” where the agent learns to hand off to a human when risk is high. That’s how human-AI collaboration stays sane.
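A stop condition can fall out of the reward structure itself. In the sketch below, acting wrongly is penalized much more heavily than escalating, so handing off becomes the better choice below a certain confidence level. The reward values and function names are hypothetical.

```python
def expected_reward_of_acting(p_correct: float,
                              r_correct: float = 1.0,
                              r_wrong: float = -5.0) -> float:
    """Expected reward if the agent acts on its own, given its chance of being right."""
    return p_correct * r_correct + (1 - p_correct) * r_wrong


def should_escalate(p_correct: float,
                    r_correct: float = 1.0,
                    r_wrong: float = -5.0,
                    r_escalate: float = -0.2) -> bool:
    """Hand off to a human when acting is worth less than the small escalation cost."""
    return expected_reward_of_acting(p_correct, r_correct, r_wrong) < r_escalate


def escalation_threshold(r_correct: float = 1.0,
                         r_wrong: float = -5.0,
                         r_escalate: float = -0.2) -> float:
    """Confidence at which acting and escalating have equal expected reward."""
    return (r_escalate - r_wrong) / (r_correct - r_wrong)
```

With these assumed rewards the break-even confidence is 0.8: below that, escalating is the higher-value action. Making `r_wrong` more negative pushes the threshold up, which is how a higher-risk workflow would demand more certainty before the agent acts alone.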

Smart cities: agents that can coordinate, not just predict

Smart city systems—traffic control, utilities, emergency response—are multi-agent and constraint-heavy. Prediction helps (forecast demand, anticipate congestion). Action helps more (coordinate signals, allocate resources, reroute flows).

RL environments let cities and vendors test strategies in simulations of:

- Intersections and traffic patterns

- Public transit schedules

- Power grid demand and outages

- Emergency response logistics (ports, roads, supply chains)

This matters because coordination failures are expensive. A single bad policy can create cascading delays across an entire metro area. Simulated training allows repeated failure without real consequences, which is exactly what it takes to produce robust policies.

Building “classrooms for AI”: what makes an RL environment useful

A good RL environment is realistic, instrumented, and aligned to the outcome you actually want. Most teams get at least one of those wrong.

1) Realistic: include the failure modes you hate

If your environment is too clean, your agent becomes fragile.

Add the things that break production:

- Noisy inputs and incomplete records

- Permission errors and rate limits

- UI changes and layout shifts

- Contradictory requirements from stakeholders

- Adversarial or edge-case requests

A memorable rule: if your agent never learns to recover, it won’t recover when it matters.
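A simple way to add such failures is to wrap each tool in a layer that fails on purpose. The sketch below assumes hypothetical names (`FlakyTool`, `ToolError`, `call_with_retry`, `lookup_order`) and an arbitrary set of error messages.

```python
import random


class ToolError(Exception):
    """Raised by the wrapper to imitate a production failure."""


class FlakyTool:
    """Wraps a tool so that a fraction of calls fail like production does."""

    def __init__(self, tool, failure_rate: float, seed: int = 0):
        self.tool = tool
        self.failure_rate = failure_rate
        self.rng = random.Random(seed)

    def __call__(self, *args, **kwargs):
        if self.rng.random() < self.failure_rate:
            raise ToolError(
                self.rng.choice(["rate limit exceeded", "permission denied", "timeout"])
            )
        return self.tool(*args, **kwargs)


def call_with_retry(tool, *args, max_attempts: int = 5):
    """Recovery behavior the environment should elicit: retry, then give up cleanly."""
    errors = []
    for _ in range(max_attempts):
        try:
            return tool(*args), errors
        except ToolError as exc:
            errors.append(str(exc))
    return None, errors


def lookup_order(order_id: str) -> dict:
    """Hypothetical tool standing in for a real system call."""
    return {"order_id": order_id, "status": "shipped"}


result, errors = call_with_retry(FlakyTool(lookup_order, failure_rate=0.3), "A1")
```

Raising `failure_rate` over the course of training is one way to move from a clean environment to a realistic one.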

2) Instrumented: make evaluation automatic

If you can’t measure it, you can’t improve it. Instrument environments to capture:

- Task success rate (end-to-end completion)

- Time-to-complete and number of steps

- Cost-to-complete (API/tool calls, compute)

- Safety/compliance violations

- Human escalation rate

These become both training signals and deployment gates.
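As an illustration of how those measurements can double as a deployment gate, the sketch below logs one record per episode and aggregates them. The field names and gate thresholds are assumptions for this example.

```python
from dataclasses import dataclass


@dataclass
class EpisodeLog:
    success: bool
    steps: int
    seconds: float
    tool_calls: int
    violations: int
    escalated: bool


def summarize(logs: list[EpisodeLog]) -> dict[str, float]:
    """Aggregate per-episode logs into the metrics listed above."""
    n = len(logs)
    return {
        "success_rate": sum(log.success for log in logs) / n,
        "mean_steps": sum(log.steps for log in logs) / n,
        "mean_seconds": sum(log.seconds for log in logs) / n,
        "mean_tool_calls": sum(log.tool_calls for log in logs) / n,
        "violation_rate": sum(log.violations > 0 for log in logs) / n,
        "escalation_rate": sum(log.escalated for log in logs) / n,
    }


def passes_gate(summary: dict[str, float],
                min_success: float = 0.9,
                max_violation_rate: float = 0.0) -> bool:
    """Hypothetical deployment gate: high success and no safety violations."""
    return (summary["success_rate"] >= min_success
            and summary["violation_rate"] <= max_violation_rate)


logs = [
    EpisodeLog(True, 12, 30.0, 5, 0, False),
    EpisodeLog(True, 15, 41.0, 7, 0, True),
    EpisodeLog(False, 40, 95.0, 22, 1, False),
    EpisodeLog(True, 10, 25.0, 4, 0, False),
]
summary = summarize(logs)
```

Here the batch fails the gate on both counts: the success rate is 0.75 and one episode recorded a violation.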

3) Aligned: rewards should match business reality

Reward design is where many RL projects quietly fail. If you reward speed only, you’ll get reckless agents. If you reward success only, you may get agents that “cheat” by exploiting shortcuts that won’t exist in production.

Practical reward design patterns that work:

- Balanced objectives: reward success, penalize safety violations, slightly penalize unnecessary steps

- Shaped rewards: give partial credit for milestones (e.g., “validated constraints,” “ran tests,” “requested missing info”)

- Negative rewards for brittle behavior: penalize repeating the same failing action, infinite loops, or ignoring tool errors
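The three patterns can live in one scoring function. The sketch below is a hedged illustration: the milestone names echo the examples above, but the bonus and penalty sizes are arbitrary assumptions, and milestone bonuses can themselves be gamed if they are set too high.

```python
MILESTONE_BONUS = {
    "validated_constraints": 0.2,
    "ran_tests": 0.2,
    "requested_missing_info": 0.1,
}


def trajectory_reward(steps: list[tuple[str, bool]],
                      milestones: list[str],
                      success: bool,
                      violations: int) -> float:
    """Score one episode. `steps` is a list of (action, succeeded) pairs."""
    # Balanced objectives: success, safety, and a small cost per step.
    reward = 1.0 if success else 0.0
    reward -= 2.0 * violations
    reward -= 0.01 * len(steps)

    # Shaped rewards: partial credit for each distinct milestone reached.
    reward += sum(MILESTONE_BONUS.get(m, 0.0) for m in set(milestones))

    # Brittle behavior: repeating an action immediately after it failed.
    for (prev_action, prev_ok), (action, _) in zip(steps, steps[1:]):
        if not prev_ok and action == prev_action:
            reward -= 0.1
    return reward


careful = trajectory_reward(
    steps=[("validate", True), ("run_tests", True), ("submit", True)],
    milestones=["validated_constraints", "ran_tests"],
    success=True,
    violations=0,
)
looping = trajectory_reward(
    steps=[("submit", False)] * 4 + [("submit", True)],
    milestones=[],
    success=True,
    violations=0,
)
```

Both episodes end in success, but the one that validated and tested first scores well above the one that kept resubmitting the same failing action.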

A working definition: an RL environment is a controlled world where an AI agent learns policies by taking actions, receiving feedback, and optimizing for outcomes rather than for plausible text.

Do RL environments replace training data?

No—RL environments amplify training data by turning knowledge into skill. High-quality labeled data still matters: it teaches domain language, common patterns, and baseline competence. What changes is the center of gravity.

A practical mental model:

- Data teaches “what” and “why.”

- Environments teach “how,” “when,” and “what to do when it goes wrong.”

For companies building AI agents for operations, this pairing is non-negotiable: good data for grounding plus good environments for robustness.

A pragmatic roadmap for companies deploying AI agents in 2026

If you want autonomous AI agents that help your business (not just your demos), start with one workflow and build its classroom. Here’s a sequence that I’ve found works in practice.

- Pick a workflow with clear outcomes and repeatable steps (claims triage, dispatch planning, IT ticket resolution).

- Define “success” with hard metrics (cycle time, error rate, cost per case, SLA adherence).

- Build a sandboxed environment with real tools (ticket system clone, ERP test instance, synthetic web apps, simulated warehouse).

- Add failure cases deliberately (missing fields, conflicting constraints, tool downtime).

- Train with staged difficulty: start easy, then introduce mess—like a flight simulator that adds turbulence.

- Deploy with guardrails: human approval for high-risk actions, audit logs, and rollback.

- Keep the environment alive: when production changes, update the simulation so the agent doesn’t drift.
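The staged-difficulty step in this sequence can be sketched as a small scheduler that moves the agent to a harder level once its recent success rate is high enough. The level names, failure rates, and promotion rule are hypothetical choices for illustration.

```python
from collections import deque

# Hypothetical difficulty levels, from clean to messy.
LEVELS = [
    {"name": "clean", "failure_rate": 0.0},
    {"name": "missing_fields", "failure_rate": 0.1},
    {"name": "tool_downtime", "failure_rate": 0.3},
]


class Curriculum:
    """Promote to the next level when the rolling success rate clears a bar."""

    def __init__(self, levels: list[dict], promote_at: float = 0.8, window: int = 20):
        self.levels = levels
        self.promote_at = promote_at
        self.window = window
        self.index = 0
        self.recent: deque = deque(maxlen=window)

    @property
    def level(self) -> dict:
        return self.levels[self.index]

    def record(self, success: bool) -> None:
        """Log one episode result and promote if the window is full and good enough."""
        self.recent.append(success)
        window_full = len(self.recent) == self.window
        has_next = self.index < len(self.levels) - 1
        if window_full and has_next and sum(self.recent) / self.window >= self.promote_at:
            self.index += 1
            self.recent.clear()  # start measuring afresh at the harder level
```

A training loop would read `curriculum.level["failure_rate"]` when configuring each episode and call `curriculum.record(...)` with the result. The same structure also makes it straightforward to add a new level when production changes.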

This approach fits the broader theme of AI and robotics transforming industries: robots and agents don’t become dependable by reading manuals; they become dependable by practicing under constraints.

Where this is heading: from prediction to competence

The next phase of AI progress is being built quietly—in coding sandboxes, browser playgrounds, OS simulators, warehouse twins, and secure enterprise testbeds. These RL environments are the infrastructure that turns impressive language models into reliable agentic AI.

If you’re leading operations, product, or innovation, the most useful question isn’t “Which model should we choose?” It’s: What environment will we train it in, and what will we reward?

Companies that answer that question well will ship AI systems that can handle the messy real world—logistics disruptions, clinical constraints, and smart city coordination—without falling apart the moment something changes.

What would your AI agent need to practice 10,000 times before you’d trust it with one real shift?