RealMan’s open-source RealSource robot dataset brings aligned multi-modal data to embodied AI. See why it matters for deployment—and how to use it.

Open Robot Datasets: RealSource Speeds Physical AI

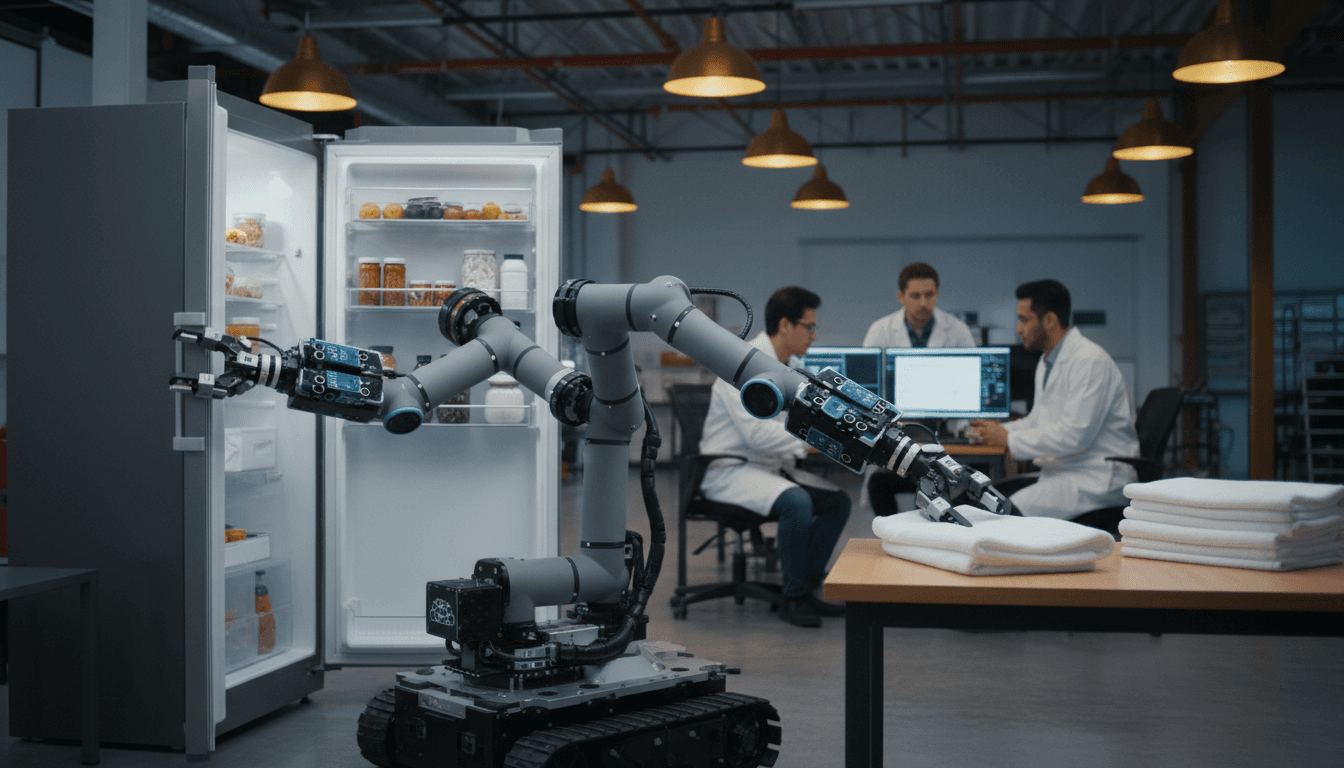

A robot can’t learn “the real world” from perfect lab demos. It needs the messy stuff: glare from a kitchen light, a refrigerator door that sticks, a tote that’s slightly deformed, a force sensor that jitters when someone bumps the table.

That’s why RealMan Robotics’ decision to open-source its RealSource robot dataset matters. RealSource isn’t just another folder of videos—it’s a multi-modal dataset captured with synchronized sensors across 10 real-world scenarios, built inside a dedicated 3,000 m² training center in Beijing. For teams building embodied AI (models that perceive, decide, and act in the physical world), aligned data is the oxygen supply. And too many robotics projects are still breathing through a straw.

This post is part of our “Artificial Intelligence & Robotics: Transforming Industries Worldwide” series, where we track the concrete developments that move AI-powered robotics from demos to deployments. Open datasets are one of those developments—quietly decisive, and often misunderstood.

Why open robot datasets are the fastest way to scale embodied AI

Answer first: Open robot datasets reduce the time and cost of training and validating real-world robot behavior, because they let more teams test ideas against the same, physically grounded data.

Most robotics organizations collect data, but they don’t share it. That creates three predictable problems:

- Reinvention: Every team records similar “pick-and-place” or “open-a-door” sequences from scratch.

- Benchmark fog: If everyone trains on different private data, it’s hard to tell whether a method is better—or just luckier.

- Slow generalization: Models overfit to a single building, a single camera mount, or a single operator’s style.

Open data doesn’t solve everything (hardware differences still exist), but it does create a common starting line. In the same way open-source software accelerated cloud computing, open robotics data accelerates physical AI—because it turns progress into something repeatable.

Here’s the stance I’ll take: the robotics industry won’t hit its next adoption wave through more secret datasets. It’ll get there by standardizing how we collect, synchronize, and evaluate multi-modal robot experiences.

What RealSource actually includes (and why “aligned” is the point)

Answer first: RealSource is designed to capture the full perception-to-action loop—RGB, depth, robot state, forces, end-effector pose, and commands—synchronized at the hardware level.

RealMan describes RealSource as a “high-quality, multi-modal robot dataset” aimed at an industry shortage: fully aligned real-world data. Alignment is the key word.

In robotics, the hard part isn’t collecting some signals. It’s collecting signals that match:

- The camera frame a policy “saw”

- The exact joint angles and velocities at that moment

- The force-torque readings during contact

- The action command that followed

- The timestamps and camera parameters needed to reconstruct context

RealSource covers the full perception–decision–execution chain, including:

- RGB imagery (and, on relevant platforms, depth)

- Joint angles and joint velocities

- Six-axis force sensing

- End-effector pose

- Action commands

- Timestamps and camera parameters

RealMan also claims hardware-level spatiotemporal synchronization, aligning sensors to a unified physical coordinate system. That may sound like an implementation detail, but it’s the difference between:

- “We have videos and logs” and

- “We have training-grade trajectories that a model can actually learn from.”

If you’ve ever tried to train a manipulation policy and discovered your force data is drifting half a second from your video, you already know why this matters.
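To make the alignment problem concrete, here is a minimal sketch of a nearest-timestamp check between two streams. It does not reflect RealSource's file format or tooling: the function name `align_nearest`, the 5 ms tolerance, and the sample timestamps are all assumptions chosen for illustration.

```python
from bisect import bisect_left


def align_nearest(frame_ts, sensor_ts, max_skew_s=0.005):
    """For each camera frame timestamp, return the index of the nearest
    sensor sample, or None when the gap exceeds max_skew_s.

    Both inputs are sorted lists of timestamps in seconds.
    The 5 ms default tolerance is an illustrative assumption.
    """
    matches = []
    for t in frame_ts:
        i = bisect_left(sensor_ts, t)
        # Candidates: the sample just before and just after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(sensor_ts)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(sensor_ts[j] - t))
        within = abs(sensor_ts[best] - t) <= max_skew_s
        matches.append(best if within else None)
    return matches


# Hypothetical timestamps: ~30 Hz camera frames and 100 Hz force samples.
frame_ts = [0.000, 0.033, 0.064, 0.100]
force_ts = [0.001, 0.011, 0.021, 0.031, 0.041, 0.051, 0.061, 0.071]
print(align_nearest(frame_ts, force_ts))
```

With these made-up values the last frame has no force sample within tolerance, so it comes back as `None`. In a real log, a high share of unmatched frames is a sign that the streams are only loosely timestamped rather than truly synchronized.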

The 10 scenarios: closer to operations than a lab

Answer first: RealSource focuses on task variety and environmental messiness—exactly what models need to generalize.

RealSource was built in RealMan’s Beijing Humanoid Robot Data Training Center, which includes a high-volume Training Zone and a Scenario Zone described as a “Robot University.” The 10 environments span practical domains such as:

- Smart home and eldercare

- Daily living

- Agriculture

- New retail

- Automotive assembly

- Catering

Example tasks include opening refrigerator doors, folding laundry, and sorting materials on factory lines—things that combine perception, contact, and error recovery.

RealMan emphasizes that collection happens outside a “laboratory greenhouse,” meaning environments are realistic and noisy. That’s a good direction. Robots deployed in hospitals, warehouses, and retail backrooms don’t get clean backgrounds or perfect lighting.

The robots used: why platform diversity helps

Answer first: Multiple robot embodiments reduce the chance that the dataset teaches a single “body-specific” trick.

RealSource uses three RealMan platforms for data collection:

- RS-01: a wheeled folding mobile robot with 20 DoF and multi-modal vision

- RS-02: a dual-arm lifting robot with RGB and depth vision, dual 7-DoF arms, 9 kg payload per arm, six-axis force sensing, and overhead fisheye perception

- RS-03: a dual-arm, “dual-eyed” robot with binocular stereo vision for precise manipulation

All three integrate large-FOV wrist and head cameras (listed as H 90° / V 65°) with full spatiotemporal synchronization.

Why that matters: embodied intelligence fails when it’s too tied to one camera angle or one gripper geometry. Cross-platform data pushes models toward more transferable representations.

The real business impact: how this translates to deployments

Answer first: Better aligned, multi-modal data shortens the path from prototype to production by improving reliability, safety, and debugging speed.

If you’re a research team, open datasets are about publishing and benchmarks. If you’re an industry team, they’re about risk. When a robot fails in production, it doesn’t fail as “AI.” It fails as:

- A dropped item that breaks a product

- A collision that triggers a safety stop

- A stalled workflow that creates labor spikes

- A system integrator call at 2 a.m.

Multi-modal datasets like RealSource help address these failure modes because they include signals that explain why a robot did what it did.

Manufacturing and assembly: contact-rich tasks need force + vision

In automotive assembly and factory sorting, “seeing” isn’t enough. Contact events—press fits, constrained insertions, bin picking with jams—require force feedback. When datasets include synchronized force and pose, teams can:

- Train policies that learn contact transitions (the moment free space becomes friction)

- Build better anomaly detection (force spikes that predict a jam)

- Validate safety envelopes (what “normal” forces look like)

RealMan lists millisecond-level joint data and six-axis force sensing as part of the value proposition. Even if you don’t train an end-to-end policy, those signals are gold for troubleshooting.
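As a rough illustration of the anomaly-detection idea, the sketch below flags force samples that deviate sharply from a trailing baseline. It is a simple rolling z-score, not a method RealMan describes. The window size, threshold, noise floor, and force values are assumptions picked for the example.

```python
import math
from statistics import mean, pstdev


def force_magnitude(fx, fy, fz):
    """Euclidean norm of the three force components (newtons)."""
    return math.sqrt(fx * fx + fy * fy + fz * fz)


def find_force_spikes(magnitudes, window=5, z_threshold=4.0, min_std=0.05):
    """Return indices where the force magnitude deviates from the mean of
    the preceding `window` samples by more than z_threshold standard
    deviations. All default values are illustrative assumptions."""
    spikes = []
    for i in range(window, len(magnitudes)):
        history = magnitudes[i - window:i]
        mu = mean(history)
        # Floor the deviation so a perfectly flat history cannot divide by zero.
        sigma = max(pstdev(history), min_std)
        if abs(magnitudes[i] - mu) / sigma > z_threshold:
            spikes.append(i)
    return spikes


# Hypothetical force magnitudes with one jam-like spike at index 6.
forces = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 9.5, 2.1, 2.0]
print(find_force_spikes(forces))
```

One design caveat: after a spike, the spike itself sits in the trailing window and inflates the baseline for the next few samples. A production detector would likely exclude flagged samples from the history or use a robust statistic such as the median.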

Retail and food service: variability is the job

Retail backrooms and commercial kitchens are brutal environments for automation:

- Packaging changes weekly

- Lighting is inconsistent

- Objects are deformable or reflective

- Humans share the workspace

A dataset that repeats the same tasks across diverse objects, environments, and lighting conditions is directly relevant here. Generalization doesn’t come from clever architecture alone; it comes from training exposure to the kinds of variations you’ll face after installation.

Healthcare and eldercare: reliability beats novelty

Service robotics in healthcare isn’t about flashy demos—it’s about predictable behavior in cluttered spaces. Tasks such as opening doors, fetching items, and assisting with daily living require:

- Careful motion near people

- Robust perception under occlusion

- Smoothness and stability in execution

RealMan cites dataset “smoothness” and “noise resistance” metrics. I’m always cautious with proprietary metrics, but the direction is right: robots in care environments need policies that behave calmly, not just correctly.

What to look for before you bet on any open robotics dataset

Answer first: Evaluate an open robotics dataset like you’d evaluate a supplier: check synchronization, task diversity, sensor modalities, and whether it matches your deployment risks.

Open-source availability is step one. Practical usefulness is step two.

Here’s a checklist I’ve found helpful when assessing datasets for embodied AI and mobile manipulation:

1) Can you reconstruct the timeline without guesswork?

Look for:

- Clear timestamps

- Known sensor rates

- Frame loss statistics (RealMan claims <0.5% frame loss)

If the data drops frames during fast motion, you end up training a robot to behave like it has “teleportation moments.” That’s not a joke—policies pick up those artifacts.
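If a dataset publishes timestamps and a nominal sensor rate, you can estimate frame loss yourself instead of taking the number on trust. The sketch below assumes a fixed nominal rate and sorted timestamps. The function name and the 30 Hz example are hypothetical, not part of any RealSource tooling.

```python
def estimate_frame_loss(timestamps, nominal_hz):
    """Estimate the fraction of frames missing from a sorted timestamp list,
    assuming the sensor was meant to run at a fixed nominal rate."""
    if len(timestamps) < 2:
        return 0.0
    period = 1.0 / nominal_hz
    missing = 0
    for prev, curr in zip(timestamps, timestamps[1:]):
        # A gap of about 2 periods implies one lost frame, 3 periods two, etc.
        steps = round((curr - prev) / period)
        missing += max(steps - 1, 0)
    expected = len(timestamps) + missing
    return missing / expected


# Ten slots at 30 Hz, with the frames for slots 4 and 5 dropped.
ts = [k / 30.0 for k in range(10) if k not in (4, 5)]
print(f"{estimate_frame_loss(ts, 30.0):.1%}")
```

This only counts gaps between the first and last recorded frame, so frames lost at the very start or end of a recording go unnoticed. It is still enough to check whether drops cluster around fast motion.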

2) Do modalities match the failure modes you care about?

If your robot will do contact-heavy work, you need force/torque. If you’ll navigate crowds, you need wide-FOV perception and localization context. RealSource emphasizes RGB, joint states, forces, pose, and commands, which maps well to manipulation.

3) Is it calibrated enough to be usable without weeks of cleanup?

RealMan highlights factory calibration and out-of-the-box use. That’s a major adoption factor. A dataset can be “open,” but if every user has to reverse-engineer coordinate frames, it won’t spread.

4) Are demonstrations realistic—or too perfect?

RealSource claims collection in realistic, noisy environments. What you want to see in practice is:

- Small errors

- Recovery attempts

- Variations in grasp approach

Perfect trajectories make robots brittle, because production is never perfect.

The under-discussed shift: from “robot code” to “robot data operations”

Answer first: Robotics teams are becoming data operations teams, and open datasets are pushing the industry toward shared standards.

Robotics used to be dominated by hand-tuned pipelines: perception code + motion planning + heuristics. That stack still matters, but the center of gravity is moving. Teams now win by managing:

- Data collection at scale

- Dataset versioning

- Scenario coverage

- Sensor synchronization

- Evaluation protocols

RealMan’s approach—building a dedicated training center with scenario zones and operator training—signals that shift clearly. It’s not just R&D anymore. It’s robotics production, and the raw material is data.

And this is where open-source datasets become a strategic catalyst: they create a shared substrate for research and deployment. When enough teams train on similar formats and scenarios, we start to get:

- Faster iteration cycles

- More comparable benchmarks

- More portable policy components

That’s how AI-powered robotics spreads from a few well-funded labs into mid-market manufacturers, regional healthcare providers, and service operators.

What to do next if you’re building with physical AI

Answer first: Use open datasets like RealSource to shorten experimentation cycles, then map your own data collection to the same modalities and synchronization standards.

If you’re leading an AI or robotics initiative, here are practical next steps that don’t require a hardware overhaul:

- Audit your current logs. Are your camera frames, joint states, and actions actually aligned—or just “roughly timestamped”?

- Define your top 5 failure modes. Dropped objects? Door-opening stalls? Force spikes? Your dataset needs to capture signals that explain those.

- Prototype training on a public dataset first. It’s cheaper to find architecture and tooling issues before collecting weeks of your own data.

- Standardize collection across environments. If you can’t repeat a task across lighting, clutter, and object variants, you’re training for a demo.

- Plan for multi-modal evaluation. Don’t just score success/fail. Track smoothness, forces, near-collisions, and recovery time.
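For the last item, one common way to put a number on smoothness is mean squared jerk, the third time derivative of position. The sketch below estimates it with finite differences on a single uniformly sampled joint trajectory. It is not the "smoothness" metric RealMan cites, which the source does not define, and the trajectories are synthetic.

```python
def mean_squared_jerk(positions, dt):
    """Mean squared third finite difference of a uniformly sampled 1-D
    trajectory (for example, one joint angle in radians). Lower is smoother."""
    if len(positions) < 4:
        raise ValueError("need at least 4 samples to estimate jerk")
    jerks = []
    for i in range(len(positions) - 3):
        p0, p1, p2, p3 = positions[i:i + 4]
        # Third-order forward difference approximates the third derivative.
        jerks.append((p3 - 3 * p2 + 3 * p1 - p0) / dt ** 3)
    return sum(j * j for j in jerks) / len(jerks)


# Synthetic data: a constant-acceleration motion, then the same motion
# with a small alternating jitter added to every sample.
dt = 0.01
smooth = [0.5 * (k * dt) ** 2 for k in range(50)]
shaky = [p + (0.001 if k % 2 else -0.001) for k, p in enumerate(smooth)]
print(mean_squared_jerk(smooth, dt) < mean_squared_jerk(shaky, dt))
```

Finite-difference jerk amplifies sensor noise, so in practice you would usually low-pass filter the signal first and compare runs recorded at the same sample rate.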

RealMan says it plans to keep expanding RealSource with more scenarios and modalities. If that expansion keeps its promises—aligned sensors, realistic tasks, usable calibration—it will be the kind of open resource that accelerates real deployments.

The bigger question for 2026 isn’t whether robots will get smarter. It’s whether organizations will treat robot data as shared infrastructure, the same way the software world treats open-source libraries. If that happens, embodied intelligence stops being a boutique effort and starts looking like an industry.