Reservoir computing chips can predict sensor-driven actions in milliseconds using microwatts. See why low-latency edge AI matters for robotics and industry.

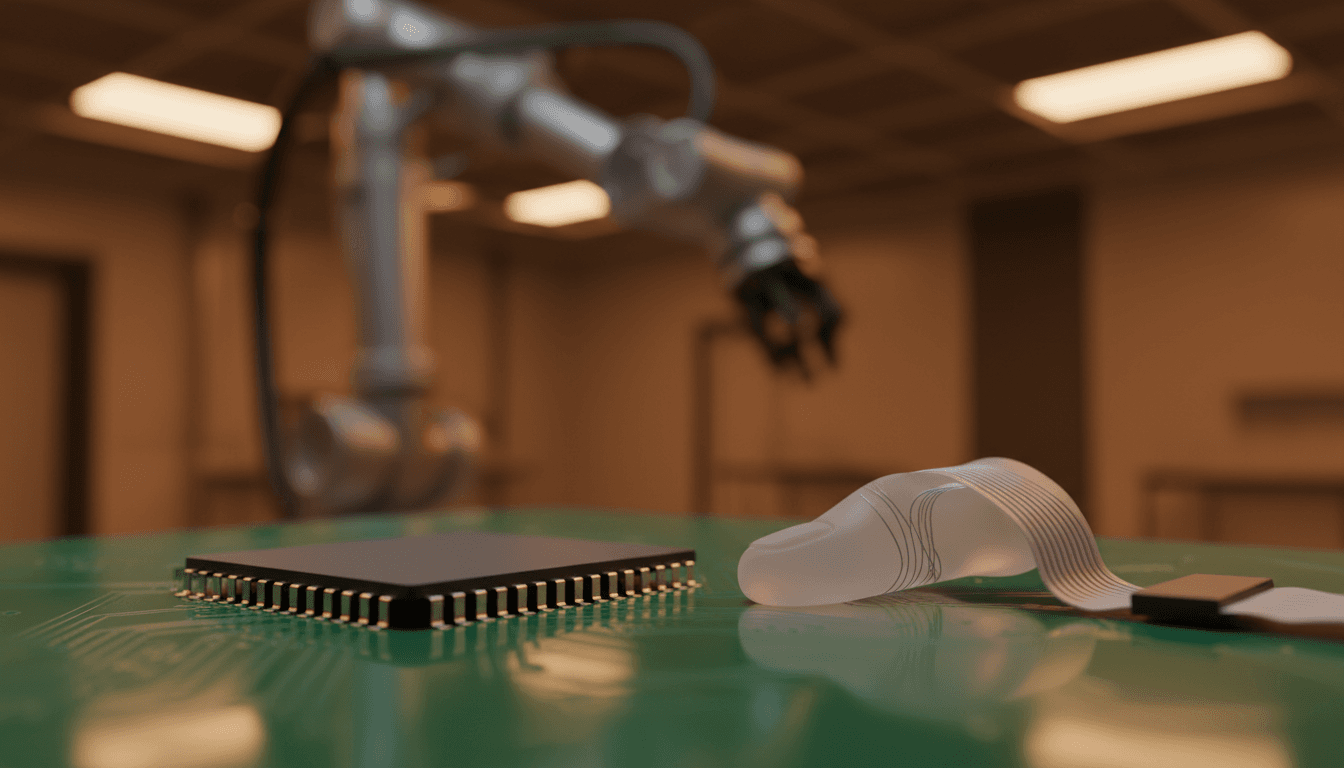

Edge AI Chips That Predict Fast—Like Rock-Paper-Scissors

A reservoir-computing chip from a Hokkaido University and TDK team can predict a person’s rock-paper-scissors gesture in the time it takes to say “shoot.” That’s not a party trick—it’s a clean demo of something industrial teams struggle with every day: making accurate predictions from sensor data with almost no latency and almost no power draw.

Most companies get this wrong by assuming “better AI” always means bigger neural networks and more compute. On factory floors, inside mobile robots, and on wearables, that assumption fails fast. Edge devices don’t have the power budget, thermal headroom, or network reliability to ship every signal to the cloud and wait.

This post breaks down what’s actually interesting about the rock-paper-scissors demo, why reservoir computing is resurfacing right now, and where low-power, real-time prediction chips fit into the larger “Artificial Intelligence & Robotics: Transforming Industries Worldwide” story.

The rock-paper-scissors demo is really a latency benchmark

The key point: the chip isn’t “reading minds.” It’s learning your specific thumb-motion patterns using an accelerometer, then predicting which gesture you’ll land on—rock, paper, or scissors—fast enough to respond before your motion completes.

That’s the real benchmark: closed-loop decision-making under tight timing constraints. In robotics, that’s the difference between:

- A cobot that stops safely when a human hand enters its workspace

- A gripper that adjusts force before an object slips

- An autonomous mobile robot (AMR) that corrects its path when a wheel starts to skid

Rock-paper-scissors just makes the idea instantly understandable: time-series sensor data comes in, the model forecasts the next state, and the system acts immediately.

Why “edge AI latency” is the problem hiding in plain sight

Edge AI conversations often obsess over accuracy. In practice, latency and energy per inference are what make deployments succeed or fail.

If your model is accurate but slow, your robot reacts late. If it’s accurate but power-hungry, your wearable dies by lunch or your embedded controller overheats. If it needs a round trip to the cloud, a spotty connection becomes a safety and uptime risk.

The Hokkaido/TDK chip targets exactly those constraints: fast prediction and ultra-low power. Their prototype reportedly draws about 20 µW per core, or roughly 80 µW across its four cores.

Reservoir computing, explained like you’ll actually use it

Reservoir computing is a machine learning approach built for time-series prediction—signals where what happens next depends on what happened moments ago. That includes vibration, current draw, pressure, accelerometer data, audio, and many control-system signals.

Here’s the simplest way to frame it:

Reservoir computing keeps a “memory” of recent inputs inside a fixed, looped network, then only trains a small output layer.

Reservoir computing vs. neural networks (what changes operationally)

Traditional neural networks (including many deep learning models) typically:

- Pass data forward through layers

- Train by adjusting many weights via backpropagation

- Require substantial compute for training, and often for inference

Reservoir computing flips two assumptions:

- The internal network (“reservoir”) is fixed—you don’t train those connections.

- You train only the readout layer that maps reservoir states to outputs.

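Operationally, this matters because training shrinks to fitting one linear readout, typically a single least-squares or ridge-regression solve, instead of backpropagating through the whole network. That’s why reservoir computing keeps resurfacing when people get serious about edge AI and real-time learning.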
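To make the "fixed reservoir, trained readout" split concrete, here is a minimal software sketch of an echo state network, one common form of reservoir computing. It is not the Hokkaido/TDK circuit, which is analog hardware. The node count, weight ranges, `tanh` nonlinearity, ridge penalty, and the toy sine-wave task are all assumptions chosen for illustration.

```python
import numpy as np

def make_reservoir(n_nodes=100, spectral_radius=0.9, seed=0):
    """Build fixed random weights. These are never trained."""
    rng = np.random.default_rng(seed)
    w = rng.uniform(-1.0, 1.0, size=(n_nodes, n_nodes))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    w_in = rng.uniform(-0.5, 0.5, size=n_nodes)
    return w, w_in

def run_reservoir(w, w_in, u):
    """Drive the reservoir with a 1-D input series and record every state."""
    x = np.zeros(w.shape[0])
    states = np.empty((len(u), w.shape[0]))
    for t, u_t in enumerate(u):
        x = np.tanh(w @ x + w_in * u_t)  # state mixes new input with recent history
        states[t] = x
    return states

def train_readout(states, targets, ridge=1e-6):
    """The only training step: closed-form ridge regression on the states."""
    a = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(a, states.T @ targets)

# Toy task (assumed): predict the next sample of a sine wave.
signal = np.sin(0.1 * np.arange(1200))
w, w_in = make_reservoir()
states = run_reservoir(w, w_in, signal[:-1])   # states[t] has seen signal[0..t]

washout = 100                                   # discard the start-up transient
w_out = train_readout(states[washout:1000], signal[1 + washout:1001])

pred = states[1000:] @ w_out                    # held-out one-step-ahead forecasts
rmse = float(np.sqrt(np.mean((pred - signal[1001:]) ** 2)))
print(f"held-out RMSE: {rmse:.4f}")
```

Notice that `w` and `w_in` are created once and never touched again. Everything the model "learns" lives in `w_out`, a single vector with one weight per node, which is why retraining for a new user or a new machine can be cheap.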

Why “edge of chaos” matters for prediction

Researchers often describe reservoirs as operating at the edge of chaos: dynamic enough to represent many possible states, stable enough to remain usable. In plain terms, the reservoir’s internal loops create a rich set of responses to incoming signals—perfect for extracting structure from messy real-world time series.

If your job is forecasting the next moment of a system that’s noisy, nonlinear, or partially chaotic, a reservoir can be a very practical tool.

Why this chip matters: physical reservoir computing in CMOS

The standout engineering choice here is hardware implementation.

Instead of running the reservoir on a general-purpose processor, the team built an analog reservoir-computing circuit in a CMOS-compatible form. That’s a big deal because CMOS compatibility is what makes a technology manufacturable at scale for embedded devices.

Each artificial “neuron” node reportedly includes:

- A nonlinear resistor (nonlinearity is essential for expressive dynamics)

- A memory element based on MOS capacitors (to hold state over time)

- A buffer amplifier (to keep signals usable across the circuit)

The chip includes four cores, each with 121 nodes.

The practical design tradeoff: a simple cycle reservoir

Connecting nodes in a complex, web-like pattern is painful on silicon. The team used a simple cycle reservoir—nodes connected in one large loop—to reduce wiring complexity.

I like this decision because it’s the kind of compromise real product teams make:

- You accept a simpler topology

- You gain manufacturability and lower design risk

- You still get strong performance on many time-series tasks

This is how edge AI becomes a product instead of a lab demo.
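A software sketch shows how little wiring a ring needs. The 121-node count comes from the chip description. The connection weight of 0.9, the input weights, and the `tanh` nonlinearity are assumptions for illustration, and the real circuit's analog behavior will differ.

```python
import numpy as np

def simple_cycle_reservoir(n_nodes=121, weight=0.9):
    """Ring topology: node i feeds only node (i + 1) % n_nodes."""
    w = np.zeros((n_nodes, n_nodes))
    idx = np.arange(n_nodes)
    w[(idx + 1) % n_nodes, idx] = weight  # row = receiving node, column = sending node
    return w

def step(w, w_in, x, u_t):
    """One reservoir update for a scalar input sample."""
    return np.tanh(w @ x + w_in * u_t)

w = simple_cycle_reservoir()
n_connections = int(np.count_nonzero(w))       # one per node, vs 121 * 121 if fully connected
spectral_radius = float(np.max(np.abs(np.linalg.eigvals(w))))

# Fading memory check: two different starting states, same input stream.
rng = np.random.default_rng(0)
w_in = rng.choice([-0.5, 0.5], size=w.shape[0])  # assumed input weights
x_a = np.zeros(w.shape[0])
x_b = np.ones(w.shape[0])
for u_t in rng.uniform(-1.0, 1.0, size=200):
    x_a = step(w, w_in, x_a, u_t)
    x_b = step(w, w_in, x_b, u_t)
gap = float(np.linalg.norm(x_a - x_b))

print(n_connections, round(spectral_radius, 3), gap)
```

The ring uses 121 connections where a fully connected reservoir of the same size would have 14,641 possible ones. With a loop weight below 1, the two trajectories converge, so the state depends on recent input rather than on where the circuit started. That is the "stable enough to remain usable" half of the design.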

Where low-power prediction chips show up in real industries

The rock-paper-scissors win is fun. The industrial payoff is predicting “what happens next” locally, where the sensor data is born.

Below are realistic, high-value scenarios where low-latency edge AI prediction changes system design.

Manufacturing: predictive maintenance that doesn’t need the cloud

Predictive maintenance often fails because it’s deployed like an IT project: stream everything, store everything, analyze later. On a factory floor, that can be expensive and slow.

A reservoir chip sitting near the motor controller can learn patterns in:

- Vibration signatures

- Motor current waveforms

- Acoustic emissions

…and forecast anomalies in real time. The win isn’t just earlier detection—it’s local, immediate action, such as slowing a machine, rerouting work, or triggering inspection.

Logistics and robotics: tighter control loops for AMRs and pick systems

In warehouses, AMRs and automated picking systems run on tight power budgets and need fast reactions.

A low-power predictor can help with:

- Wheel slip and traction prediction

- Payload shift detection using IMUs

- Predicting human motion near shared workspaces (safety + throughput)

If inference is fast enough, you can move parts of autonomy from “plan, then act” toward “predict and correct continuously.” That’s how systems feel smooth and safe.

Wearables and smart devices: “always-on” without constant charging

Wearables are where power budgets get brutally real. A chip using tens of microwatts is in the range where always-on sensing becomes practical.
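A back-of-the-envelope calculation puts that in perspective. The 20 µW per core and four cores come from the reported prototype. The battery (a coin cell of roughly 225 mAh at 3 V) is an assumed nominal figure. The estimate ignores the sensor, radio, voltage regulator, and self-discharge, so treat it as an upper bound for the predictor alone.

```python
def battery_life_hours(capacity_mah, voltage_v, load_uw):
    """Ideal runtime: stored energy divided by a constant load."""
    energy_mwh = capacity_mah * voltage_v   # mAh * V = mWh
    load_mw = load_uw / 1000.0              # µW -> mW
    return energy_mwh / load_mw

CORES = 4
POWER_PER_CORE_UW = 20.0                    # reported prototype figure
chip_uw = CORES * POWER_PER_CORE_UW         # 80 µW for the whole chip

# Assumed battery: coin cell, about 225 mAh at 3.0 V nominal.
hours = battery_life_hours(capacity_mah=225.0, voltage_v=3.0, load_uw=chip_uw)
days = hours / 24.0
print(f"{chip_uw:.0f} µW load -> {hours:.0f} h, about {days:.0f} days")
```

Under those assumptions the predictor alone would run for close to a year on a coin cell. In a real product the sensor and radio will dominate, which is exactly why keeping inference local and cheap is worth the effort.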

Use cases include:

- Gesture control that works offline

- Activity recognition with fast personalization

- Early warning signals from physiological time series (movement, tremor patterns)

And yes, “trained on your particular gestures” matters. Personalization is often the difference between a feature people try once and a feature they use daily.

Energy and infrastructure: fast forecasting at the edge

Time-series prediction shows up everywhere in infrastructure:

- Grid edge devices predicting load fluctuations

- Pumps predicting cavitation onset

- HVAC systems predicting thermal drift

A low-power predictor allows more distributed intelligence—less dependence on centralized compute, and fewer single points of failure.

People also ask: is this replacing deep learning on the edge?

No—and that’s the right answer.

Reservoir computing is not a universal substitute for deep neural networks. Vision-language models, large-scale perception, and complex planning still benefit from modern deep learning.

The better mental model is:

Use reservoir computing where you need fast, low-power, time-series prediction close to sensors. Use deep learning where you need rich perception and representation learning.

In many robotics stacks, both will coexist:

- Deep learning handles perception (camera/LiDAR understanding)

- Reservoir-style models handle fast prediction and control-adjacent signals

- Classical control handles stability and safety guarantees

That layered approach tends to ship.

How to evaluate reservoir computing for your edge AI roadmap

If you’re responsible for deploying AI in robotics, manufacturing, or smart devices, here’s a practical checklist I’ve found useful.

1) Start with the signal, not the model

Reservoir computing shines when the input is a stream with meaningful temporal structure.

Good candidates:

- IMU streams (accelerometer/gyroscope)

- Vibration/current/pressure waveforms

- Audio snippets

- Any sensor where “the last second matters”

2) Define your hard constraints first

Before choosing any architecture, set:

- Maximum end-to-end latency (milliseconds)

- Energy budget per inference (or per hour)

- Thermal envelope and duty cycle

- On-device training needs (yes/no)

If you can’t articulate these, you’ll overbuild and miss the business target.
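One way to keep those constraints honest is to write them down as data and check every candidate against them. The sketch below is a hypothetical helper, not part of any vendor toolchain. All names and numbers are placeholders, and average power stands in as a rough proxy for thermal envelope and duty cycle.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EdgeBudget:
    max_latency_ms: float
    max_energy_uj_per_inference: float
    max_avg_power_mw: float            # rough proxy for thermal envelope
    needs_on_device_training: bool

@dataclass(frozen=True)
class Candidate:
    name: str
    latency_ms: float                  # measured end to end, not just the model
    energy_uj_per_inference: float
    supports_on_device_training: bool

def violations(budget, candidate, inferences_per_second):
    """Return the list of hard constraints this candidate breaks."""
    problems = []
    if candidate.latency_ms > budget.max_latency_ms:
        problems.append("latency")
    if candidate.energy_uj_per_inference > budget.max_energy_uj_per_inference:
        problems.append("energy per inference")
    # µJ per inference * inferences per second = µW; divide by 1000 for mW.
    avg_power_mw = candidate.energy_uj_per_inference * inferences_per_second / 1000.0
    if avg_power_mw > budget.max_avg_power_mw:
        problems.append("average power")
    if budget.needs_on_device_training and not candidate.supports_on_device_training:
        problems.append("on-device training")
    return problems

# Placeholder numbers for illustration only.
budget = EdgeBudget(max_latency_ms=5.0, max_energy_uj_per_inference=50.0,
                    max_avg_power_mw=1.0, needs_on_device_training=True)
candidate = Candidate(name="hypothetical-mcu-model", latency_ms=12.0,
                      energy_uj_per_inference=40.0,
                      supports_on_device_training=False)
result = violations(budget, candidate, inferences_per_second=100)
print(result)
```

The placeholder candidate passes the per-inference energy limit but still fails on average power once the inference rate is factored in, which is the kind of miss that only shows up when duty cycle is part of the budget.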

3) Decide what must happen on-device

A common winning pattern:

- On-device model does fast detection/prediction

- Only “events” get logged or transmitted

- Cloud retraining happens periodically for fleet-wide improvements

This reduces bandwidth costs and privacy exposure while improving responsiveness.
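The "only events get transmitted" step can be as simple as gating on prediction error. This is a minimal sketch under assumed names and thresholds: it compares each observed sample with the on-device forecast and emits an event only when the gap is large, with an optional cooldown to avoid reporting the same disturbance repeatedly.

```python
from typing import Iterable, Iterator, NamedTuple

class Event(NamedTuple):
    index: int
    predicted: float
    observed: float
    error: float

def detect_events(observed: Iterable[float], predicted: Iterable[float],
                  threshold: float, cooldown: int = 0) -> Iterator[Event]:
    """Yield an event when |observed - predicted| exceeds threshold.

    After an event, further events are suppressed for `cooldown` samples.
    """
    quiet_until = -1
    for i, (obs, pred) in enumerate(zip(observed, predicted)):
        err = abs(obs - pred)
        if err > threshold and i > quiet_until:
            quiet_until = i + cooldown
            yield Event(i, pred, obs, err)

# Made-up samples: the signal jumps at index 3 and stays high at index 4.
observed = [0.0, 0.1, 0.2, 1.5, 1.6, 0.3]
predicted = [0.0, 0.1, 0.2, 0.3, 0.3, 0.3]
events = list(detect_events(observed, predicted, threshold=0.5, cooldown=1))
print(f"{len(observed)} samples seen, {len(events)} event(s) sent: {events}")
```

Here six samples collapse into one transmitted record. The threshold and cooldown are the knobs you would tune per signal, and they belong in the same reviewable config as the rest of the deployment.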

4) Watch the integration friction

The quiet killer is integration:

- Can the chip fit your existing sensor pipeline?

- Is calibration straightforward?

- Can you update the readout layer safely?

- What happens when the signal drifts over months?

Edge AI succeeds when maintenance is boring.

What this means for AI-powered robotics in 2026

The message I take from the Hokkaido/TDK work is simple: the next wave of AI in robotics won’t be defined only by bigger models. It’ll be defined by where intelligence runs—right next to sensors—and how quickly it can predict what happens next.

The rock-paper-scissors demo is a proxy for the real goal: human-machine interaction that feels instant, and automation that stays reliable even when networks and power budgets don’t cooperate.

If you’re building products in manufacturing, logistics, or smart devices, it’s time to treat low-power edge AI hardware as a design option—not an afterthought. Where could faster, cheaper prediction change your system from “reactive” to “anticipatory”?