IEEE AI ethics certification helps teams reduce bias, improve transparency, and align with regulation. See how CertifAIEd applies to people and products.

IEEE AI Ethics Certification: Trustworthy AI at Scale

Most companies are already using AI in ways that create real operational risk—often without realizing it. The risk isn’t just “the model might be wrong.” It’s that an autonomous intelligent system can harm people, trigger regulatory exposure, or quietly erode trust in your brand: biased screening in hiring, misidentification in surveillance workflows, privacy leaks in customer support automation, or synthetic media used to spread misinformation.

That’s why the arrival of two new AI ethics certifications from IEEE (one for professionals, one for products) matters more than it sounds at first. If your organization is serious about deploying AI across workflows—especially in AI-powered automation and robotics—ethics can’t be a slide deck. It has to become a repeatable practice with owners, checklists, and independent assessment.

IEEE’s CertifAIEd program is a practical signal that AI governance is maturing. Not in a philosophical way. In a “who’s accountable, how do we document decisions, how do we prove compliance, and how do we keep models from drifting into unacceptable behavior” way.

Why AI ethics certification is showing up everywhere

AI ethics certification is becoming mainstream for one reason: AI is moving from experiments to production systems that affect money, safety, and civil rights.

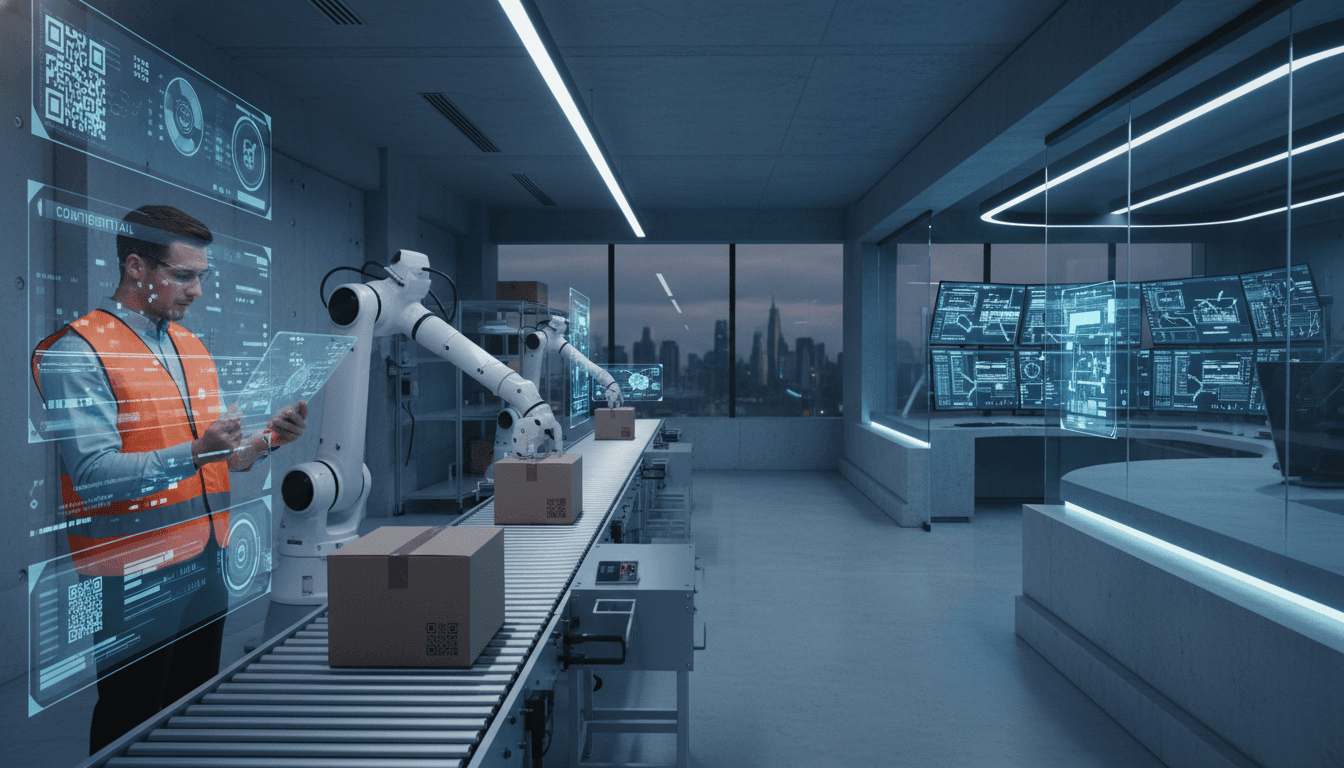

In the “Artificial Intelligence & Robotics: Transforming Industries Worldwide” series, we’ve tracked how AI-driven automation changes factories, hospitals, warehouses, and city infrastructure. The pattern is consistent: as AI becomes embedded in operations, the cost of a failure becomes nonlinear. A mistake isn’t a bad dashboard—it can be a denied loan, a wrongfully flagged customer, or a robotic process that makes a thousand bad decisions per hour.

Three forces are pushing certification forward:

1) Trust is now a procurement requirement

Large enterprises and public agencies increasingly want proof that AI systems are transparent, accountable, privacy-respecting, and bias-aware. “We tested it internally” doesn’t travel well across procurement, audits, or board scrutiny.

2) Regulation is tightening (and going global)

In 2025, compliance isn’t a single-country problem. Many organizations are aligning their AI programs to the European Union AI Act and similar frameworks emerging worldwide. Even if you don’t sell in the EU, you’ll feel the gravitational pull because partners, customers, and insurers will.

3) AI incidents are easier to create than to clean up

Deepfakes and realistic synthetic media can damage reputations fast. Bias claims can trigger legal reviews and stall product launches. Privacy failures can force notification obligations and regulatory fines. It’s cheaper to build governance upfront than to do emergency forensics later.

A simple stance I’ll defend: If an AI system touches hiring, lending, identity, health, or physical operations, you should treat ethics and compliance like security—measurable and continuously maintained.

What IEEE CertifAIEd actually certifies (and why that’s useful)

IEEE CertifAIEd is built around an AI ethics methodology organized under four practical pillars: accountability, privacy, transparency, and avoiding bias. These aren’t abstract values; they map cleanly to day-to-day decisions teams make when deploying autonomous intelligent systems (AIS).

Here’s how those pillars show up in real-world AI deployment:

Accountability: someone owns the outcome

Accountability means you can answer questions like:

- Who approved this model for production?

- Who monitors its performance and failures?

- What happens when the system is wrong?

- What’s the escalation path when harm is detected?

In robotics and industrial automation, this matters because AI decisions can become physical actions. If a computer vision model misclassifies a safety zone, the incident isn’t a customer complaint—it’s an injury report.

Privacy: data minimization isn’t optional

Privacy in AI is often where “smart” turns into “creepy.” Customer support bots, employee monitoring tools, and smart-city systems can collect far more than needed.

Ethical deployment means being explicit about:

- What data is collected and why

- How long it’s retained

- Who can access it

- Whether it’s used for training (and under what consent)
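One way to make those four questions concrete is a small data inventory that flags gaps automatically. The sketch below is illustrative only: the `DataField` record, its field names, and the `privacy_gaps` helper are hypothetical and are not part of the IEEE CertifAIEd criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataField:
    """One collected data element (hypothetical schema for illustration)."""
    name: str
    purpose: str                   # why the data is collected
    retention_days: int            # how long it is kept; 0 means undefined
    access_roles: tuple[str, ...]  # who can access it
    used_for_training: bool
    training_consent: bool


def privacy_gaps(field: DataField) -> list[str]:
    """Return the unanswered privacy questions for one data field."""
    gaps = []
    if not field.purpose.strip():
        gaps.append("no stated purpose")
    if field.retention_days <= 0:
        gaps.append("no retention limit")
    if not field.access_roles:
        gaps.append("no access roles defined")
    if field.used_for_training and not field.training_consent:
        gaps.append("used for training without consent")
    return gaps


# Example: a support-bot transcript that is reused for training without consent.
transcript = DataField(
    name="chat_transcript",
    purpose="resolve support tickets",
    retention_days=90,
    access_roles=("support_agent",),
    used_for_training=True,
    training_consent=False,
)
print(privacy_gaps(transcript))  # ['used for training without consent']
```

An empty list means every question has an answer on file. It does not mean the answers are good ones; that still takes human review.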

Transparency: people deserve understandable systems

Transparency isn’t “publish the full model weights.” In business reality, transparency means:

- You can explain what the system is doing at the level stakeholders need

- You can document training data lineage and limitations

- Users aren’t deceived about whether they’re interacting with AI

For AI-driven efficiency to be sustainable, people must be able to challenge decisions and understand boundaries.

Avoiding bias: measure it, don’t just promise it

Bias becomes operational when it’s baked into training data, labeling practices, and evaluation metrics. That’s how discrimination can sneak into hiring, lending, pricing, or policing-like workflows.

Ethical AI programs treat bias as an engineering and governance problem:

- Define fairness metrics appropriate to the domain

- Test across subgroups (not just overall accuracy)

- Monitor drift and feedback loops
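To show what "test across subgroups" can look like in practice, here is a minimal sketch that computes per-group selection rates and the ratio between the lowest and highest rate. The metric choice is my assumption, not something the IEEE methodology prescribes: the four-fifths (0.8) threshold is a rule of thumb from US employment practice, and the right fairness metric depends on your domain. The function names and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest


# Made-up screening outcomes: group A passes 6 of 10, group B passes 3 of 10.
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)

THRESHOLD = 0.8  # assumed review trigger (four-fifths rule of thumb)
print(rates)              # {'A': 0.6, 'B': 0.3}
print(ratio < THRESHOLD)  # True: flag this system for review
```

Running the same calculation on a schedule, against fresh production decisions, is a simple starting point for the drift monitoring mentioned above.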

The professional certification: who it’s for and how it helps

The IEEE CertifAIEd professional certification is designed for people who assess whether an autonomous intelligent system adheres to IEEE’s ethics methodology.

Eligibility is notably practical: at least one year of experience using AI tools or systems in your organization’s processes. And IEEE’s message here is the right one: you don’t have to be an AI engineer to do meaningful ethical oversight.

According to IEEE SA’s program leadership, this certification is relevant to roles such as:

- HR and talent teams using AI screening tools

- Insurance underwriters using automated risk scoring

- Policy and compliance teams shaping AI governance

- Operations leaders deploying AI decision support

That matters because many AI harms happen outside the data science team. The tool is purchased, integrated into workflows, and then quietly influences decisions at scale.

What the training covers (in business terms)

The curriculum focuses on how to:

- Ensure AI systems are open and understandable (practical transparency)

- Identify and mitigate algorithmic bias

- Protect personal data

It also includes use cases, which is where these programs either succeed or fail. I’ve found that teams change behavior faster when they can map principles to “what would we do in our loan approvals workflow?” rather than “what is fairness?”

Cost, format, and validity

Courses are offered in virtual, in-person, and self-study formats. Learners take a final exam, and successful candidates receive a three-year certification.

For self-study exam prep, pricing is listed as US $599 for IEEE members and US $699 for nonmembers.

The product certification: turning ethics into a customer-facing signal

The IEEE CertifAIEd product certification evaluates whether an organization’s AI tool or AIS conforms to the IEEE framework and aligns with legal and regulatory principles, explicitly including the EU AI Act.

This is important: product certification shifts ethics from “internal aspiration” to “external commitment.” In procurement-heavy industries—manufacturing, healthcare, logistics, government—external signals reduce friction.

How product assessment works

An IEEE CertifAIEd assessor evaluates the product against the criteria. The program is reported to have 300+ authorized assessors.

After assessment:

- The company submits the results to IEEE Conformity Assessment

- IEEE certifies the product

- A certification mark is issued

The promise is straightforward: customers can interpret the mark as evidence the product conforms to the latest IEEE AI ethics specifications.

Why this matters for AI + robotics deployments

In robotics and autonomous systems, AI often arrives as part of a vendor package: sensors + software + an autonomy layer. A certification mark helps operations teams and risk owners answer:

- Does this vendor have a repeatable ethics process?

- Can we document due diligence?

- Are there ongoing checks, not just a one-time audit?

And yes, it’s also a competitive differentiator. But the bigger win is risk containment.

A practical roadmap: how to use certification in your AI governance

Certifications don’t replace good governance—but they can accelerate it. Here’s a pragmatic way to integrate IEEE CertifAIEd into your AI strategy without turning it into bureaucracy theater.

1) Start by classifying your AI use cases

Treat AI like you treat safety or financial controls: not every system needs the same rigor.

A simple three-tier model works:

- Tier 1 (High impact): hiring, lending, identity verification, medical triage, surveillance, robotics safety

- Tier 2 (Medium impact): customer service summarization, fraud triage with human approval, demand forecasting

- Tier 3 (Low impact): internal productivity tools with no personal data, non-decisional copilots

Prioritize certification efforts around Tier 1 and critical Tier 2 systems.
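If you want the tiering to be consistent across business units, it helps to write the rule down as code. This is a rough sketch under my own assumptions: the `UseCase` fields and the decision rule are hypothetical simplifications of the three tiers above, and a real classification would involve human judgment on edge cases such as "critical" Tier 2 systems.

```python
from dataclasses import dataclass

# Domains listed as Tier 1 (high impact) in the three-tier model above.
HIGH_IMPACT_DOMAINS = {
    "hiring",
    "lending",
    "identity verification",
    "medical triage",
    "surveillance",
    "robotics safety",
}


@dataclass(frozen=True)
class UseCase:
    """Hypothetical inventory record for one AI use case."""
    name: str
    domain: str
    uses_personal_data: bool
    informs_decisions: bool  # does its output feed a decision about people or operations?


def classify(use_case: UseCase) -> int:
    """Return 1 (high), 2 (medium), or 3 (low) impact."""
    if use_case.domain in HIGH_IMPACT_DOMAINS:
        return 1
    if use_case.uses_personal_data or use_case.informs_decisions:
        return 2
    return 3


inventory = [
    UseCase("resume screener", "hiring", True, True),
    UseCase("support ticket summarizer", "customer service", True, False),
    UseCase("internal coding copilot", "productivity", False, False),
]
for use_case in inventory:
    print(use_case.name, "-> Tier", classify(use_case))
```

The value is less in the code than in forcing every AI system into an inventory where someone has to fill in those fields.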

2) Put certified people where decisions actually happen

If your AI governance team sits far from procurement, HR, operations, and legal, it won’t stick.

A structure that works:

- 1–2 certified professionals embedded in major business units using AI

- A central AI governance lead to coordinate standards and reporting

- A recurring review cadence (quarterly for high-impact systems)

3) Use product certification for vendor management

If you buy AI systems (and most companies do), ask vendors for:

- Evidence of independent assessment

- Monitoring practices and incident reporting procedures

- Change management: what happens when models update?

If a vendor can present a recognized product certification, your vendor risk review becomes faster and more defensible.

4) Make “ethics” measurable

Teams get stuck when ethics stays subjective. Make it measurable with artifacts:

- Model cards and data sheets

- Bias and performance evaluation across subgroups

- Privacy impact assessments

- Human oversight and appeal processes

Certification can help standardize what “good documentation” looks like.
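A lightweight way to enforce this is a release gate that checks whether each artifact exists before a system ships. The sketch below assumes a simple dictionary of artifact names to document locations. The key names and file paths are hypothetical, and the list mirrors the artifacts above rather than any official IEEE checklist.

```python
# Artifact names mirror the list above; the keys themselves are illustrative.
REQUIRED_ARTIFACTS = (
    "model_card",
    "data_sheet",
    "subgroup_evaluation",
    "privacy_impact_assessment",
    "oversight_and_appeal_process",
)


def missing_artifacts(evidence: dict[str, str]) -> list[str]:
    """Return required artifacts with no document location on file."""
    return [
        artifact
        for artifact in REQUIRED_ARTIFACTS
        if not evidence.get(artifact, "").strip()
    ]


# Example evidence record for one system (paths are placeholders).
evidence = {
    "model_card": "docs/model_card.md",
    "data_sheet": "docs/data_sheet.md",
    "subgroup_evaluation": "",
}
print(missing_artifacts(evidence))
# ['subgroup_evaluation', 'privacy_impact_assessment', 'oversight_and_appeal_process']
```

A check like this only proves the documents exist. Whether they are any good is what reviewers and assessors are for.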

Common questions leaders ask (and direct answers)

“Do we really need an AI ethics certification if we already have security and compliance?”

Yes—because security and compliance programs typically don’t cover bias, explainability, or human impact with enough specificity. AI needs its own controls, even if they’re integrated into existing governance.

“Is certification only for tech companies?”

No. The fastest-moving need is in non-tech organizations using AI in business processes: insurers, retailers, manufacturers, logistics providers, healthcare networks, and city infrastructure operators.

“Will this slow down AI adoption?”

It slows down reckless adoption. It speeds up sustainable adoption by reducing surprise failures, stalled launches, and procurement dead-ends.

Where this fits in the bigger AI & robotics story

AI-powered robotics and autonomous systems are scaling globally because they deliver tangible benefits: fewer defects, faster throughput, better forecasting, safer inspections, and more responsive service operations.

But there’s a tradeoff: the more autonomy you introduce, the more you need trust infrastructure—the processes, assessments, and accountability mechanisms that keep systems aligned with human values and legal requirements.

IEEE CertifAIEd is one of the clearest signals that AI ethics is becoming operational. For teams building and deploying autonomous intelligent systems, that’s good news.

If you’re planning your 2026 AI roadmap right now, here’s the move I’d make: identify your highest-impact AI systems and ensure at least a few people on the team can run credible ethics assessments—then push key vendors toward independent product certification.

The next wave of AI winners won’t just ship models faster. They’ll prove their systems deserve to be trusted. What would it take for your organization to say that with a straight face?