Agentic AI leaves a hidden data trail. Learn six engineering habits to shrink retention, tighten access, and keep autonomy without sacrificing privacy.

Shrink Agentic AI Data Trails Without Losing Autonomy

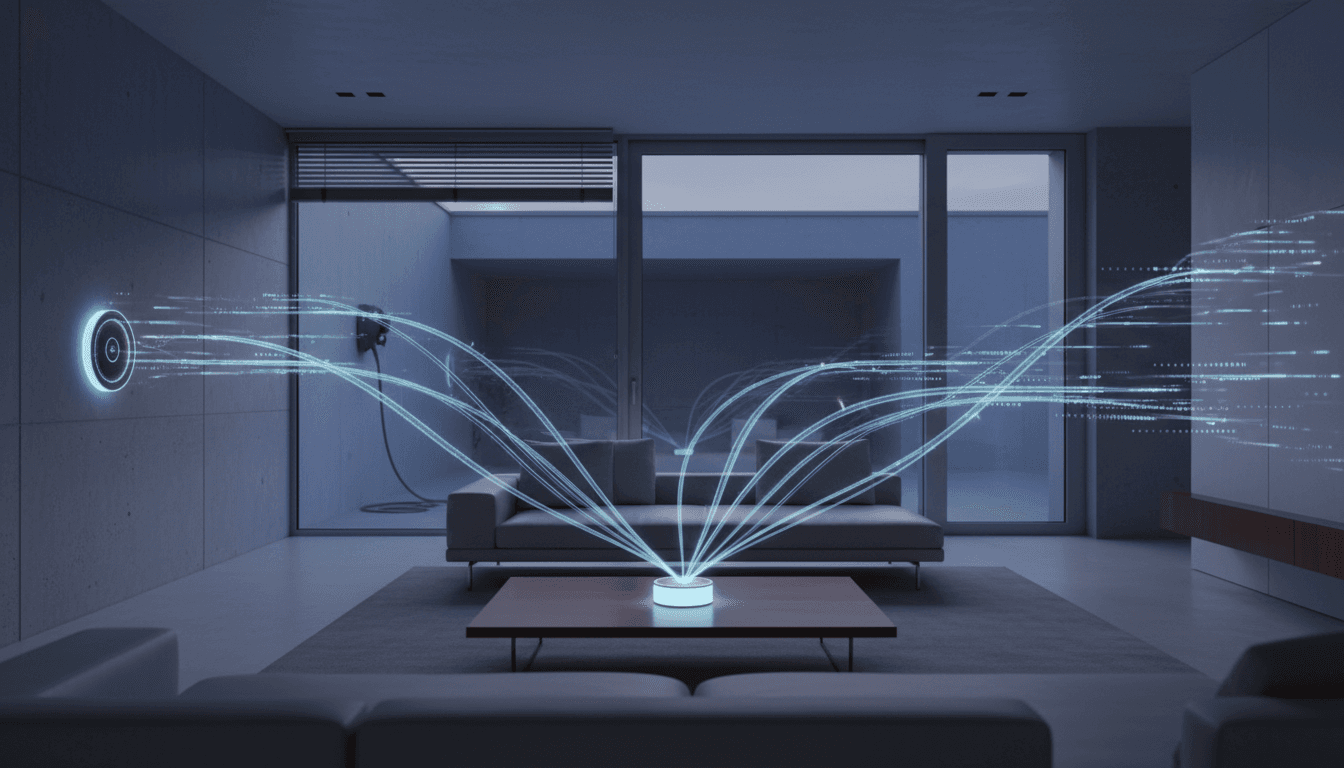

A typical smart home “optimizer” doesn’t just flip a thermostat. It watches, predicts, decides, and acts—often dozens or hundreds of times per day. That’s what makes agentic AI so useful… and why it leaves such a large data footprint by default.

Many teams get this wrong: they treat privacy as a policy problem (“add a consent screen”) instead of an engineering problem (“stop producing and retaining so much data in the first place”). The engineering fix is more tractable than it sounds. If your AI agent can plan and execute tasks, it can also plan and execute data minimization, as long as you design it that way.

This matters well beyond smart homes. The same plan–act–reflect loop now shows up in enterprise copilots, warehouse robotics orchestration, IT automation agents, customer service agents, and travel-booking assistants. As AI and robotics keep transforming industries worldwide, the organizations that win leads and trust in 2026 won’t be the ones with the most features—they’ll be the ones with credible, verifiable data governance.

Why agentic AI creates a “hidden data trail”

Agentic AI creates a hidden data trail because planning and reliability depend on recording context: what the user wanted, what tools the agent used, what it tried, what happened, and what it learned.

In a smart-home scenario, an agent might:

- Pull electricity price feeds and weather forecasts

- Learn household comfort preferences

- Adjust thermostats and blinds

- Schedule EV charging

- Log outcomes to improve next week’s plan

Each of those steps can generate artifacts that stick around.

The trail isn’t one log—it’s many copies

A common misconception is that the “data” is only the chat transcript. In practice, agentic systems produce multiple layers of records:

- Planner traces (intermediate reasoning, tool calls, decisions)

- Action logs (what changed, when, and on which device/system)

- Caches (weather, prices, embeddings, summaries)

- Reflections (post-run notes that quietly become behavioral profiles)

- Permissions and tokens (proof the agent could access sensitive tools)

- Third-party telemetry (device manufacturers, analytics SDKs, cloud monitors)

Even if the agent stores pseudonymous profiles locally and avoids cameras/mics, it can still accumulate a surprisingly intimate picture of routines: wake times, occupancy patterns, travel days, charging behavior, and work-from-home cadence.

Reliability pushes teams toward “keep everything”

Teams building AI-powered automation often default to broad retention because it makes debugging easier. When something breaks, logs are comforting. But “keep everything forever” turns operational convenience into long-term risk:

- Security exposure: more stored data means a larger breach blast radius

- Compliance load: retention policies, deletion requests, audit readiness

- Trust erosion: users and buyers increasingly ask, “What do you store?”

If you’re building agentic AI for any industry—manufacturing, logistics, healthcare operations, smart buildings—this is the same pattern. Autonomy increases value, but autonomy also increases the amount of behavioral exhaust.

The smart home is a preview of AI + robotics everywhere

Smart homes look like consumer tech, but architecturally they’re close cousins of industrial AI and robotics.

A home optimizer is basically a small-scale orchestration system:

- Sensors and signals (prices, weather, motion/door sensors)

- Actuators (thermostats, blinds, plugs, chargers)

- A controller (agentic AI planner)

- Feedback loops (did comfort improve? did cost drop?)

Now zoom out:

- A warehouse agent schedules pick paths, charging cycles, and robot downtime.

- A hospital operations agent coordinates staffing, beds, and supply ordering.

- A smart building agent manages HVAC, lighting, and occupancy zones.

In every case, the same question shows up in procurement and security reviews: Can you prove your agent doesn’t retain more data than it needs?

That’s why shrinking data trails isn’t a “nice-to-have.” It’s a go-to-market advantage—especially for lead generation in regulated or security-sensitive industries.

Six engineering habits that shrink agentic AI data trails

You don’t need a new privacy doctrine. You need disciplined implementation patterns that match how agents actually work.

1) Constrain memory to the task window

The most effective move is also the least glamorous: limit what the agent can remember, and for how long.

For a home optimizer, a practical approach is a one-week run window:

- The agent can use working memory for that week’s plan and adjustments.

- “Reflections” are structured and minimal (think checkboxes and short fields, not freeform diaries).

- Anything that persists gets an expiration timestamp.

Snippet-worthy rule: If the agent can’t justify why it needs the data next week, it shouldn’t exist next week.
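To make the one-week window concrete, here is a minimal sketch, assuming an in-memory store (the names `TaskWindowMemory`, `Reflection`, and their fields are hypothetical, not from any particular framework). Every write gets an expiry tied to the window, reflections are a fixed schema rather than free text, and expired items are deleted rather than merely hidden.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass, field
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Reflection:
    """Hypothetical structured post-run note: fixed fields instead of a freeform diary."""
    comfort_ok: bool
    cost_reduced: bool
    manual_overrides: int


@dataclass
class MemoryItem:
    value: dict
    expires_at: datetime


@dataclass
class TaskWindowMemory:
    """Hypothetical store that caps what the agent keeps to one task window."""
    window: timedelta = timedelta(days=7)
    items: dict[str, MemoryItem] = field(default_factory=dict)

    def remember(self, key: str, value: dict, now: datetime) -> None:
        # Nothing is persisted without an expiration timestamp.
        self.items[key] = MemoryItem(value, expires_at=now + self.window)

    def remember_reflection(self, run_id: str, note: Reflection, now: datetime) -> None:
        self.remember(f"reflection:{run_id}", asdict(note), now)

    def recall(self, key: str, now: datetime) -> dict | None:
        item = self.items.get(key)
        if item is None:
            return None
        if item.expires_at <= now:
            del self.items[key]  # expired data is deleted on access, not just hidden
            return None
        return item.value

    def purge_expired(self, now: datetime) -> list[str]:
        """Meant to run on a schedule so expired items vanish even if never read again."""
        expired = [k for k, item in self.items.items() if item.expires_at <= now]
        for k in expired:
            del self.items[k]
        return expired
```

The design choice worth copying is that expiry is set at write time, so "how long do we keep this?" is answered before the data exists rather than during a cleanup project later.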

2) Make deletion simple, thorough, and confirmable

Deletion fails in agentic systems because data is scattered: local logs, cloud stores, embeddings, caches, monitoring tools.

A strong pattern is run-based tagging:

- Every artifact (plans, tool outputs, logs, embeddings, caches) gets a `run_id`.

- A single “delete this run” command cascades across systems.

- The system returns a human-readable confirmation of what was removed.

Keep a separate minimal audit trail for accountability—event metadata only, on its own retention clock.

What I’ve found works well in practice: treat deletion like a product feature, not a backend chore. If users can’t verify it, they won’t trust it.
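Run-based tagging and cascading deletion can be sketched as follows, assuming each backend can be wrapped in a small adapter (`Store` and `RunRegistry` are hypothetical stand-ins for real log stores, caches, and vector indexes). The registry deletes a run everywhere, re-checks that nothing tagged with that `run_id` remains, writes a metadata-only audit event, and returns a human-readable confirmation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Store:
    """Hypothetical adapter for one backend (action log, cache, vector index)."""
    name: str
    records: dict[str, dict] = field(default_factory=dict)  # record_id -> record

    def put(self, record_id: str, run_id: str, payload: dict) -> None:
        # Every artifact is tagged with the run that produced it.
        self.records[record_id] = {**payload, "run_id": run_id}

    def delete_run(self, run_id: str) -> int:
        doomed = [rid for rid, rec in self.records.items() if rec["run_id"] == run_id]
        for rid in doomed:
            del self.records[rid]
        return len(doomed)

    def count_run(self, run_id: str) -> int:
        return sum(1 for rec in self.records.values() if rec["run_id"] == run_id)


@dataclass
class RunRegistry:
    stores: list[Store]
    audit: list[dict] = field(default_factory=list)  # event metadata only

    def delete_run(self, run_id: str) -> str:
        removed = {s.name: s.delete_run(run_id) for s in self.stores}
        leftover = sum(s.count_run(run_id) for s in self.stores)
        # The audit entry records that a deletion happened, never the deleted content.
        self.audit.append({
            "event": "run_deleted",
            "run_id": run_id,
            "at": datetime.now(timezone.utc).isoformat(),
            "counts": removed,
        })
        status = "verified: nothing left" if leftover == 0 else f"WARNING: {leftover} item(s) remain"
        lines = [f"- {name}: {n} item(s) removed" for name, n in removed.items()]
        return f"Run {run_id} deleted ({status})\n" + "\n".join(lines)
```

In a real system each adapter would call its backend's own delete API, and the audit list would need its own retention clock (not shown here).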

3) Use short-lived, task-specific permissions

Overbroad access is how agents become scary. It’s also how data trails balloon.

Instead of “always-on” permissions, issue temporary capability keys:

- Thermostat control key valid for 15 minutes

- EV charging scheduler key valid for one action

- Blind tilt key valid for a specific time window

When keys expire quickly, the agent:

- Can’t keep “trying things” indefinitely

- Stores less sensitive context “just in case”

- Reduces the damage if credentials are exposed

This is as relevant in enterprise automation as it is in homes. AI agents that touch calendars, ticketing systems, ERP, or robotics controllers should operate on least privilege + short duration.
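A minimal sketch of temporary capability keys, assuming an in-process issuer (the class names and scope strings such as `thermostat:set` are hypothetical). Each key is bound to one scope, expires after a TTL, and can optionally be limited to a number of uses, which covers both the 15-minute thermostat key and the one-action EV charging key.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta


class CapabilityError(Exception):
    pass


@dataclass
class CapabilityKey:
    scope: str              # hypothetical scope name, e.g. "thermostat:set"
    expires_at: datetime
    uses_left: int | None   # None = unlimited until expiry


class CapabilityIssuer:
    """Hypothetical issuer of short-lived, task-specific keys."""

    def __init__(self) -> None:
        self._keys: dict[str, CapabilityKey] = {}

    def issue(self, scope: str, now: datetime, ttl: timedelta,
              max_uses: int | None = None) -> str:
        token = secrets.token_urlsafe(16)  # unguessable random token
        self._keys[token] = CapabilityKey(scope, now + ttl, max_uses)
        return token

    def authorize(self, token: str, scope: str, now: datetime) -> None:
        """Raise CapabilityError unless the token is valid for this scope right now."""
        key = self._keys.get(token)
        if key is None or key.scope != scope:
            raise CapabilityError("unknown token or wrong scope")
        if now >= key.expires_at:
            del self._keys[token]
            raise CapabilityError("expired")
        if key.uses_left is not None:
            if key.uses_left <= 0:
                raise CapabilityError("no uses left")
            key.uses_left -= 1
            if key.uses_left == 0:
                del self._keys[token]  # single-use keys disappear after use
```

A production deployment would more likely lean on an existing short-lived token mechanism from its identity provider; the point of the sketch is the shape: narrow scope, short lifetime, and a hard stop once either runs out.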

4) Provide a readable agent trace (not just logs)

If you want trust, give users visibility that doesn’t require a security engineer.

A good agent trace answers, in plain language:

- What the agent intended to do

- What it actually did

- Which data sources it accessed

- Where outputs were stored

- When each stored item will be deleted

Two must-haves:

- Exportability (users can download a trace)

- One-click purge (delete data for a run/account with confirmation)

In B2B settings, this becomes a sales asset: buyers can show internal stakeholders a clear trace instead of hand-waving.
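One way to structure such a trace is sketched below, assuming each agent step is recorded with the five questions above as fields (`TraceStep` and `AgentTrace` are hypothetical names). It renders a plain-language summary, flags any stored output that lacks a deletion date, and exports JSON for download.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import datetime


@dataclass
class TraceStep:
    intended: str             # what the agent meant to do
    did: str                  # what it actually did
    sources: list[str]        # data sources it accessed
    stored_in: str | None     # where output was stored (None = not stored)
    delete_at: datetime | None


@dataclass
class AgentTrace:
    run_id: str
    steps: list[TraceStep] = field(default_factory=list)

    def render(self) -> str:
        """Plain-language summary a non-engineer can read."""
        out = [f"Run {self.run_id}"]
        for i, s in enumerate(self.steps, 1):
            if s.stored_in is None:
                kept = "not saved"
            elif s.delete_at is None:
                kept = f"saved to {s.stored_in} with NO deletion date"  # surface the gap
            else:
                kept = f"saved to {s.stored_in} until {s.delete_at:%Y-%m-%d}"
            used = ", ".join(s.sources) or "no data"
            out.append(f"{i}. Planned: {s.intended}. Did: {s.did}. Used: {used}. Output {kept}.")
        return "\n".join(out)

    def export_json(self) -> str:
        """Downloadable copy of the trace; datetimes are serialized with str()."""
        return json.dumps(asdict(self), default=str, indent=2)
```

Keeping "intended" and "did" as separate fields is deliberate: the gap between them is often the most useful thing a user or reviewer can see.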

5) Enforce “least intrusive data” by design

Agents tend to escalate. If a signal is ambiguous, they grab more data.

A privacy-first rule is: use the least intrusive method that achieves the task.

Smart home example:

- If occupancy can be inferred from door sensors + motion detection, don’t pull a camera snapshot.

- Prohibit escalation unless it’s strictly necessary and no equally effective alternative exists.

Industrial parallel:

- If a factory agent can detect anomalies from vibration sensors, don’t start collecting high-resolution video of workstations by default.

This habit protects users and reduces operational cost—because storing and securing “richer” data (video, audio, raw sensor streams) is expensive.
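The escalation rule can be expressed as a small policy function. This is a sketch under stated assumptions: each data source returns an occupancy probability (or `None` if unavailable), sources carry a hypothetical intrusiveness rank, and anything marked `requires_approval` (camera, microphone) is denied unless explicitly approved. The function stops at the first source that is confident either way, so richer data is only read when cheaper signals are genuinely ambiguous.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Source:
    name: str
    intrusiveness: int                  # hypothetical rank: higher = more intrusive
    read: Callable[[], float | None]    # occupancy probability 0..1, or None if unavailable
    requires_approval: bool = False     # e.g. camera, microphone


def infer_occupancy(
    sources: list[Source],
    needed_confidence: float,
    approved: frozenset[str] = frozenset(),
) -> tuple[bool | None, list[str]]:
    """Try sources from least to most intrusive; stop as soon as one is decisive.

    Returns (occupied, or None if undecided; names of the sources actually read).
    """
    used: list[str] = []
    for src in sorted(sources, key=lambda s: s.intrusiveness):
        if src.requires_approval and src.name not in approved:
            continue  # default deny for intrusive sources
        p = src.read()
        used.append(src.name)
        if p is None:
            continue
        # Confident either way (occupied or empty): no need to escalate further.
        if p >= needed_confidence or p <= 1 - needed_confidence:
            return p >= needed_confidence, used
    return None, used
```

Returning the list of sources actually read doubles as input for the agent trace, so users can see that the camera was never touched.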

6) Practice mindful observability

Observability is where well-meaning teams accidentally build surveillance.

Mindful observability means:

- Log only essential identifiers

- Avoid storing raw sensor data when summaries will do

- Cap logging frequency and volume

- Disable third-party analytics by default

- Give every stored record a retention limit

If you’re using LLM-based agents, apply the same principle to prompts and tool outputs. You usually don’t need a perfect transcript of everything the model saw—especially if it contains personal routines.
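Several of these rules can be enforced in the logging layer itself rather than left to discipline. The sketch below uses the standard library's `logging.Filter`; the `MindfulFilter` name, the `fields` convention for structured data passed via `extra`, and the per-minute cap are assumptions for illustration. Non-allowlisted fields are stripped before any handler sees them, and records beyond the cap are dropped.

```python
from __future__ import annotations

import logging
import time


class MindfulFilter(logging.Filter):
    """Hypothetical filter: allowlisted fields only, plus a hard per-minute cap."""

    def __init__(self, allowed_fields: set[str], max_per_minute: int, clock=time.monotonic):
        super().__init__()
        self.allowed = allowed_fields
        self.max_per_minute = max_per_minute
        self.clock = clock
        self._window_start = clock()
        self._count = 0

    def filter(self, record: logging.LogRecord) -> bool:
        now = self.clock()
        if now - self._window_start >= 60:
            self._window_start, self._count = now, 0  # start a new one-minute window
        if self._count >= self.max_per_minute:
            return False  # over the cap: drop the record
        self._count += 1
        fields = getattr(record, "fields", {})
        # Keep only essential identifiers; raw sensor data and the rest are discarded here.
        record.fields = {k: v for k, v in fields.items() if k in self.allowed}
        return True


# Usage (hypothetical field names):
# logger = logging.getLogger("agent")
# logger.addFilter(MindfulFilter({"run_id", "action"}, max_per_minute=60))
# logger.info("step", extra={"fields": {"run_id": "r1", "action": "set_temp"}})
```

For the retention limit, the standard library's `logging.handlers.TimedRotatingFileHandler` with a `backupCount` caps how many rotated log files are kept, which gives file-based logs a built-in expiry.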

What a privacy-first agentic AI system looks like in practice

A privacy-first agent isn’t less capable. It’s more intentional.

In the home optimizer scenario, the user experience can stay “magical”:

- The living room is precooled before peak pricing.

- Blinds adjust before midday heat.

- EV charging happens when rates drop.

But under the hood, the system behaves differently:

- Data is scoped to a run (for example, a week).

- Stored artifacts have expiration times.

- Permissions are temporary.

- The user can open a trace page and see retention at a glance.

- Deletion produces confirmation, not vibes.

That combination maps directly to enterprise AI governance conversations happening right now. In late 2025, many organizations are expanding agent pilots into production—and security teams are tightening requirements around data retention, access control, and auditability. Systems that can demonstrate these controls clearly tend to move faster through approvals.

Implementation checklist (use this for your next agent build)

If you’re building agentic AI for smart homes, smart buildings, robotics orchestration, or enterprise automation, this checklist is a solid starting point:

- Define the task window (day/week/month) and cap memory to it.

- Tag everything (plans, tool calls, embeddings, caches) with a `run_id`.

- Automate deletion across stores, and return user-visible confirmation.

- Separate audit from debug logs; keep audit minimal with short retention.

- Adopt expiring permissions (least privilege + short-lived tokens).

- Ship an agent trace UI that a non-technical user can read.

- Add an escalation policy for intrusive data sources (video/audio). Default deny.

- Disable third-party analytics by default and document observability limits.

If you can do these eight things, you’re already ahead of most teams.

The bigger point for AI & robotics leaders

Agentic AI is becoming the control layer for connected devices—at home and across entire industries. That’s the theme running through this “Artificial Intelligence & Robotics: Transforming Industries Worldwide” series: more autonomy, more coordination, more impact.

But autonomy without restraint produces a predictable outcome: a growing behavioral dataset that nobody intended to create. And once that dataset exists, it becomes a security target, a compliance burden, and a trust problem.

If you’re evaluating or building AI-powered systems right now, the question to ask isn’t “Does it have privacy features?” It’s: Does the system’s default operation minimize data, prove deletion, and limit access automatically?

The teams that treat data minimization as a core product capability will earn more deployments—and more leads—because buyers can see the difference immediately.

Forward-looking question: As agents start coordinating not just apps but physical devices (robots, chargers, HVAC, industrial controllers), will your data governance scale with that autonomy—or collapse under its own logs?