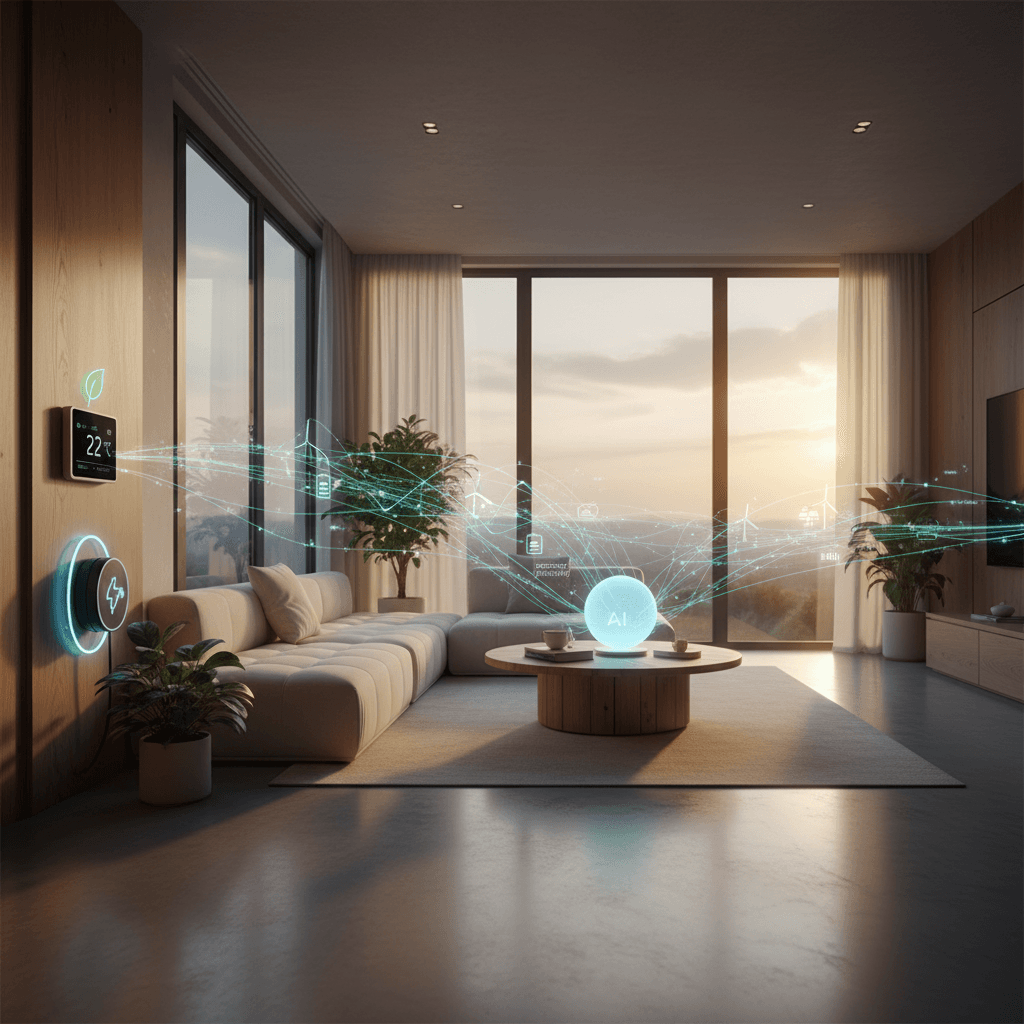

Agentic AI can slash energy use in smart homes—but also create huge data trails. Here’s how to keep the green gains while radically shrinking what you store.

Most households adding smart thermostats or EV chargers see one thing first: the energy savings. A well‑tuned system can cut heating and cooling use by 10–25%, shift EV charging to off‑peak hours, and trim hundreds of dollars a year from the power bill.

But there’s a second “meter” running that doesn’t appear on your utility dashboard: the data meter. Every optimized schedule, every sensor reading, every inferred habit becomes part of a dense digital trail. And as agentic AI spreads across smart homes, grids, and cities, that hidden trail is growing fast.

Here’s the thing about agentic AI in green technology: the same features that make it brilliant for energy efficiency—perception, planning, and autonomous action—also make it very good at quietly collecting and storing personal data. The good news is we can keep the environmental benefits without building permanent dossiers on people’s lives.

This post breaks down how agentic AI systems collect data, why that matters for sustainable tech, and six practical engineering habits that shrink the data footprint while keeping (or even improving) performance.

How Agentic AI Turns Energy Savings into Data Trails

Agentic AI systems don’t just answer questions; they observe, plan, and act. In a smart home, that usually means a loop like this:

- Perceive: Read sensors, prices, weather, and user preferences.

- Plan: Use an LLM-based planner or policy engine to schedule actions.

- Act: Adjust thermostats, blinds, EV charging, and other devices.

- Reflect: Log what worked, tweak the next plan.
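To make the loop concrete, here is a minimal Python sketch of one perceive, plan, act, reflect cycle for a thermostat. Everything in it is hypothetical: the sensor fields, the 0.30-per-kWh price threshold, the setback amounts, and a simple rule set standing in for the LLM-based planner or policy engine. Note that `perceive()` keeps only the fields the planner needs and `reflect()` stores a compact structured note rather than a transcript.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    indoor_temp_c: float
    price_per_kwh: float
    occupied: bool


@dataclass
class Agent:
    """Hypothetical perceive-plan-act-reflect loop; not a real product API."""
    setpoint_c: float = 21.0
    notes: list = field(default_factory=list)  # structured reflections only

    def perceive(self, raw: dict) -> Observation:
        # Keep only the fields the planner needs; everything else is ignored.
        return Observation(raw["indoor_temp_c"], raw["price_per_kwh"], raw["occupied"])

    def plan(self, obs: Observation) -> float:
        # Stand-in for an LLM planner or policy engine: relax the setpoint
        # when nobody is home or when prices are high (assumed thresholds).
        if not obs.occupied:
            return self.setpoint_c - 3.0
        if obs.price_per_kwh > 0.30:
            return self.setpoint_c - 1.0
        return self.setpoint_c

    def act(self, target_c: float) -> dict:
        # A real system would call a device API here; this just returns the command.
        return {"device": "thermostat", "target_c": target_c}

    def reflect(self, obs: Observation, command: dict) -> None:
        # Record a compact outcome, not the raw sensor stream.
        self.notes.append({"high_price": obs.price_per_kwh > 0.30,
                           "target_c": command["target_c"]})

    def step(self, raw: dict) -> dict:
        obs = self.perceive(raw)
        command = self.act(self.plan(obs))
        self.reflect(obs, command)
        return command


agent = Agent()
print(agent.step({"indoor_temp_c": 22.5, "price_per_kwh": 0.35, "occupied": True}))
# {'device': 'thermostat', 'target_c': 20.0}
```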

That loop is fantastic for energy management. A home optimizer can:

- Precool or preheat before peak prices.

- Shade windows before the sun overheats rooms.

- Charge EVs when grid carbon intensity is lowest.

- Coordinate with rooftop solar and batteries.
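Charging an EV "when grid carbon intensity is lowest" boils down to ranking hours in a forecast. A minimal sketch, assuming an hourly forecast dict in gCO2/kWh from some external source; the function name, inputs, and the sample numbers are hypothetical. The same ranking works unchanged if you pass a price forecast instead.

```python
def lowest_carbon_hours(forecast: dict[int, float], hours_needed: int,
                        start_hour: int, end_hour: int) -> list[int]:
    """Choose the lowest-carbon hours for charging inside [start_hour, end_hour).

    forecast maps hour of day (0-23) to forecast grid carbon intensity in
    gCO2/kWh. The window may wrap past midnight (e.g. 22 -> 6); a window
    where start_hour equals end_hour is treated as empty.
    """
    span = (end_hour - start_hour) % 24
    window = [(start_hour + i) % 24 for i in range(span)]
    window = [h for h in window if h in forecast]  # skip hours with no forecast
    if len(window) < hours_needed:
        raise ValueError("not enough forecast hours in the charging window")
    position = {h: i for i, h in enumerate(window)}
    # Rank by carbon intensity, breaking ties in favor of earlier hours.
    best = sorted(window, key=lambda h: (forecast[h], position[h]))[:hours_needed]
    # Return the chosen hours in chronological order within the window.
    return sorted(best, key=lambda h: position[h])


# Made-up overnight forecast, gCO2/kWh.
overnight = {22: 300, 23: 250, 0: 180, 1: 150, 2: 140, 3: 160, 4: 200, 5: 260}
print(lowest_carbon_hours(overnight, hours_needed=3, start_hour=22, end_hour=6))
# [1, 2, 3]
```

Note that the optimizer only needs an hourly forecast and a required number of charging hours; nothing about who drives the car or when they leave has to be stored to make this decision.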

The problem is how that loop is usually engineered.

Where the Data Comes From

By default, a typical agentic AI for smart homes or buildings will:

- Log every instruction it receives and every action it takes.

- Cache electricity prices, carbon intensity data, and weather for fast access.

- Store temporary computations and intermediate states for debugging.

- Keep reflections or “notes to self” to improve future runs.

- Maintain broad, long‑lived permissions to devices and data sources.

On top of that, each connected device—thermostat, inverter, EV charger—often logs its own analytics to the cloud. So you don’t just have one data trail; you have overlapping, duplicated trails across apps, vendor clouds, and monitoring tools.

Many teams get this wrong: they design for convenience and debugging first, and for privacy later, if at all. For a quick prototype, that's understandable. For production systems that will run smart, sustainable infrastructure for years, it's lazy engineering.

This matters because green technology is supposed to make the world better, not just more efficient. If the cost of smart energy management is long-lived, intrusive behavioral profiles, people will eventually push back—and adoption will slow.

Why Privacy Matters for Green Technology Adoption

Privacy isn’t a nice‑to‑have in the sustainability space; it’s a growth constraint. If people don’t trust energy‑saving AI, they’ll turn features off, opt out of data sharing, or avoid the tech entirely.

From what I’ve seen across deployments in buildings and homes, privacy affects green tech in three concrete ways:

- User adoption: Households are much more willing to accept automation if they feel in control of what's stored, who sees it, and how long it lives.

- Regulatory risk: Energy and utilities are heavily regulated already. Add AI plus sensitive occupancy patterns, and you're inviting scrutiny (and potential fines) if your data practices are sloppy.

- System design flexibility: When data minimization is baked in from the start, you're free to integrate new features or partners without renegotiating privacy from scratch.

The reality? It’s simpler than you think to get this right. You don’t need exotic cryptography or new theories of privacy. You need disciplined, boring engineering habits that respect how agentic systems actually operate.

Six Habits to Shrink AI Agents’ Data Footprints

A privacy‑first AI agent for green technology keeps doing the smart energy work—but with a radically smaller, more predictable data trail. Here are six habits that make that possible.

1. Constrain Memory to the Task and Time Window

The agent should remember only what’s needed, only as long as needed.

For a home energy optimizer, that might mean:

- Working in weekly runs: each week is a self‑contained planning cycle.

- Storing minimal, structured reflections tied to that week only.

- Assigning expiration timestamps to every persistent item.

Instead of building a year‑long archive of who was home at 7 p.m., the agent keeps just enough data to improve the next run—then erases it.

Concrete practices:

- Use task-scoped memory objects (e.g., `run_2025w48`) with an explicit TTL (time‑to‑live).

- Prefer aggregated stats (e.g., “weekday evenings usually occupied”) over raw sequences of events.

- Avoid storing natural‑language transcripts when structured fields will do.
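Here is a minimal sketch of a task-scoped memory object, assuming a weekly run keyed by an ID like `run_2025w48`. The `RunMemory` class, the time buckets, and the 0.5 threshold are hypothetical. Occupancy is stored as counters per (day type, time bucket) rather than as a timestamped event log, and anything past its TTL is purged.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RunMemory:
    """Hypothetical task-scoped memory for one weekly run, e.g. "run_2025w48"."""
    run_id: str
    ttl_seconds: float
    created_at: float = field(default_factory=time.time)
    # Aggregated counters: (day type, time bucket) -> (occupied count, observations).
    occupancy: dict = field(default_factory=dict)

    def expired(self, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        return now >= self.created_at + self.ttl_seconds

    def record(self, is_weekday: bool, bucket: str, occupied: bool) -> None:
        # Increment a counter instead of appending a timestamped event.
        key = ("weekday" if is_weekday else "weekend", bucket)
        hits, total = self.occupancy.get(key, (0, 0))
        self.occupancy[key] = (hits + int(occupied), total + 1)

    def summary(self, threshold: float = 0.5) -> dict:
        # Coarse statements such as "weekday evenings usually occupied".
        return {key: hits / total >= threshold
                for key, (hits, total) in self.occupancy.items()}


def purge_expired(store: dict, now: Optional[float] = None) -> list:
    """Delete every run whose TTL has passed and return the deleted run IDs."""
    gone = [run_id for run_id, mem in store.items() if mem.expired(now)]
    for run_id in gone:
        del store[run_id]
    return gone
```

In a deployment you would call `purge_expired` on a schedule, so expiry happens even if nobody remembers to clean up.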

2. Make Deletion Easy, Fast, and Verifiable

Deletion shouldn’t be a support ticket; it should be a button.

Every plan, cache, log, embedding, or reflection should be tagged with a run ID, so one “Delete this run” command:

- Finds all related data across local and cloud storage.

- Deletes it in a single, orchestrated sweep.

- Returns clear confirmation to the user.

You still keep a minimal audit trail for accountability—timestamps, anonymized event types, error codes—under its own expiration clock. But the rich behavioral data is gone.

From an engineering standpoint, this means:

- Designing storage schemas around event and run IDs from day one.

- Treating deletion as a first‑class feature, with tests and monitoring.

- Exposing deletion and retention in the UI in plain language, not legalese.
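The "Delete this run" flow can be sketched as one function that sweeps every backend by run ID, re-checks that nothing survived, writes a minimal audit entry with its own expiry, and returns a confirmation. The `Backend` class stands in for real storage (local DB, cloud bucket, vector index), and the 90-day audit TTL is an assumption; both are hypothetical.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Backend:
    """Stand-in for one storage location: local DB, cloud bucket, vector index."""
    name: str
    records: list = field(default_factory=list)  # every record carries a "run_id"

    def delete_run(self, run_id: str) -> int:
        before = len(self.records)
        self.records = [r for r in self.records if r["run_id"] != run_id]
        return before - len(self.records)

    def count_run(self, run_id: str) -> int:
        return sum(1 for r in self.records if r["run_id"] == run_id)


def delete_run_everywhere(run_id: str, backends: list, audit_log: list,
                          audit_ttl_s: float = 90 * 24 * 3600) -> dict:
    """One orchestrated sweep across all backends, then verify and confirm."""
    deleted = {b.name: b.delete_run(run_id) for b in backends}
    # Verification pass: re-query every backend for anything still tagged.
    leftovers = sum(b.count_run(run_id) for b in backends)
    if leftovers:
        raise RuntimeError(f"{leftovers} records for {run_id} survived deletion")
    now = time.time()
    # Minimal audit entry with its own expiry: an event type and a count,
    # no behavioral content and no run ID.
    audit_log.append({"event": "run_deleted",
                      "records_deleted": sum(deleted.values()),
                      "at": now, "expires_at": now + audit_ttl_s})
    return {"run_id": run_id, "deleted": deleted, "verified": True}
```

Because every record carries a run ID from day one, deletion is a query rather than a forensic search, and the verification pass is cheap enough to run on every request and in tests.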

3. Use Temporary, Task‑Specific Access Permissions

The agent should never have more access than it needs for the current job.

In a smart green home, that means:

- Issuing short‑lived tokens to control specific devices (e.g., thermostat, blinds, charger).

- Scoping tokens by action (e.g., “set temperature 18–24°C between 5–9 p.m.”) instead of “full control forever.”

- Automatically revoking permissions when the task or run ends.

This approach:

- Limits the blast radius of any compromise.

- Reduces how much device state the agent needs to store long‑term.

- Makes it obvious which capabilities are live at any point in time.
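A minimal sketch of short-lived, action-scoped grants. `DeviceGrant` and `GrantRegistry` are hypothetical, not any vendor's API; a time-of-day window such as "5–9 p.m." is approximated here by issuing the grant at 5 p.m. with a four-hour TTL. Each grant covers one device, one action, and a bounded value range; expired grants are dropped on use, and `revoke_all()` runs when the task ends.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class DeviceGrant:
    """Hypothetical capability token: one device, one action, bounded values."""
    device: str          # e.g. "thermostat"
    action: str          # e.g. "set_temperature"
    min_value: float
    max_value: float
    expires_at: float    # absolute Unix time
    token: str = field(default_factory=lambda: secrets.token_urlsafe(16))


class GrantRegistry:
    def __init__(self) -> None:
        self._live: dict = {}

    def issue(self, device: str, action: str, min_value: float,
              max_value: float, ttl_s: float) -> DeviceGrant:
        grant = DeviceGrant(device, action, min_value, max_value, time.time() + ttl_s)
        self._live[grant.token] = grant
        return grant

    def authorize(self, token: str, device: str, action: str, value: float,
                  now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        grant = self._live.get(token)
        if grant is None or now >= grant.expires_at:
            self._live.pop(token, None)  # drop expired grants eagerly
            return False
        return (grant.device == device and grant.action == action
                and grant.min_value <= value <= grant.max_value)

    def revoke_all(self) -> int:
        """Call at the end of a task or run; returns how many grants were live."""
        count = len(self._live)
        self._live.clear()
        return count

    def live(self) -> list:
        # Answers "which capabilities are live right now?"
        return list(self._live.values())


registry = GrantRegistry()
grant = registry.issue("thermostat", "set_temperature", 18.0, 24.0, ttl_s=4 * 3600)
print(registry.authorize(grant.token, "thermostat", "set_temperature", 21.0))  # True
print(registry.authorize(grant.token, "ev_charger", "start", 1.0))             # False
```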

For building‑scale or grid‑interactive systems, the same principle applies to APIs from utilities, building management systems, and DER (distributed energy resources) platforms.

4. Provide a Clear “Agent Trace” for Human Oversight

If users can’t see what the agent did and why, they won’t trust it—especially when it’s managing core infrastructure like heating, cooling, and EV charging.

A good agent trace shows, in readable form:

- What the agent planned to do.

- What it actually executed.

- Which data sources it accessed.

- Where data was stored and for how long.

Users should be able to:

- Export a trace of a given week or scenario.

- Delete all data associated with that trace.

- Understand retention and deletion in one screen.

For energy teams managing fleets of buildings or devices, this trace doubles as an operations console: it helps debug undesirable behaviors (like over‑cooling or missed charging windows) without needing invasive logs.
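One way to structure such a trace, sketched under assumptions: each entry records the plan, the executed action, the data sources touched, the storage location, and its retention in days. The field names and sample entries are hypothetical. `export_json()` covers the export case and `retention_summary()` feeds a one-screen retention view.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TraceEntry:
    planned: str
    executed: str
    data_sources: list
    stored_in: str
    retention_days: int


@dataclass
class AgentTrace:
    """Hypothetical human-readable trace for one run."""
    run_id: str
    entries: list = field(default_factory=list)

    def add(self, **kwargs) -> None:
        self.entries.append(TraceEntry(**kwargs))

    def export_json(self) -> str:
        # asdict() recurses into the nested TraceEntry dataclasses.
        return json.dumps(asdict(self), indent=2)

    def retention_summary(self) -> dict:
        """Each storage location and the longest retention applied there."""
        summary = {}
        for e in self.entries:
            summary[e.stored_in] = max(summary.get(e.stored_in, 0), e.retention_days)
        return summary


trace = AgentTrace("run_2025w48")
trace.add(planned="precool to 22C at 15:00", executed="set 22C at 15:02",
          data_sources=["price_forecast", "indoor_temp"], stored_in="local", retention_days=7)
trace.add(planned="charge EV 01:00-04:00", executed="charged 01:00-03:40",
          data_sources=["carbon_forecast"], stored_in="cloud", retention_days=30)
print(trace.retention_summary())  # {'local': 7, 'cloud': 30}
```

Comparing `planned` with `executed` is what lets an operator spot over-cooling or a missed charging window without keeping raw sensor logs.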

5. Always Choose the Least Intrusive Data Source

A privacy‑first AI agent treats intrusiveness as a cost.

If a home optimizer can infer occupancy from:

- Door sensors,

- Motion detectors,

- Smart meter load patterns,

then it shouldn’t escalate to video feeds or microphone data, even if those are technically available. Higher‑intrusion data should be explicitly prohibited unless both conditions are met:

- There’s a clear, documented need.

- There is no equally effective, less intrusive alternative.

In practice, that means:

- Designing policies and code that rank data sources by sensitivity.

- Baking those rankings into the planner so it picks low‑sensitivity options by default.

- Providing users simple controls to disable higher‑intrusion sources entirely.
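A minimal sketch of encoding the sensitivity ranking into source selection. The ranks, the high-intrusion cutoff, and the per-source accuracy estimates are illustrative assumptions, and "equally effective" is modeled simply as meeting a required accuracy. The function picks the least intrusive source that meets the target, honors user opt-outs, and returns a high-intrusion source only when no lower-ranked one qualifies and a documented need is supplied.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative sensitivity ranking (lower = less intrusive); values are assumptions.
SENSITIVITY = {
    "smart_meter_load": 1,
    "door_sensor": 1,
    "motion_detector": 2,
    "camera": 5,
    "microphone": 5,
}
HIGH_INTRUSION = 4  # at or above this rank, a documented need is required


@dataclass
class SourceOption:
    name: str
    accuracy: float  # estimated occupancy accuracy for this home, 0..1


def choose_source(options: list, required_accuracy: float,
                  user_disabled=frozenset(),
                  documented_need: Optional[str] = None) -> Optional[str]:
    """Return the least intrusive source that meets the accuracy target, or None."""
    usable = [o for o in options
              if o.name not in user_disabled and o.accuracy >= required_accuracy]
    if not usable:
        return None
    # Least intrusive first; ties go to the more accurate source.
    best = min(usable, key=lambda o: (SENSITIVITY[o.name], -o.accuracy))
    # If the best usable option is high-intrusion, no lower-ranked source met
    # the target; allow it only when the need is documented.
    if SENSITIVITY[best.name] >= HIGH_INTRUSION and not documented_need:
        return None
    return best.name
```

Returning `None` rather than silently escalating forces the planner to fall back to a coarser strategy, which is usually good enough for energy optimization.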

For green technology, this is an easy win. In most energy use cases, fine‑grained behavior isn’t necessary. Hourly load curves, coarse occupancy, and basic comfort preferences are enough to make strong optimizations.

6. Practice Mindful Observability, Not Surveillance

Every engineering team wants observability. But too many systems treat it as a license to record everything forever.

Mindful observability means the agent:

- Logs only essential identifiers and metrics (e.g., error types, runtime, high‑level decisions).

- Avoids storing raw sensor streams if derived metrics are enough.

- Caps log frequency and volume.

- Disables third‑party analytics by default, or uses heavily minimized feeds.

You still get:

- The ability to debug weird schedules.

- Performance metrics for model improvements.

- Safety and accountability signals.

But you don’t create a second, shadow copy of every user action just for the observability stack.
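A minimal sketch of mindful logging, assuming Python's standard `logging` module as the sink: an allowlist keeps only essential identifiers and metrics, and a per-minute cap bounds volume (overflow is counted, not stored). The field names, the cap of 60 events per fixed one-minute window, and `MinimalLogger` itself are hypothetical; third-party analytics are simply never wired in here.

```python
import json
import logging
import time
from typing import Optional

# Fields the agent may log; anything else (raw sensor values, names) is dropped.
ALLOWED_FIELDS = {"run_id", "event", "error_type", "runtime_ms", "decision"}


class MinimalLogger:
    """Allowlists fields and caps volume per fixed 60-second window."""

    def __init__(self, max_events_per_minute: int = 60) -> None:
        self.max_events = max_events_per_minute
        self.window_start = time.monotonic()
        self.count = 0
        self.dropped = 0
        self.logger = logging.getLogger("agent")

    def log(self, **fields) -> Optional[dict]:
        now = time.monotonic()
        if now - self.window_start >= 60:
            self.window_start, self.count = now, 0
        if self.count >= self.max_events:
            self.dropped += 1  # count the overflow instead of storing it
            return None
        self.count += 1
        record = {k: v for k, v in fields.items() if k in ALLOWED_FIELDS}
        self.logger.info(json.dumps(record, sort_keys=True))
        return record


ml = MinimalLogger()
print(ml.log(run_id="run_2025w48", event="plan_applied", runtime_ms=42,
             raw_temps=[21.1, 21.3]))
# {'run_id': 'run_2025w48', 'event': 'plan_applied', 'runtime_ms': 42}
```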

What a Privacy‑First Green AI Agent Looks Like

Put these six habits together and the picture changes.

Your smart home or building still:

- Precools before peak prices.

- Schedules EV charging for the cleanest hours.

- Coordinates with solar, batteries, and tariffs.

But now:

- Memory is scoped: data lives in weekly (or task‑based) containers with clear expiry.

- Deletion is real: “clear last week’s run” actually erases all rich data for that period.

- Permissions are narrow and temporary: the agent can’t silently hang on to control.

- Data sources are conservative: simple sensors are preferred over cameras and microphones.

- Observability is lean: enough to run and improve, not enough to profile lifestyles.

This pattern generalizes well beyond homes:

- Travel and mobility agents that coordinate low‑carbon itineraries based on calendars.

- Industrial energy optimizers that tune processes and schedules across factories.

- Smart city systems that adjust lighting, traffic, and storage around renewable supply.

All of these agents follow the same plan‑act‑reflect loop—and all can apply the same privacy‑first habits.

How to Start: Practical Next Steps for Teams

If you’re building or buying agentic AI for sustainability, here’s how to move from theory to practice.

For product and engineering teams

- Redesign data models around runs and TTLs. Treat expiration as a property of every record.

- Implement a visible delete‑run feature. Test it as you would test billing or security.

- Classify data sources by sensitivity. Encode those rankings directly into planners and policies.

- Tighten observability. Replace raw logs with structured events and capped histories.

For energy managers, sustainability leads, and buyers

When evaluating vendors, ask blunt questions:

- How long does your system keep occupancy or schedule data?

- Show me how a user deletes a week of activity—what actually happens underneath?

- Which data sources are required, and which are optional?

- Do you use video, audio, or fine‑grained location for optimization? Why?

If a vendor can’t answer clearly, or if deletion is “on request” instead of self‑service and instant, treat that as a red flag.

Green technology doesn’t just need to be efficient; it needs to be trustworthy. Agentic AI can absolutely power cleaner grids, smarter homes, and more sustainable industries without hoarding personal data.

The systems that win over the next decade will be the ones that bake privacy into their architecture, not their marketing. Build AI agents that respect people’s data today, and you’ll have an easier time scaling the climate tech we all depend on tomorrow.