Vision AI fails in robotics for predictable reasons: data gaps, imbalance, labeling errors, and drift. Learn a practical playbook to ship reliable models.

Why Vision AI Fails in Robotics (and How to Fix It)

A vision model can score 95% in a lab benchmark and still fall apart on a factory floor. I’ve seen teams celebrate a great validation curve, then watch a robot mis-pick parts because the lighting shifted, a supplier changed packaging, or one “rare” defect suddenly became common.

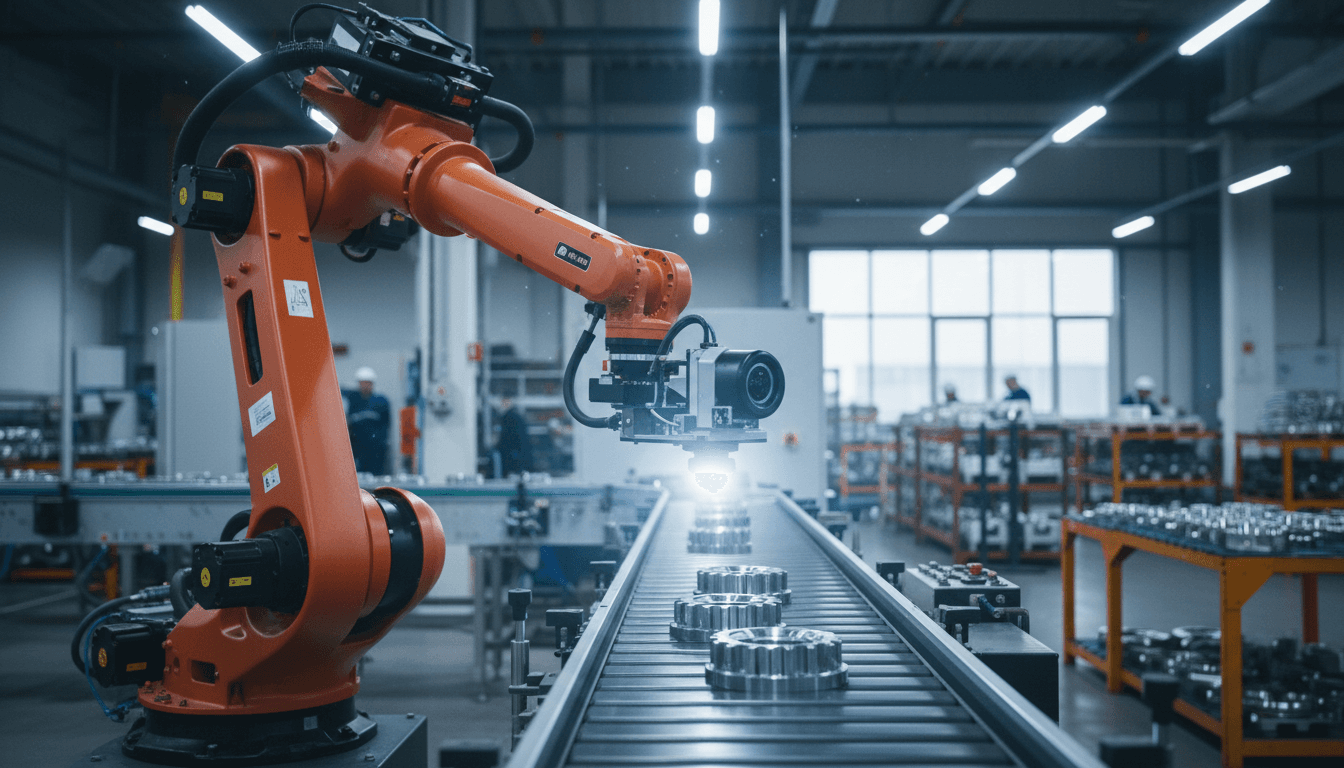

This matters because vision AI is the nervous system of many AI-powered robots. If the robot can’t reliably see, it can’t reliably grasp, sort, inspect, or navigate. And when vision fails in production, it doesn’t fail quietly: it creates downtime, scrap, safety incidents, and bad customer experiences.

A recent industry white paper on why vision AI models fail highlights a blunt truth: most failure modes are data and evaluation problems, not model-architecture problems. If you’re deploying AI in industrial robotics—warehouse automation, manufacturing inspection, retail loss prevention, autonomous vehicles—your biggest wins come from building a more disciplined pipeline around data curation, evaluation, and production monitoring.

Vision AI failures aren’t “edge cases” once you deploy

Answer first: In robotics, “edge cases” quickly become daily cases because environments change and robots encounter long-tail conditions at scale.

In controlled testing, the world is tidy: consistent camera angles, stable lighting, limited clutter. Real deployments aren’t. A single facility might have multiple shifts, different operators, seasonal humidity changes, lens smudges, forklifts casting shadows, reflective wrap, and pallets staged in new patterns. In December, many operations also see peak throughput and temporary staffing—exactly when small model weaknesses amplify into bigger operational losses.

Robots also create their own distribution shift. Once a system goes live, it changes how material moves through the environment (different placement, different flow, different handling), which changes the images you collect next. If you’re not actively monitoring drift, you can end up training on yesterday’s world.

Here’s the stance I take: treat production vision like a living system, not a one-time model release. If your plan is “train → deploy → forget,” the model is already on a countdown.

A quick way to spot “edge case denial” in your org

If your incident reports keep describing failures as “rare” without quantifying frequency, you’re not managing risk—you’re labeling it.

A better practice is to track:

- Failure rate per 1,000 robot cycles (picks, inspections, lane merges)

- Top-10 recurring visual conditions in failure images (glare, occlusion, motion blur)

- Time-to-detection and time-to-mitigation for drift events
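As a rough illustration of the first two items, here is a minimal sketch. The function names, the tag vocabulary, and the cycle counts are all made up for the example; it assumes each failure image has already been tagged with one or more visual conditions during review.

```python
from collections import Counter

def failure_rate_per_1000(failures: int, cycles: int) -> float:
    """Failures normalized per 1,000 robot cycles."""
    if cycles <= 0:
        raise ValueError("cycles must be positive")
    return 1000.0 * failures / cycles

def top_conditions(failure_tags, k=10):
    """Most frequent visual conditions across tagged failure images."""
    counts = Counter(tag for tags in failure_tags for tag in tags)
    return counts.most_common(k)

# Hypothetical week: 4 tagged failures out of 8,000 picks.
failure_tags = [["glare"], ["glare", "occlusion"], ["motion_blur"], ["glare"]]
rate = failure_rate_per_1000(failures=len(failure_tags), cycles=8000)
print(f"{rate:.2f} failures per 1,000 cycles")
print(top_conditions(failure_tags, k=3))
```

Once the number exists, "rare" stops being an adjective and becomes a trend line you can watch week over week.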

The four failure modes that sink production vision systems

Answer first: Vision AI models typically fail due to (1) insufficient or unrepresentative data, (2) class imbalance and missing long-tail coverage, (3) labeling errors and inconsistent ground truth, and (4) bias and leakage that inflate offline metrics.

The white paper frames these patterns with real-world examples (including large-scale deployments in automotive, retail, and semiconductor contexts). You don’t need those exact scenarios to learn the core lesson: your model is only as production-ready as the data and evaluation that shaped it.

1) Insufficient data (or the wrong data)

It’s common to have “a lot of images” but not the right variety of images.

In industrial robotics, data gaps often look like:

- Too many daytime samples, not enough night shift

- Mostly clean parts, not enough oily/dirty/used parts

- Data from a single camera calibration, so a hardware replacement that changes color response is not covered

- Few samples under motion (blur) even though conveyors move fast

Fix: Build a coverage map before you add more data. List the conditions your robot encounters and measure how many labeled samples exist for each bucket.

A practical coverage map for robotics vision might include:

- Lighting: bright, dim, backlit, flicker

- Motion: stopped, slow, fast (blur)

- Surface: reflective, matte, transparent

- Occlusion: none, partial, heavy

- Orientation: rotations, flips, stacked

- Camera health: clean lens vs smudged lens

If you can’t fill this grid with real labeled examples, your benchmark score is misleading.
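One way to turn the grid into something you can query is sketched below. It assumes each labeled sample carries metadata fields for the axes you care about; the axis names, bucket values, and the `min_count` threshold are illustrative, not a standard.

```python
from collections import Counter
from itertools import product

# Two axes from the coverage map above; extend with surface, occlusion, etc.
AXES = {
    "lighting": ["bright", "dim", "backlit", "flicker"],
    "motion": ["stopped", "slow", "fast"],
}

def coverage_gaps(samples, axes, min_count):
    """Return {bucket: count} for every bucket with fewer than min_count samples."""
    keys = list(axes)
    counts = Counter(tuple(s[k] for k in keys) for s in samples)
    gaps = {}
    for bucket in product(*(axes[k] for k in keys)):
        if counts[bucket] < min_count:
            gaps[bucket] = counts[bucket]
    return gaps

# Hypothetical labeled set: plenty of easy images, almost nothing hard.
samples = (
    [{"lighting": "bright", "motion": "stopped"}] * 3
    + [{"lighting": "dim", "motion": "fast"}]
)
for bucket, count in coverage_gaps(samples, AXES, min_count=2).items():
    print(bucket, count)
```

The output is a to-do list for data collection: every printed bucket is a condition the benchmark score says nothing about.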

2) Class imbalance: the defect you care about is the one you barely labeled

Class imbalance is brutal in inspection and safety-critical robotics. The “bad” class is rare—until it isn’t.

Common imbalance traps:

- Defect detection where positives are <1% of images

- Safety violations, where incidents are scarce (which is good) and therefore hard to learn from

- Retail fraud/loss patterns that shift as behavior adapts

Fix: Don’t accept a single overall metric. Use per-class metrics and cost-weighted evaluation.

For example:

- Track recall on critical classes (missed defect cost)

- Track false positive rate (unnecessary stops, operator overrides)

- Use precision-recall (PR) curves, not just accuracy

Also: plan your data collection so you’re not waiting for failures to happen. In robotics, you can often simulate rare conditions (controlled glare tests, deliberate occlusions, seeded defect runs) to build long-tail coverage.
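To see why a single overall metric hides the problem, consider this small sketch. The class names and the two cost figures are assumptions chosen for illustration; plug in your own cost of a missed defect versus an unnecessary stop.

```python
def binary_counts(y_true, y_pred, positive):
    """Count TP, FP, FN, TN for one class treated as the positive class."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == positive and p == positive:
            tp += 1
        elif t != positive and p == positive:
            fp += 1
        elif t == positive and p != positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def report(y_true, y_pred, positive, cost_fn, cost_fp):
    """Accuracy next to the metrics that matter for a rare critical class."""
    tp, fp, fn, tn = binary_counts(y_true, y_pred, positive)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "total_cost": fn * cost_fn + fp * cost_fp,
    }

# Hypothetical run: 2 defects in 100 images, and a model that says "ok" every time.
y_true = ["defect"] * 2 + ["ok"] * 98
y_pred = ["ok"] * 100
print(report(y_true, y_pred, positive="defect", cost_fn=500, cost_fp=5))
```

The do-nothing model scores 98% accuracy with zero recall on the only class that matters, which is exactly the trap the per-class view exposes.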

3) Labeling errors: the quiet killer of “good” models

A model can’t outperform inconsistent ground truth. In robotics, labeling mistakes often come from ambiguity:

- Where exactly is the boundary of a defect?

- Is a partially occluded object “present” or “unknown”?

- Are you labeling what the camera sees or what the robot must do?

Fix: Treat labeling as engineering, not clerical work.

What works in practice:

- A labeling rubric with examples (positive/negative/ignore cases)

- Inter-annotator agreement checks on a weekly sample

- Gold set audits: maintain a small set of “known-correct” labels and recheck them after every tooling change

- Active error review: prioritize labeling review on the images the model finds confusing (low confidence, high disagreement)

My opinion: if you don’t budget time for label QA, you’re choosing hidden technical debt.
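For the agreement check, one common statistic is Cohen’s kappa, which corrects raw agreement for the agreement two annotators would reach by chance. The sketch below is a plain-Python version for two annotators; the label names and the weekly sample are invented.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    classes = count_a.keys() | count_b.keys()
    expected = sum(count_a[c] * count_b[c] for c in classes) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1.0 - expected)

# Hypothetical weekly sample of 10 images labeled independently by two people.
annotator_a = ["defect", "ok", "ok", "ok", "defect", "ok", "ok", "ok", "ok", "ok"]
annotator_b = ["defect", "ok", "ok", "defect", "ok", "ok", "ok", "ok", "ok", "ok"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))
```

Here the two annotators agree on 8 of 10 images, yet kappa is far lower than 0.8 because most of that agreement comes from the dominant “ok” class. On imbalanced inspection data, raw agreement can look healthy while the annotators disagree on most of the defects.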

4) Bias and data leakage: inflated offline performance

Two production disasters show up again and again:

- Bias: the model performs well for one facility/line/operator and poorly for another.

- Leakage: you accidentally let near-duplicate scenes or correlated frames land in both training and validation. Your “test” isn’t really a test.

Robotics is especially prone to leakage because video frames are highly correlated. If you split randomly, you can end up evaluating on almost the same scene you trained on.

Fix: Split data in a way that matches deployment reality:

- By time window (train on earlier weeks, test on later weeks)

- By facility/site

- By production line or camera ID

- By SKU families or suppliers

If your model still holds up under those splits, you’ve earned confidence.
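A minimal sketch of two of these splits follows, along with a check for leaked groups. The record fields (`clip_id`, `camera_id`, `captured`) are hypothetical; use whatever metadata your capture pipeline records.

```python
from datetime import date

def split_by_time(records, cutoff, time_key="captured"):
    """Train on everything before the cutoff, test on the cutoff and later."""
    train = [r for r in records if r[time_key] < cutoff]
    test = [r for r in records if r[time_key] >= cutoff]
    return train, test

def split_by_group(records, test_groups, group_key="camera_id"):
    """Hold out whole groups (sites, lines, cameras) so none appear in both sets."""
    held_out = set(test_groups)
    train = [r for r in records if r[group_key] not in held_out]
    test = [r for r in records if r[group_key] in held_out]
    return train, test

def shared_groups(train, test, key):
    """Groups present on both sides of a split; non-empty means possible leakage."""
    return {r[key] for r in train} & {r[key] for r in test}

# Hypothetical frames; two of them come from the same video clip.
frames = [
    {"clip_id": "c1", "camera_id": "cam_a", "captured": date(2025, 3, 3)},
    {"clip_id": "c1", "camera_id": "cam_a", "captured": date(2025, 3, 3)},
    {"clip_id": "c2", "camera_id": "cam_b", "captured": date(2025, 3, 10)},
    {"clip_id": "c3", "camera_id": "cam_a", "captured": date(2025, 3, 17)},
]
train, test = split_by_time(frames, cutoff=date(2025, 3, 10))
print(len(train), len(test), shared_groups(train, test, "clip_id"))
```

A time split does not by itself guarantee that a clip never straddles the cutoff, so it is worth running the `shared_groups` check on `clip_id` after any split.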

Why robotics makes vision reliability harder than web-scale CV

Answer first: Robotics adds closed-loop consequences, strict latency constraints, and physical-world variability—so small vision errors become expensive system failures.

A product recommender can be wrong and you lose a click. A robot can be wrong and you lose an hour.

Robotics-specific complications:

- Closed-loop coupling: a bad detection changes robot motion, which changes the next frames.

- Latency budgets: you might need an answer in 30–100 ms to avoid missing a pick.

- Calibration drift: vibration and temperature change camera alignment over time.

- Safety requirements: some errors must fail safe (stop), others must degrade gracefully.

That’s why “just train a better model” is rarely the best next move. You need a system view: data, evaluation, monitoring, and fallback behaviors.

The reliability mindset: design for “known unknowns”

A production robot should have a plan for uncertainty.

Concrete patterns:

- Confidence gating: low confidence triggers re-capture, alternate viewpoint, or human review.

- Multi-sensor checks: combine vision with weight sensors, depth, RFID, or torque feedback.

- Failover policies: define what happens when vision can’t decide—stop, slow down, reroute, or request assistance.
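Confidence gating and a failover policy can be as simple as a small decision function. The thresholds, the retry limit, and the action names below are placeholders, not recommendations; the right values depend on your cycle time and the cost of each outcome.

```python
from enum import Enum

class Action(Enum):
    PROCEED = "proceed"
    RECAPTURE = "recapture"
    HUMAN_REVIEW = "human_review"

def gate(confidence, attempts, accept_at=0.90, max_recaptures=2):
    """Decide what the robot does with one detection.

    confidence: model score in [0, 1] for the current frame.
    attempts:   how many re-captures have already been tried for this item.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence >= accept_at:
        return Action.PROCEED
    if attempts < max_recaptures:
        return Action.RECAPTURE  # try again, ideally from another viewpoint
    return Action.HUMAN_REVIEW   # out of retries: fail safe and ask for help

print(gate(0.95, attempts=0))
print(gate(0.60, attempts=0))
print(gate(0.60, attempts=2))
```

Writing the policy down as code forces the team to decide, ahead of time, what “vision can’t decide” means and who or what takes over.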

Evaluation and monitoring that actually protect your deployment

Answer first: To future-proof vision AI in industrial robotics, evaluate with deployment-faithful splits, stress tests, and continuous monitoring for drift and confidence decay.

Teams often overinvest in training and underinvest in measurement. Measurement is what prevents surprise outages.

Build an evaluation suite that mirrors operations

At minimum, include:

- Scenario-based test sets (glare, occlusion, blur, clutter)

- Facility-specific slices (site A vs site B)

- Shift-based slices (day vs night)

- SKU/supplier slices (packaging changes)

- Rare-but-critical sets (safety, defects)

A good rule: if ops can describe the scenario, your evaluation should measure it.

Monitor three signals in production

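If you only monitor accuracy, you’ll notice problems too late, because measuring accuracy in production requires ground-truth labels that arrive well after the fact. Better signals arrive earlier: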

- Input drift: changes in image statistics, embeddings, camera metadata, or scene composition

- Confidence drift: average confidence drops, or the low-confidence tail grows

- Outcome drift: increasing overrides, rework, re-inspection, or robot recovery behaviors

If you already track OEE, downtime, or scrap, connect them to vision events. That’s where the ROI story becomes obvious.

A practical one-liner: If your robot’s camera feed changes faster than your retraining cadence, monitoring is your only early warning system.
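One simple way to put a number on confidence drift is the Population Stability Index (PSI), which compares the histogram of a reference window against the current window. The sketch below applies it to confidence scores; the same idea works for any scalar input statistic such as mean brightness. The bin count and the sample data are illustrative, and the often-quoted rule of thumb that a PSI above roughly 0.25 signals a significant shift should be treated as a starting point, not a guarantee.

```python
import math

def histogram(values, bins, lo=0.0, hi=1.0):
    """Count values into equal-width bins over [lo, hi]; out-of-range values clamp."""
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / (hi - lo) * bins)
        counts[max(0, min(idx, bins - 1))] += 1
    return counts

def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of a scalar signal."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    ref_counts = histogram(reference, bins)
    cur_counts = histogram(current, bins)
    total = 0.0
    for r, c in zip(ref_counts, cur_counts):
        p = max(r / len(reference), eps)  # eps avoids log(0) for empty bins
        q = max(c / len(current), eps)
        total += (q - p) * math.log(q / p)
    return total

# Hypothetical windows: last month's confidences vs. today's.
reference = [0.95] * 80 + [0.55] * 20
current = [0.95] * 40 + [0.55] * 60
print(round(psi(reference, current), 3))
```

In this made-up example the low-confidence tail tripled, and the PSI lands well above the 0.25 rule of thumb, long before anyone has labeled a single production image.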

A pragmatic playbook for more reliable vision AI in robotics

Answer first: The fastest path to dependable vision AI is a data-centric workflow: map coverage, fix labels, harden evaluation, and create a monitoring-to-retraining loop.

Here’s a playbook you can use in the next 30–60 days.

Step 1: Define “done” in operational terms

Replace “95% accuracy” with metrics ops cares about:

- Missed defect rate per 10,000 units

- False stop rate per shift

- Human intervention minutes per day

- Mis-pick rate per 1,000 picks

Step 2: Create a failure review cadence

Weekly is a good starting point:

- Pull the top 50 failure clips/images

- Categorize root causes (lighting, occlusion, label ambiguity, new SKU)

- Decide: collect data, relabel, adjust thresholds, or change workflow

Step 3: Fix the dataset before retraining

Prioritize:

- Removing duplicates and near-duplicates

- Correcting systematic label errors

- Filling long-tail buckets that match your failure categories
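For the first item, a perceptual hash is one lightweight way to flag near-duplicates. The sketch below uses an average hash on tiny grayscale thumbnails represented as nested lists; it assumes the images have already been downsampled (real pipelines typically resize to something like 8×8 first), and the distance threshold is a guess you would tune on your own data.

```python
def average_hash(thumb):
    """One bit per pixel: True where the pixel is brighter than the image mean."""
    pixels = [p for row in thumb for p in row]
    mean = sum(pixels) / len(pixels)
    return tuple(p > mean for p in pixels)

def hamming(a, b):
    """Number of positions where two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))

def drop_near_duplicates(thumbs, max_distance=1):
    """Return indices of thumbnails to keep, skipping near-copies of kept ones."""
    kept_idx, kept_hashes = [], []
    for i, thumb in enumerate(thumbs):
        h = average_hash(thumb)
        if all(hamming(h, k) > max_distance for k in kept_hashes):
            kept_idx.append(i)
            kept_hashes.append(h)
    return kept_idx

# Hypothetical 2x2 thumbnails: b is a noisy copy of a, c is a different scene.
a = [[10, 200], [10, 200]]
b = [[12, 198], [11, 201]]
c = [[200, 10], [200, 10]]
print(drop_near_duplicates([a, b, c]))
```

The pairwise comparison is quadratic in the number of kept images, which is fine for an audit sample but would need indexing or bucketing for a full dataset.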

Step 4: Gate releases with stress tests

Don’t ship without:

- A time-based test set

- A worst-case slice report

- A confidence calibration check (so a 0.9 confidence score is right about 90% of the time)
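A common way to run the calibration check is expected calibration error (ECE): bin predictions by confidence, then compare each bin’s average confidence with its actual accuracy. The sketch below is a minimal version; the bin count and the sample predictions are illustrative.

```python
def expected_calibration_error(confidences, correct, bins=10):
    """Weighted average gap between confidence and accuracy across bins."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need two non-empty lists of equal length")
    n = len(confidences)
    buckets = [[] for _ in range(bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * bins), bins - 1)
        buckets[idx].append((conf, ok))
    ece = 0.0
    for bucket in buckets:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / n) * abs(avg_conf - accuracy)
    return ece

# Hypothetical release candidate: always says 0.9 but is right only 6 times in 10.
confidences = [0.9] * 10
correct = [True] * 6 + [False] * 4
print(round(expected_calibration_error(confidences, correct), 3))
```

A model that is right 9 times out of 10 at 0.9 confidence would score close to zero here; the overconfident one above does not, and any confidence-gating threshold built on it would be misleading.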

Step 5: Close the loop from monitoring to retraining

Set triggers:

- Drift threshold exceeded → start data capture

- Confidence drop sustained for N hours → open incident

- New SKU onboarding → collect and label before go-live
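The second trigger, for instance, can be sketched in a few lines. The baseline, the allowed drop, and the value of N are assumptions for the example; the function expects one mean confidence reading per hour, oldest first.

```python
def sustained_drop(hourly_mean_conf, baseline, max_drop, n_hours):
    """True if each of the last n_hours readings is below baseline - max_drop."""
    if n_hours <= 0:
        raise ValueError("n_hours must be positive")
    recent = hourly_mean_conf[-n_hours:]
    if len(recent) < n_hours:
        return False  # not enough history yet to call it sustained
    threshold = baseline - max_drop
    return all(reading < threshold for reading in recent)

# Hypothetical shift: confidence sags after a packaging change and stays low.
readings = [0.92, 0.91, 0.80, 0.79, 0.78]
if sustained_drop(readings, baseline=0.92, max_drop=0.05, n_hours=3):
    print("open incident")
```

Requiring N consecutive low readings keeps a single bad hour (a smudged lens that gets wiped, a brief lighting glitch) from paging someone, while still catching a shift that sticks.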

This is how vision AI becomes an industrial capability, not a science project.

Where this fits in the bigger AI + robotics story

In our “Artificial Intelligence & Robotics: Transforming Industries Worldwide” series, we keep coming back to a pattern: the winners aren’t the companies with the fanciest demos—they’re the ones that operationalize reliability.

Vision AI sits at the center of modern automation, from warehouse robots to lab automation and smart inspection. If you take the data-centric approach seriously—especially around class imbalance, label quality, leakage-proof evaluation, and production monitoring—you’ll ship systems that stay stable through supplier changes, seasonal peaks, and facility expansion.

If you’re planning a robotics rollout in 2026, here’s the question I’d ask your team right now: When the environment changes (not if), what’s your mechanism for noticing and responding before operations feel pain?