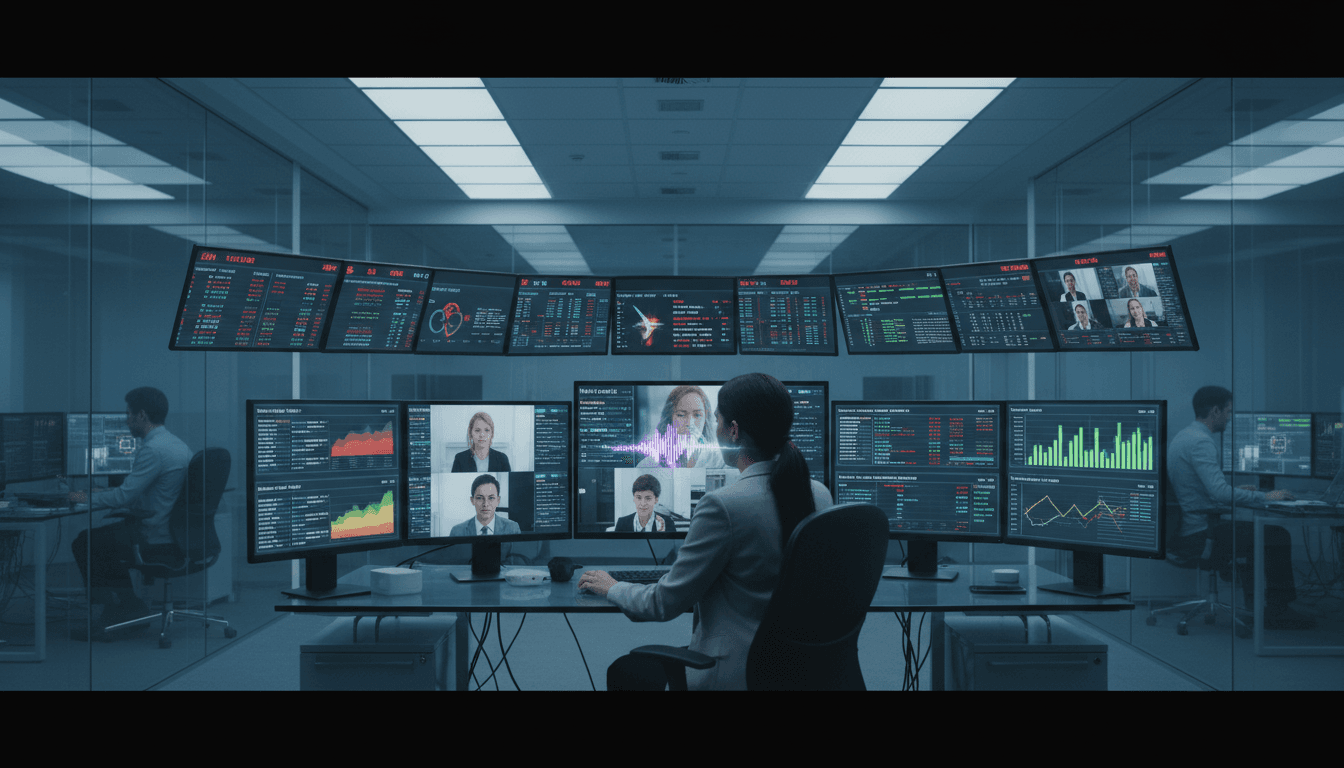

Deepfake fraud and outages are converging risks. Learn how banks and fintechs can build crisis-ready culture, AI fraud detection, and operational resilience.

Deepfakes and Downtime: Building Crisis-Ready Finance

A weird thing happened in financial services over the past two years: fraud got more believable, and outages got more visible.

Believable, because deepfakes and synthetic identities can now mimic a real customer (or exec) convincingly enough to slip past human intuition and outdated controls. Visible, because when payments fail or apps go dark, customers don’t just grumble—they screenshot, post, and churn.

The blocked AFP/Finextra piece (it sits behind bot protection) signals what many banks and fintechs are actively comparing notes on right now—deepfakes, downtime, and the need for a crisis-ready culture. BMO and Affirm are useful reference points because they sit on different ends of the spectrum (large bank vs. high-growth fintech), yet the operational reality converges: AI in finance only helps if your organisation is trained and tooled to operate under stress.

Crisis readiness is a culture problem before it’s a tech problem

A crisis-ready culture means your teams can make fast, correct decisions when the situation is noisy, partial, and adversarial. Tools matter, but culture determines whether tools get used properly.

In practice, “crisis-ready” isn’t a poster in the office. It’s operational muscle built through repetition:

- Clear incident roles (who declares, who communicates, who approves mitigations)

- Pre-written customer comms for common scenarios (fraud spikes, card processing delays, app outages)

- Blameless postmortems that produce measurable follow-ups (not vague “improve monitoring”)

- Cross-functional drills that include product, fraud, comms, legal, and customer support

Here’s my stance: most organisations over-invest in detection and under-invest in decision velocity. When a fraud wave hits or a vendor fails, what you need is a fast path from signal → confidence → action. That path is built with training, escalation rules, and leadership alignment.

What “crisis-ready” looks like in an AI-enabled bank or fintech

AI introduces new operating requirements:

- Model risk is now incident risk. If a fraud model drifts or a feature pipeline breaks, it can be as damaging as an outage.

- Attackers adapt quickly. Deepfake tooling improves on a cycle of weeks, not years.

- Customer expectations are instant. If real-time payments settle in seconds, customers expect real-time problem resolution too.

A crisis-ready culture treats fraud and reliability as two halves of the same promise: trust.

Deepfakes are forcing fraud teams to rethink “identity”

Deepfakes aren’t just celebrity videos. In finance, they show up as:

- Voice cloning to defeat call-centre authentication

- Video deepfakes for remote onboarding or high-risk account recovery

- Synthetic identities that blend real and fake attributes to build “creditworthy” profiles

The uncomfortable truth: knowledge-based authentication (KBA) is increasingly fragile. If a fraudster can scrape personal data and pair it with a convincing voice, “What’s your mother’s maiden name?” becomes theatre.

How AI fraud detection needs to evolve (practical pattern)

The winning approach is layered—behaviour, device, and intent, not just “does the face match the ID.” Specifically:

- Behavioural biometrics: typing cadence, swipe patterns, navigation behaviour. Deepfakes can mimic faces; they struggle to mimic habits.

- Device and network intelligence: device fingerprinting, emulator detection, SIM-swap signals, IP reputation.

- Risk-based step-up: only ask for stronger verification when risk rises; don’t punish every customer with friction.

- Transaction graph analysis: mule accounts, payment loops, sudden counterparty changes.

A rule of thumb that holds up: deepfakes beat single checks. They don’t beat systems that correlate weak signals across time.
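To make "correlate weak signals across time" concrete, here is a minimal sketch, not a production scoring model. It assumes each signal has already been reduced to a suspicion strength between 0 and 1, that signals are roughly independent (which is what a noisy-OR combination assumes), and that older observations should count for less. The `Signal` type, the signal names, and the 15-minute half-life are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    name: str           # hypothetical label, e.g. "new_device" or "typing_cadence_anomaly"
    strength: float     # in [0, 1]: how suspicious this observation is on its own
    age_seconds: float  # how long ago it was observed


def session_risk(signals, half_life_seconds=900.0):
    """Combine weak signals with a noisy-OR, discounting older observations.

    Each signal's strength is halved for every half-life that has passed.
    The result is the probability that at least one signal is "real",
    under the (assumed) independence of the signals.
    """
    p_clean = 1.0
    for s in signals:
        decay = 0.5 ** (s.age_seconds / half_life_seconds)
        p_clean *= 1.0 - s.strength * decay
    return 1.0 - p_clean


if __name__ == "__main__":
    session = [
        Signal("new_device", 0.3, 0.0),
        Signal("typing_cadence_anomaly", 0.3, 0.0),
        Signal("new_payee_added", 0.3, 0.0),
    ]
    # Three individually weak signals add up to a score well above any one of them.
    print(round(session_risk(session), 3))
```

No single 0.3 signal would justify a step-up on its own, but three of them in the same session push the combined score above 0.65. That is the property a single selfie check can't give you.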

How do you detect deepfake fraud in financial services?

Detecting deepfake fraud works best when you combine:

- Liveness detection (active prompts, passive signals, replay detection)

- Session integrity checks (screen recording, remote-control tools, emulator indicators)

- Behavioural signals (how the user moves through flows)

- Back-end consistency (does the account history match the claimed urgency and action)

If you’re relying mainly on selfie matching, you’re playing defence with last decade’s playbook.

Downtime is not just an IT issue—it’s a balance-sheet issue

Outages now create immediate financial and compliance exposure. For banks, they can trigger regulatory scrutiny. For fintechs, they can kill growth.

Downtime hits in at least four ways:

- Direct revenue loss (failed card transactions, missed lending conversions)

- Fraud opportunity (attackers probe instability; controls are often loosened during incidents)

- Customer support load (cost spikes and slower resolution)

- Reputation drag (trust is slow to rebuild)

And here’s the part teams don’t like to admit: crises often cascade across vendors. Payments processors, identity providers, cloud regions, observability stacks—each dependency is a possible single point of failure if you haven’t designed for degradation.

Designing for “degraded but safe” operations

Crisis-ready organisations plan for operating in partial failure. For example:

- If your identity vendor is down, can you gracefully fall back to a lower-risk pathway for existing customers?

- If your risk engine is degraded, can you pause specific high-risk actions (new payees, large transfers) while keeping low-risk actions running?

- If your core app is down, do you have status comms and alternate servicing channels ready (and staffed)?

A strong operational resilience posture uses AI, but doesn’t depend blindly on it. Fail open and you’ll invite fraud. Fail closed and you’ll lose customers. The right answer is usually: fail selectively, based on risk.
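"Fail selectively" can be written down as a small policy function. The sketch below is one way to express it, under assumptions: the action names, the `HIGH_RISK_ACTIONS` set, and the 0.6 step-up threshold are hypothetical placeholders, and a real policy would be tuned to your own risk appetite.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"
    PAUSE = "pause"


# Hypothetical tiering: actions that should never run without a working risk engine.
HIGH_RISK_ACTIONS = {"add_payee", "large_transfer", "account_recovery"}


def decide(action: str,
           risk_engine_healthy: bool,
           risk_score: Optional[float] = None,
           step_up_threshold: float = 0.6) -> Decision:
    """Fail closed for high-risk actions, fail open for low-risk ones."""
    have_score = risk_engine_healthy and risk_score is not None

    if action in HIGH_RISK_ACTIONS:
        if not have_score:
            # Degraded mode: pause rather than guess.
            return Decision.PAUSE
        return Decision.STEP_UP if risk_score >= step_up_threshold else Decision.ALLOW

    # Low-risk actions keep running even when the risk engine is degraded.
    if have_score and risk_score >= step_up_threshold:
        return Decision.STEP_UP
    return Decision.ALLOW


if __name__ == "__main__":
    print(decide("add_payee", risk_engine_healthy=False))     # paused while degraded
    print(decide("view_balance", risk_engine_healthy=False))  # still allowed
```

The design choice is that the degraded-mode behaviour is decided per action ahead of time, so nobody has to argue about it mid-incident.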

The bridge between deepfakes and downtime: incident response that includes fraud

Most companies split these responsibilities:

- Reliability teams handle uptime, latency, and incidents.

- Fraud teams handle bad actors and suspicious activity.

That separation breaks down during modern events. A major outage creates fraud “fog,” and a fraud spike can look like a traffic surge or DDoS.

A crisis-ready culture merges playbooks so teams can act together.

A unified playbook: what to pre-decide

Pre-decide these items before the next incident:

- Risk thresholds for throttling: what volume/velocity triggers step-up or temporary limits?

- Safe-mode feature flags: which features can be disabled without breaking the core promise?

- Customer messaging rules: what can be said in the first 15 minutes (and by whom)?

- Data retention and audit trails: what must be captured for regulatory review and postmortems?

“The first hour of an incident is a decision-making contest.”

If your approval chain requires five meetings, you’ll lose that contest.
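A pre-decided throttling threshold can be as simple as a sliding-window counter. This sketch assumes you key events by account (or device) and treat "more than N events in W seconds" as the trigger. The `VelocityLimiter` class and the example limits are hypothetical, and timestamps are passed in explicitly so the behaviour is easy to rehearse in a drill.

```python
from collections import deque


class VelocityLimiter:
    """Sliding-window counter: at most max_events per key within window_seconds."""

    def __init__(self, max_events: int, window_seconds: float):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = {}  # key -> deque of event timestamps (seconds)

    def allow(self, key: str, now: float) -> bool:
        """Return True if the event is within limits, False if it should
        trigger a step-up or temporary limit."""
        q = self._events.setdefault(key, deque())
        # Drop timestamps that have fallen out of the window.
        while q and now - q[0] >= self.window_seconds:
            q.popleft()
        if len(q) >= self.max_events:
            return False
        q.append(now)
        return True


if __name__ == "__main__":
    # Hypothetical rule: at most 2 new payees per account per 60 seconds.
    limiter = VelocityLimiter(max_events=2, window_seconds=60)
    for t in (0, 10, 20, 61):
        print(t, limiter.allow("account-123", now=t))
```

The point is less the data structure than the fact that `max_events` and `window_seconds` are agreed before the incident, not negotiated during it.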

Where AI helps in crisis operations (without the hype)

AI is genuinely useful in three specific places:

- Alert triage: clustering similar alerts, reducing noise, highlighting novel patterns.

- Fraud ops productivity: summarising cases, suggesting next-best actions, generating clear investigator notes.

- Incident communications: drafting customer updates that are accurate, consistent, and channel-appropriate—then reviewed by humans.

The trap is letting AI become a black box during a crisis. The standard should be: AI-assisted, human-directed.

What Australian banks and fintechs should do in 2026 planning cycles

Because this post sits in our AI in Finance and FinTech series, let’s talk execution. If you’re doing your 2026 roadmap planning right now (December is when a lot of teams lock budgets), these are the moves that pay off.

1) Treat identity as a lifecycle, not a login step

Deepfakes target your edges: onboarding, account recovery, high-value transfers. Shift from point-in-time identity checks to ongoing confidence scoring.

Practical steps:

- Implement risk scoring per session, not per user.

- Monitor payee creation and first-time transfer behaviour aggressively.

- Add step-up options that don’t rely solely on face/voice.

2) Run “deepfake drills” the same way you run outage drills

Tabletop exercises shouldn’t be reserved for cyber. Add scenarios like:

- A customer claims “that wasn’t me” on a video-verified account recovery.

- A voice-cloned caller persuades an agent to bypass controls.

- A fraud ring triggers load patterns that resemble a system incident.

Measure outcomes: time-to-detect, time-to-contain, false positives, customer impact.
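If you want drill results to be comparable from one exercise to the next, it helps to compute them the same way every time. The sketch below is one possible scorecard. The `DrillTimeline` fields are hypothetical, time-to-contain is measured from detection (some teams measure from the start of the event instead), and the share of alerts that turned out not to be fraud stands in for "false positives".

```python
from dataclasses import dataclass


@dataclass
class DrillTimeline:
    started_at: float            # seconds, when the simulated attack began
    detected_at: float           # when the team first flagged it
    contained_at: float          # when the mitigation took effect
    alerts_raised: int           # alerts fired during the drill
    alerts_confirmed_fraud: int  # alerts that pointed at the simulated fraud


def summarise(t: DrillTimeline) -> dict:
    """Turn a drill timeline into a small, comparable scorecard."""
    false_alerts = t.alerts_raised - t.alerts_confirmed_fraud
    return {
        "time_to_detect_s": t.detected_at - t.started_at,
        "time_to_contain_s": t.contained_at - t.detected_at,
        # Share of alerts that were noise; 0.0 if nothing fired at all.
        "false_alert_share": false_alerts / t.alerts_raised if t.alerts_raised else 0.0,
    }


if __name__ == "__main__":
    drill = DrillTimeline(started_at=0, detected_at=120, contained_at=480,
                          alerts_raised=10, alerts_confirmed_fraud=4)
    print(summarise(drill))
```

Customer impact is deliberately left out here because it depends on what you can measure (blocked sessions, support contacts, abandoned flows); add whichever field your telemetry actually supports.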

3) Build resilience into AI systems, not just production systems

If your fraud model relies on a feature store, streaming pipeline, and third-party enrichment, you need resilience there too.

Baseline requirements:

- Fallback scoring when enrichment is missing

- Model monitoring (drift, feature freshness, prediction distribution)

- Kill switch for risky automation
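Two of these requirements, fallback scoring and a kill switch, fit in a few lines. This is a sketch under assumptions: the feature names, the `full_model` and `fallback_model` callables, and the 0.9 block threshold are hypothetical, and "kill switch on" is taken to mean "stop auto-blocking and route to a human instead".

```python
from typing import Callable, Mapping, Optional, Sequence, Tuple

Features = Mapping[str, Optional[float]]
Model = Callable[[Features], float]


def score_with_fallback(features: Features,
                        full_model: Model,
                        fallback_model: Model,
                        required: Sequence[str] = ("device_reputation", "ip_reputation"),
                        ) -> Tuple[float, str]:
    """Use the full model only when every enrichment feature is present.

    Returns the score and which path produced it, so the path can be logged.
    """
    missing = [name for name in required if features.get(name) is None]
    if missing:
        return fallback_model(features), "fallback"
    return full_model(features), "full"


def automated_action(score: float, kill_switch_on: bool,
                     block_threshold: float = 0.9) -> str:
    """With the kill switch on, high scores go to manual review instead of auto-block."""
    if score < block_threshold:
        return "allow"
    return "manual_review" if kill_switch_on else "block"


if __name__ == "__main__":
    full = lambda f: 0.95     # stand-in for the enriched model
    simple = lambda f: 0.50   # stand-in for a conservative rules-based score
    print(score_with_fallback({"device_reputation": 0.1}, full, simple))
    print(automated_action(0.95, kill_switch_on=True))
```

Returning the scoring path alongside the score matters for the postmortem: you want to know how many decisions were made on the fallback while enrichment was down.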

4) Optimise for customer trust, not just loss rates

Reducing fraud losses is critical, but the customer experience during investigation matters just as much.

Good patterns:

- Clear timelines (“we’ll update you in 24 hours”)

- Visible controls (“we blocked this transfer because…”) without oversharing

- Fast restoration paths for legitimate customers

Trust is built when customers feel protected and respected.

A simple readiness checklist (steal this)

Use this as a quick gut-check for your fraud detection and operational resilience posture:

- Can you identify deepfake-assisted events (voice, video, synthetic IDs) in your telemetry?

- Do you have risk-based step-up that’s consistent across channels (app, web, call-centre)?

- Can you operate in degraded mode without opening the fraud floodgates?

- Are fraud and reliability playbooks integrated with shared escalation paths?

- Do you measure decision speed (time to contain, time to communicate), not just detection accuracy?

If you answered “no” to two or more, your next crisis will be more expensive than it needs to be.

Where this is heading: crisis readiness becomes a product feature

Deepfakes will keep improving, and outages won’t disappear—systems are too interconnected, and attackers are too adaptive. The winners won’t be the companies that promise “it’ll never happen.” They’ll be the ones that can say: we stay available, we spot fraud early, and we recover fast when something breaks.

That’s the real connection between deepfakes and downtime: both test whether your organisation can protect trust under pressure.

If you’re building or buying AI in finance—fraud detection, credit decisioning, or personalised financial solutions—treat crisis readiness as part of the deliverable, not a side project. What would change in your product roadmap if you assumed the next deepfake incident and the next major outage are already on the calendar?